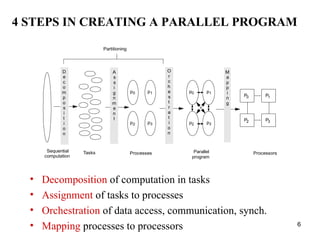

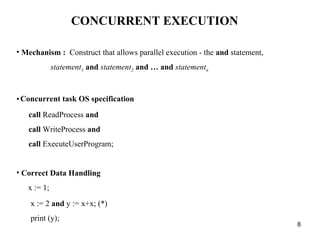

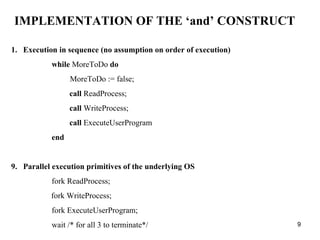

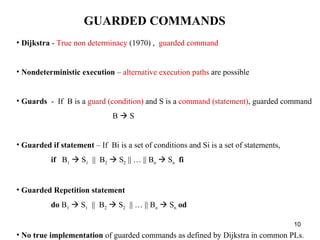

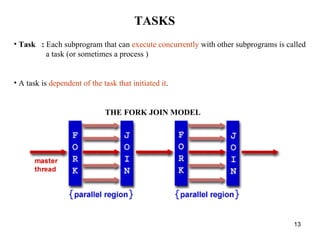

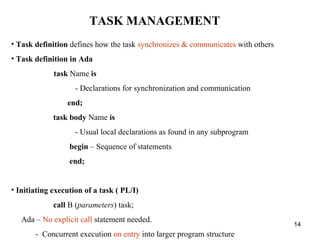

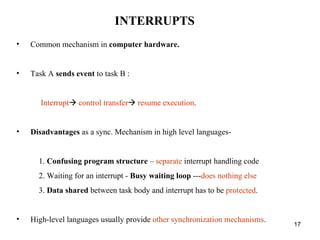

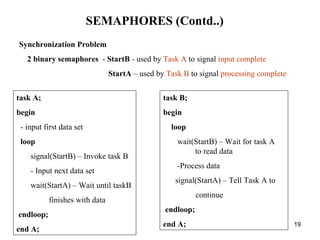

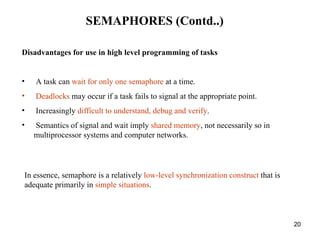

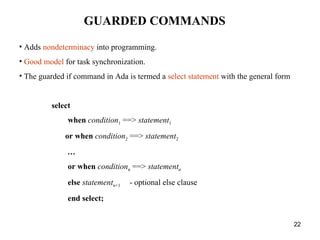

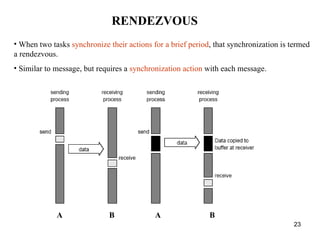

This document covers parallel programming and the synchronization techniques that concurrent tasks rely on. It introduces concurrent execution, guarded commands, and tasks, then describes the mechanisms (semaphores, messages, and rendezvous) that let tasks coordinate access to shared resources and data. Ada serves as the example language for defining and managing concurrent tasks.
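
To make two of the mechanisms named above concrete, here is a minimal sketch of semaphore-based mutual exclusion and of message passing between tasks. It is written in Python's standard library rather than Ada, and it is not taken from the slides. The function names `run_semaphore_demo` and `run_message_demo` and the `DONE` sentinel are hypothetical, chosen only for illustration. The queue here is asynchronous, so it illustrates messages rather than an Ada-style rendezvous, in which the sender waits until the receiver accepts the call.

```python
import queue
import threading


def run_semaphore_demo(workers=4, increments=1000):
    """Several threads update a shared counter under a binary semaphore."""
    mutex = threading.Semaphore(1)  # binary semaphore guarding `state`
    state = {"count": 0}

    def worker():
        for _ in range(increments):
            with mutex:  # acquire on entry, release on exit
                state["count"] += 1

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait for every worker before reading the result
    return state["count"]


def run_message_demo(items):
    """A producer task sends items to a consumer task through a queue."""
    mailbox = queue.Queue()  # thread-safe FIFO used as the message channel
    received = []
    DONE = object()  # hypothetical sentinel marking the end of the stream

    def producer():
        for item in items:
            mailbox.put(item)
        mailbox.put(DONE)

    def consumer():
        while True:
            message = mailbox.get()  # blocks until a message arrives
            if message is DONE:
                break
            received.append(message)

    tasks = [threading.Thread(target=producer),
             threading.Thread(target=consumer)]
    for t in tasks:
        t.start()
    for t in tasks:
        t.join()
    return received


if __name__ == "__main__":
    print(run_semaphore_demo())
    print(run_message_demo(["a", "b", "c"]))
```

In the first function the semaphore serializes the read-modify-write on the shared counter, so no increment is lost. In the second, the tasks share no mutable state directly and coordinate only through the messages they exchange.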