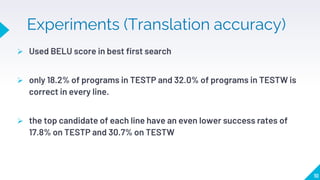

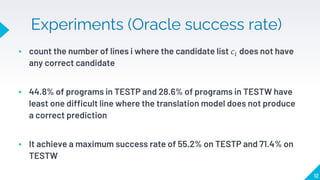

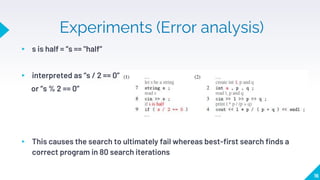

The document presents an approach called SPoC that uses search-based techniques to synthesize code from pseudocode. SPoC treats each line of pseudocode as a discrete portion of the program and uses a translation model and best-first search to map pseudocode to code. Experimental results show the approach achieves a maximum success rate of 55.2% on one test set and 71.4% on another. The approach also uses error localization techniques to improve results by identifying likely error locations when programs fail to compile.