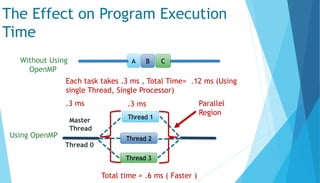

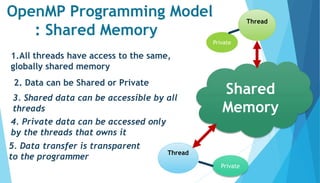

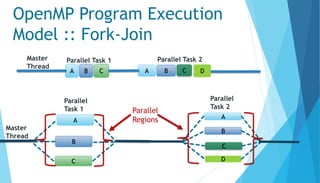

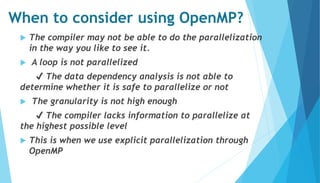

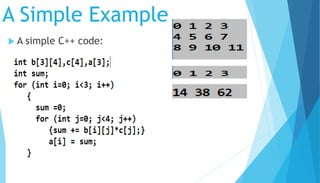

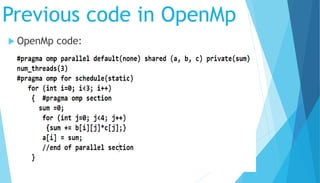

This document gives an overview of OpenMP, an API for multi-threaded, shared-memory parallelism that suits large computations such as matrix multiplication. It explains the OpenMP programming model, the execution model, and the scenarios where explicit parallelization may be preferred. A simple C++ code example demonstrates the efficiency gains that OpenMP can achieve.

![Task distribution in threads](https://image.slidesharecdn.com/parallelprocessing-openmp-181105062408/85/Parallel-processing-open-mp-9-320.jpg)

Slide: Task distribution in threads. Each thread takes one value of `i` and runs the same inner loop, `for (int j=0; j<4; j++) {sum += b[i][j]*c[j];}`, followed by `a[i] = sum;`:

- Thread_id=1: `i=1`
- Thread_id=2: `i=2`
- Thread_id=3: `i=3`
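
The task distribution shown on the slide can be sketched as a small C++ function. This is a minimal illustration, not the deck's own code: the function name `matvec`, the constant `N`, and the use of `std::array` are assumptions made for the example. The slide does not show where `sum` is declared; here it is declared inside the outer loop so that each thread works on its own copy instead of sharing one accumulator.

```cpp
#include <array>

constexpr int N = 4;  // hypothetical size, matching the j<4 bound on the slide

// Computes a = b * c, where each outer iteration produces one element a[i].
std::array<double, N> matvec(const std::array<std::array<double, N>, N>& b,
                             const std::array<double, N>& c) {
    std::array<double, N> a{};
    // The rows are independent, so OpenMP may assign each i to a different thread.
    // If OpenMP is not enabled, the pragma is ignored and the loop runs serially.
    #pragma omp parallel for
    for (int i = 0; i < N; ++i) {
        double sum = 0.0;  // declared inside the loop, so private to each thread
        for (int j = 0; j < N; ++j) {
            sum += b[i][j] * c[j];
        }
        a[i] = sum;
    }
    return a;
}
```

With GCC, OpenMP is enabled by compiling with `-fopenmp`. The result is the same with or without the flag; only the way iterations are spread over threads changes.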