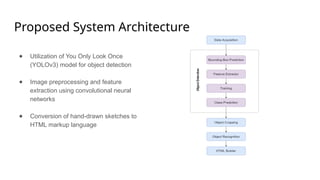

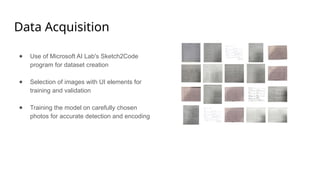

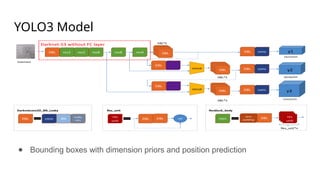

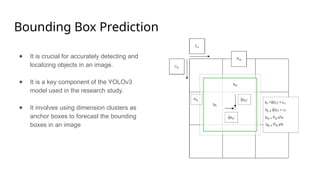

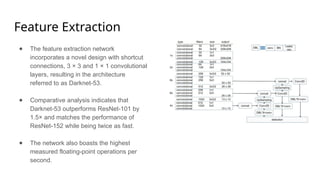

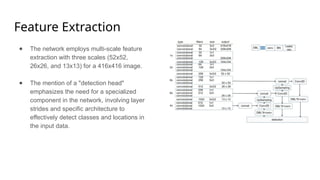

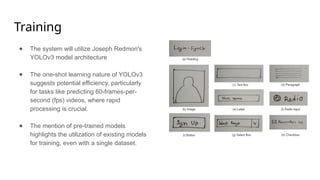

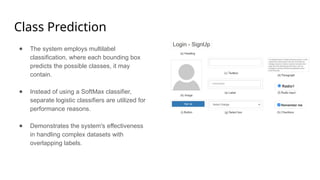

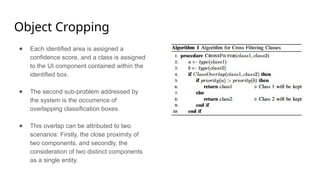

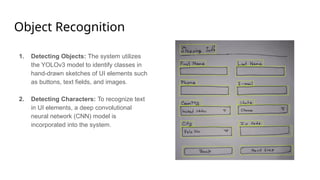

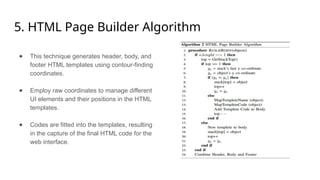

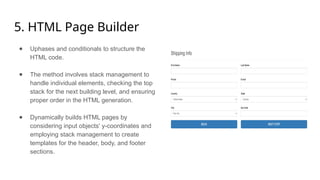

The document outlines a methodology for generating HTML code from hand-drawn sketches with deep learning, using the YOLOv3 model for object detection and feature extraction. It describes the model's architecture and training process; the trained model reaches an accuracy of 87.71%. The authors also note limitations, such as the lack of support for hyperlinks. Overall, the proposed system converts design mock-ups into structured HTML code, which shortens the path from a sketched layout to a working web page.
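YOLOv3 outputs a set of bounding boxes, each tagged with a class label. The summary does not say how those boxes become HTML, so the following is only a minimal sketch of one plausible post-processing step, not the authors' method. It assumes detections have already been produced, and the class names (`heading`, `button`, `textbox`, and so on), the HTML templates, and the row-grouping heuristic (boxes whose vertical centres fall within `tol` pixels share a row, then are ordered left to right) are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str  # hypothetical detector class name, e.g. "button"
    x: float    # left edge of the bounding box (pixels)
    y: float    # top edge of the bounding box (pixels)
    w: float    # box width (pixels)
    h: float    # box height (pixels)


# Hypothetical mapping from detector class labels to HTML snippets.
# No anchor (<a>) template is included, mirroring the stated lack of hyperlink support.
TEMPLATES = {
    "heading": "<h1>Heading</h1>",
    "paragraph": "<p>Lorem ipsum</p>",
    "button": "<button>Button</button>",
    "textbox": '<input type="text">',
    "checkbox": '<input type="checkbox">',
    "image": '<img src="placeholder.png" alt="">',
}


def group_rows(dets, tol=20.0):
    """Group detections into rows: a box joins the current row if its vertical
    centre is within tol pixels of the centre of that row's first box."""
    rows = []  # list of (row_centre_y, [detections])
    for d in sorted(dets, key=lambda d: d.y + d.h / 2):
        cy = d.y + d.h / 2
        if rows and abs(cy - rows[-1][0]) <= tol:
            rows[-1][1].append(d)
        else:
            rows.append((cy, [d]))
    # Within each row, order elements left to right.
    return [sorted(r, key=lambda d: d.x) for _, r in rows]


def to_html(dets, tol=20.0):
    """Emit a simple HTML page with one <div class="row"> per detected row."""
    lines = ["<!DOCTYPE html>", "<html>", "<body>"]
    for row in group_rows(dets, tol):
        lines.append('  <div class="row">')
        for d in row:
            # Unknown labels become HTML comments instead of raising an error.
            lines.append("    " + TEMPLATES.get(d.label, f"<!-- unknown: {d.label} -->"))
        lines.append("  </div>")
    lines += ["</body>", "</html>"]
    return "\n".join(lines)


if __name__ == "__main__":
    detections = [
        Detection("button", 200, 105, 80, 30),
        Detection("textbox", 10, 100, 150, 30),
        Detection("heading", 10, 10, 300, 40),
    ]
    print(to_html(detections))
```

With these three example boxes, the heading lands in its own row, and the text box and button share a second row with the text box first, because its left edge is smaller. A real system would also need to handle nested containers and text content, which this sketch ignores.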