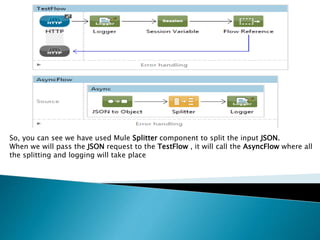

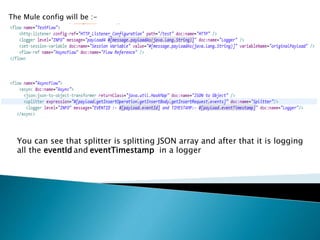

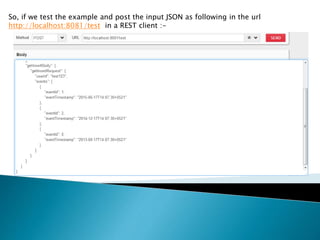

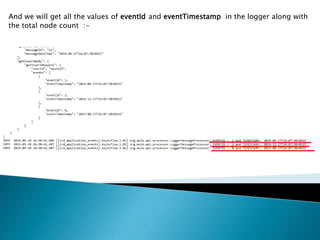

In Mule ESB, the Splitter flow control component breaks a large message into smaller parts. A message that contains repeating elements, such as a list, is split into individual fragments that are then processed separately. This example uses a Splitter to break a JSON payload containing an array of "events" into one fragment per event, and logs each event's ID and timestamp. Splitters are useful for processing large repeating structures in XML or JSON payloads.

Consider the following JSON data as input:

    {
      "getInsertOperation": {
        "getInsertContext": {
          "messageId": "21",
          "messageDateTime": "2014-08-17T14:07:30+0521"
        },
        "getInsertBody": {
          "getInsertRequest": {
            "userId": "test123",
            "events": [
              {
                "eventId": 1,
                "eventTimestamp": "2015-06-17T14:07:30+0521"
              },
              {
                "eventId": 2,
                "eventTimestamp": "2014-12-17T14:07:30+0521"
              },
              {
                "eventId": 0,
                "eventTimestamp": "2013-08-17T14:07:30+0521"
              }
            ]
          }
        }
      }
    }

![Slide 4: sample JSON input](https://image.slidesharecdn.com/splittingwithmulepart2-150510111715-lva1-app6891/85/Splitting-with-mule-part2-4-320.jpg)
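To make the splitting step concrete, here is a minimal sketch of the same idea in plain Python. It is not Mule configuration and does not use any Mule API. It only illustrates what a splitter conceptually does with this payload: navigate to the nested `events` array, emit each element as its own fragment, and log the ID and timestamp of each one. The names `SAMPLE`, `split_events`, and `format_event` are hypothetical and chosen for illustration.

```python
import json

# Sample input, copied from the slide's JSON payload.
SAMPLE = """
{
  "getInsertOperation": {
    "getInsertContext": {
      "messageId": "21",
      "messageDateTime": "2014-08-17T14:07:30+0521"
    },
    "getInsertBody": {
      "getInsertRequest": {
        "userId": "test123",
        "events": [
          {"eventId": 1, "eventTimestamp": "2015-06-17T14:07:30+0521"},
          {"eventId": 2, "eventTimestamp": "2014-12-17T14:07:30+0521"},
          {"eventId": 0, "eventTimestamp": "2013-08-17T14:07:30+0521"}
        ]
      }
    }
  }
}
"""


def split_events(raw_json):
    """Yield each element of the nested "events" array as a separate fragment.

    Hypothetical helper: stands in for the splitter step, not for Mule itself.
    """
    message = json.loads(raw_json)
    request = message["getInsertOperation"]["getInsertBody"]["getInsertRequest"]
    for event in request["events"]:
        yield event


def format_event(event):
    """Build the log line for one fragment (hypothetical format)."""
    return f"eventId={event['eventId']} eventTimestamp={event['eventTimestamp']}"


if __name__ == "__main__":
    # Each fragment is handled on its own, one log line per event.
    for fragment in split_events(SAMPLE):
        print(format_event(fragment))
```

Because `split_events` is a generator, each event is handed to the caller one at a time rather than collected first, which loosely mirrors how each split fragment is processed separately.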