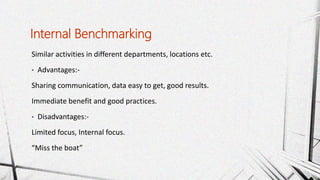

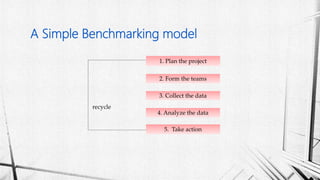

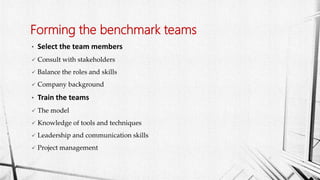

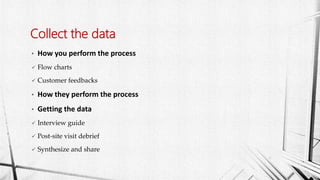

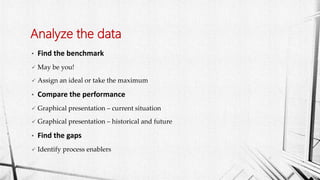

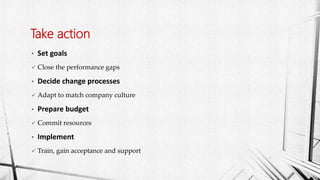

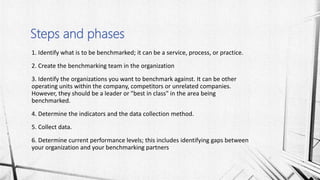

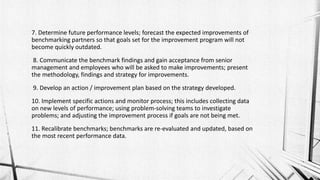

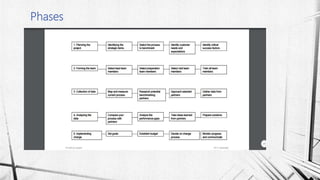

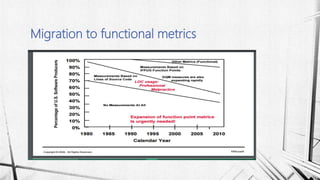

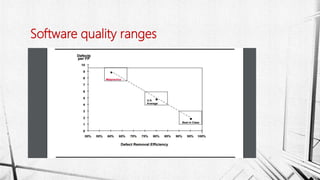

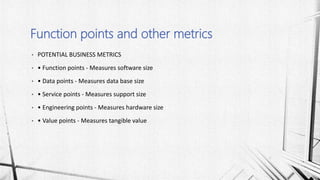

The document discusses benchmarking and function points as metrics for software projects. It defines benchmarking as comparing an organization's business processes and performance metrics against industry best practices. It outlines the benchmarking process, which includes identifying what to benchmark, forming a team, collecting data from other organizations, analyzing gaps, and implementing an action plan. The document also presents function points as a standardized software metric that measures functionality rather than lines of code, and it notes the strengths and weaknesses of using function points for economic and quality analyses of software projects.
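
To make the idea of measuring functionality rather than lines of code concrete, here is a minimal sketch of a function point count. It assumes the commonly published IFPUG-style complexity weights and the 14-characteristic value adjustment factor; neither is spelled out in the summary above. The component counts and ratings in the usage example are invented for illustration only.

```python
# Assumption: IFPUG-style weights per component type and complexity level.
# EI = external input, EO = external output, EQ = external inquiry,
# ILF = internal logical file, EIF = external interface file.
WEIGHTS = {
    "EI":  {"low": 3, "average": 4,  "high": 6},
    "EO":  {"low": 4, "average": 5,  "high": 7},
    "EQ":  {"low": 3, "average": 4,  "high": 6},
    "ILF": {"low": 7, "average": 10, "high": 15},
    "EIF": {"low": 5, "average": 7,  "high": 10},
}


def unadjusted_function_points(counts):
    """Sum weight * count over {(component, complexity): count} entries."""
    return sum(WEIGHTS[component][complexity] * n
               for (component, complexity), n in counts.items())


def value_adjustment_factor(gsc_ratings):
    """Compute 0.65 + 0.01 * sum of 14 general system characteristics (0-5 each)."""
    if len(gsc_ratings) != 14:
        raise ValueError("expected exactly 14 ratings")
    if any(not 0 <= r <= 5 for r in gsc_ratings):
        raise ValueError("each rating must be between 0 and 5")
    return 0.65 + 0.01 * sum(gsc_ratings)


def adjusted_function_points(counts, gsc_ratings):
    """Scale the unadjusted count by the value adjustment factor."""
    return unadjusted_function_points(counts) * value_adjustment_factor(gsc_ratings)


# Hypothetical project: these counts and ratings are made up for the example.
example_counts = {
    ("EI", "average"): 10,
    ("EO", "average"): 5,
    ("EQ", "low"): 4,
    ("ILF", "average"): 3,
    ("EIF", "low"): 2,
}
example_ratings = [3] * 14

ufp = unadjusted_function_points(example_counts)
afp = adjusted_function_points(example_counts, example_ratings)
print(f"Unadjusted FP: {ufp}, adjusted FP: {afp:.2f}")
```

Because the count depends only on inputs, outputs, inquiries, and files, two implementations of the same features in different programming languages would receive the same size, which is what makes the metric usable for cross-project benchmarking.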