Slide smallfiles


Swift Small files proposal (Atlanta PTG)


Slide smallfiles

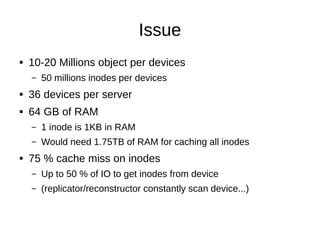

- 1. Issue
    - 10-20 million objects per device
        - 50 million inodes per device
    - 36 devices per server
    - 64 GB of RAM
        - 1 inode is 1KB in RAM
        - Would need 1.75TB of RAM to cache all inodes
    - 75% cache miss on inodes
        - Up to 50% of IO is spent getting inodes from the device
        - (replicator/reconstructor constantly scan the devices...)
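The RAM figure above can be rechecked with a few lines of arithmetic. This is only a rough sketch: it reads "1KB" as 1024 bytes and "64 GB" as 64 GiB, both of which are assumptions, so the result lands near the slide's 1.75TB rather than exactly on it.

```python
# Back-of-the-envelope check of the inode cache figures from the slide.
INODES_PER_DEVICE = 50_000_000    # from the slide
DEVICES_PER_SERVER = 36           # from the slide
INODE_RAM_BYTES = 1024            # "1 inode is 1KB in RAM", read as 1024 bytes (assumption)
SERVER_RAM_BYTES = 64 * 1024**3   # "64 GB of RAM", read as GiB (assumption)

# RAM needed to keep every inode of every device cached.
inode_cache_bytes = INODES_PER_DEVICE * DEVICES_PER_SERVER * INODE_RAM_BYTES
inode_cache_tib = inode_cache_bytes / 1024**4

# Best case: all of the server's RAM is used for inode caching.
max_cacheable_fraction = SERVER_RAM_BYTES / inode_cache_bytes

print(f"RAM to cache every inode: {inode_cache_tib:.2f} TiB")
print(f"Share of inodes that fit in RAM at best: {max_cacheable_fraction:.1%}")
```

Even if the whole 64 GB were devoted to inodes, only a few percent of them would fit, which is consistent with the high cache miss rate reported on the slide.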

- 2. Solution
    - Get rid of inodes
    - Haystack-like solution
        - Objects in volumes (a.k.a. big files, 5GB or 10GB)
        - K/V store to map object to (volume id, position)
            - K/V is a gRPC service
            - Backed by LevelDB (for now...)
    - Need to avoid compaction issue
        - fallocate(PUNCH_HOLE)
        - Smart selection of volumes
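The read path implied by this design can be sketched as follows. Everything here is illustrative: the length-prefix framing, the helper names `append_blob` and `read_blob`, and the plain dict standing in for the gRPC/LevelDB K/V service are assumptions, not the proposal's actual format or API.

```python
import os
import struct
import tempfile

# Hypothetical framing for this sketch only: a 4-byte big-endian length
# prefix followed by the payload. The real object header is different.
LENGTH_PREFIX = struct.Struct(">I")

def append_blob(fd: int, payload: bytes) -> int:
    """Append one length-prefixed blob and return the offset where it starts."""
    offset = os.lseek(fd, 0, os.SEEK_END)
    os.write(fd, LENGTH_PREFIX.pack(len(payload)) + payload)
    return offset

def read_blob(fd: int, offset: int) -> bytes:
    """Read the blob starting at `offset`; no per-object inode is involved."""
    (size,) = LENGTH_PREFIX.unpack(os.pread(fd, LENGTH_PREFIX.size, offset))
    return os.pread(fd, size, offset + LENGTH_PREFIX.size)

# One "volume" (a big file) and a dict standing in for the K/V store.
volume_id = 1
volume_file = tempfile.TemporaryFile()
volumes = {volume_id: volume_file.fileno()}
index = {}  # object name -> (volume id, position)

for name, payload in [("obj-a", b"hello"), ("obj-b", b"small file")]:
    index[name] = (volume_id, append_blob(volumes[volume_id], payload))

# GET: one in-memory lookup, then one positioned read in the volume.
vol, pos = index["obj-b"]
data = read_blob(volumes[vol], pos)
```

The point of the sketch is that a GET costs one index lookup plus one positioned read, instead of a directory walk and an inode fetch per object.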

- 3. Benefits
    - 42 bytes per object in K/V
        - Compared to 1KB for an XFS inode
        - Fits in memory (20GB vs 1.75TB)
        - Should easily go down to 30 bytes per object
    - Listdir happens in K/V (so in memory)
    - Space efficiency vs block-aligned (!)
    - Flat namespace for objects
        - No part/sfx/ohash
        - Increasing part power is just a ring thing
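A rough estimate of the K/V footprint per server, using the object counts from the first slide. The helper `kv_index_gib` is hypothetical, and the calculation counts only the raw bytes per entry, ignoring any LevelDB overhead (an assumption).

```python
DEVICES_PER_SERVER = 36      # from the "Issue" slide
KV_BYTES_PER_OBJECT = 42     # current figure from the slide
KV_BYTES_TARGET = 30         # "should easily go down to 30 bytes per object"

def kv_index_gib(objects_per_device: int, bytes_per_object: int) -> float:
    """Raw size in GiB of the K/V entries for one server (no store overhead)."""
    total_bytes = objects_per_device * DEVICES_PER_SERVER * bytes_per_object
    return total_bytes / 1024**3

# The slide gives a range of 10-20 million objects per device.
for objects in (10_000_000, 20_000_000):
    current = kv_index_gib(objects, KV_BYTES_PER_OBJECT)
    target = kv_index_gib(objects, KV_BYTES_TARGET)
    print(f"{objects:>11,} objects/device: {current:.1f} GiB now, {target:.1f} GiB at 30 bytes")
```

With these inputs the index stays in the tens of gigabytes, the same order of magnitude as the 20GB quoted on the slide and well within a 64 GB server.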

- 4. Adding an object
    1. Select a volume
    2. Append object data
        1. Object header (magic string, ohash, size, …)
        2. Object metadata
        3. Object data
    3. fdatasync() volume
    4. Insert new entry in K/V (no transaction)
        - `<o><policy><ohash><filename>` => `<volume id><offset>`

    => If a crash occurs, the volume acts as a journal to replay
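The write path and the crash replay can be sketched together. The header encoding (`MAGIC`, the `struct` layout), the helper names, and the tuple used as a key are all hypothetical; the slide names the header fields but not their format, and a dict stands in for the K/V service.

```python
import os
import struct
import tempfile

# Hypothetical header: magic, 32-character ohash, metadata length, data length.
MAGIC = b"SWVL"
HEADER = struct.Struct(">4s32sII")
fdatasync = getattr(os, "fdatasync", os.fsync)  # fdatasync is missing on some platforms

def put_object(fd, volume_id, kv, key, ohash, metadata, data):
    """Append header + metadata + data, sync the volume, then index the object."""
    offset = os.lseek(fd, 0, os.SEEK_END)
    header = HEADER.pack(MAGIC, ohash.encode("ascii"), len(metadata), len(data))
    os.write(fd, header + metadata + data)
    fdatasync(fd)                  # the data is durable before the index points at it
    kv[key] = (volume_id, offset)  # plain insert, no transaction
    return offset

def replay_volume(fd, volume_id):
    """Rebuild ohash -> (volume id, offset) by scanning the volume like a journal."""
    recovered = {}
    offset = 0
    end = os.lseek(fd, 0, os.SEEK_END)
    while offset + HEADER.size <= end:
        raw = os.pread(fd, HEADER.size, offset)
        magic, ohash, meta_len, data_len = HEADER.unpack(raw)
        if magic != MAGIC:
            break                  # torn or foreign record: stop replaying here
        recovered[ohash.decode("ascii")] = (volume_id, offset)
        offset += HEADER.size + meta_len + data_len
    return recovered

volume = tempfile.TemporaryFile()
fd, kv = volume.fileno(), {}
for name in ("a", "b"):
    ohash = name * 32              # stand-in for a 32-character object hash
    key = ("o", 0, ohash, "1487000000.00000.data")  # <o><policy><ohash><filename>
    put_object(fd, 1, kv, key, ohash, b"{}", b"payload-" + name.encode())

# Simulate losing the K/V entries: the volume alone is enough to find the objects.
recovered = replay_volume(fd, 1)
```

Because the volume is synced before the K/V insert, a crash between the two steps leaves an object that is on disk but not indexed, which a scan like `replay_volume` can pick up again.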

- 5. Removing an object
    1. Select a volume
    2. Insert a tombstone
    3. fdatasync() volume
    4. Insert tombstone in K/V
    5. Run cleanup_ondisk_files()
        1. Punch_hole the object
        2. Remove the old entry from K/V
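A minimal sketch of the removal steps, assuming Linux. `punch_hole` calls `fallocate(2)` through `ctypes` and assumes a 64-bit `off_t`; it returns `False` where the call or the filesystem does not support hole punching. The `TOMBSTONE` marker, the key tuples, and the choice to write the tombstone into the same volume as the object are simplifications for illustration, not the proposal's format.

```python
import ctypes
import ctypes.util
import os
import tempfile

FALLOC_FL_KEEP_SIZE = 0x01    # values from <linux/falloc.h>
FALLOC_FL_PUNCH_HOLE = 0x02
TOMBSTONE = b"TOMBSTONE"      # hypothetical marker record
fdatasync = getattr(os, "fdatasync", os.fsync)

def punch_hole(fd: int, offset: int, length: int) -> bool:
    """Deallocate a byte range with fallocate(2); False if unsupported."""
    try:
        libc = ctypes.CDLL(ctypes.util.find_library("c"), use_errno=True)
        fallocate = libc.fallocate
    except (OSError, AttributeError):
        return False
    # Assumes off_t is 64 bits wide, as on 64-bit Linux.
    fallocate.argtypes = [ctypes.c_int, ctypes.c_int, ctypes.c_int64, ctypes.c_int64]
    mode = FALLOC_FL_PUNCH_HOLE | FALLOC_FL_KEEP_SIZE
    return fallocate(fd, mode, offset, length) == 0

def delete_object(fd, volume_id, kv, key, old_length, tombstone_key):
    """Follow the slide's order: tombstone on disk, sync, tombstone in K/V, cleanup."""
    tomb_offset = os.lseek(fd, 0, os.SEEK_END)
    os.write(fd, TOMBSTONE)                        # insert a tombstone in the volume
    fdatasync(fd)                                  # make it durable
    kv[tombstone_key] = (volume_id, tomb_offset)   # insert the tombstone in K/V
    _, old_offset = kv[key]                        # cleanup of the superseded object
    punched = punch_hole(fd, old_offset, old_length)
    del kv[key]                                    # remove the old entry from K/V
    return punched

volume = tempfile.TemporaryFile()
fd = volume.fileno()
os.write(fd, b"x" * 8192)                          # pretend this is one stored object
kv = {("ohash", "t1.data"): (1, 0)}
punched = delete_object(fd, 1, kv, ("ohash", "t1.data"), 8192, ("ohash", "t2.ts"))
```

With `FALLOC_FL_KEEP_SIZE` the file length is unchanged, so the offsets of the other objects in the volume stay valid while the blocks of the deleted object are returned to the filesystem.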

- 6. Volume selection
    - Avoid holes in volumes to reduce compaction
        - Try to group objects by partition
            - => rebalance is compaction
        - Put short-lived objects in dedicated volumes
            - tombstone
            - x-delete-at soon
        - Dedicated volumes for handoff?
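One way to express these grouping rules is a function that maps each write to a volume class, so that objects in the same class share volumes. This is a sketch under assumptions: the name `volume_class`, the one-day threshold for "x-delete-at soon", and keeping the partition in every class are all hypothetical choices, not part of the proposal.

```python
import time
from typing import Optional, Tuple

SHORT_LIFE_SECONDS = 24 * 3600   # hypothetical threshold for "x-delete-at soon"

def volume_class(partition: int,
                 is_tombstone: bool = False,
                 delete_at: Optional[float] = None,
                 now: Optional[float] = None) -> Tuple[str, int]:
    """Return a grouping key; objects with the same key go to the same volumes."""
    now = time.time() if now is None else now
    if is_tombstone:
        # Tombstones are short-lived, so they get dedicated volumes.
        return ("tombstone", partition)
    if delete_at is not None and delete_at - now <= SHORT_LIFE_SECONDS:
        # Objects that expire soon would otherwise leave holes behind.
        return ("short-life", partition)
    # Everything else is grouped by partition, so moving a partition away
    # during a rebalance frees whole volumes.
    return ("regular", partition)

print(volume_class(12, now=0.0))
print(volume_class(12, is_tombstone=True, now=0.0))
print(volume_class(12, delete_at=3600.0, now=0.0))
```

A writer would then keep one open volume per class and append to it, which keeps data with a similar lifetime together and limits the number of holes to punch later.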

- 7. Benchmarks
    - Atom C2750 2.40GHz
    - 16GB RAM
    - HGST HUS726040ALA610 (4TB)
    - Directly connecting to object servers

- 8. Benchmarks
    - Single-threaded PUT (100-byte objects)
        - From 0 to 4 million objects
            - XFS: 19.8/s
            - Volumes: 26.2/s
        - From 4 million to 8 million objects
            - XFS: 17/s
            - Volumes: 39.2/s (because no more volumes are being created?)
    - What we see (need numbers!)
        - XFS: memory is full; Volumes: memory is free
        - Disk is busier with XFS

- 9. Benchmarks
    - Single-threaded random GET
        - XFS: 39/s
        - Volumes: 93/s

- 10. Benchmarks
    - Concurrent PUT, 20 threads for 10 minutes

    |         | avg   | 50%  | 95%   | 99%   | max   |
    |---------|-------|------|-------|-------|-------|
    | XFS     | 641ms | 67ms | 3.5s  | 4.7s  | 5.9s  |
    | Volumes | 82ms  | 50ms | 261ms | 615ms | 1.24s |

- 11. Status
    - Done
        - HEAD/GET/PUT/DELETE/POST (replica)
    - Todo
        - REPLICATE/SSYNC
        - Erasure Code
        - XFS read compatibility
        - Smarter volume selection
        - Func tests on object servers (are there any?)
        - Doc