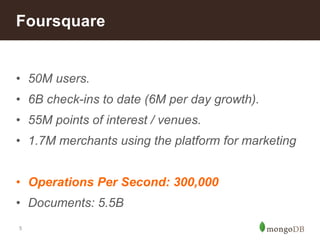

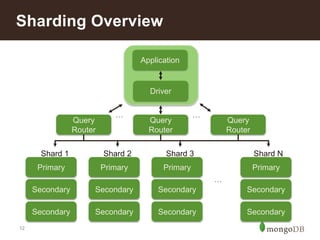

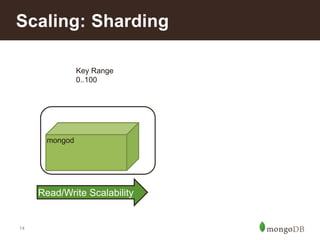

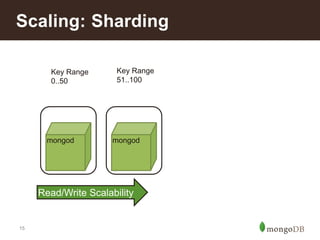

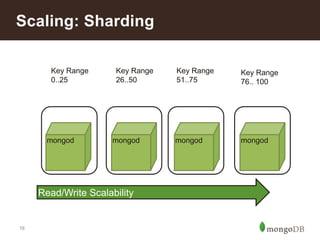

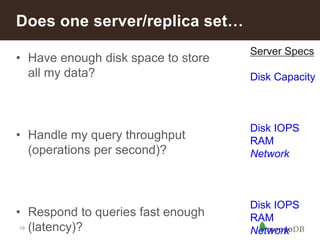

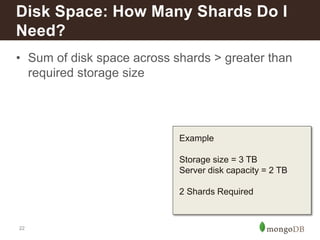

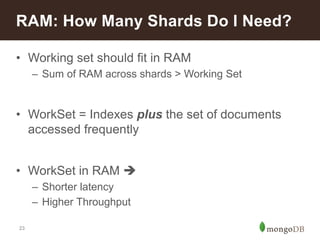

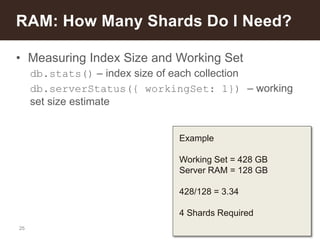

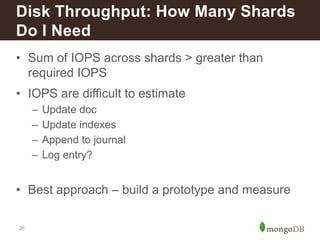

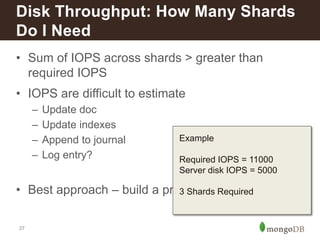

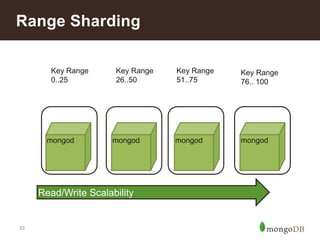

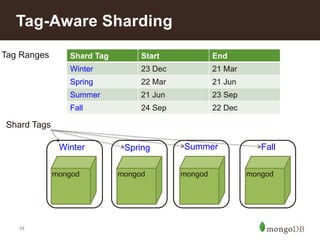

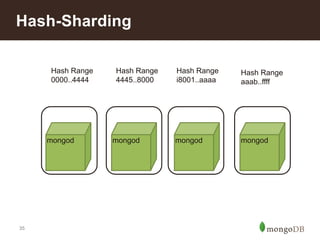

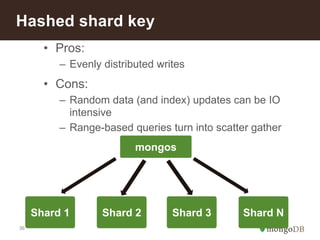

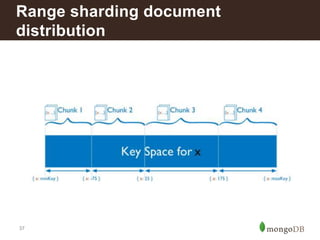

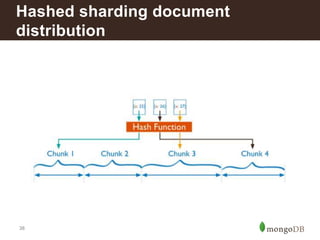

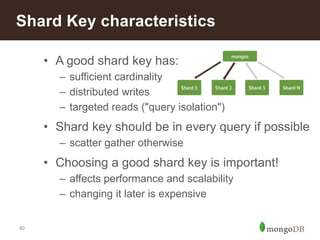

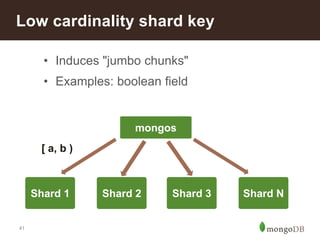

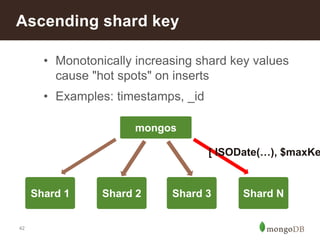

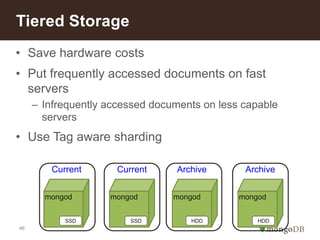

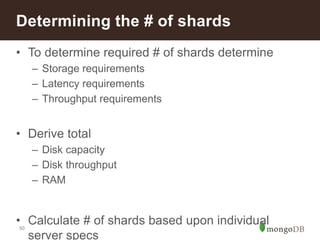

Sharding in MongoDB scales data and query load horizontally across multiple servers. To determine how many shards are needed, consider the total storage requirement, latency targets, and throughput requirements; together these determine the disk capacity, disk throughput, and RAM that must be available across the shards. MongoDB supports range, tag-aware, and hashed sharding, with range sharding best suited to query isolation. For performance and scalability, choose a high-cardinality shard key that matches the most common queries.
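
The sizing reasoning above can be illustrated with a rough calculation. The following is a minimal sketch, not a method taken from the slides: the `Workload` and `ServerSpec` types, the `estimate_shards` function, the `headroom` factor, and all the numbers are hypothetical and chosen only for illustration. It assumes the shard count is driven by whichever resource (disk capacity, RAM for the working set, or disk throughput) runs out first.

```python
import math
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical cluster-wide requirements (illustrative names)."""
    total_storage_gb: float   # data plus indexes
    working_set_gb: float     # frequently accessed data that should fit in RAM
    peak_iops: float          # peak disk operations per second


@dataclass
class ServerSpec:
    """Hypothetical capacity of a single shard server."""
    disk_gb: float
    ram_gb: float
    iops: float


def estimate_shards(workload: Workload, server: ServerSpec, headroom: float = 0.7) -> int:
    """Return the shard count needed by the most constrained resource.

    `headroom` is an assumed fraction of each server's capacity that we are
    willing to use, leaving the rest free for growth and load spikes.
    """
    if not 0 < headroom <= 1:
        raise ValueError("headroom must be in (0, 1]")
    by_disk = math.ceil(workload.total_storage_gb / (server.disk_gb * headroom))
    by_ram = math.ceil(workload.working_set_gb / (server.ram_gb * headroom))
    by_iops = math.ceil(workload.peak_iops / (server.iops * headroom))
    # The resource that demands the most shards sets the cluster size.
    return max(1, by_disk, by_ram, by_iops)


# Example with made-up numbers: 3 TB of data, 400 GB working set, 12k peak IOPS.
workload = Workload(total_storage_gb=3000, working_set_gb=400, peak_iops=12000)
server = ServerSpec(disk_gb=1000, ram_gb=128, iops=5000)
shards = estimate_shards(workload, server)
```

Latency targets are not modeled directly here; in this sketch they are assumed to be reflected in how large the working set must be to stay in RAM and in how much headroom is left on each server.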