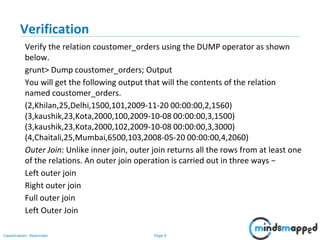

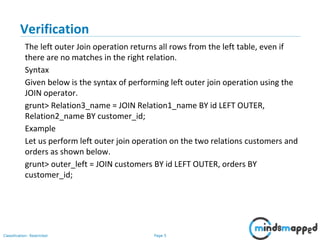

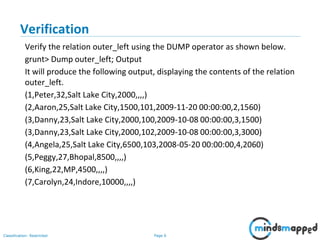

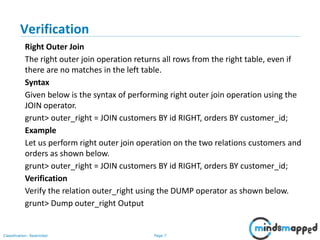

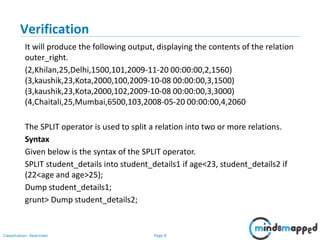

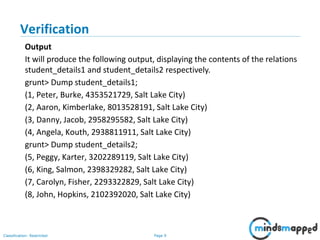

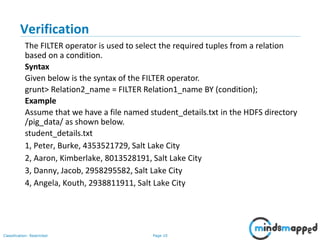

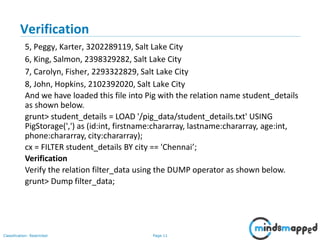

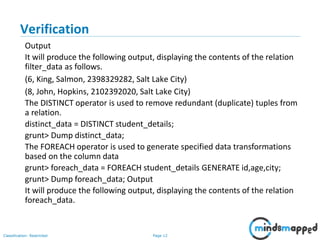

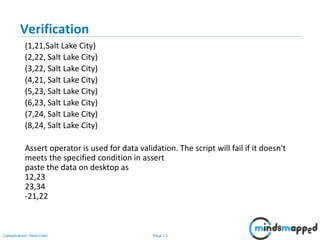

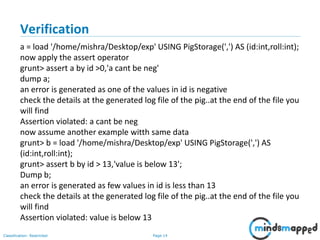

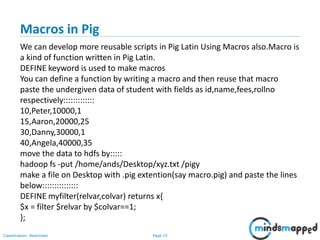

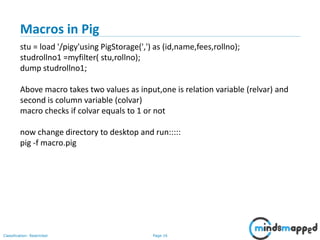

The document provides a comprehensive overview of using Pig, a high-level platform for creating programs that run on Apache Hadoop. It covers various join operations (inner, left outer, right outer), the split operator, filter and distinct operators, the foreach operator for data transformations, and the assert operator for data validation. Additionally, it discusses the creation of reusable scripts with macros in Pig Latin and outlines topics for the next training session.