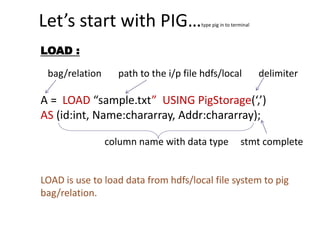

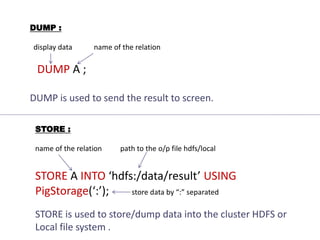

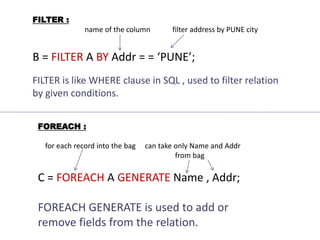

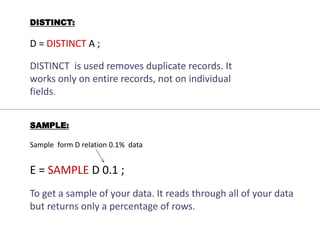

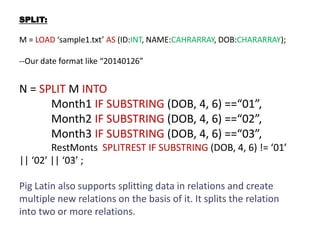

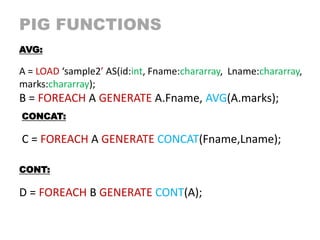

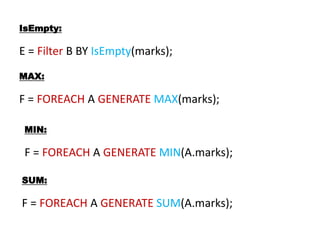

Pig is a platform for analyzing large datasets using a high-level language called Pig Latin. Pig Latin scripts are compiled into MapReduce jobs for execution. Pig can load, filter, join, group, order, and store data. Common operations include LOAD to import data, FILTER to filter records, FOREACH to generate new fields, DISTINCT to remove duplicates, and STORE to export data. Pig also supports functions like AVG, MAX, MIN, SUM, and TOKENIZE to analyze data.

![data = <1 , {<2,3>,<4,5>,<6,7>},["key":"value"]>

Method Example Result

Position $0 1

Name field2 bag{<2,3>,<4,5>,<6,7>}

Projection field2.$1 bag{<3>,<5>,<7>}

Function AVG(field2.$0) (2+4+6)/3=4

Conditional field1 == 1? 'yes' : 'no' yes

Lookup field3#'key' value

• Collection of statements

• Statements built using operators,

expressions and return relations.

• Data in Relations:

• Atom * Tuple * Bag * Map –

Field

DATA PROCESS COMBINE VIEW

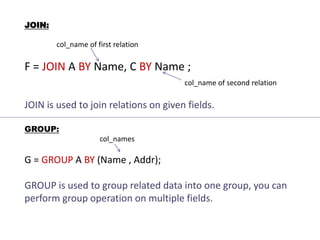

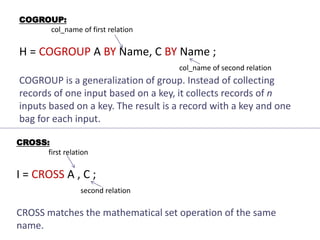

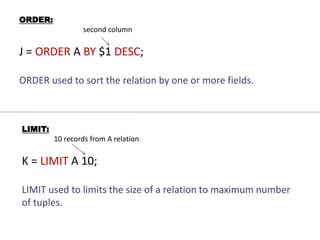

LOAD FILTER JOIN ORDER

DUMP FOREACH GROUP LIMIT

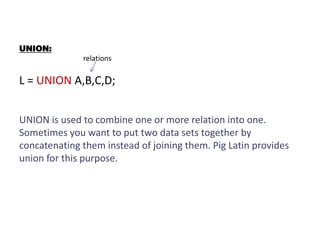

STORE DISTINCT COGROUP UNION

SAMPLE CROSS SPLIT

Common Operations

Pig Latin](https://image.slidesharecdn.com/pigstatements-150126114014-conversion-gate02/85/Pig-statements-3-320.jpg)