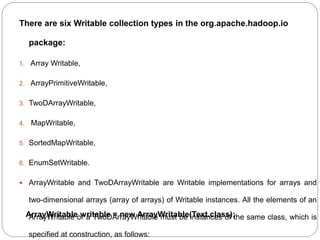

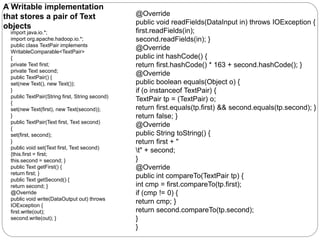

The document discusses six writable collection types in Hadoop: ArrayWritable, ArrayPrimitiveWritable, TwoDArrayWritable, MapWritable, SortedMapWritable, and EnumSetWritable. It describes how ArrayWritable and TwoDArrayWritable store arrays and two-dimensional arrays of writable instances. It also discusses how to create custom writable types like TextPair to represent user-defined data types for MapReduce.