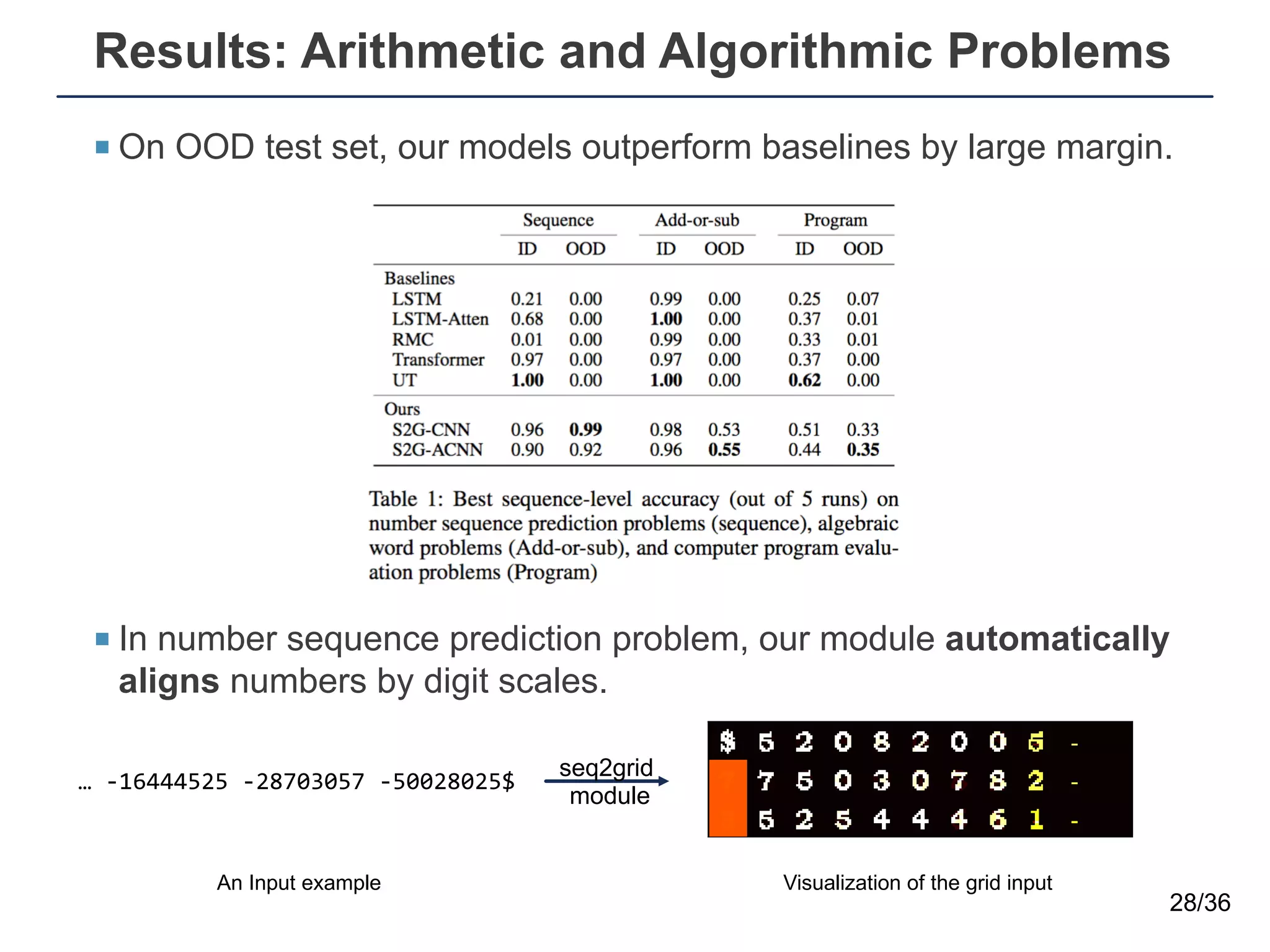

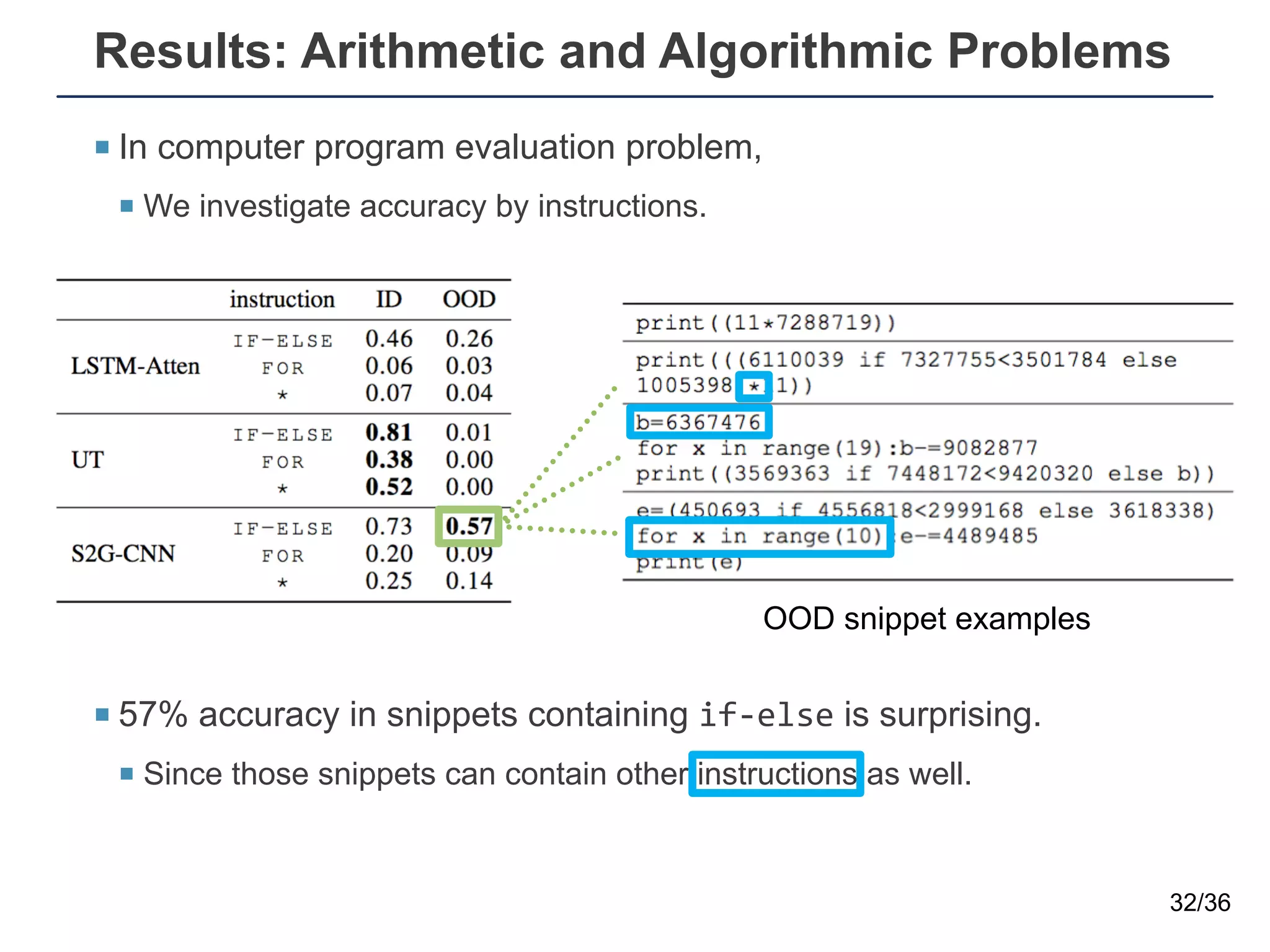

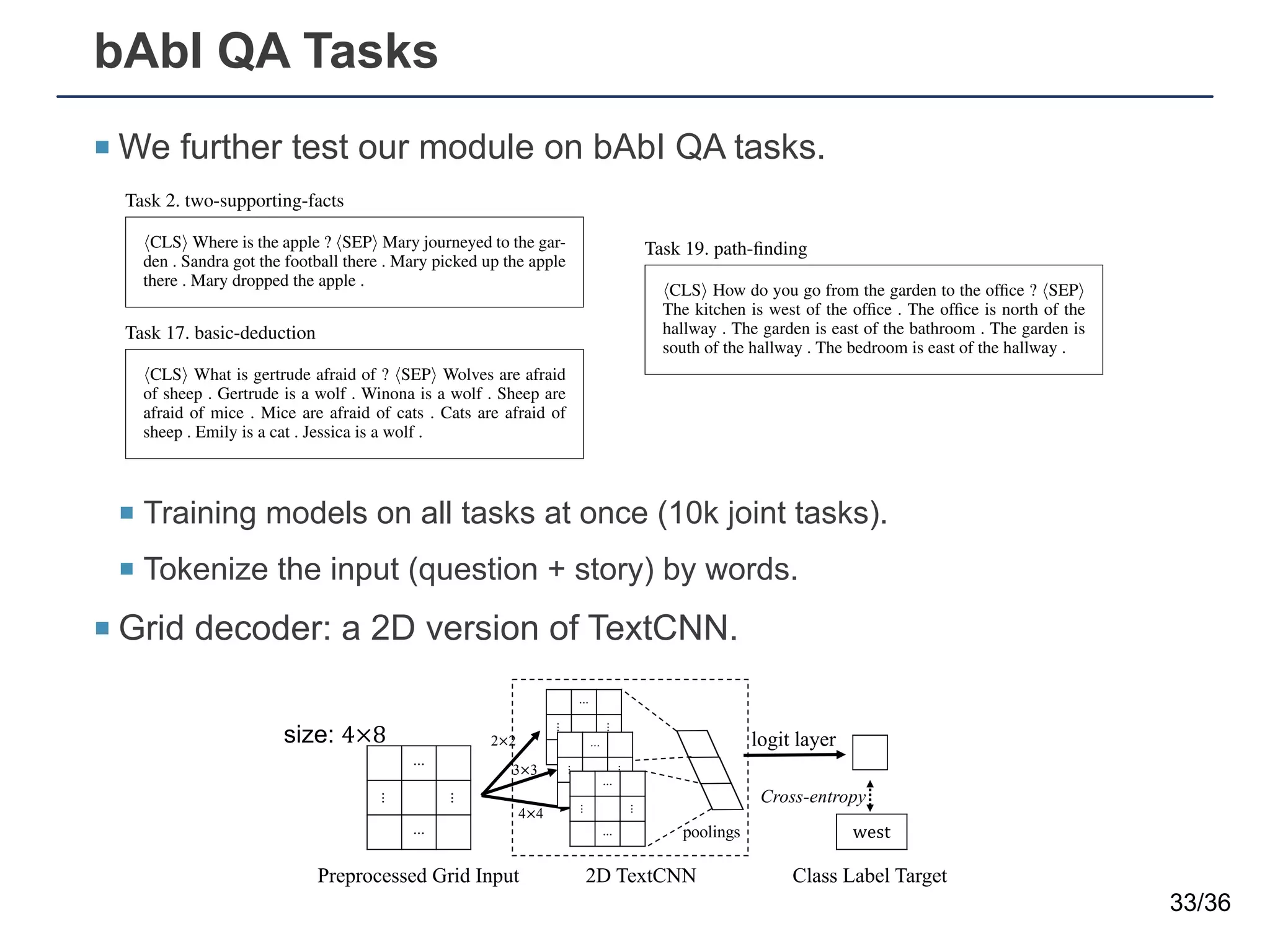

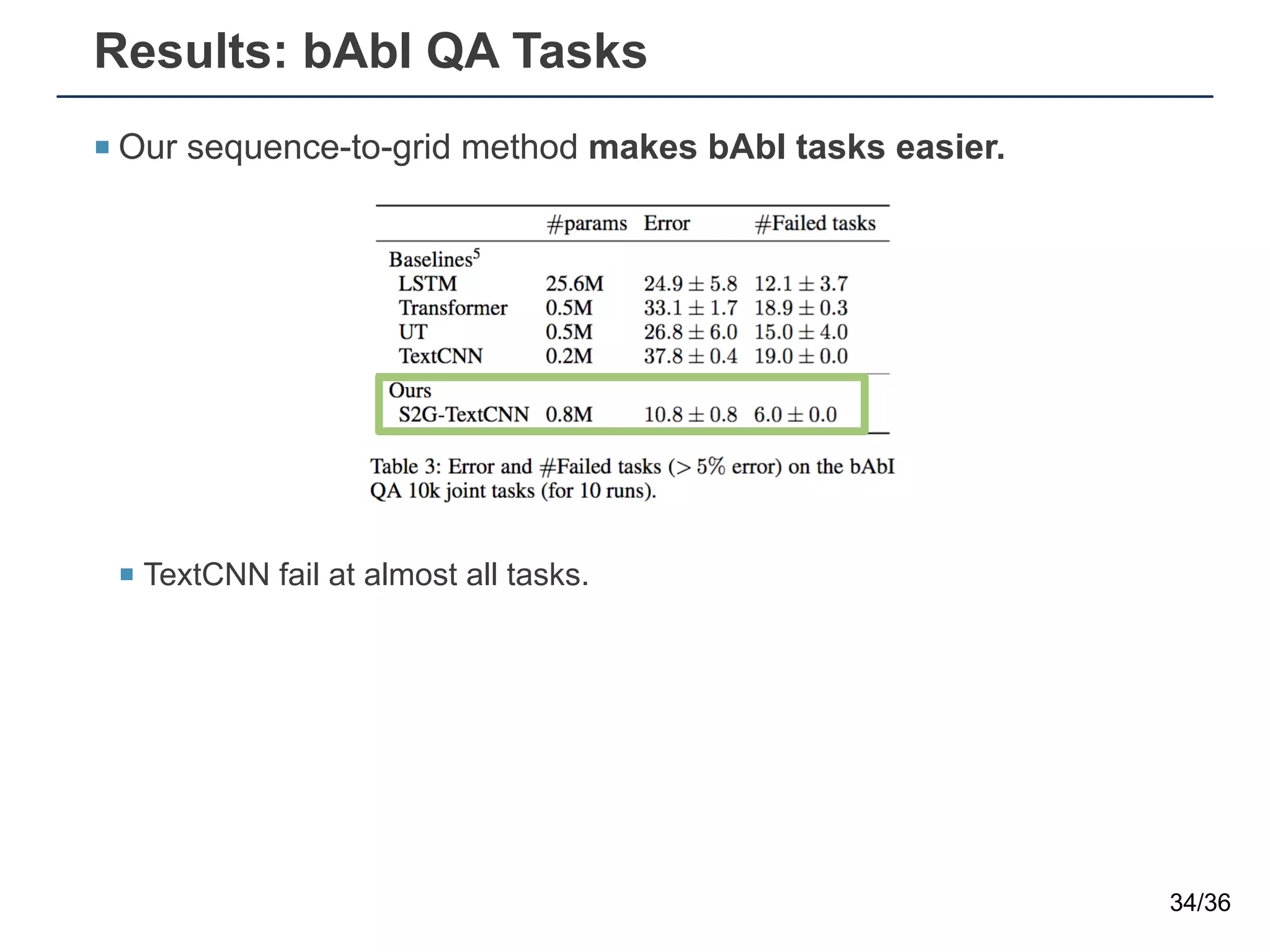

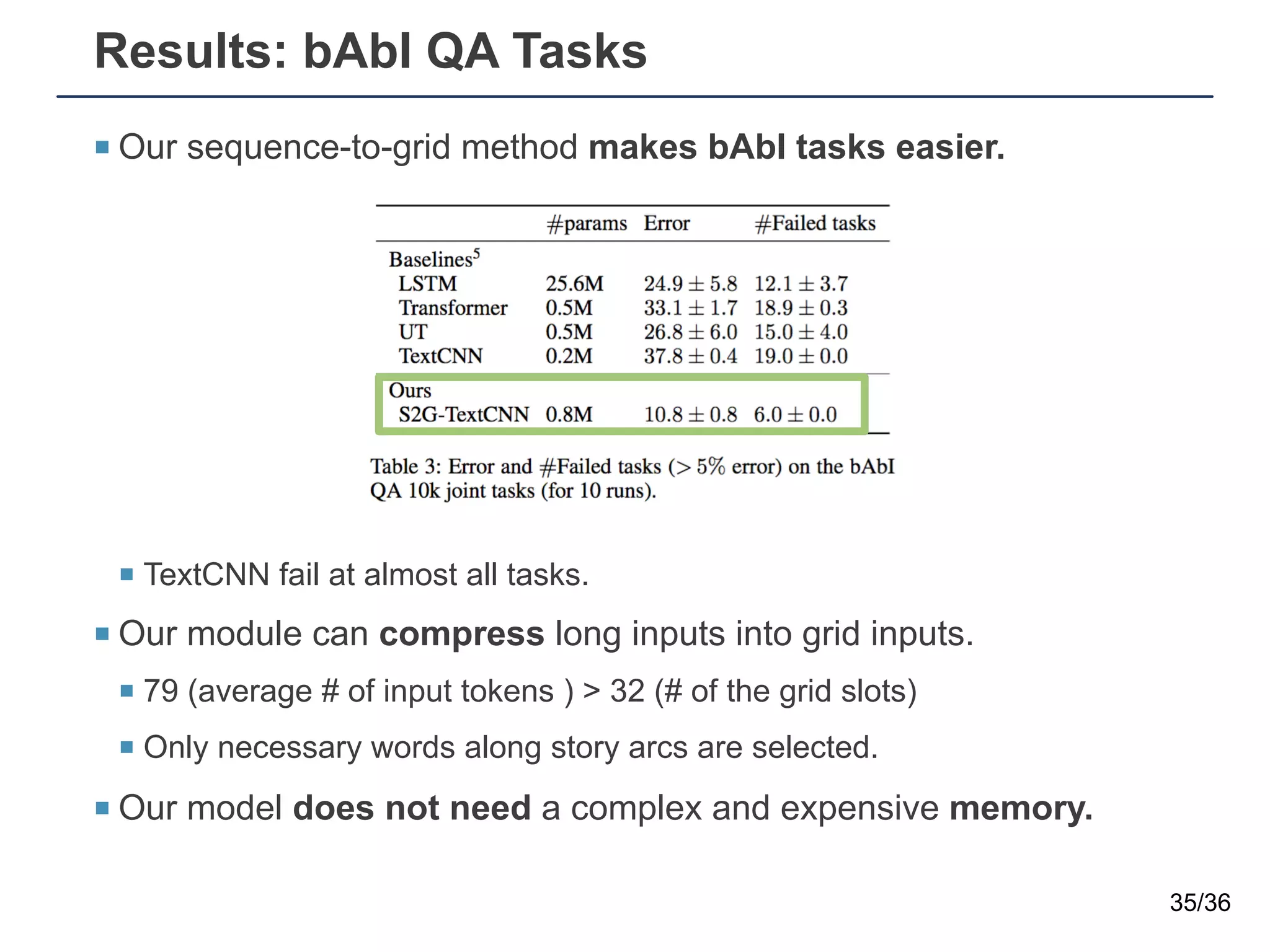

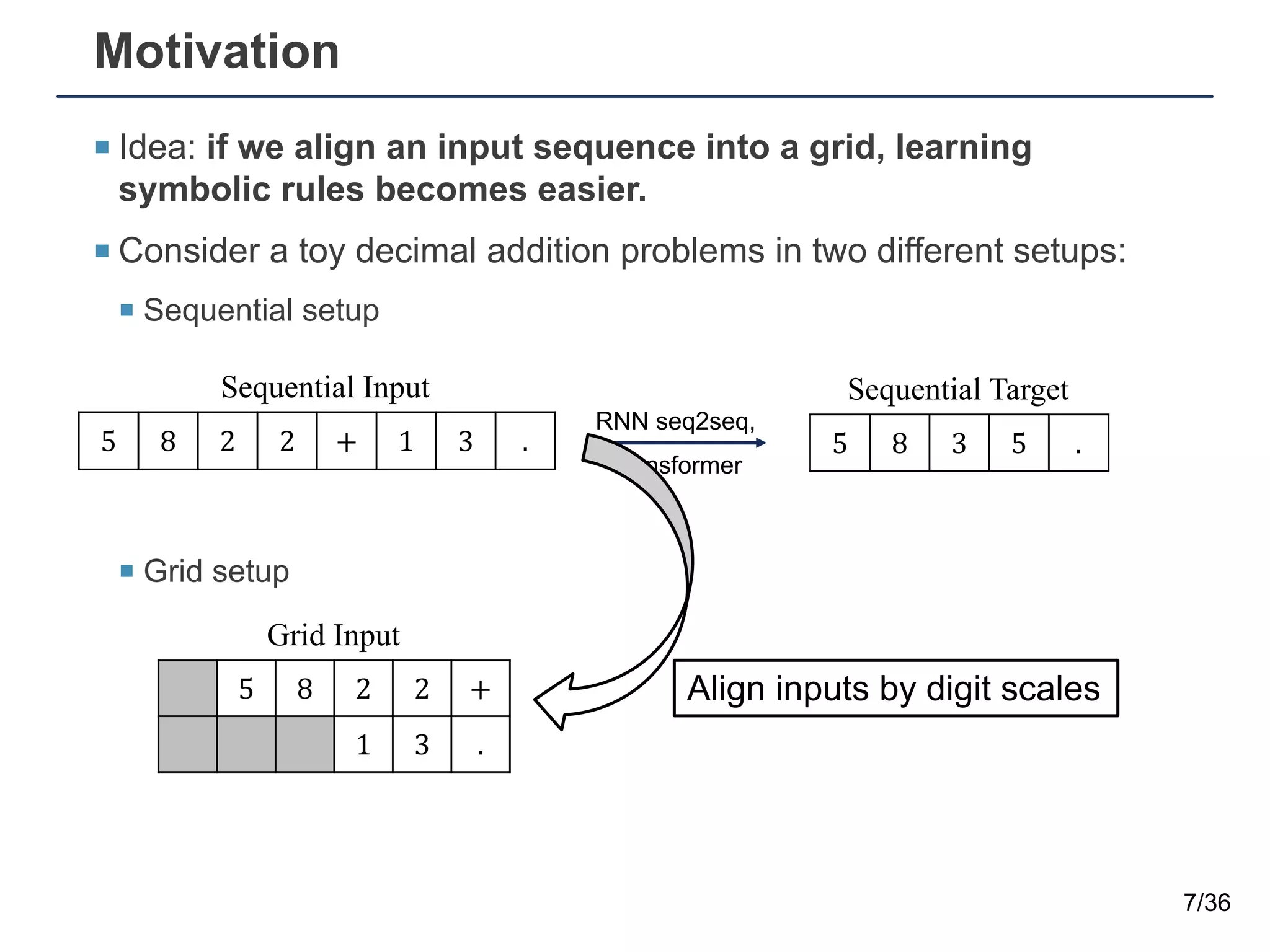

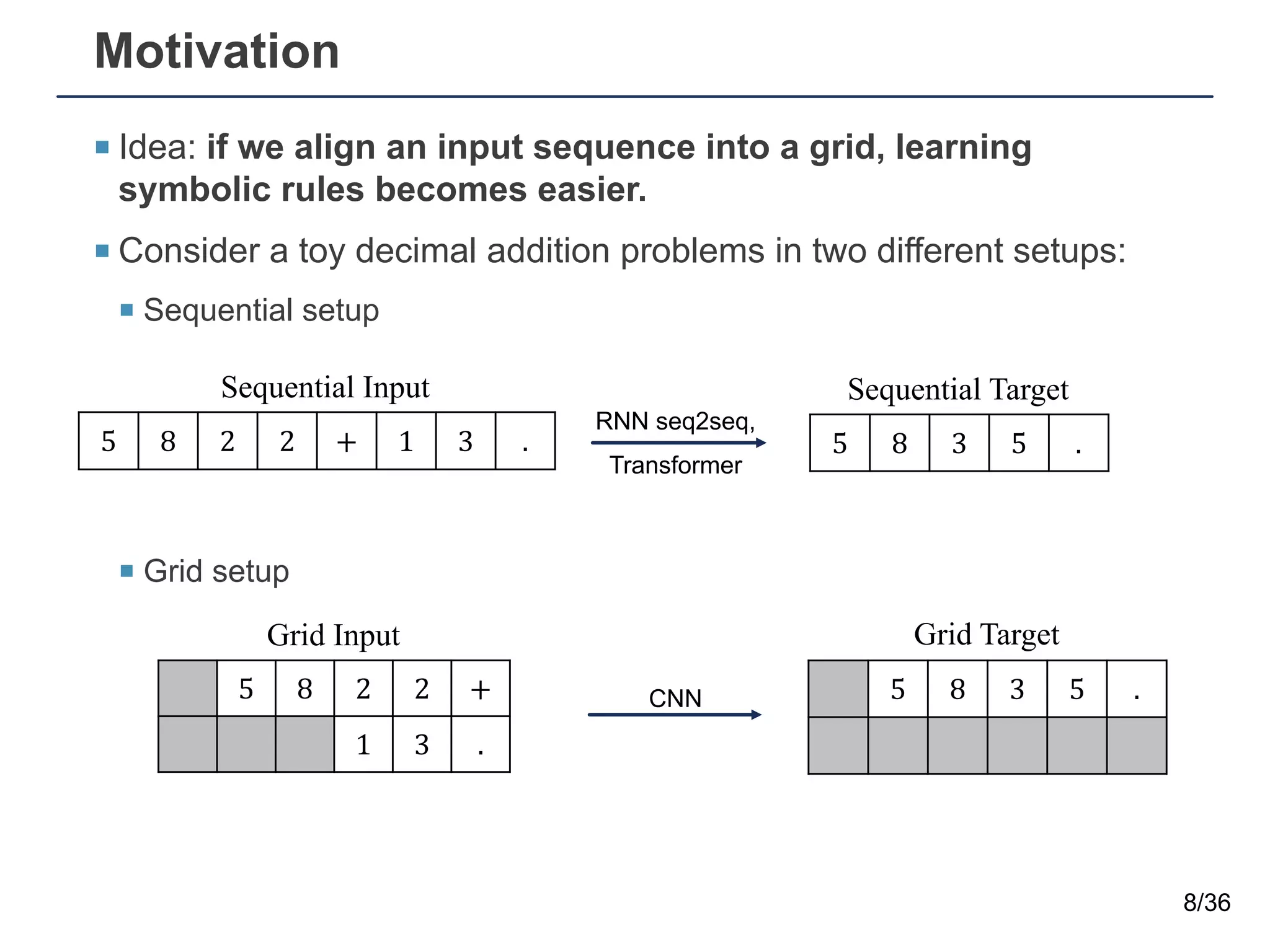

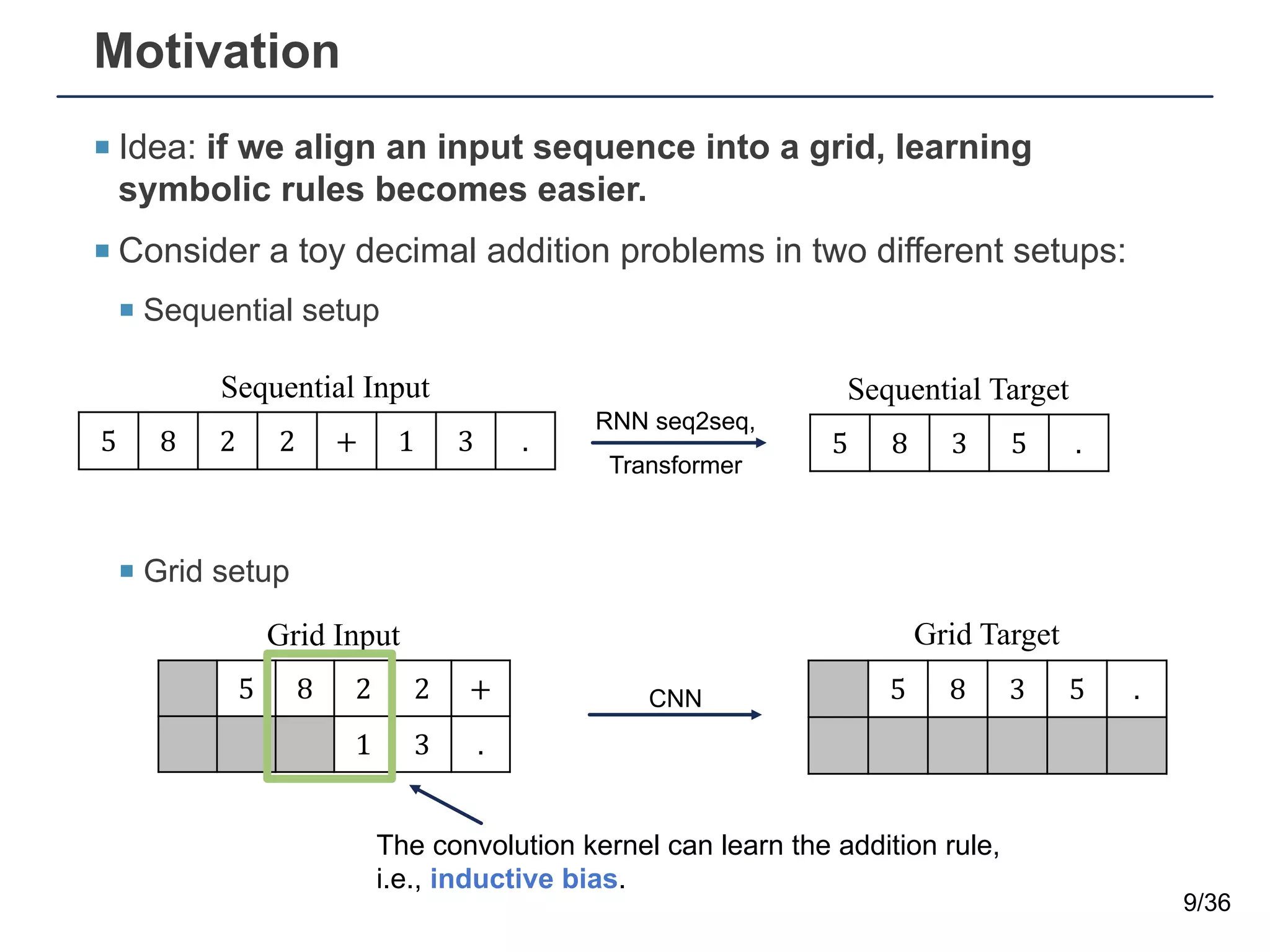

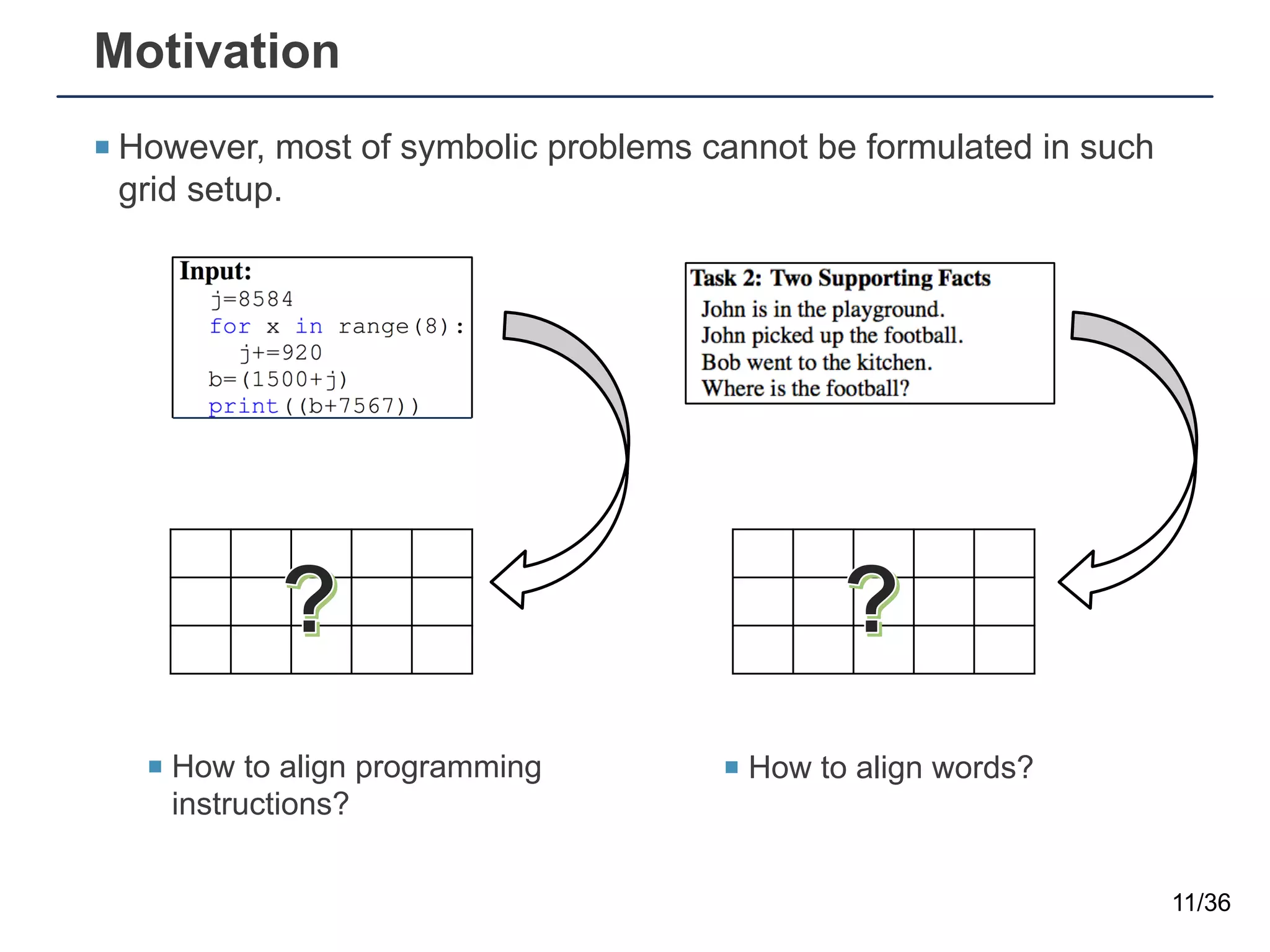

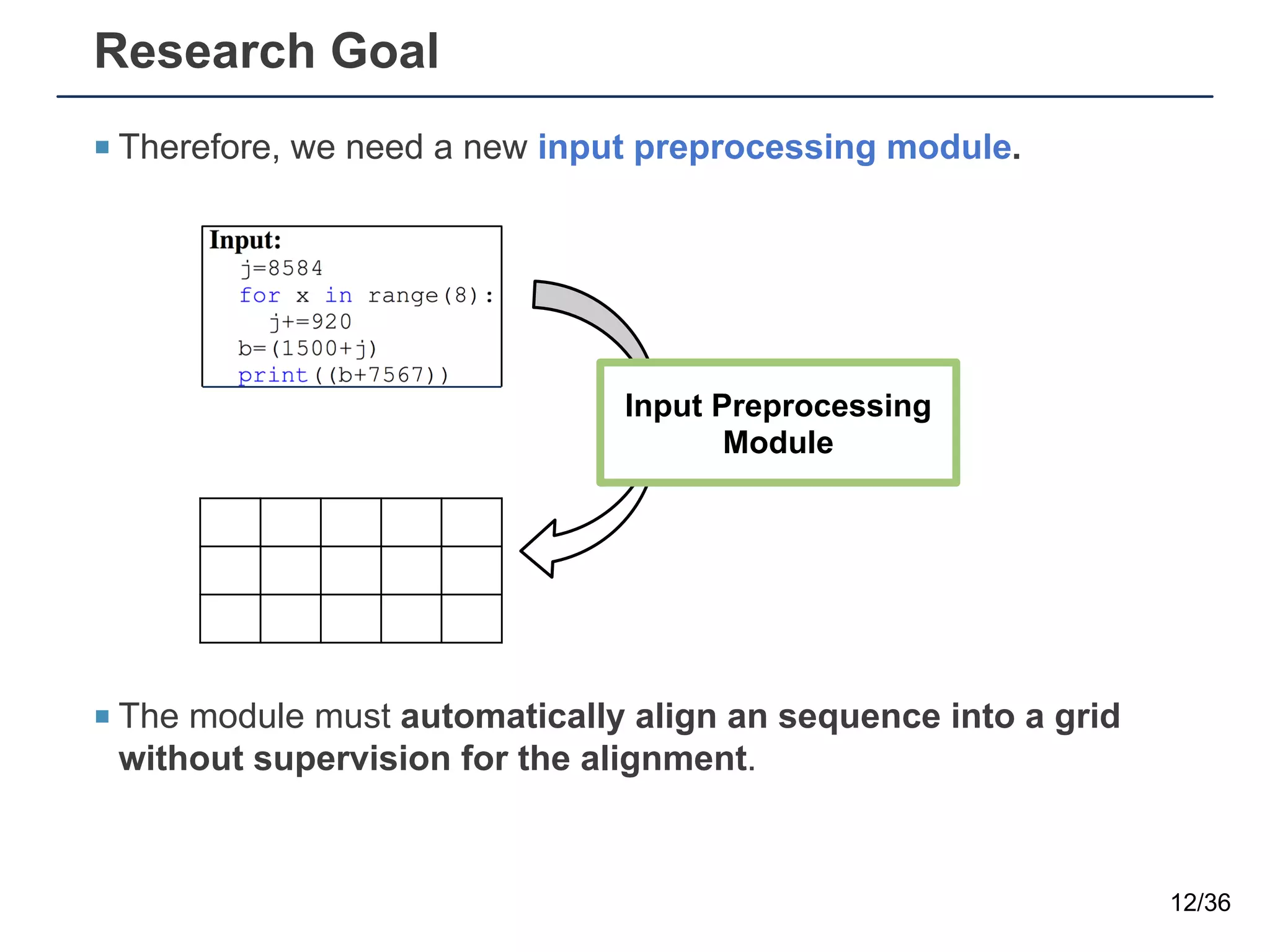

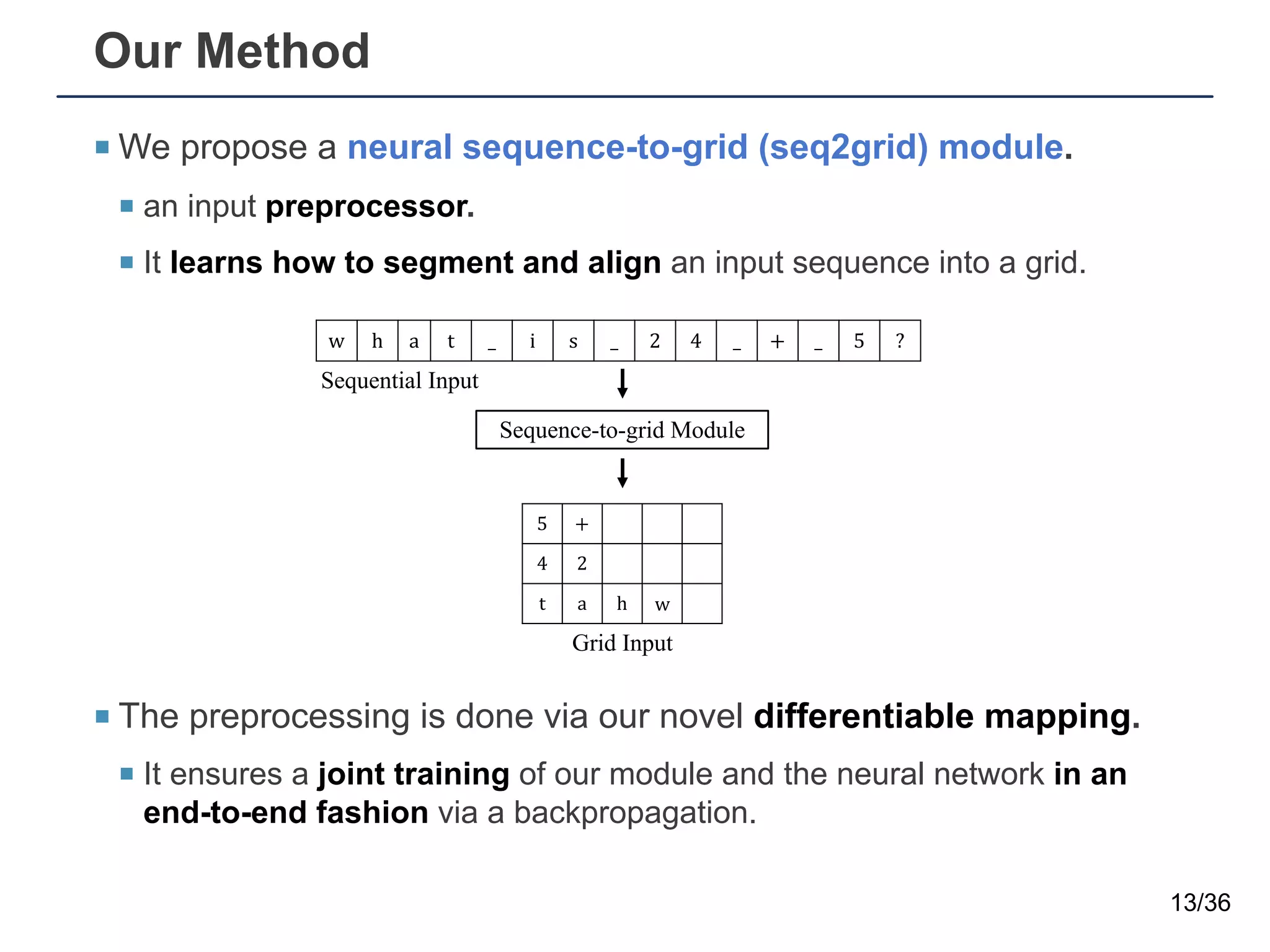

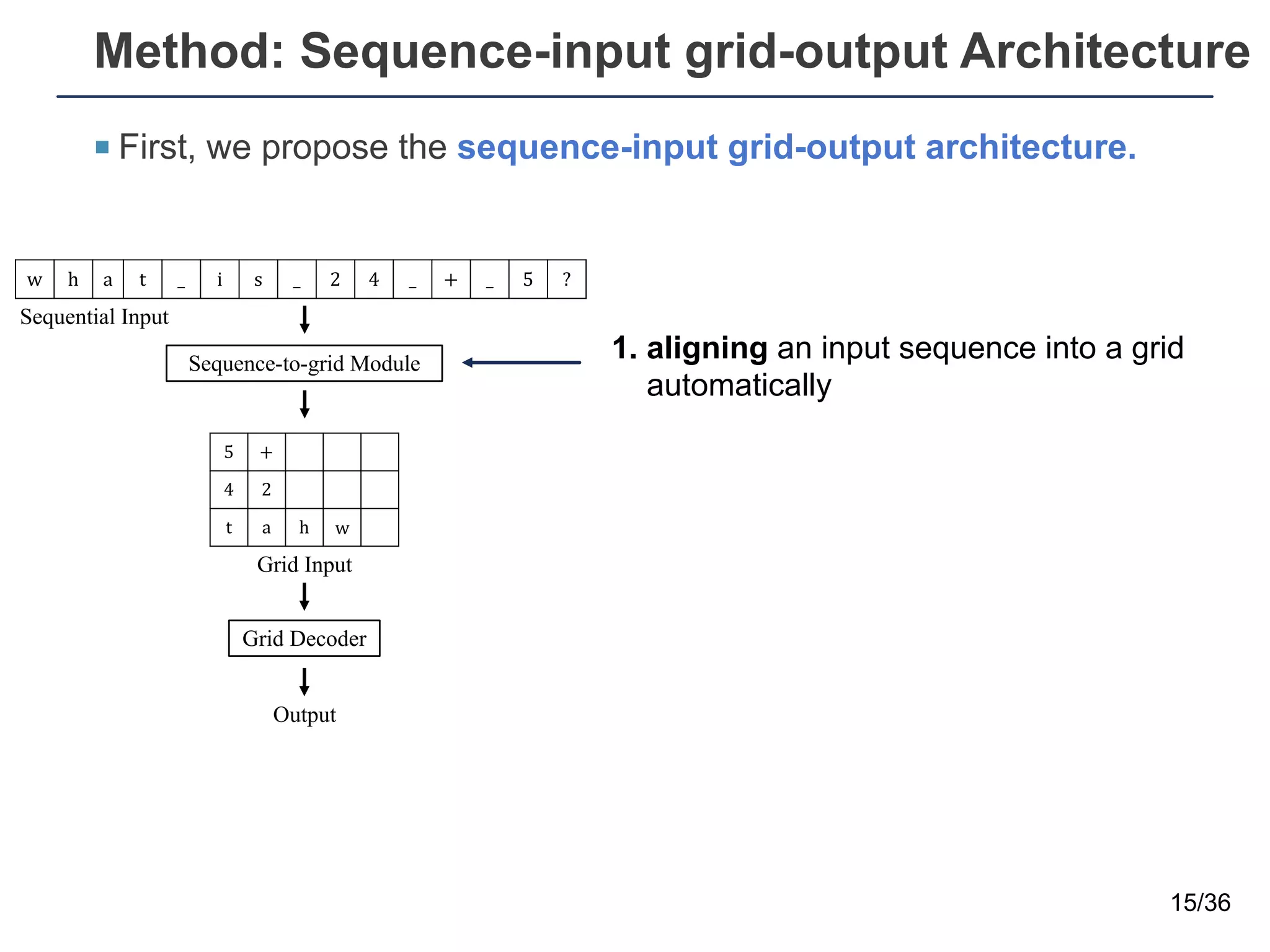

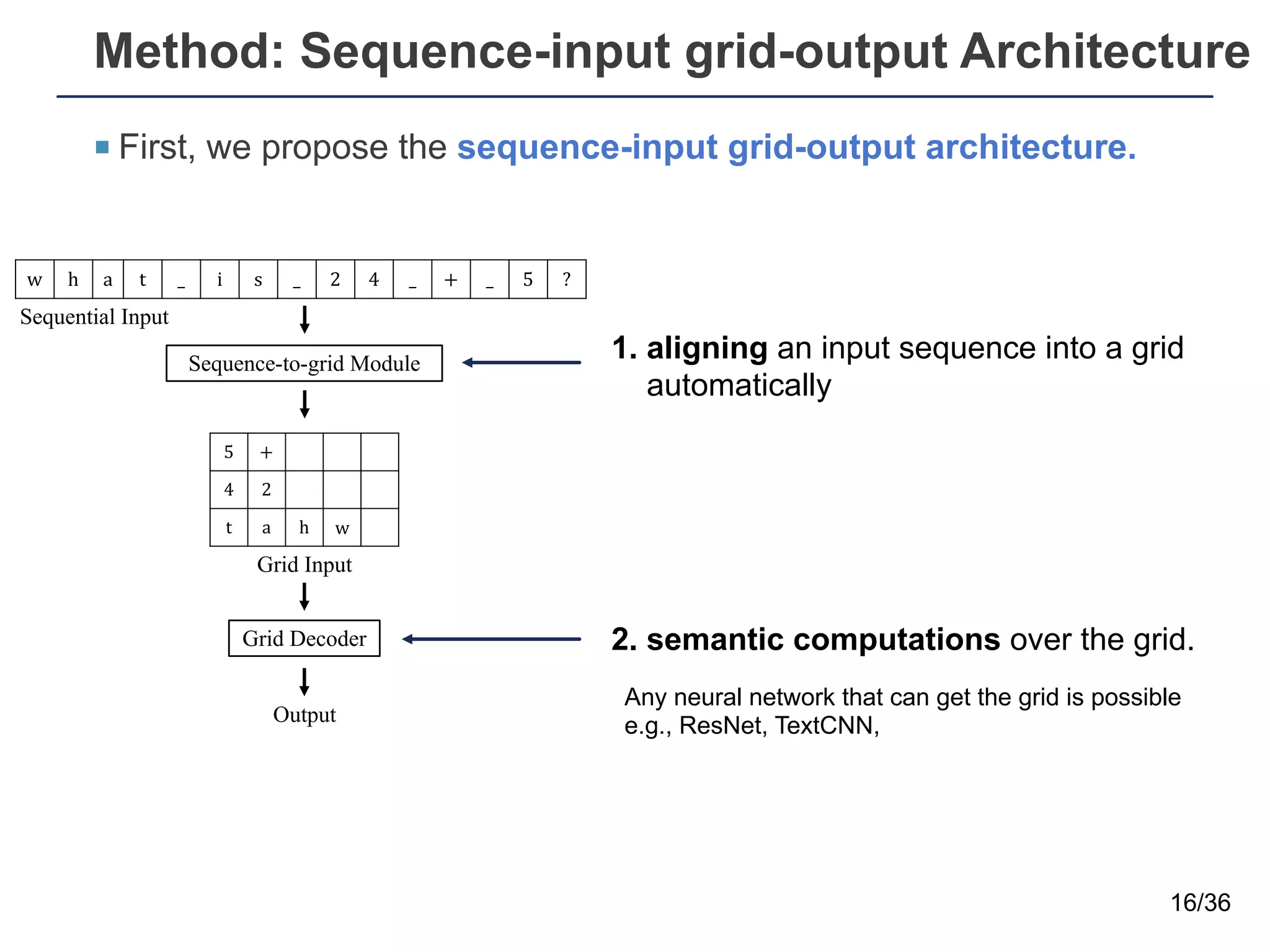

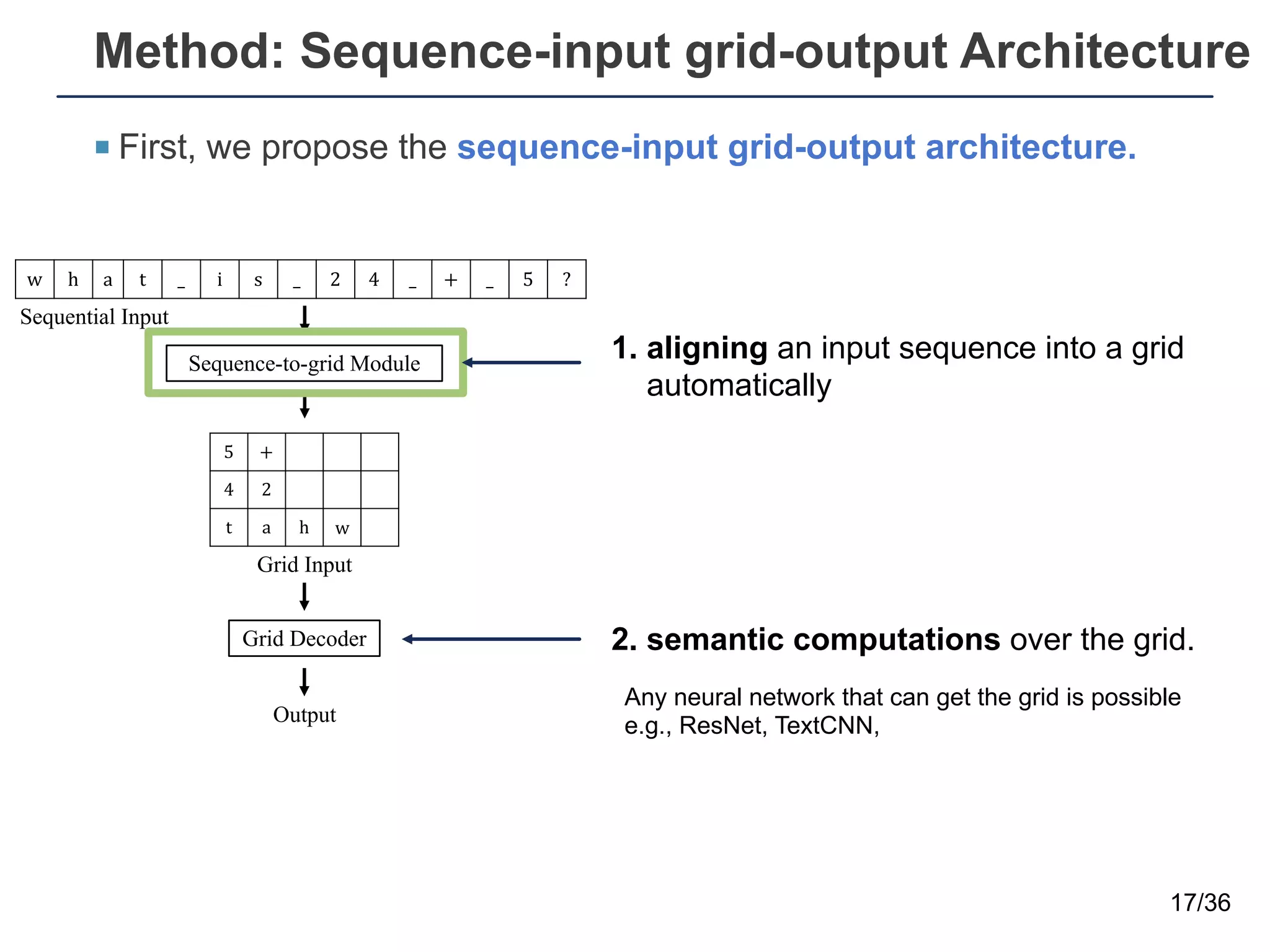

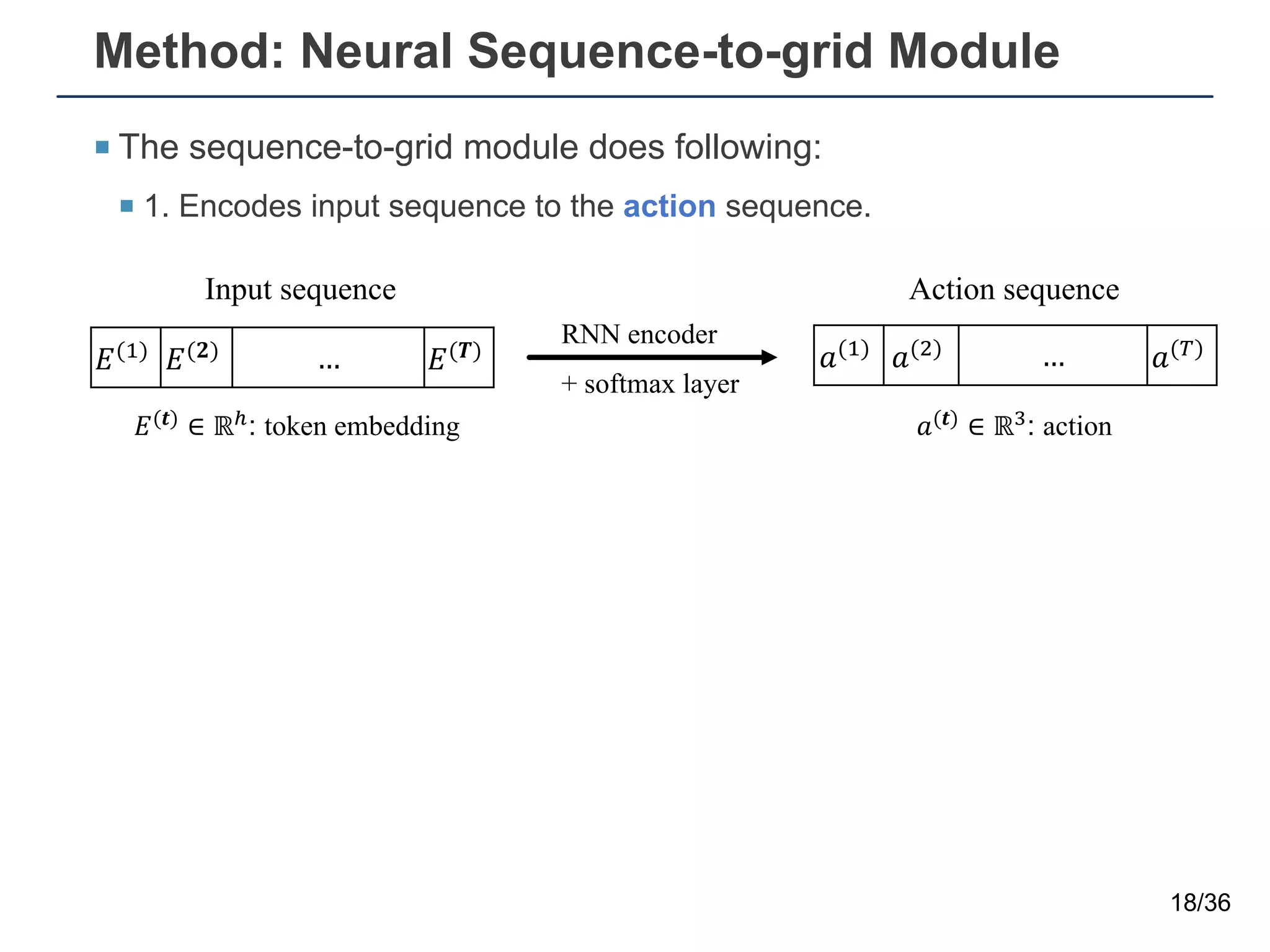

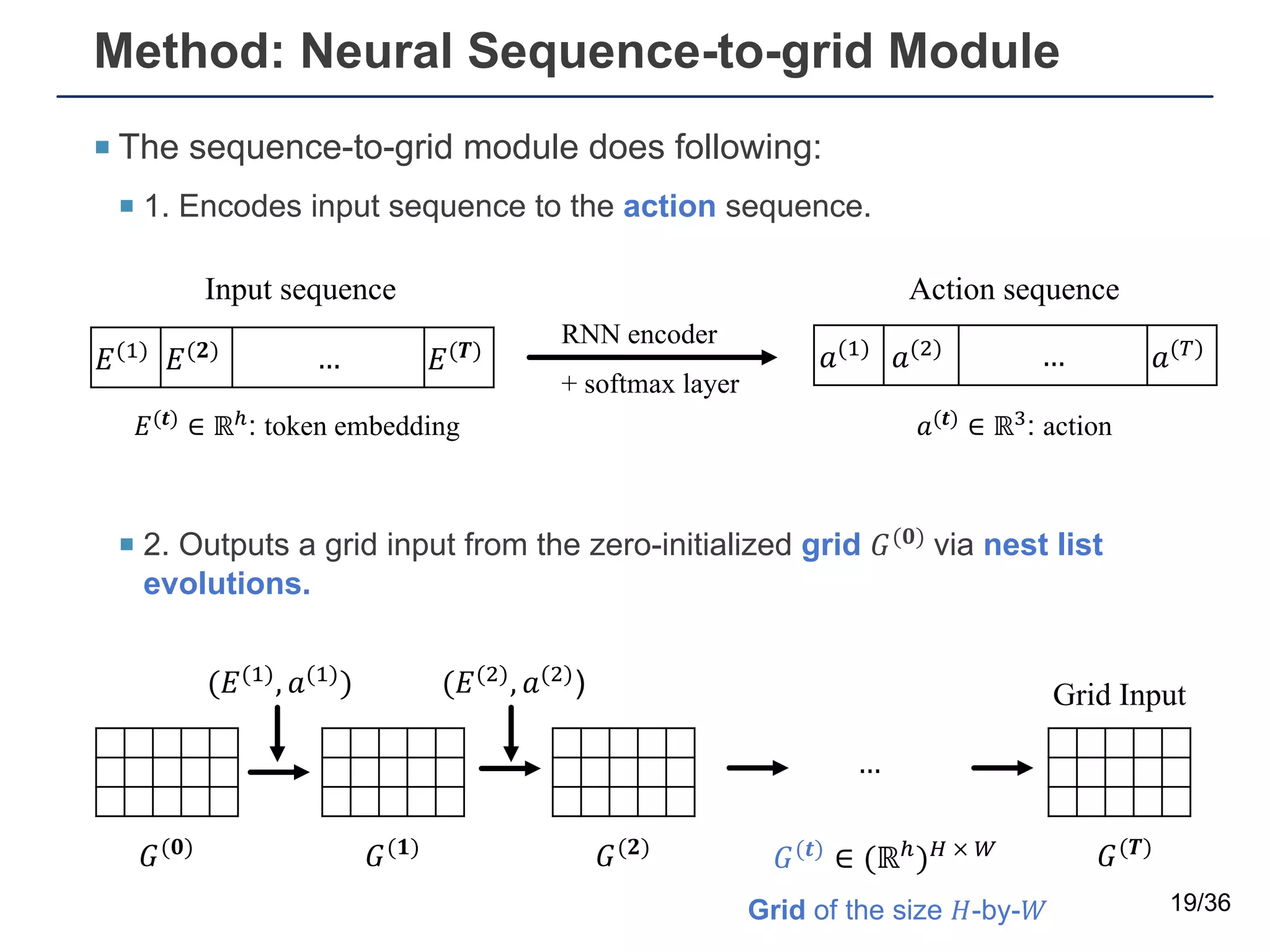

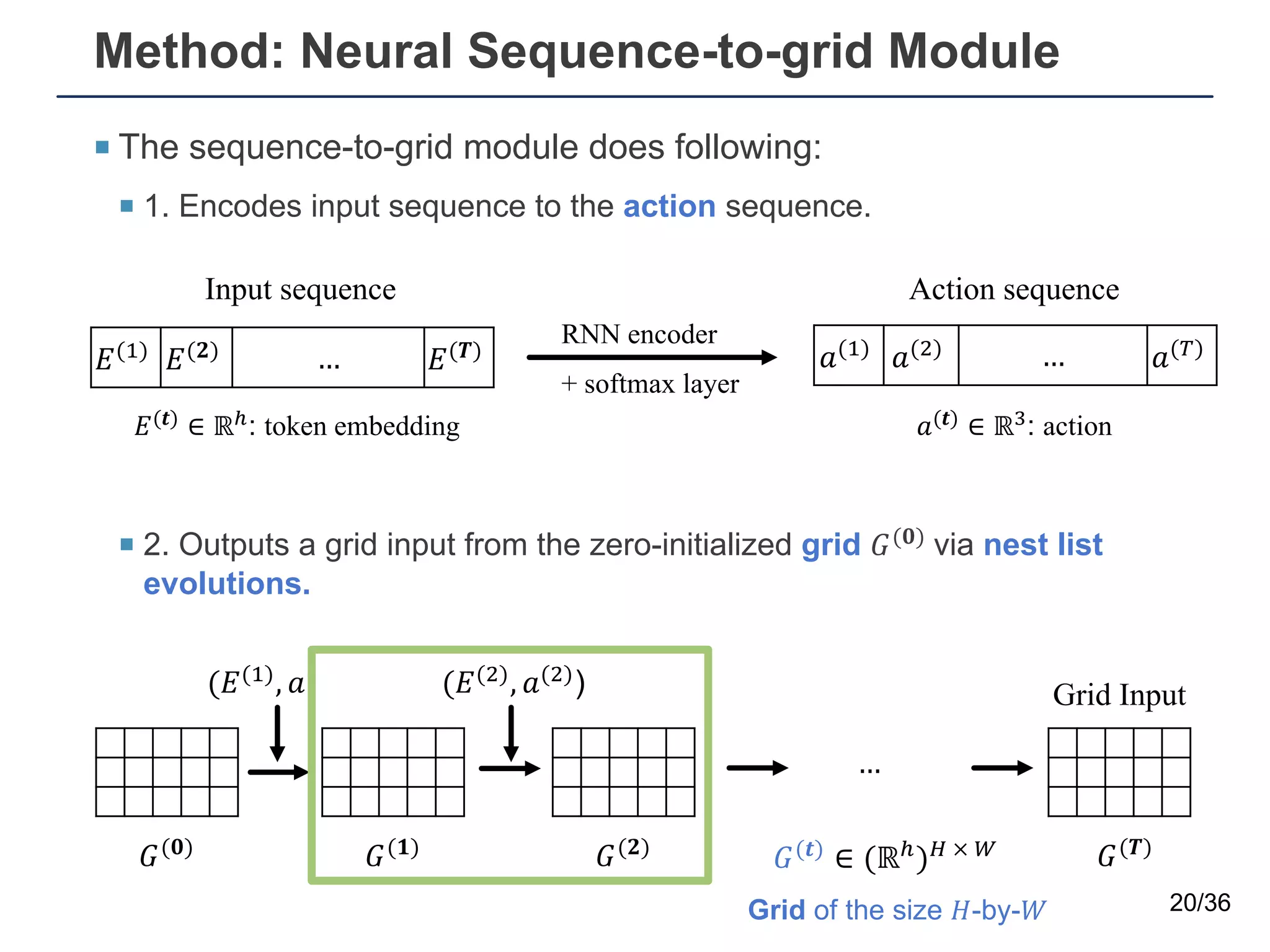

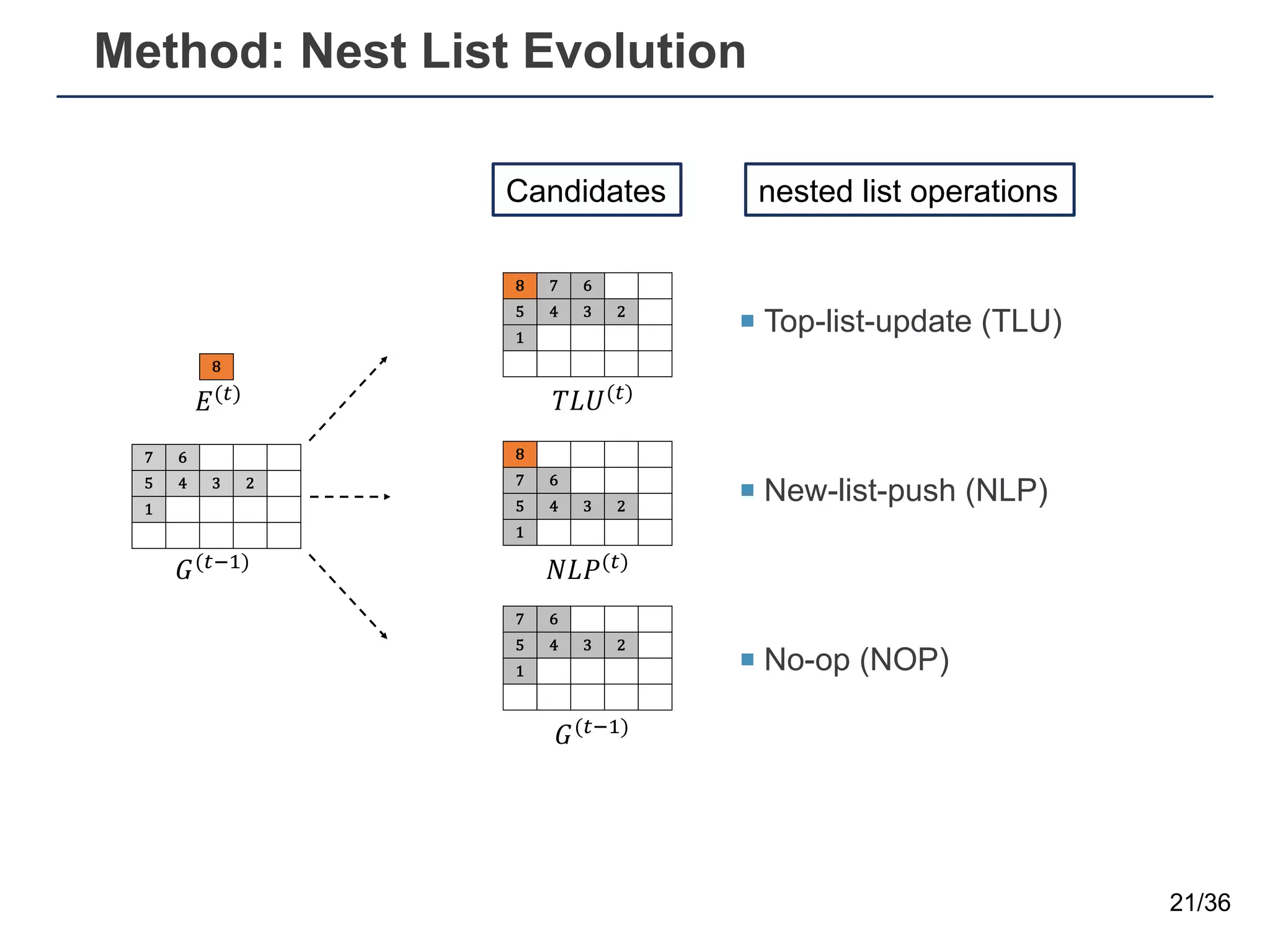

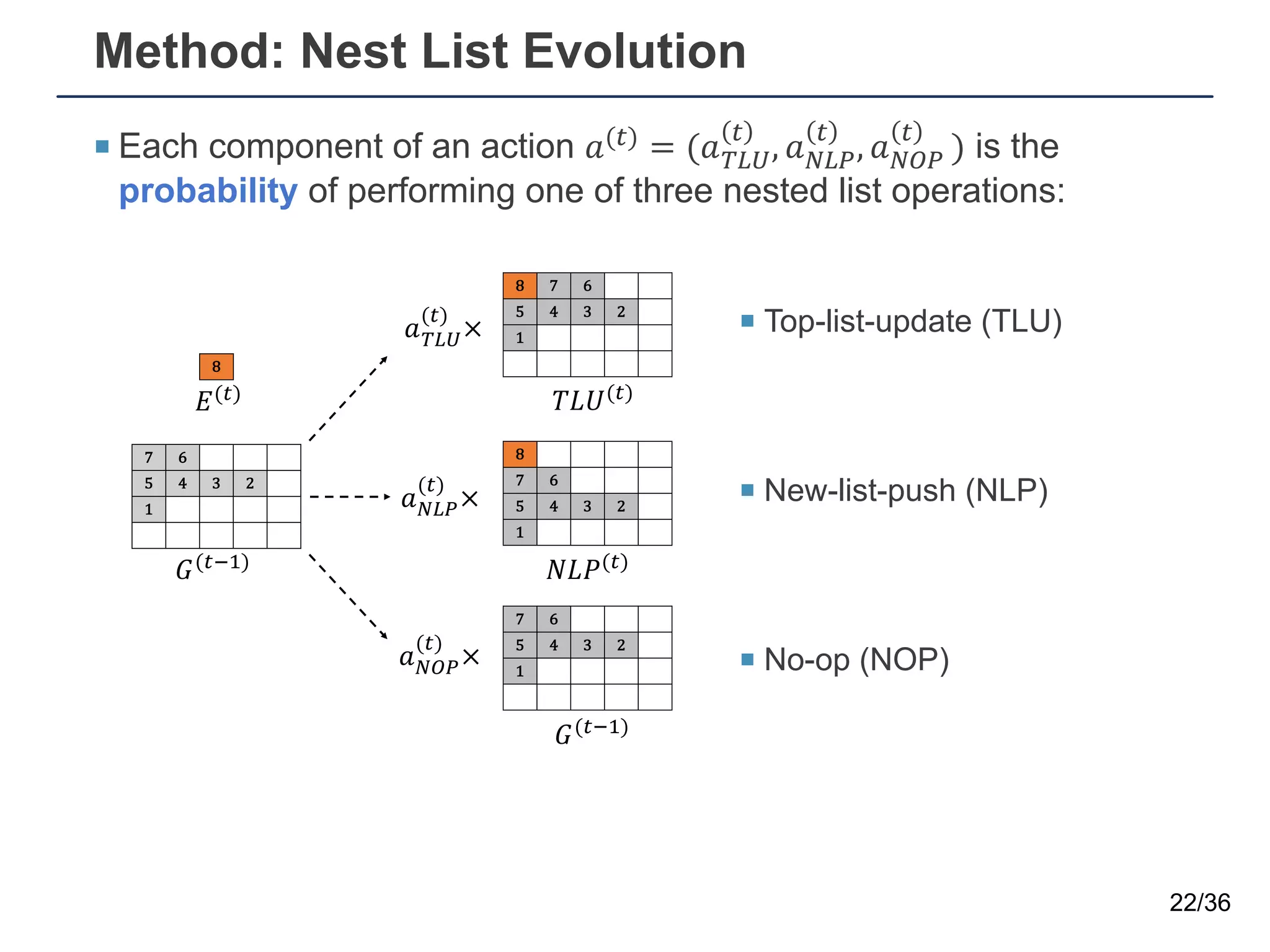

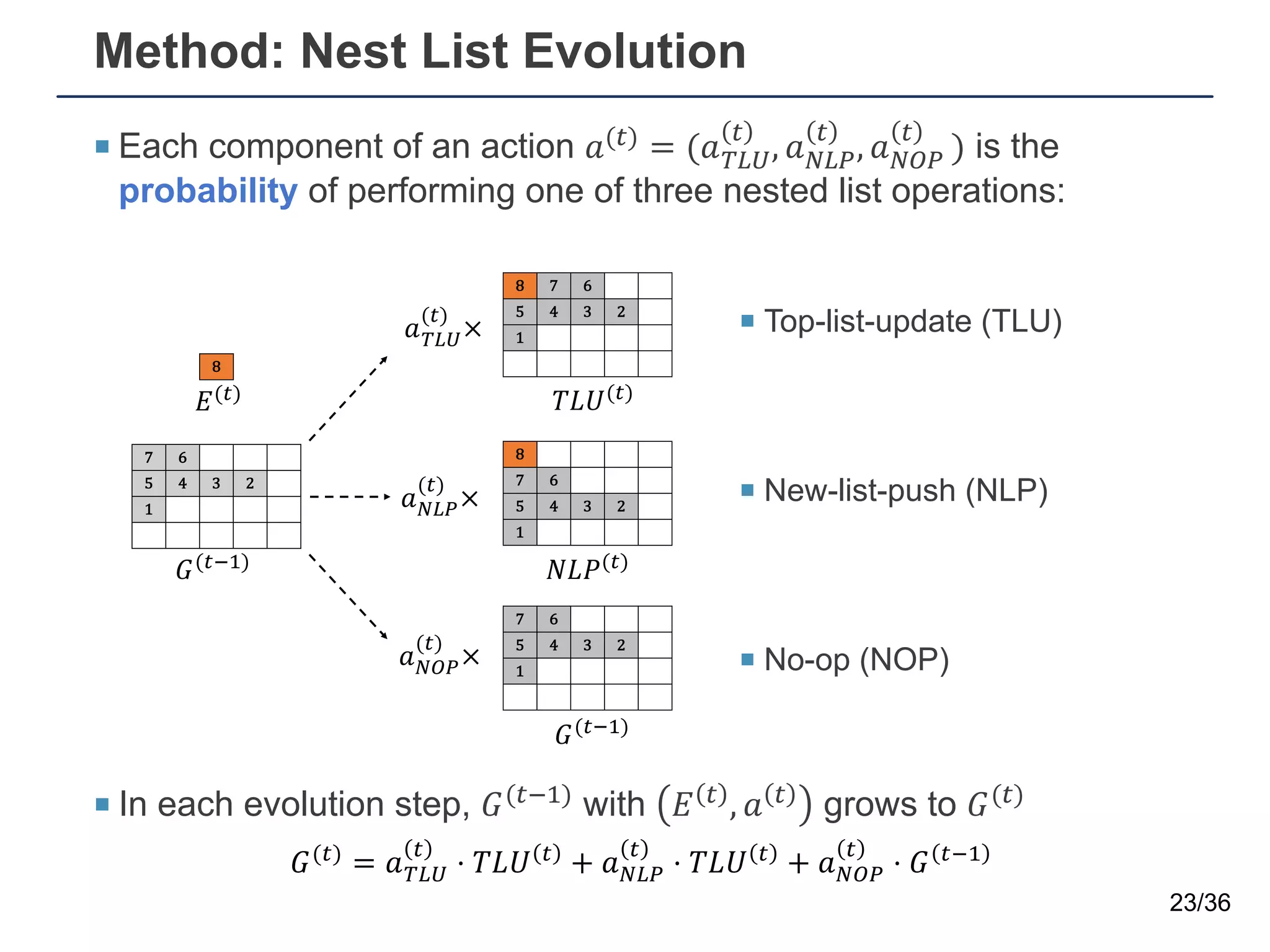

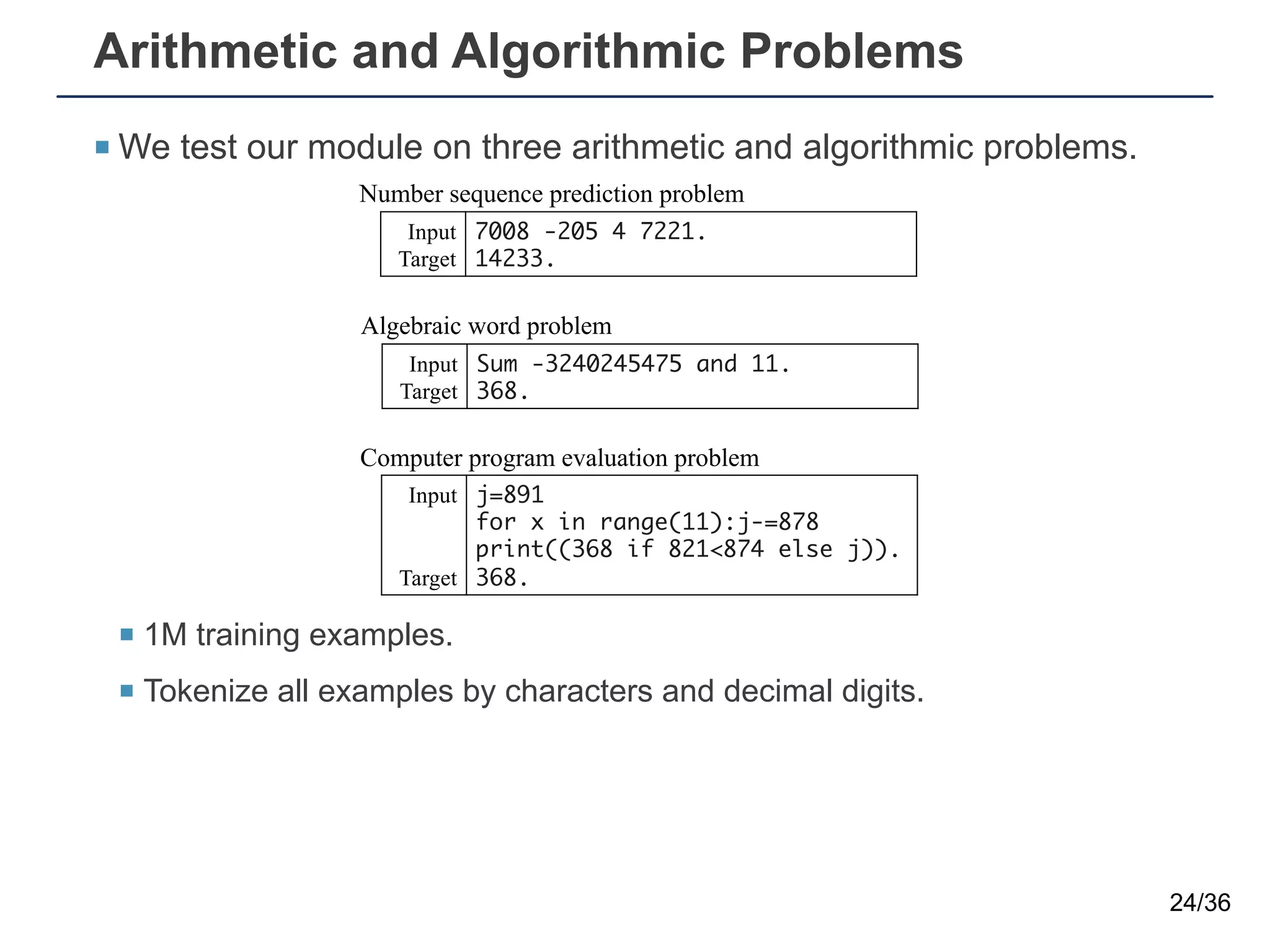

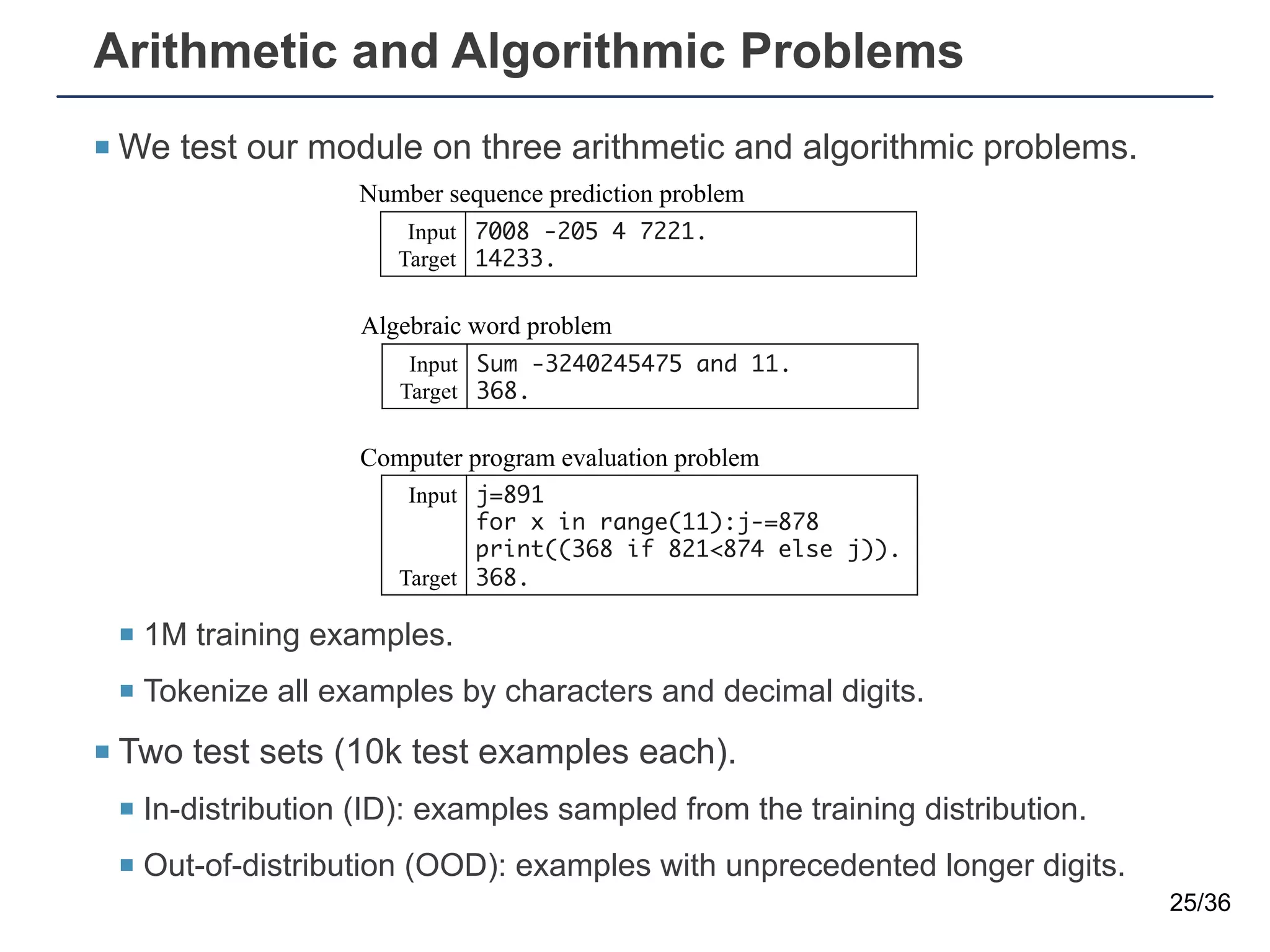

The slides present a neural sequence-to-grid (seq2grid) module that helps deep learning models learn symbolic rules by aligning an input sequence into a grid. The method improves performance on out-of-distribution (OOD) examples, particularly in arithmetic and algorithmic problems. The seq2grid module is trained jointly with the downstream neural network, so it learns to preprocess inputs in a way that supports generalization.

![Background (slide 2 of 36)](https://image.slidesharecdn.com/seq2gridaaai2021-210219005234/75/Seq2grid-aaai-2021-2-2048.jpg)

- Symbolic reasoning problems are testbeds for assessing the logical inference abilities of deep learning models.

Figure panels: program code evaluation [1]; bAbI tasks [2].

[1] Zaremba, et al. Learning to execute. arXiv 2014.

[2] Weston, et al. Towards AI-complete question answering: A set of prerequisite toy tasks. ICLR 2016.

![Background (slide 3 of 36)](https://image.slidesharecdn.com/seq2gridaaai2021-210219005234/75/Seq2grid-aaai-2021-3-2048.jpg)

- The determinism of symbolic problems allows us to systematically test deep learning models with out-of-distribution (OOD) data.
- Humans with an algebraic mind can naturally extend learned rules.

| Training examples | OOD test examples |
| --- | --- |
| 5 8 2 + 1 = | 3 0 5 3 4 + 4 2 1 = |
| 6 7 + 3 = | 6 9 5 2 1 + 5 0 0 2 9 = |
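The training/OOD split shown on this slide can be sketched as a small data generator. This is only an illustration: the digit ranges (1 to 3 digits for training, 4 to 5 for OOD testing), the string format, and the helper names `sample_number` and `make_addition_example` are assumptions, not the settings used in the slides.

```python
import random


def sample_number(rng, min_digits, max_digits):
    """Draw a non-negative integer whose digit count lies in [min_digits, max_digits]."""
    n_digits = rng.randint(min_digits, max_digits)
    low = 0 if n_digits == 1 else 10 ** (n_digits - 1)
    return rng.randint(low, 10 ** n_digits - 1)


def make_addition_example(rng, min_digits, max_digits):
    """Return a (question, answer) pair such as ("582+1=", "583")."""
    a = sample_number(rng, min_digits, max_digits)
    b = sample_number(rng, min_digits, max_digits)
    # Because the task is deterministic, the label is computed rather than annotated.
    return f"{a}+{b}=", str(a + b)


rng = random.Random(0)
# Assumed ranges: short operands for training, strictly longer ones for OOD testing.
train_set = [make_addition_example(rng, 1, 3) for _ in range(5)]
ood_set = [make_addition_example(rng, 4, 5) for _ in range(5)]
```

Since the two digit ranges do not overlap, every OOD question contains operands longer than any seen in training, which is one simple way to test whether a learned rule extends. The slide's own OOD examples also mix a long operand with a short one, which this sketch does not reproduce.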

![Background (slide 4 of 36)](https://image.slidesharecdn.com/seq2gridaaai2021-210219005234/75/Seq2grid-aaai-2021-4-2048.jpg)

- However, deep learning models cannot extend learned rules to out-of-distribution (OOD) examples.

Figure panels: number sequence prediction problems [3]; middle school level mathematics problems [4]. Axis labels: Error, Accuracy.

[3] Nam, et al. Number sequence prediction problems and computational power of neural networks. AAAI 2019.

[4] Saxton, et al. Analysing Mathematical Reasoning Abilities of Neural Models. ICLR 2019.

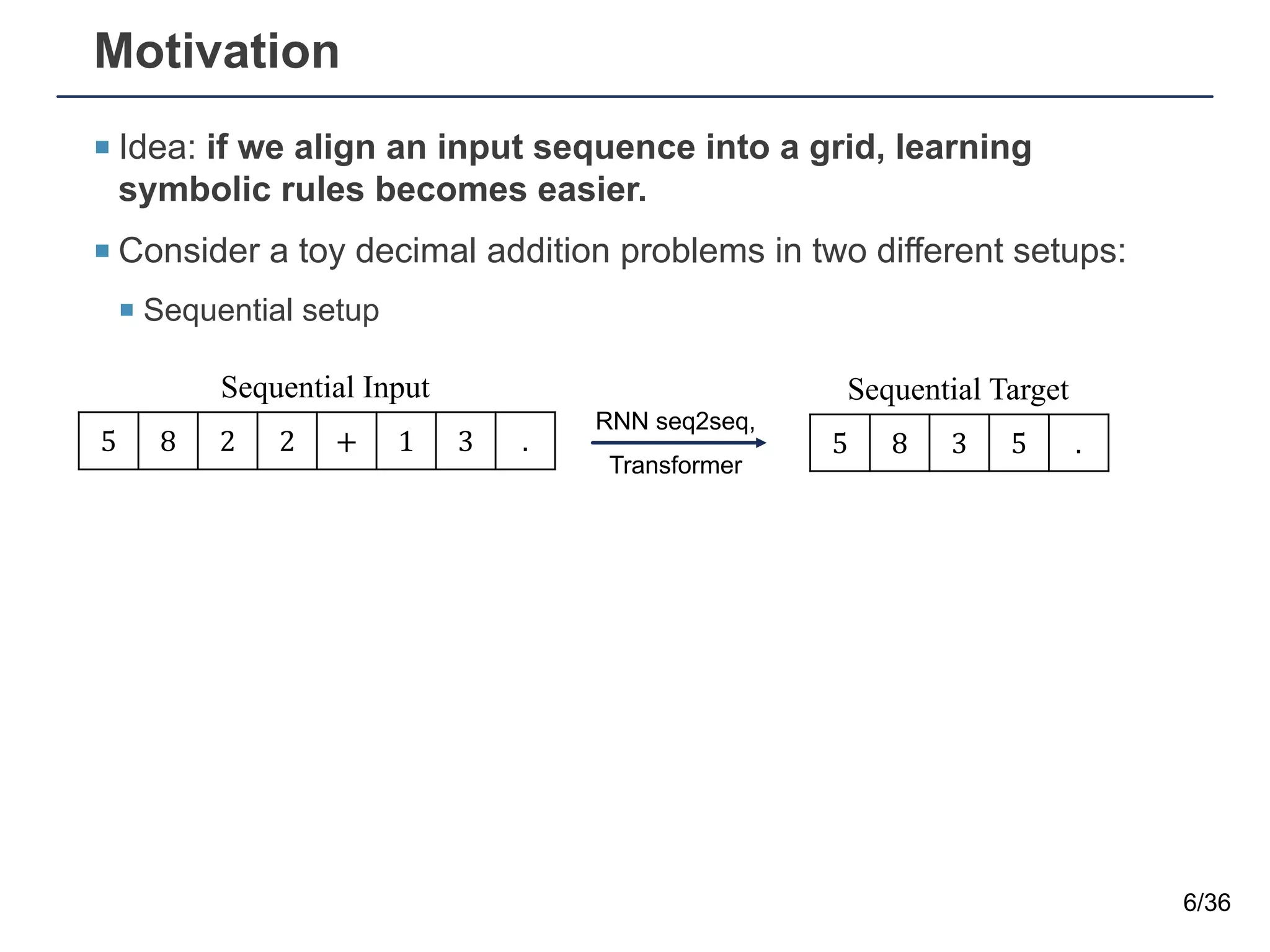

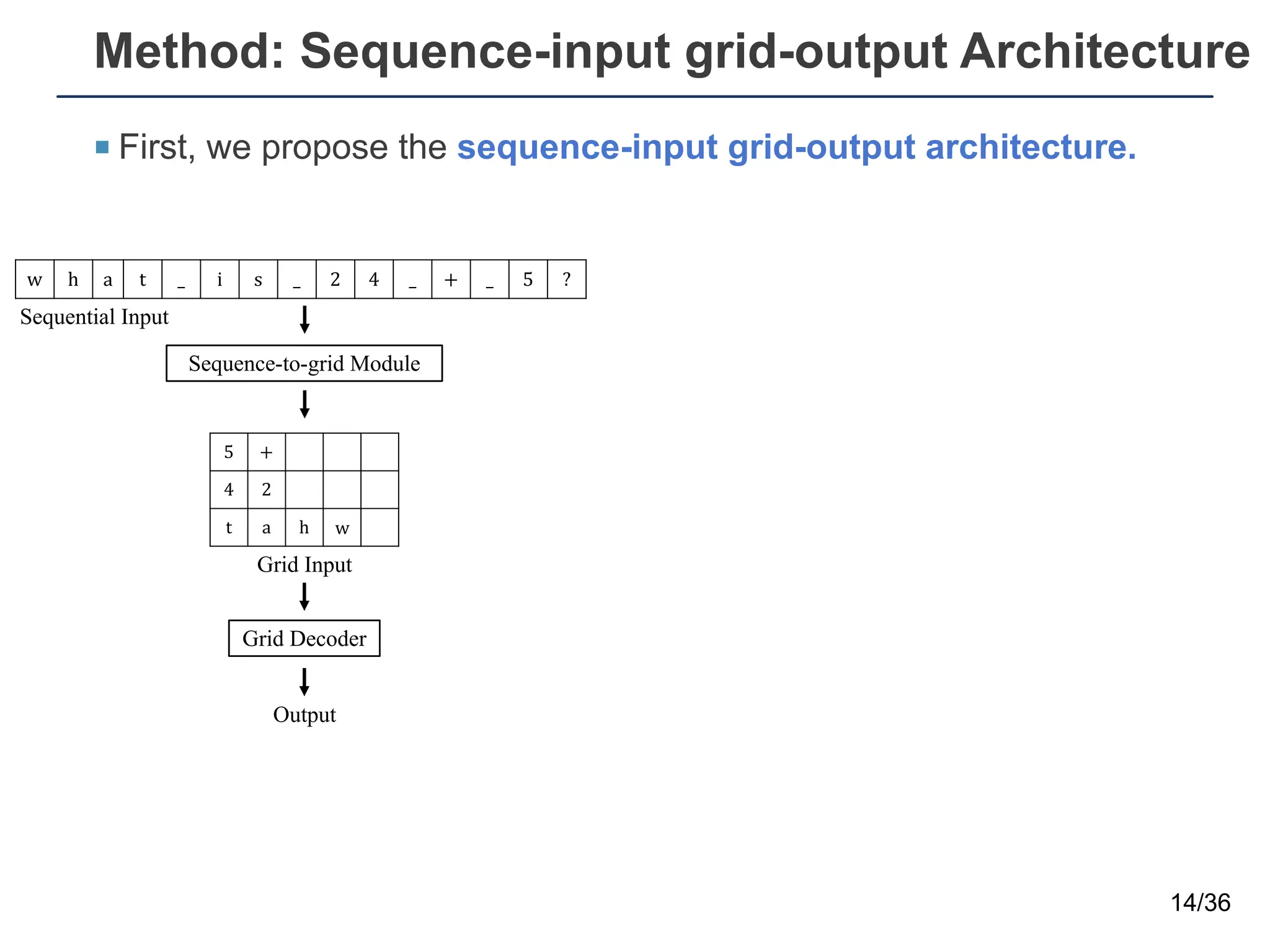

![Arithmetic and Algorithmic Problems (slide 26 of 36)](https://image.slidesharecdn.com/seq2gridaaai2021-210219005234/75/Seq2grid-aaai-2021-26-2048.jpg)

- Grid decoder: three stacks of 3-layered bottleneck blocks of ResNet [5].

Diagram: the sequential input "w h a t _ i s _ 2 4 _ + _ 5 ?" passes through the sequence-to-grid module to give a grid input of size 3×25, with rows shown as "5 +", "4 2", and "t a h w". The grid decoder (3-layered bottleneck blocks [5], ×3) maps the grid input to the output.

[5] He, et al. Deep Residual Learning for Image Recognition. CVPR 2016.
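To make the diagram concrete, here is a minimal sketch of how a sequence could be laid out on a 3×25 grid. It is not the module itself: the slides state that seq2grid is trained jointly with the decoder, whereas this sketch uses a hand-written list of hard actions. The action names (`"new"`, `"push"`, `"skip"`), the function `seq_to_grid`, and the choice of which tokens to skip are all assumptions made for illustration.

```python
PAD = "∅"  # pad symbol, borrowed from the slides' notation


def seq_to_grid(tokens, actions, height=3, width=25):
    """Lay tokens out on a height x width grid using one hard action per token."""
    if len(tokens) != len(actions):
        raise ValueError("need exactly one action per token")
    grid = [[PAD] * width for _ in range(height)]
    for token, action in zip(tokens, actions):
        if action == "skip":
            continue
        if action == "new":
            # Open a fresh top row; older rows move down and the bottom one is dropped.
            grid = [[PAD] * width] + grid[:-1]
        elif action != "push":
            raise ValueError(f"unknown action: {action!r}")
        # Insert at the left edge of the top row, shifting its contents right.
        grid[0] = [token] + grid[0][:-1]
    return grid


tokens = list("what_is_24_+_5?")
# Hand-picked actions (an assumption) that reproduce the grid drawn on the slide.
actions = ["new", "push", "push", "push",   # w h a t
           "skip", "skip", "skip", "skip",  # _ i s _
           "new", "push",                   # 2 4
           "skip", "new", "skip", "push",   # _ + _ 5
           "skip"]                          # ?
grid = seq_to_grid(tokens, actions)
for row in grid:
    print(" ".join(cell for cell in row if cell != PAD))
```

With these actions the three rows print as `5 +`, `4 2`, and `t a h w`, matching the grid input in the diagram. Pushing at the left edge is what reverses each segment relative to the input order.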

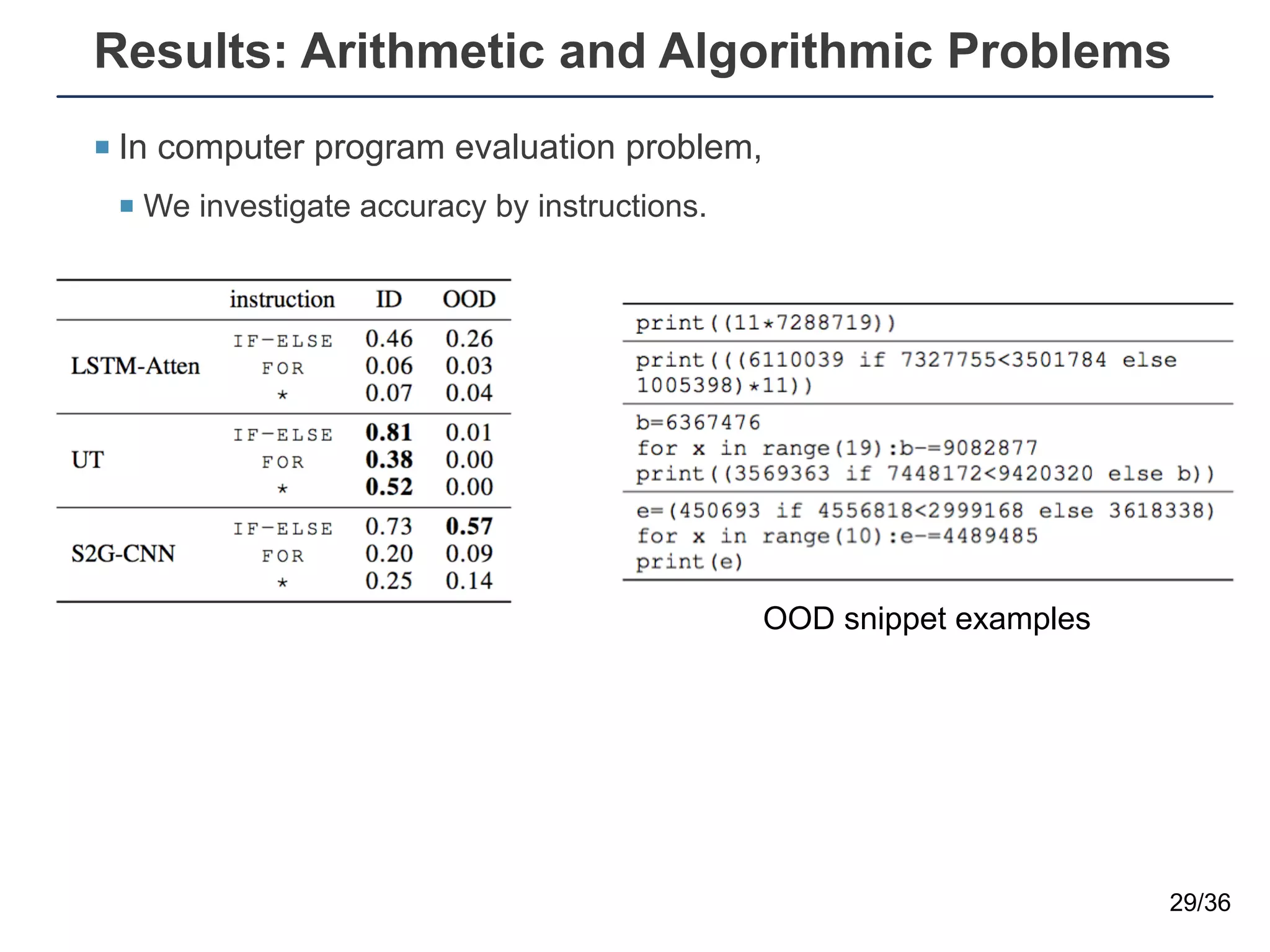

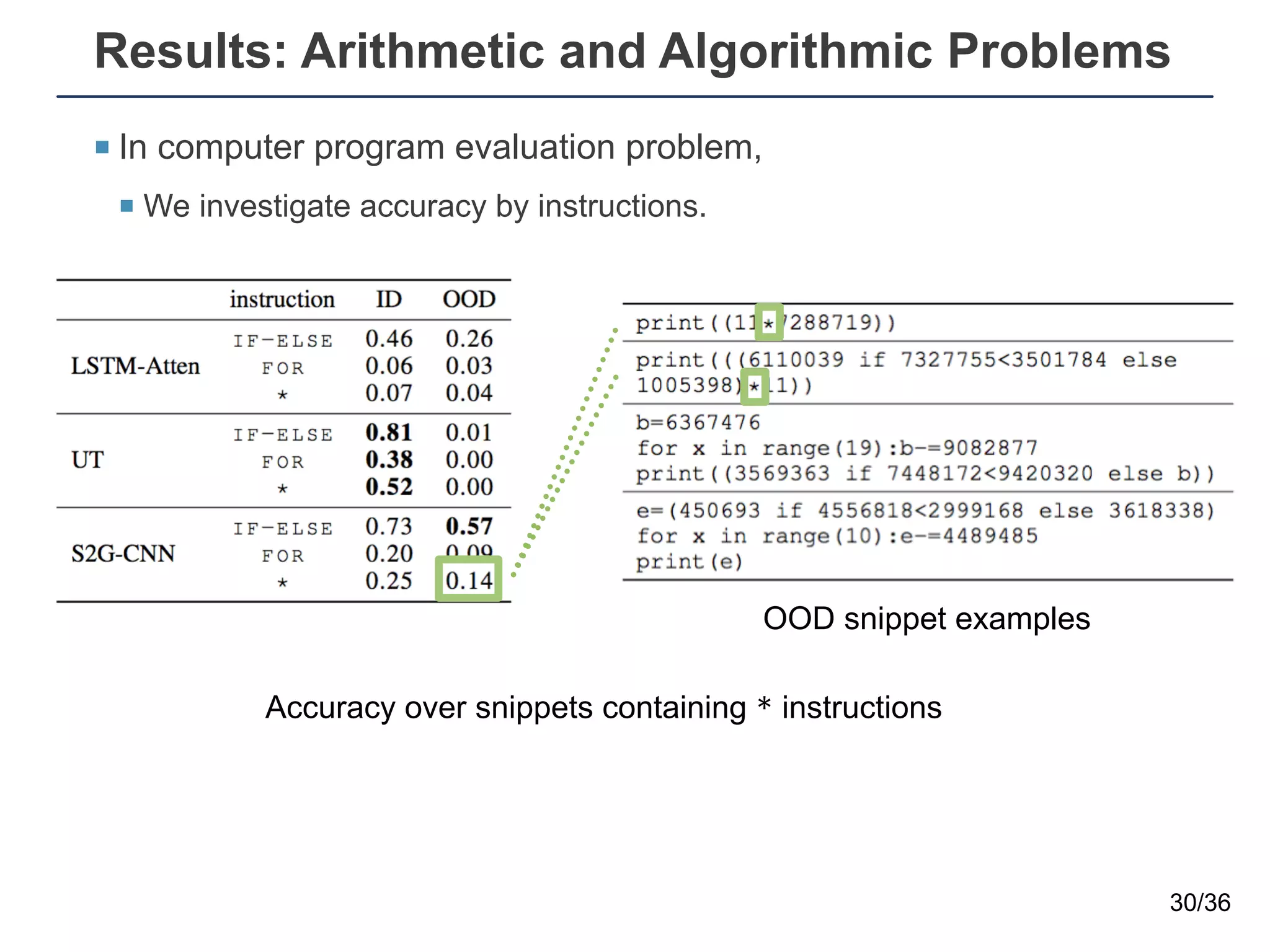

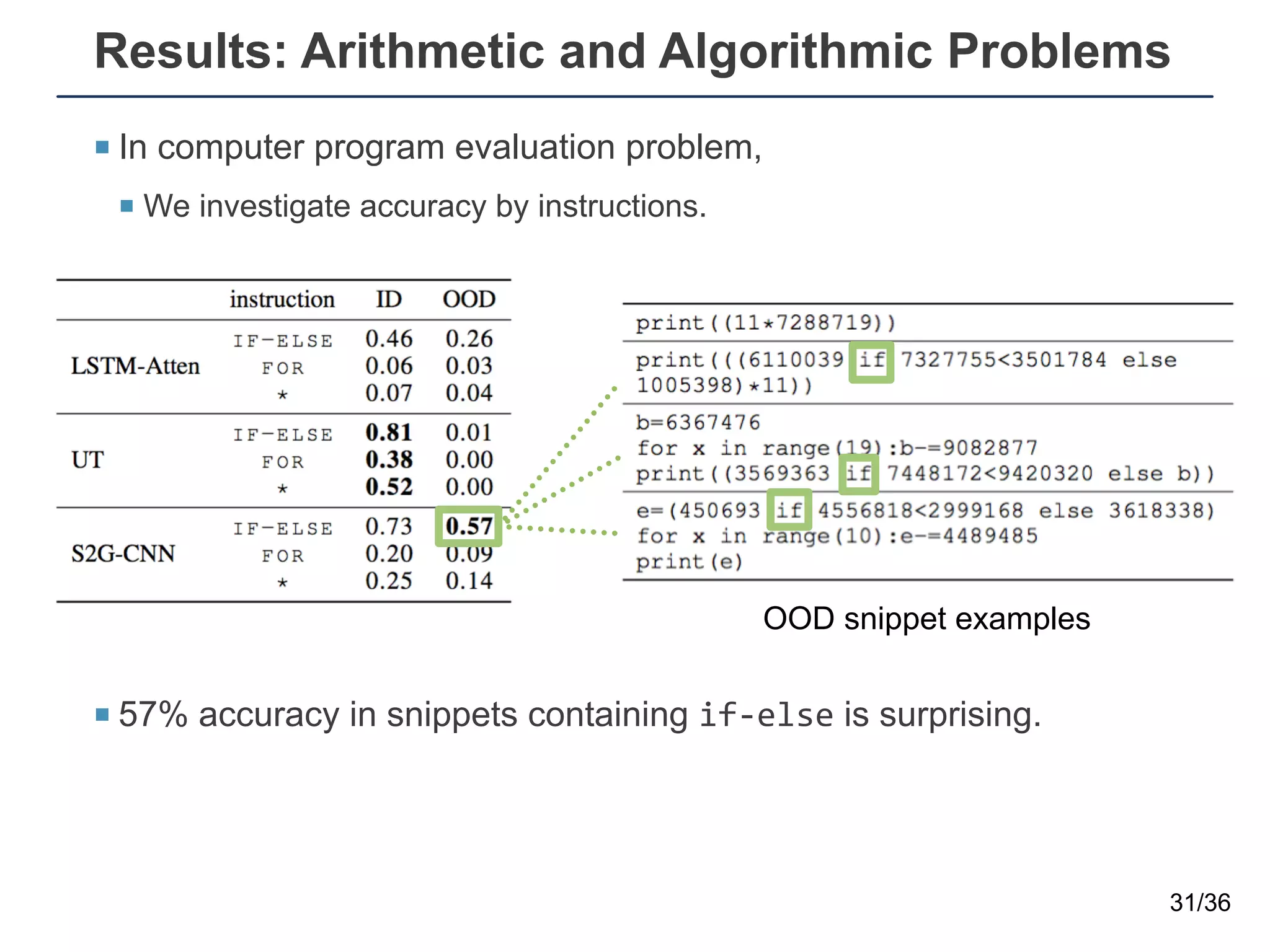

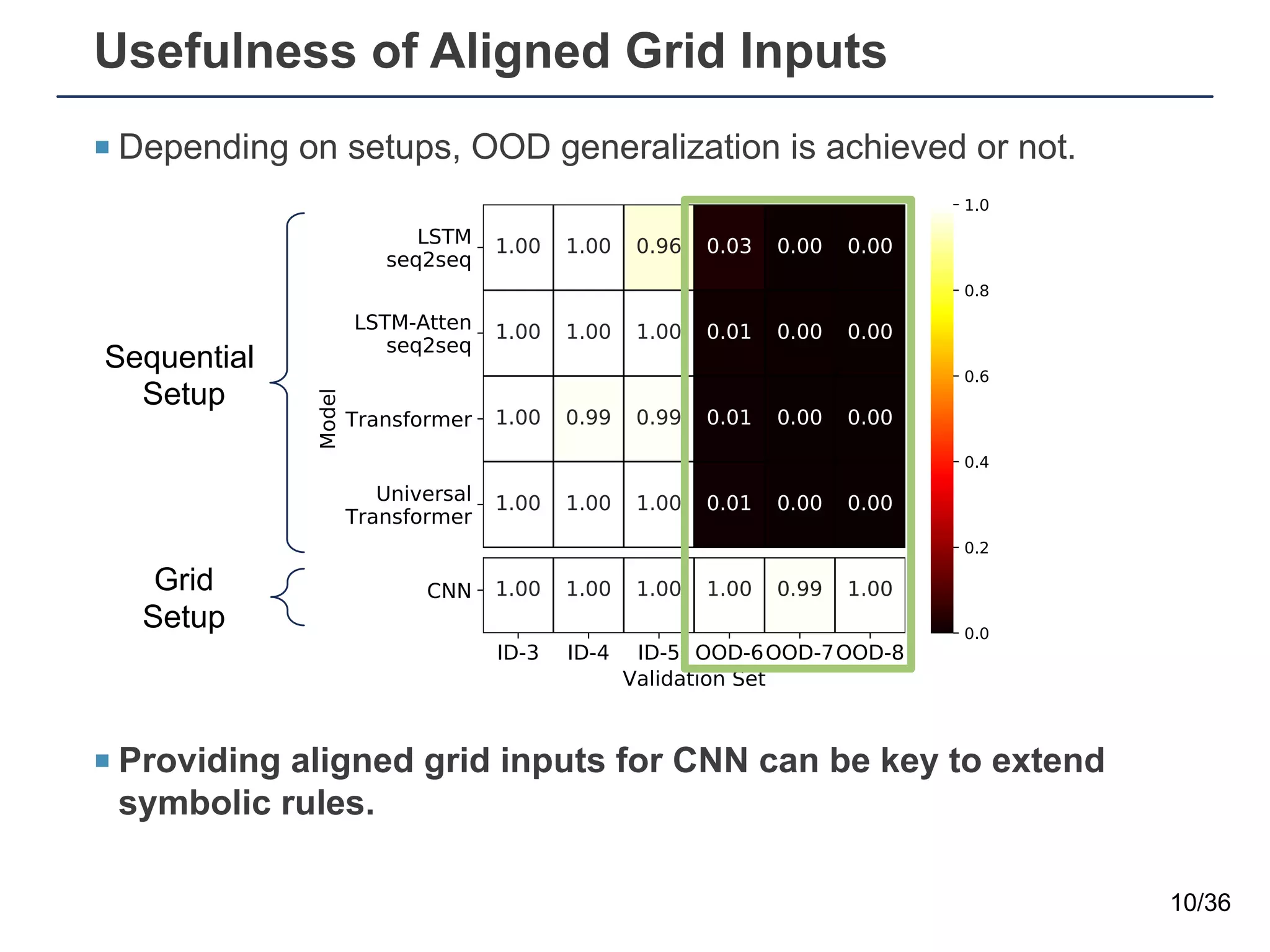

![Arithmetic and Algorithmic Problems (slide 27 of 36)](https://image.slidesharecdn.com/seq2gridaaai2021-210219005234/75/Seq2grid-aaai-2021-27-2048.jpg)

- The seq2grid module and the grid decoder are trained simultaneously by minimizing a cross-entropy loss.

Diagram: the preprocessed grid input (size 3×25) passes through the bottleneck blocks and a logit layer. The cross-entropy is computed against the flipped sequential target "9 2 ∅", where ∅ is the pad token and the original target is "2 9".
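The target handling in this diagram can be sketched in plain Python. The sketch flips the original target, pads it to a fixed length, and averages a per-position cross-entropy over the logits. The vocabulary (ten digits plus the pad token), the output length of 3, and the helper names are assumptions for illustration; the slides specify only the flipped target, the pad token, and the cross-entropy loss.

```python
import math

PAD = "∅"
VOCAB = list("0123456789") + [PAD]  # assumed output vocabulary


def flip_and_pad(target, length):
    """Reverse the target symbols and right-pad with PAD, e.g. "29" -> 9 2 ∅."""
    flipped = list(reversed(target))
    if len(flipped) > length:
        raise ValueError("target is longer than the output length")
    return flipped + [PAD] * (length - len(flipped))


def cross_entropy(logits, target_index):
    """Negative log-softmax of the target class, computed stably."""
    peak = max(logits)
    log_norm = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return log_norm - logits[target_index]


def sequence_loss(logit_rows, target_symbols):
    """Mean cross-entropy over output positions."""
    losses = [cross_entropy(row, VOCAB.index(symbol))
              for row, symbol in zip(logit_rows, target_symbols)]
    return sum(losses) / len(losses)


target = flip_and_pad("29", 3)
# Uninformative logits: every symbol is equally likely at every position.
uniform_logits = [[0.0] * len(VOCAB) for _ in target]
loss = sequence_loss(uniform_logits, target)
```

With uniform logits the loss equals the log of the vocabulary size, which is a convenient sanity check before training. In a real setup the gradient of this loss would flow through both the grid decoder and the seq2grid module, which is what training them simultaneously amounts to.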