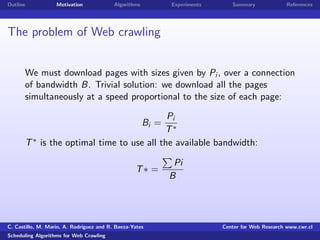

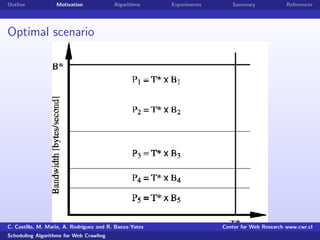

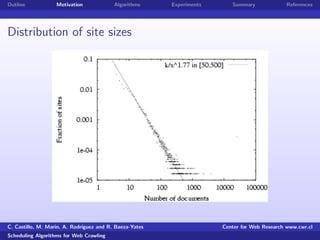

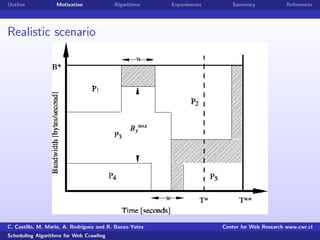

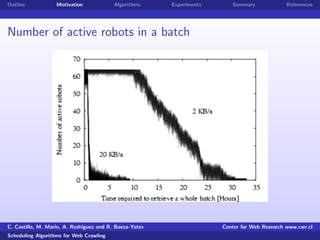

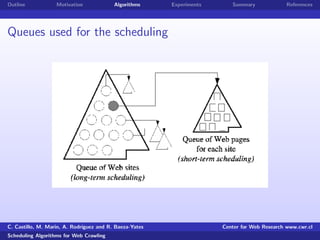

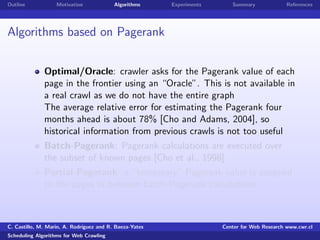

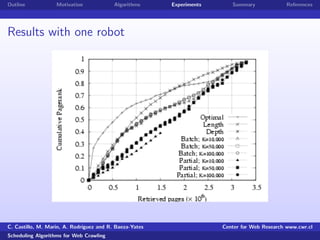

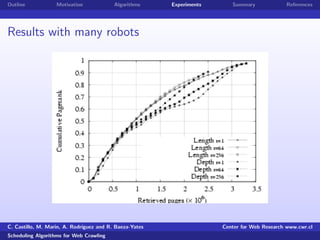

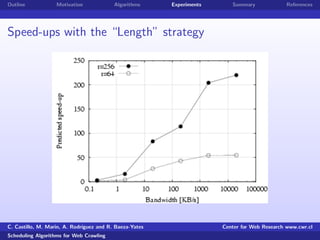

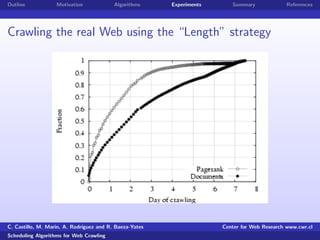

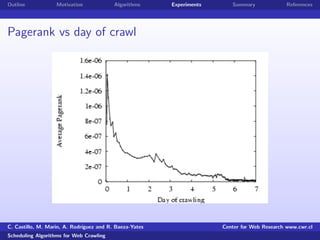

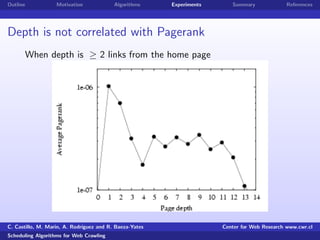

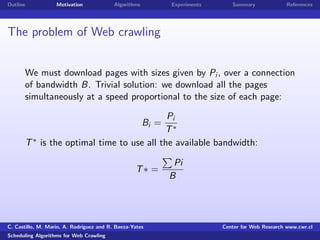

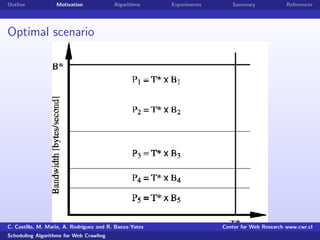

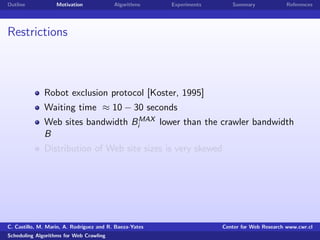

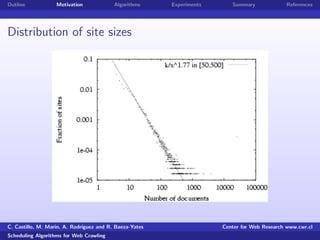

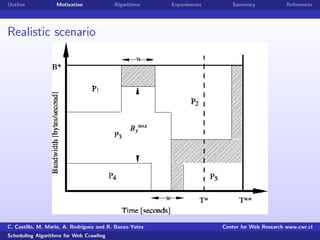

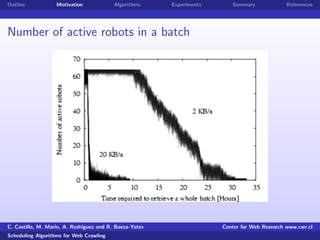

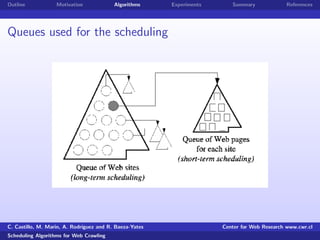

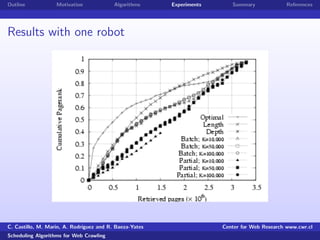

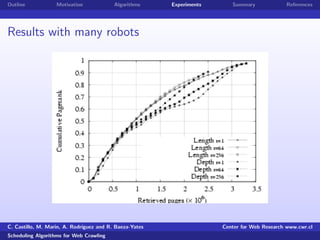

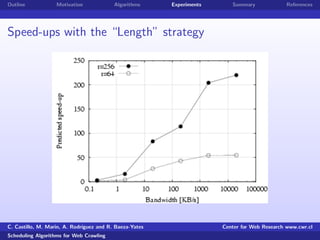

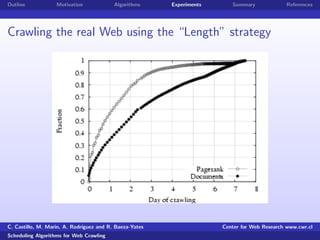

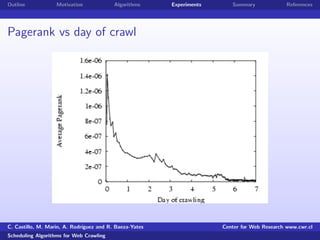

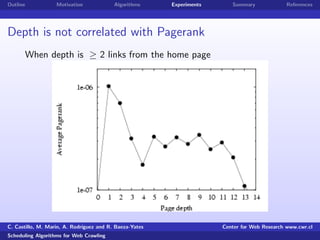

The document outlines and compares algorithms for scheduling web crawlers so that pages are downloaded efficiently. It motivates the need to prioritize which pages to download first when bandwidth is limited. It describes algorithms that prioritize pages by PageRank value, by depth, and by the size of the hosting website, and compares them against optimal strategies. The experiments simulate the algorithms on a sample of 3.5 million pages from over 50,000 websites and analyze performance as a function of parameters such as waiting time and number of robots.
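To make the idea of a prioritized download order concrete, here is a minimal sketch of a crawl frontier with interchangeable priority functions. It is an illustration under stated assumptions, not the method from the slides: the `Page` fields, the three priority functions, and `schedule` are hypothetical names, the PageRank score is assumed to be precomputed, and priorities are computed once up front rather than updated as the crawl proceeds.

```python
import heapq
from dataclasses import dataclass
from itertools import count
from typing import Callable, Dict, List


@dataclass
class Page:
    url: str
    site: str
    depth: int             # link distance from the site's start page (assumed known)
    pagerank: float = 0.0  # hypothetical precomputed importance score


# Each priority function returns a number; smaller values are downloaded first.
def by_depth(page: Page, pending_per_site: Dict[str, int]) -> float:
    return page.depth  # shallower pages first


def by_pagerank(page: Page, pending_per_site: Dict[str, int]) -> float:
    return -page.pagerank  # higher score first


def by_site_size(page: Page, pending_per_site: Dict[str, int]) -> float:
    return -pending_per_site[page.site]  # sites with more pending pages first


def schedule(pages: List[Page],
             priority: Callable[[Page, Dict[str, int]], float]) -> List[str]:
    """Return URLs in the order a single robot would download them."""
    # Count pending pages per site (static snapshot, an assumption of this sketch).
    pending: Dict[str, int] = {}
    for p in pages:
        pending[p.site] = pending.get(p.site, 0) + 1

    tie = count()  # unique tie-breaker keeps insertion order and avoids comparing Page objects
    heap = [(priority(p, pending), next(tie), p) for p in pages]
    heapq.heapify(heap)

    order = []
    while heap:
        _, _, page = heapq.heappop(heap)
        order.append(page.url)
    return order
```

Swapping the `priority` argument changes the download order without touching the queue logic, which is one simple way to compare strategies side by side in a simulation. A realistic crawler would also need per-site waiting times and multiple robots, which this sketch leaves out.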