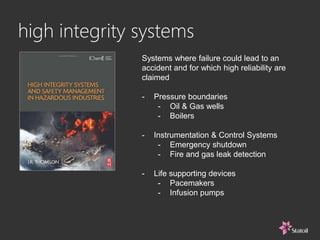

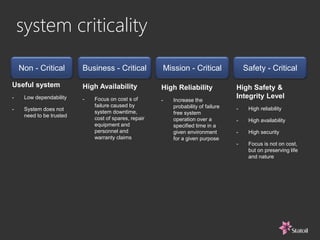

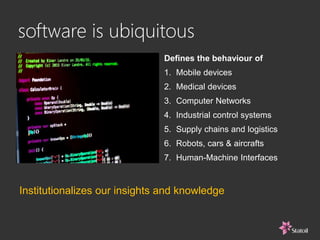

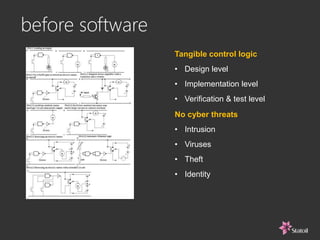

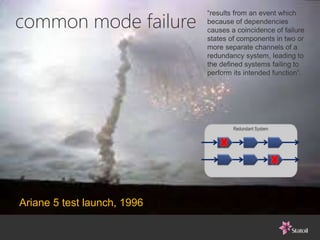

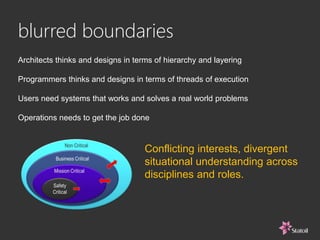

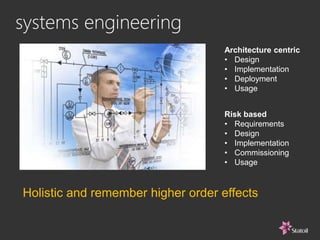

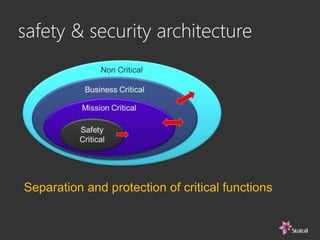

This document discusses safety and security challenges in distributed systems. Many industries rely on software-based systems to support critical functions. Software has two properties that set it apart from physical components: it cannot be inspected the way hardware can, and its execution sequence cannot be fully known in advance. These properties can lead to common mode failures, where a single fault defeats several redundant channels at once, and leave systems exposed to malware, hacking, and human error. To help build high-integrity systems for critical functions, the document recommends rigorous systems engineering, a safety and security architecture informed by standards, and attention to human factors from the outset.