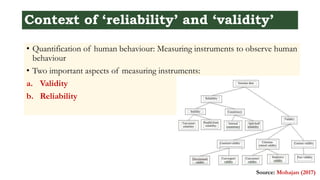

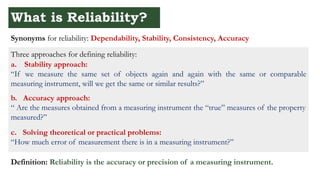

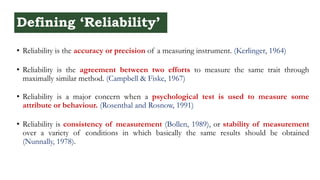

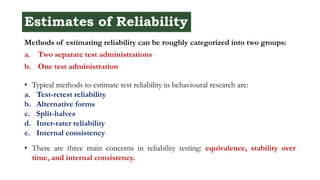

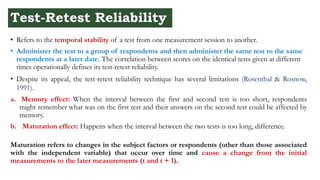

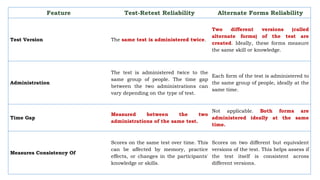

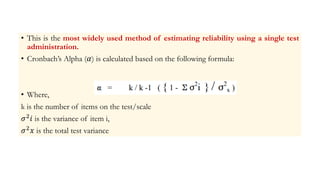

The document discusses reliability and validity in behavioral research, emphasizing that sound measuring instruments are needed to observe human behavior accurately. It outlines several methods for estimating reliability (test-retest reliability, alternative forms, and internal consistency) along with the advantages and disadvantages of each. It also highlights the role of reliability testing in ensuring that psychological assessments produce consistent and accurate results.
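As a rough illustration of how two of these estimates are commonly computed, the sketch below correlates scores from two administrations of the same test (test-retest; the same calculation applies to two alternative forms) and computes Cronbach's alpha as one internal-consistency coefficient. The summary does not say which coefficients the document itself uses, so the choice of Pearson's r and Cronbach's alpha, the function names, and all scores are assumptions made for illustration only.

```python
from statistics import mean, pvariance


def pearson_r(x, y):
    """Pearson correlation between two equal-length score lists."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def cronbach_alpha(items):
    """Cronbach's alpha for a list of items.

    `items` holds one list per item; each inner list contains that
    item's scores for the same respondents, in the same order.
    """
    k = len(items)
    # Total score per respondent, summed across items.
    totals = [sum(scores) for scores in zip(*items)]
    item_var_sum = sum(pvariance(col) for col in items)
    return (k / (k - 1)) * (1 - item_var_sum / pvariance(totals))


# Hypothetical data: five respondents tested twice (test-retest).
time_1 = [12, 15, 9, 20, 17]
time_2 = [13, 14, 10, 19, 18]
print("test-retest r:", round(pearson_r(time_1, time_2), 3))

# Hypothetical data: three items answered by the same five respondents.
item_scores = [
    [3, 4, 2, 5, 4],
    [2, 4, 3, 5, 4],
    [3, 5, 2, 4, 4],
]
print("Cronbach's alpha:", round(cronbach_alpha(item_scores), 3))
```

Values near 1 indicate that the two administrations, or the items within one test, rank respondents consistently. Population variance is used throughout; the alpha ratio is the same with sample variance as long as one convention is applied to both the items and the totals.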