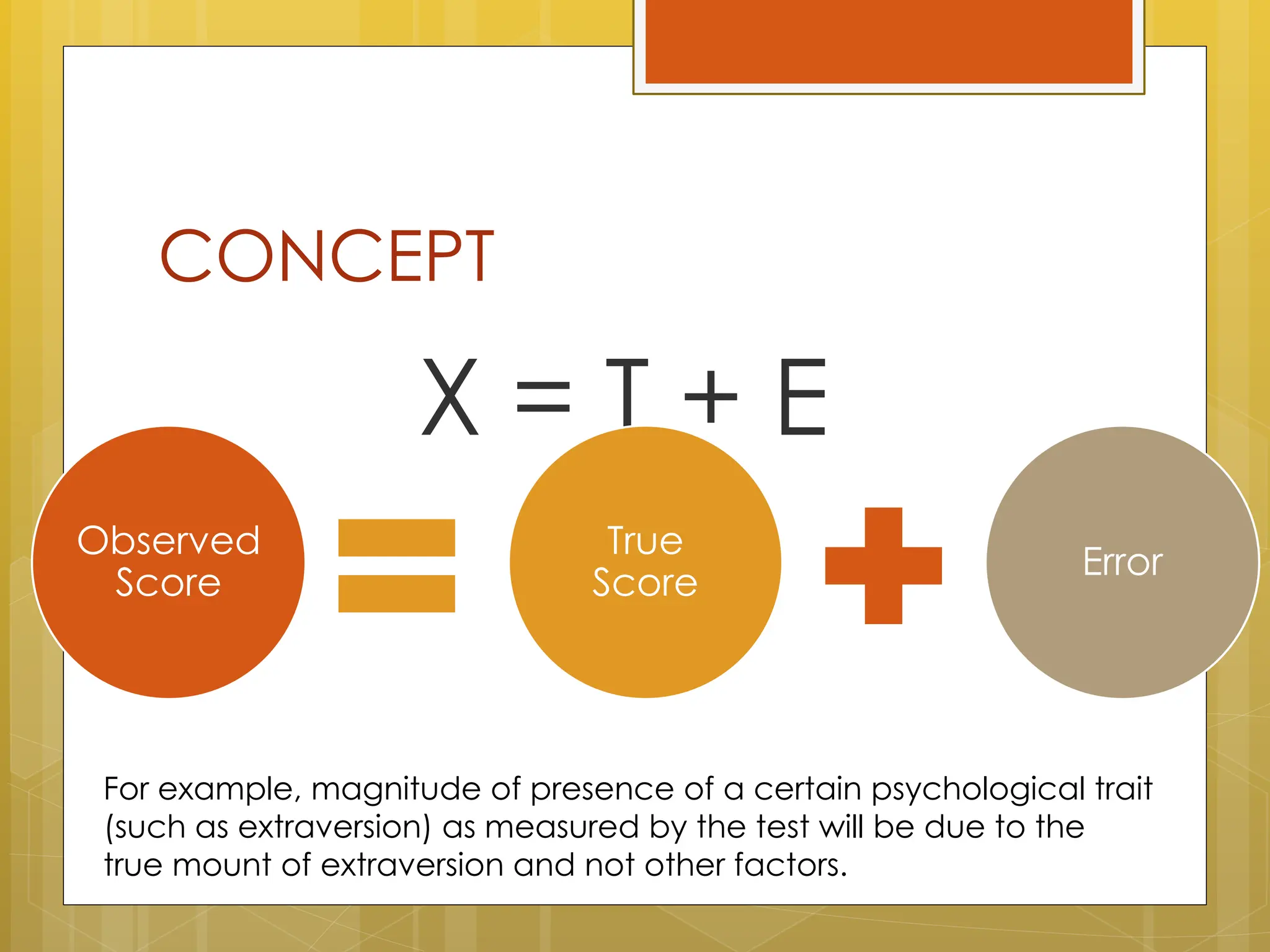

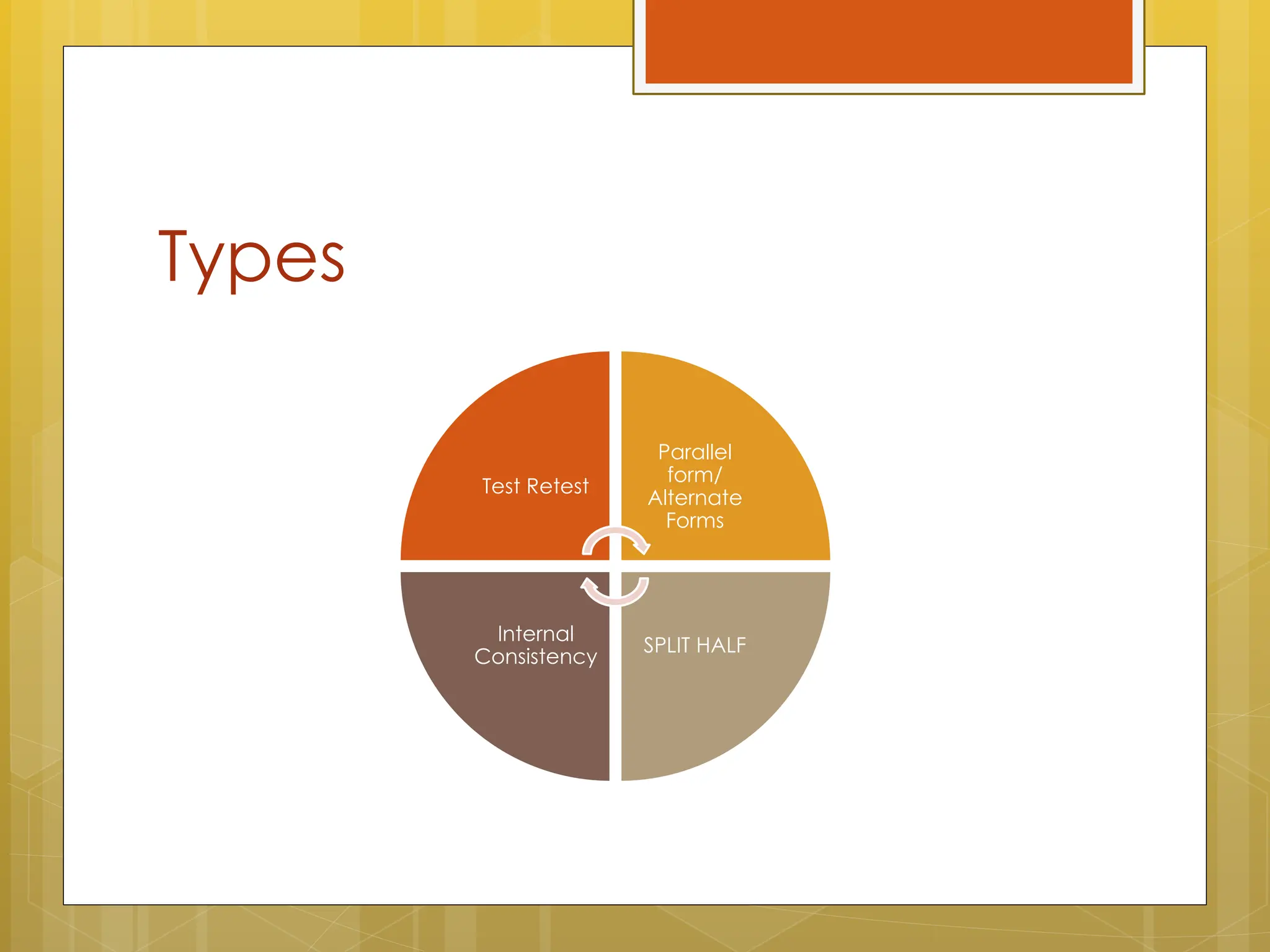

The document outlines the concepts of reliability and validity in testing: reliability is the consistency of test scores, while validity concerns whether a test actually measures its intended construct. It stresses that a reliable test is not necessarily a valid one, a point illustrated with examples of unreliable questionnaires. It then discusses several methods for estimating reliability (test-retest, parallel forms, and internal consistency), along with the coefficients that indicate how strong the reliability is.
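
As a rough illustration of how such coefficients might be computed, the sketch below uses two common estimators: a Pearson correlation between two sets of scores (usable for test-retest or parallel-forms data) and Cronbach's alpha for internal consistency. The summary does not say which coefficients the document itself uses, so these choices are assumptions, and the function names and scores are hypothetical.

```python
from statistics import mean, variance


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of scores."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def cronbach_alpha(items):
    """Cronbach's alpha; `items` holds one list of scores per test item,
    with respondents in the same order in every list."""
    k = len(items)
    # Total score per respondent, summed across all items.
    totals = [sum(scores) for scores in zip(*items)]
    item_variance_sum = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Hypothetical data: the same five people tested on two occasions.
first_sitting = [10, 12, 15, 18, 20]
second_sitting = [11, 13, 14, 19, 21]
retest_r = pearson_r(first_sitting, second_sitting)

# Hypothetical data: three questionnaire items answered by five people.
item_scores = [
    [1, 2, 3, 4, 5],
    [2, 2, 3, 5, 5],
    [1, 3, 3, 4, 4],
]
alpha = cronbach_alpha(item_scores)

print(f"test-retest r = {retest_r:.3f}")
print(f"Cronbach's alpha = {alpha:.3f}")
```

For parallel forms, the same `pearson_r` call would take the scores from form A and form B instead of two sittings. In all three cases, values closer to 1 indicate more consistent scores; none of them says anything about validity.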