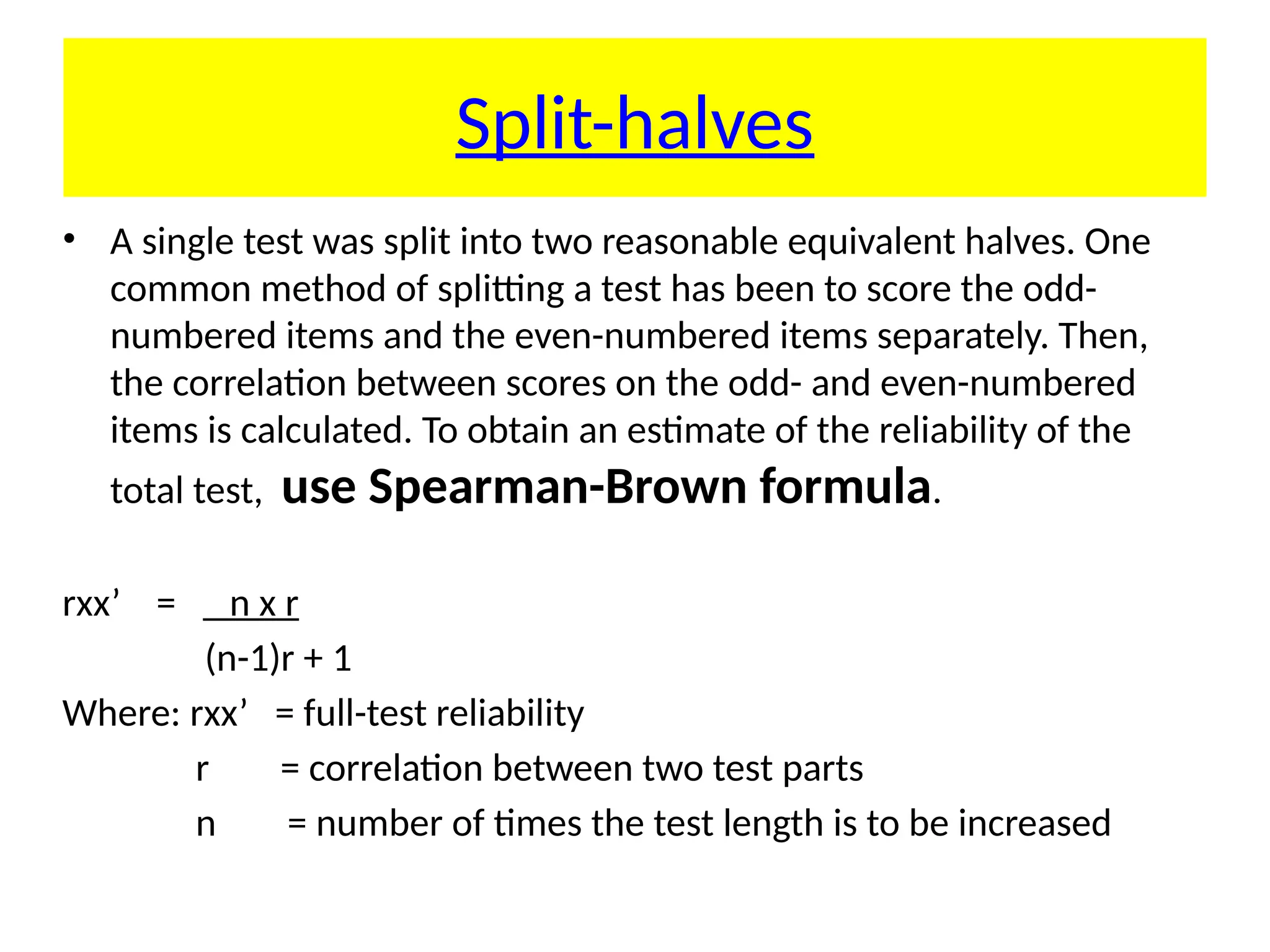

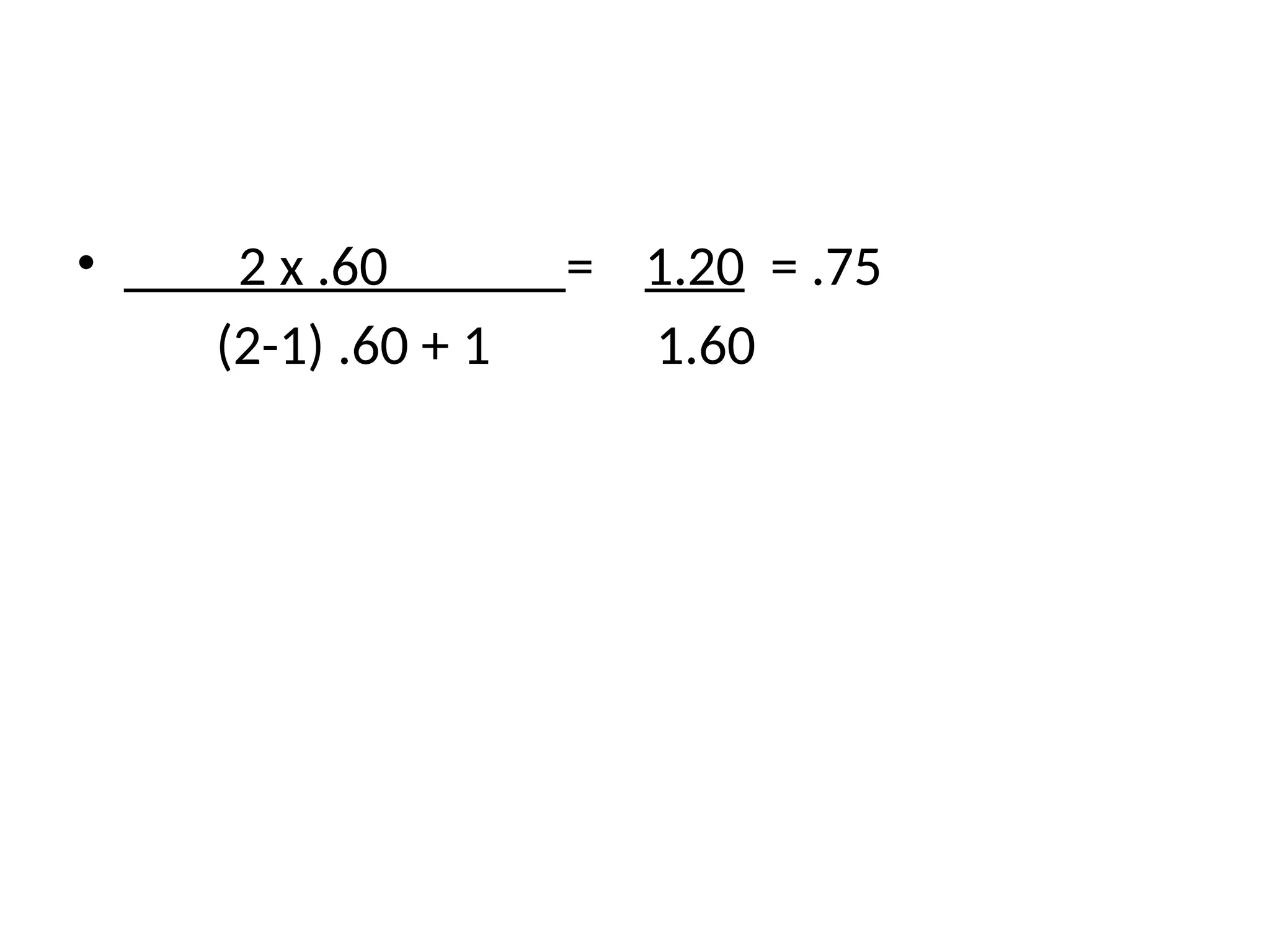

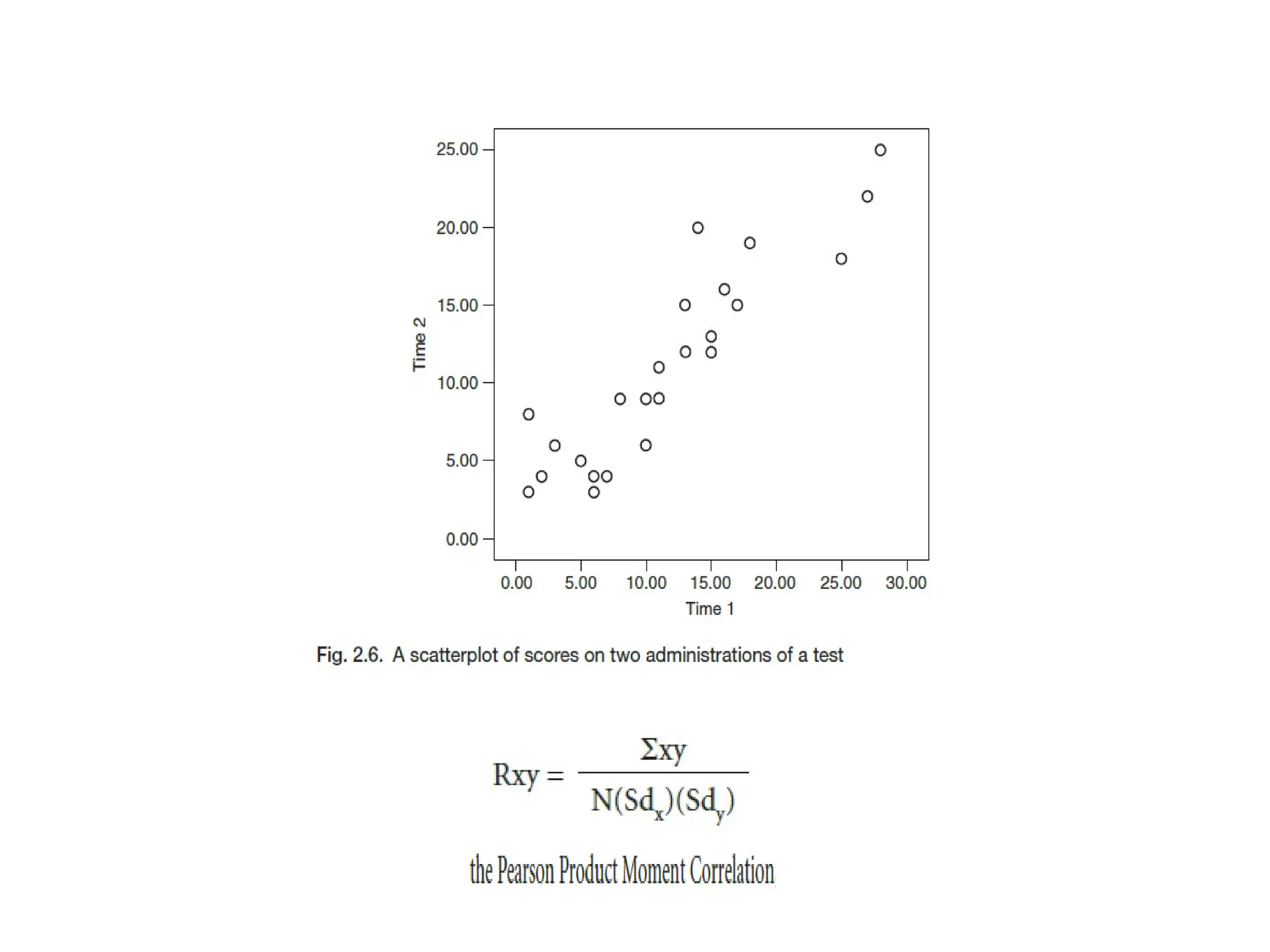

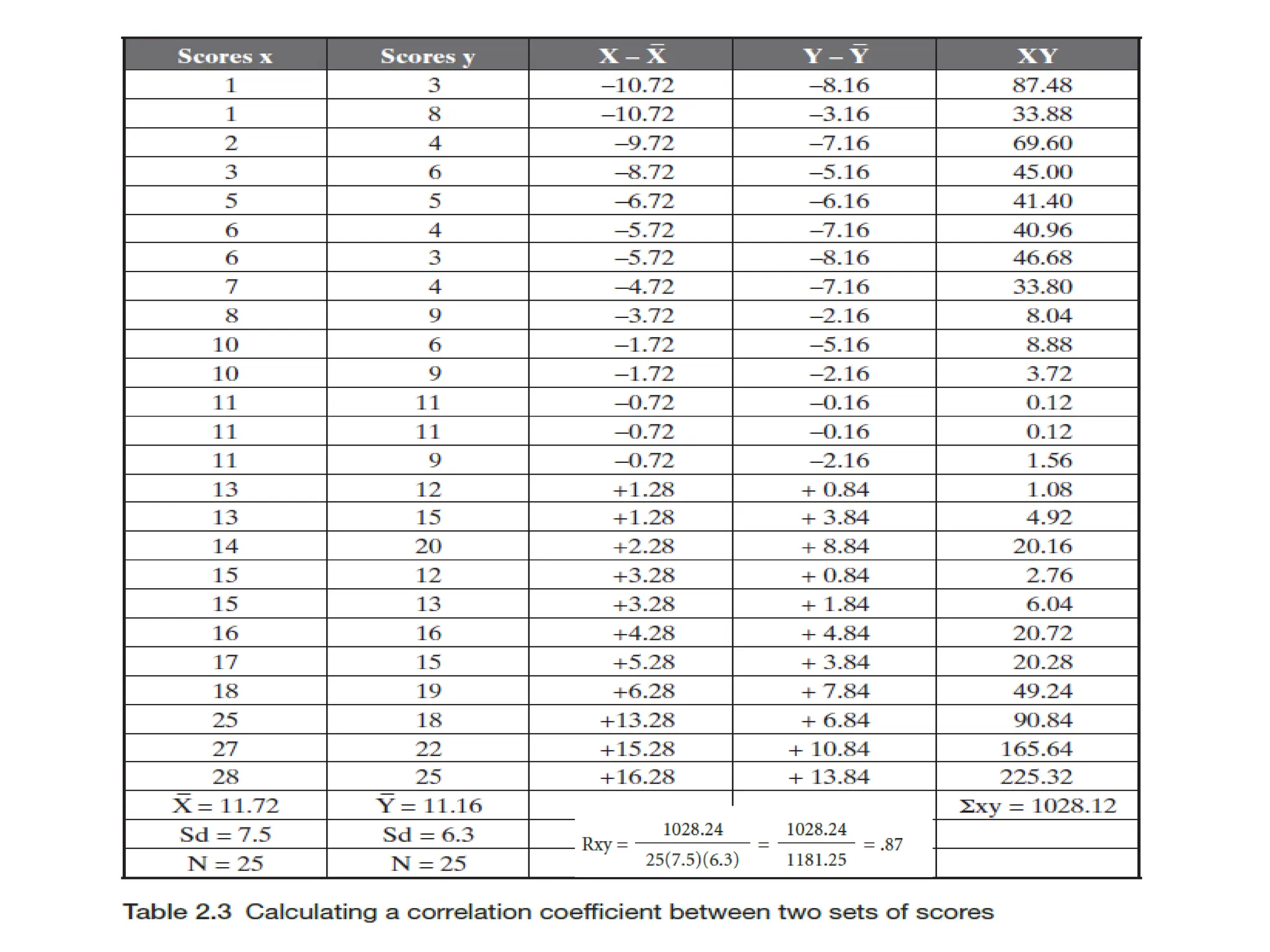

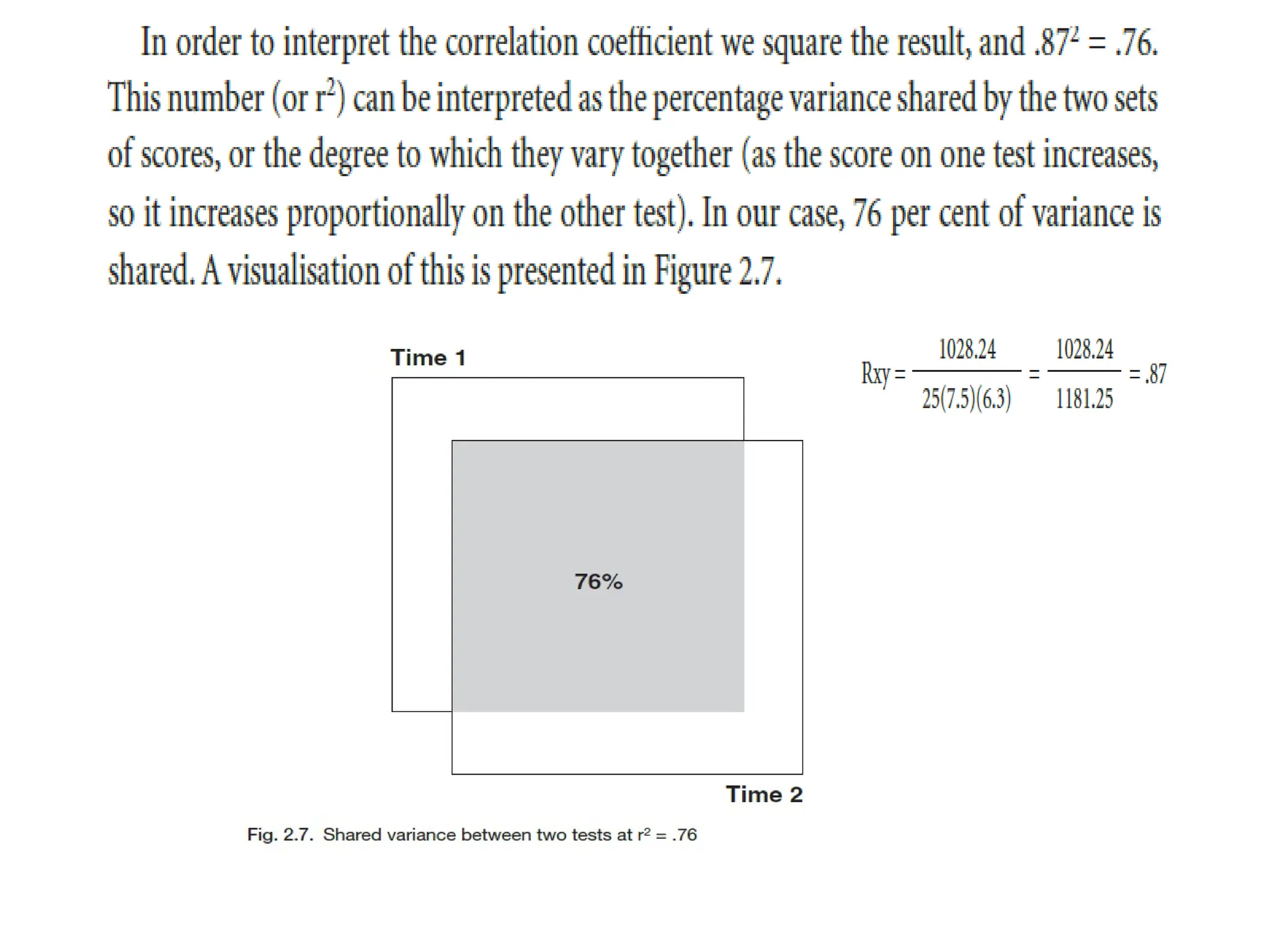

The document discusses reliability in testing and identifies four sources of unreliability: student-related factors, rater inconsistency, test administration conditions, and characteristics of the test itself. It explains how to estimate reliability through internal-consistency methods, such as the split-half procedure and the Kuder-Richardson formulas, and through external methods, namely test-retest and equivalent forms. The overall aim is to obtain dependable test scores so that evaluations of test-takers' abilities are accurate.