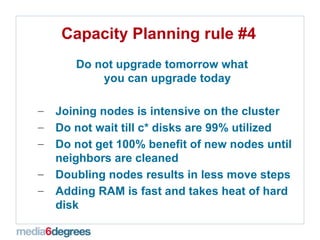

The document discusses capacity planning for running Cassandra in a production environment, based on the author's experience. The key recommendations are:

- start with a small number of nodes and a low replication factor;
- use a configuration management tool such as Puppet from the beginning;
- when upgrading, move all nodes to the same version quickly rather than leaving the cluster on mixed versions;
- provision sufficient disk space, enough memory, and fast disks;
- avoid performing upgrades when the cluster is about to reach its capacity;
- understand the application's read/write patterns and latency requirements.

Real-time and batch workloads call for different strategies.
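To make the disk-space and replication-factor points more concrete, here is a rough sizing sketch. It is not taken from the original material. The function name `nodes_needed`, the assumption that data is spread evenly across nodes, and the default 50% maximum disk fill ratio (headroom for compaction, repair, and streaming) are all illustrative assumptions.

```python
import math


def nodes_needed(raw_data_gb: float, replication_factor: int,
                 disk_per_node_gb: float, max_fill_ratio: float = 0.5) -> int:
    """Estimate the minimum number of nodes for a given data volume.

    Hypothetical helper. Assumes data is distributed evenly and that each
    node should be at most `max_fill_ratio` full; the 0.5 default is an
    assumed headroom figure, not a Cassandra rule.
    """
    if replication_factor < 1:
        raise ValueError("replication_factor must be at least 1")
    if not 0 < max_fill_ratio <= 1:
        raise ValueError("max_fill_ratio must be in (0, 1]")

    # Every byte of raw data is stored replication_factor times.
    total_on_disk_gb = raw_data_gb * replication_factor
    # Only part of each disk is treated as usable, to leave headroom.
    usable_per_node_gb = disk_per_node_gb * max_fill_ratio
    by_space = math.ceil(total_on_disk_gb / usable_per_node_gb)
    # Each replica must live on a distinct node, so never go below RF.
    return max(replication_factor, by_space)


if __name__ == "__main__":
    # 600 GB raw, RF 3, 1 TB disks kept at most half full
    print(nodes_needed(600, 3, 1000))
```

With 600 GB of raw data and a replication factor of 3, about 1,800 GB must be stored; if each 1 TB disk is kept at most half full, each node holds 500 GB, so the sketch returns 4. Compression, commit logs, and other per-node overhead are ignored here.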