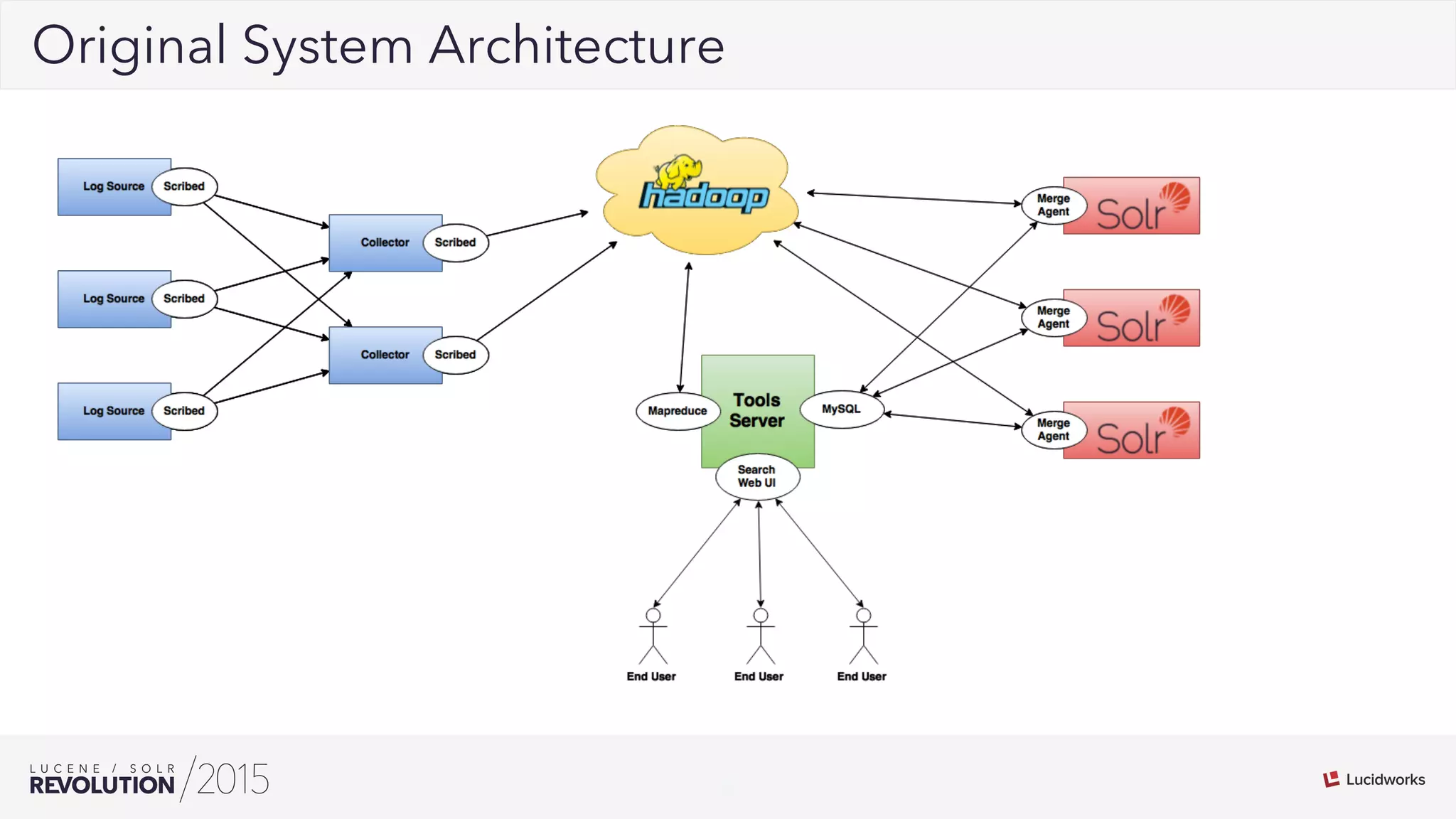

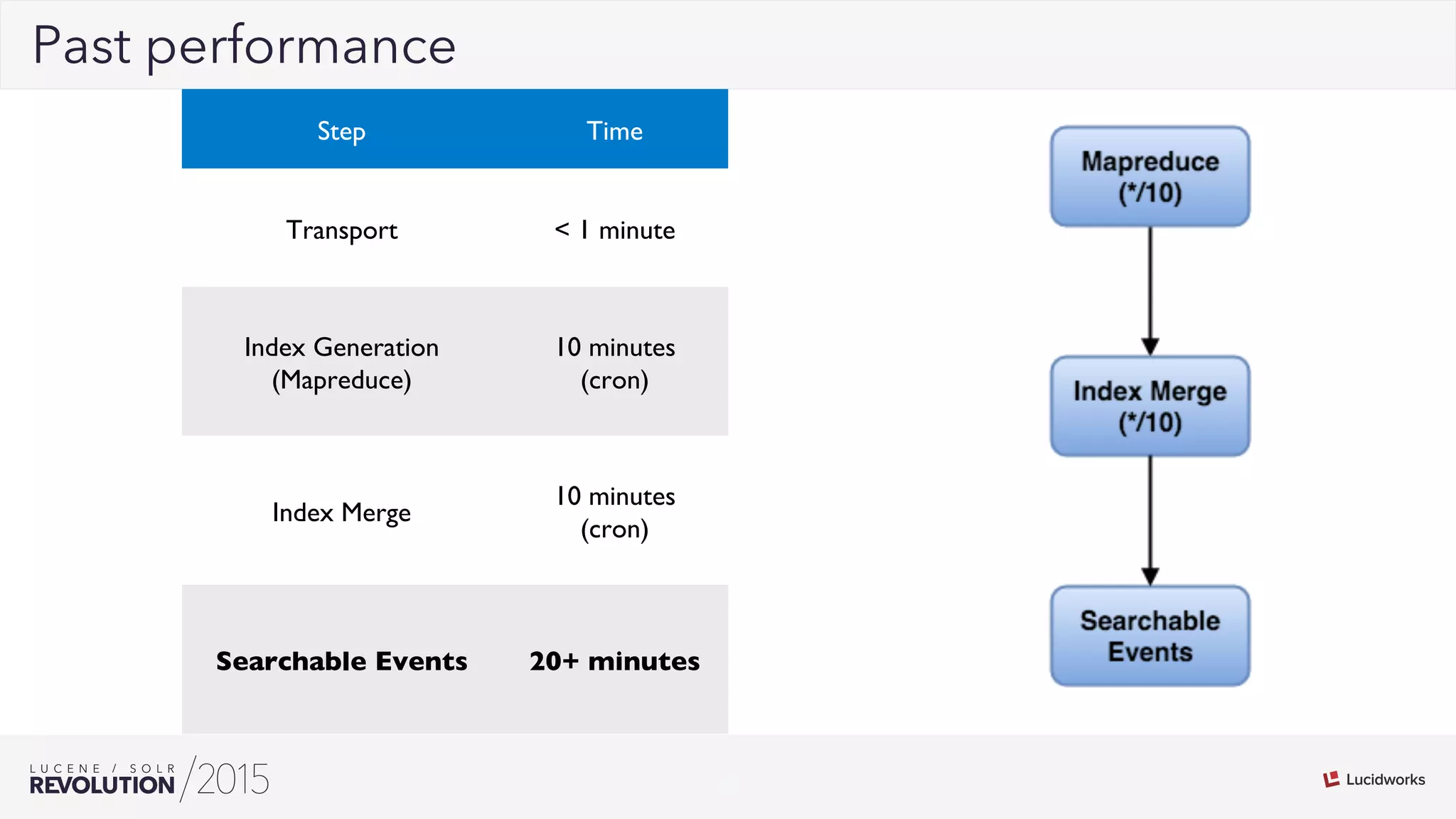

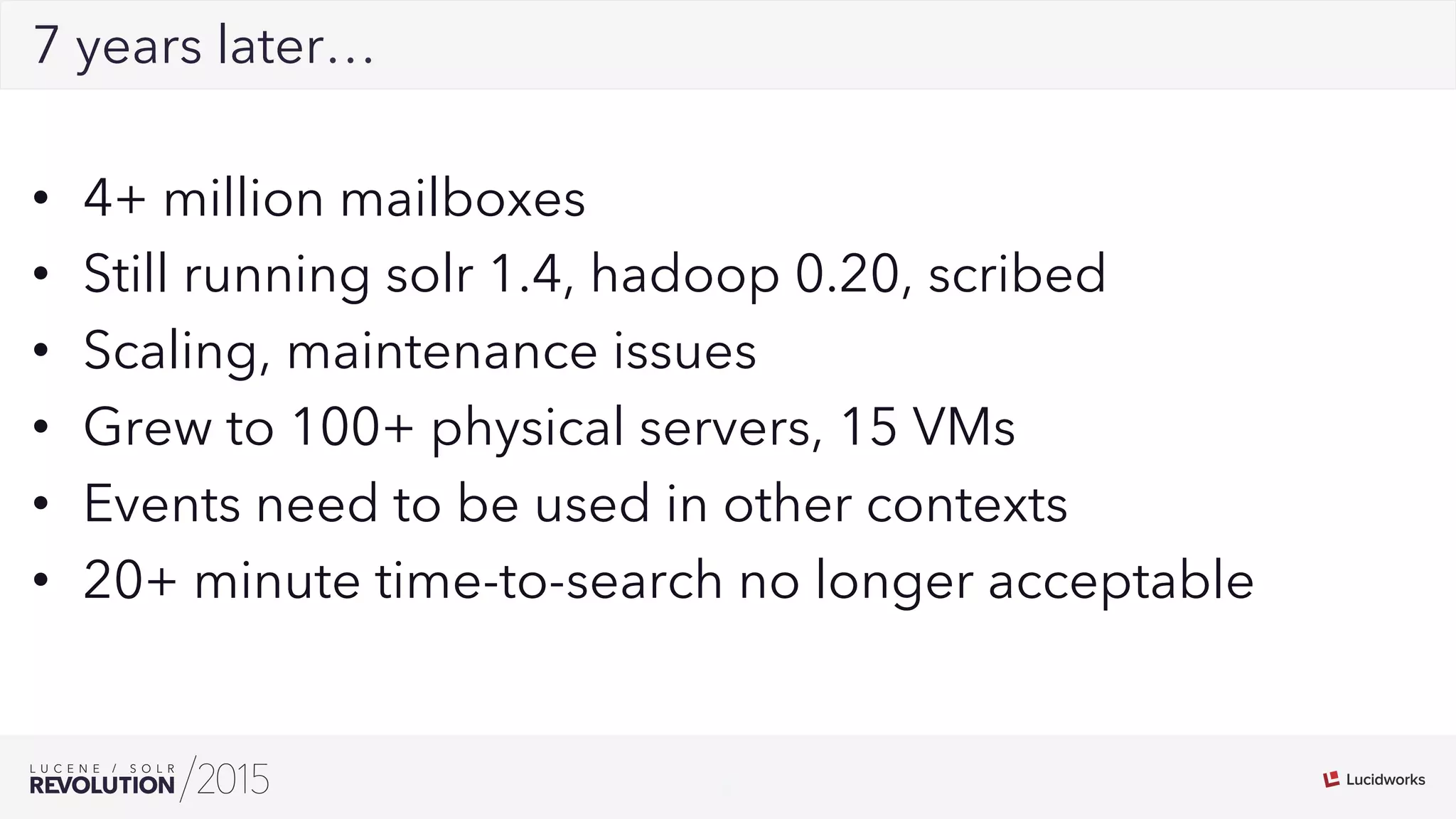

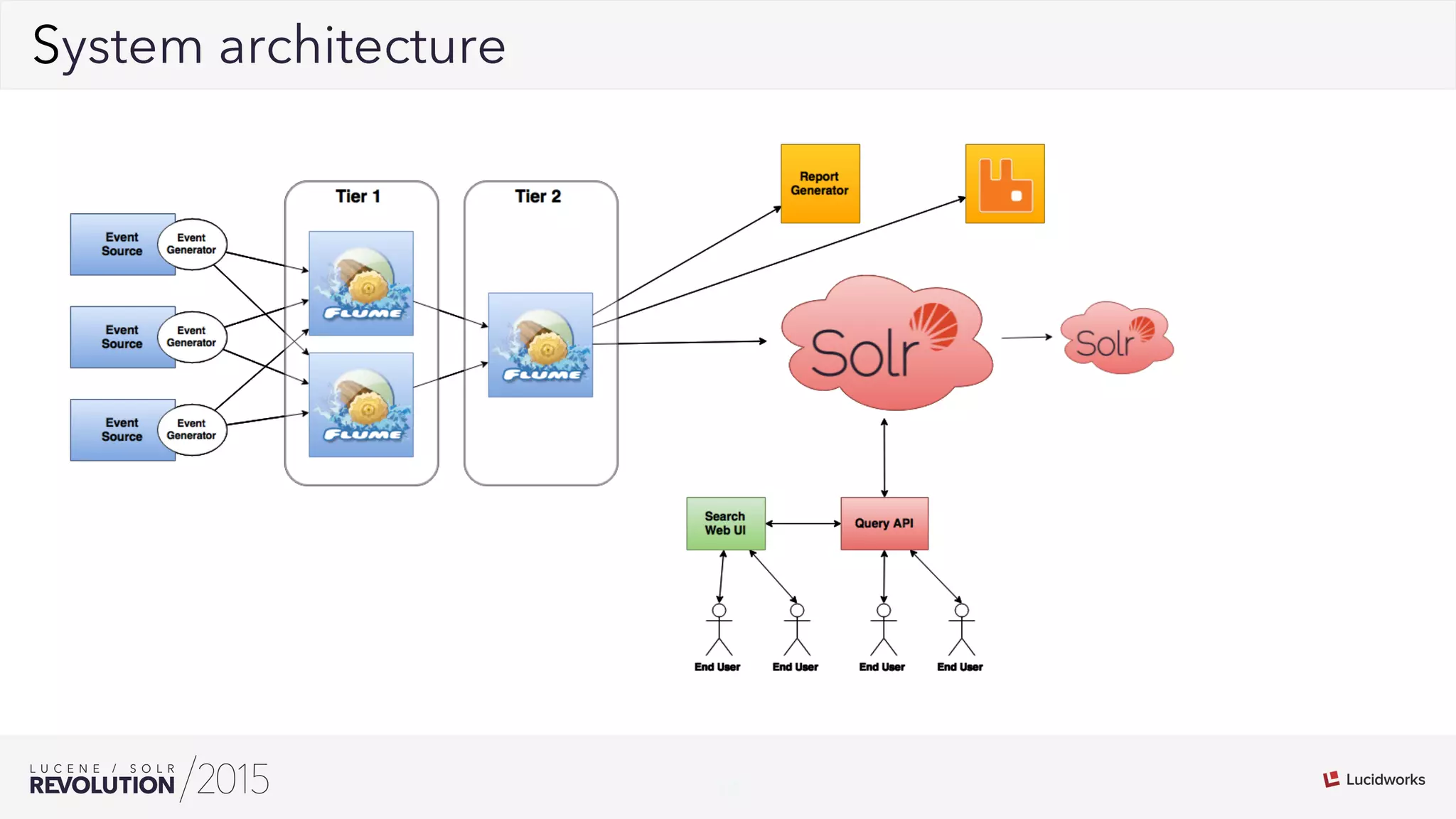

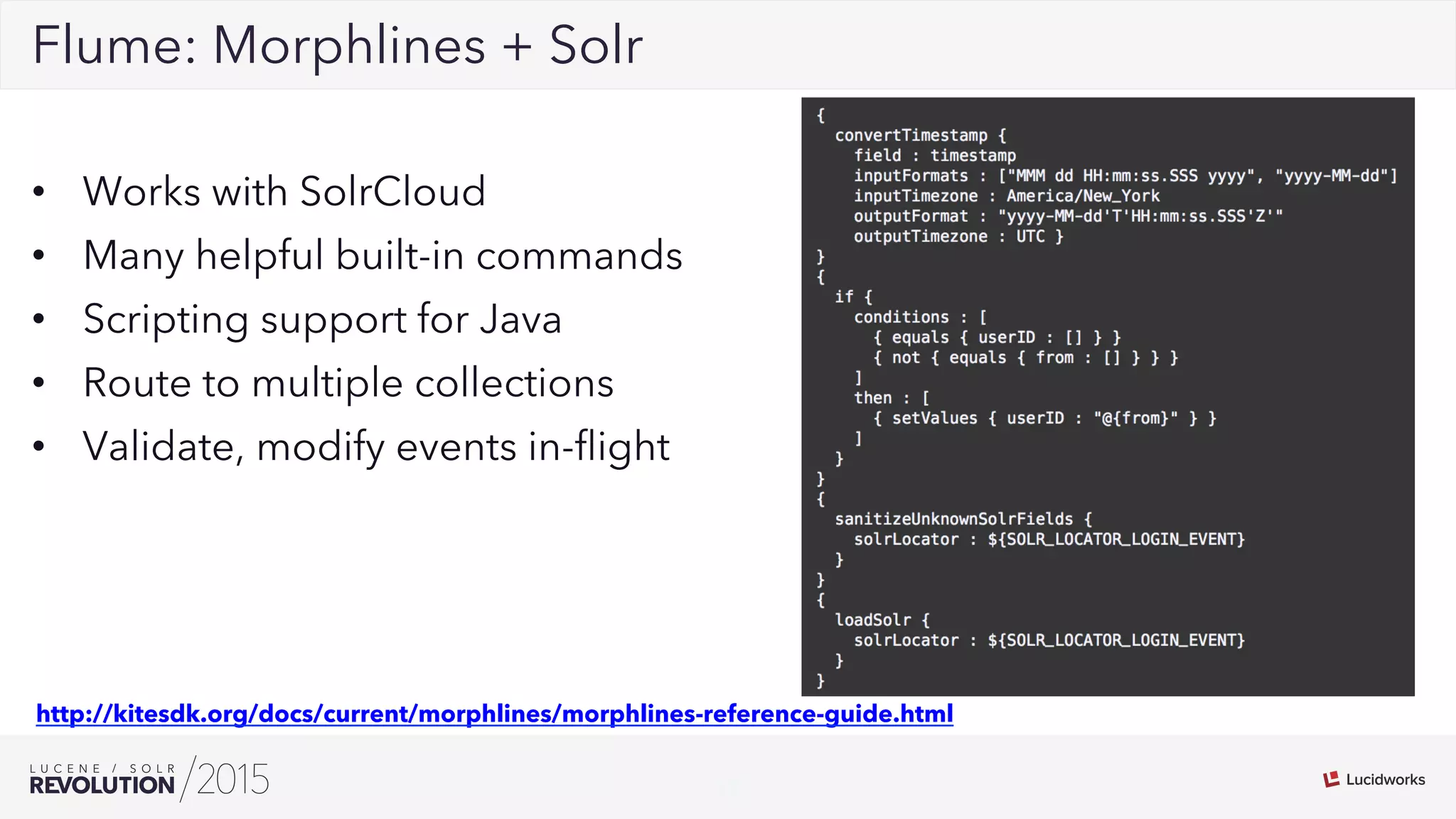

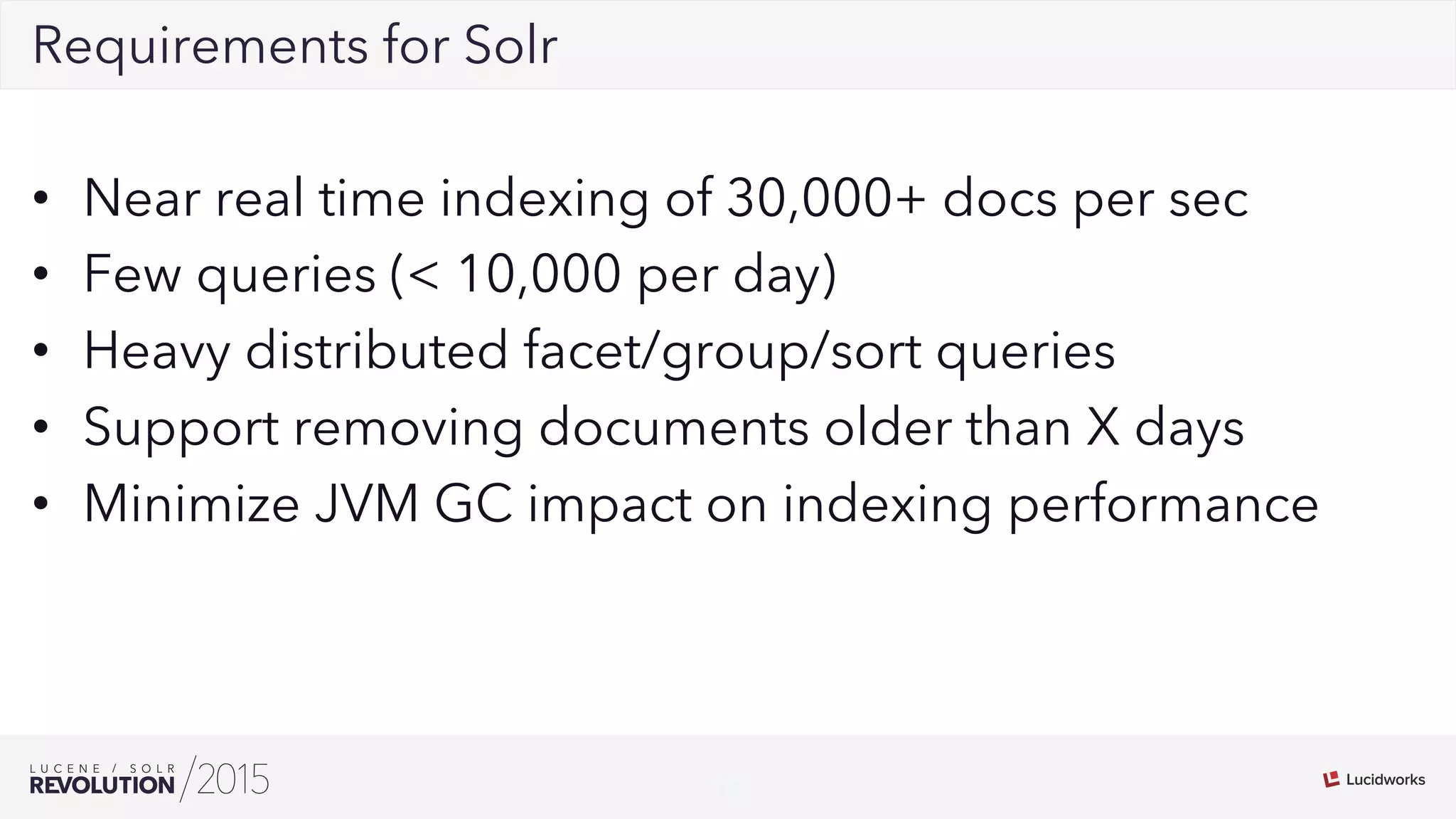

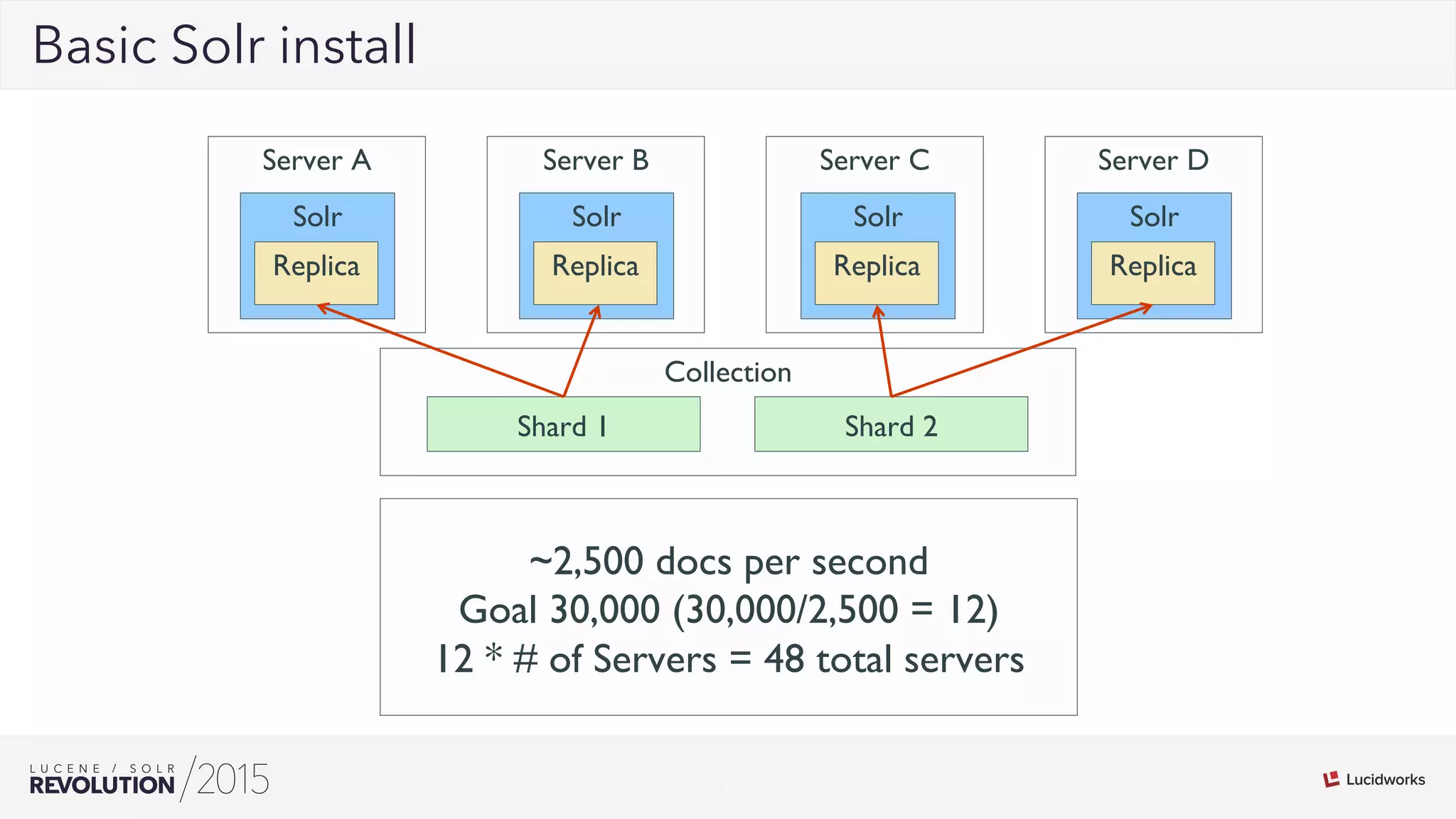

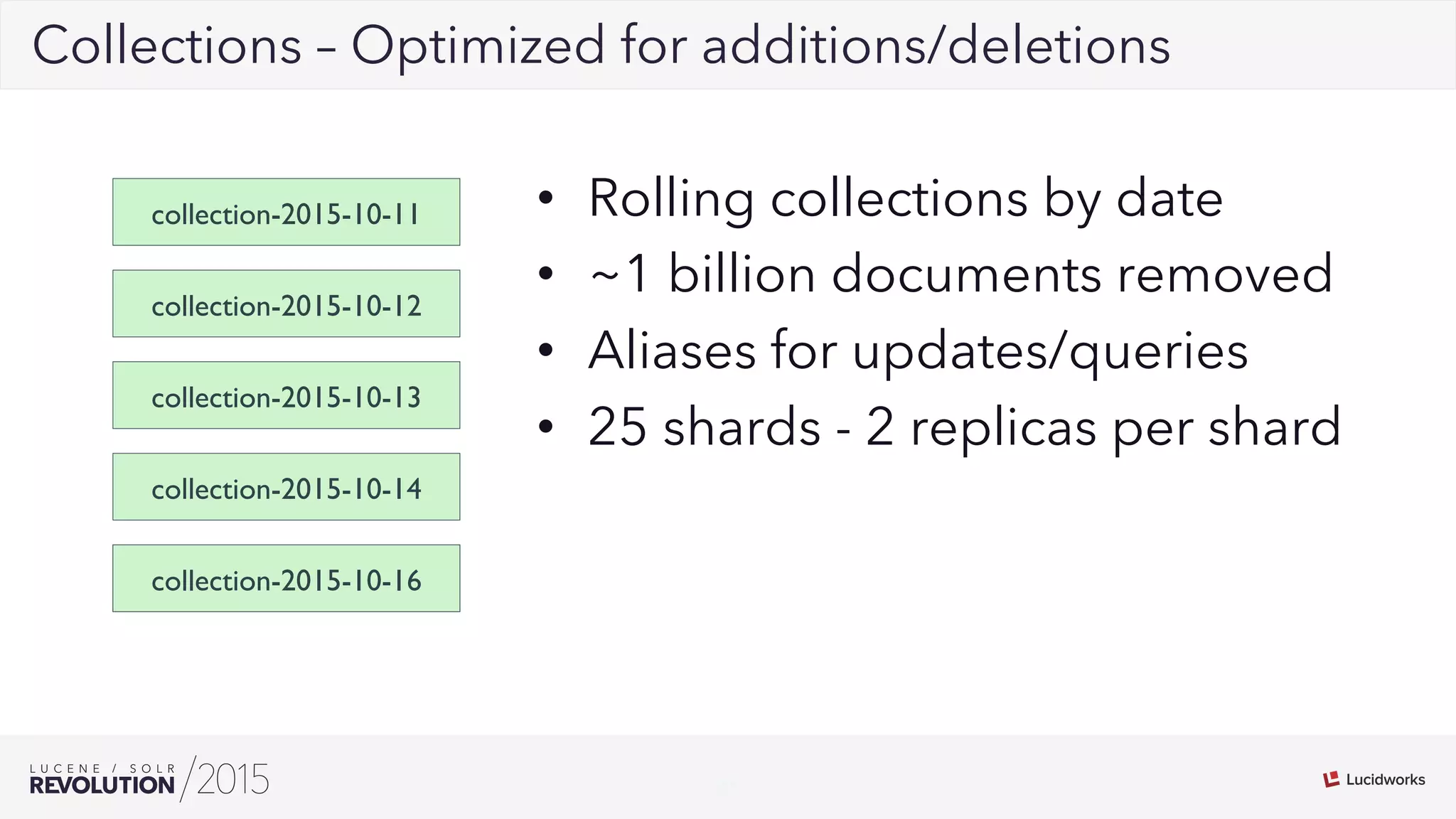

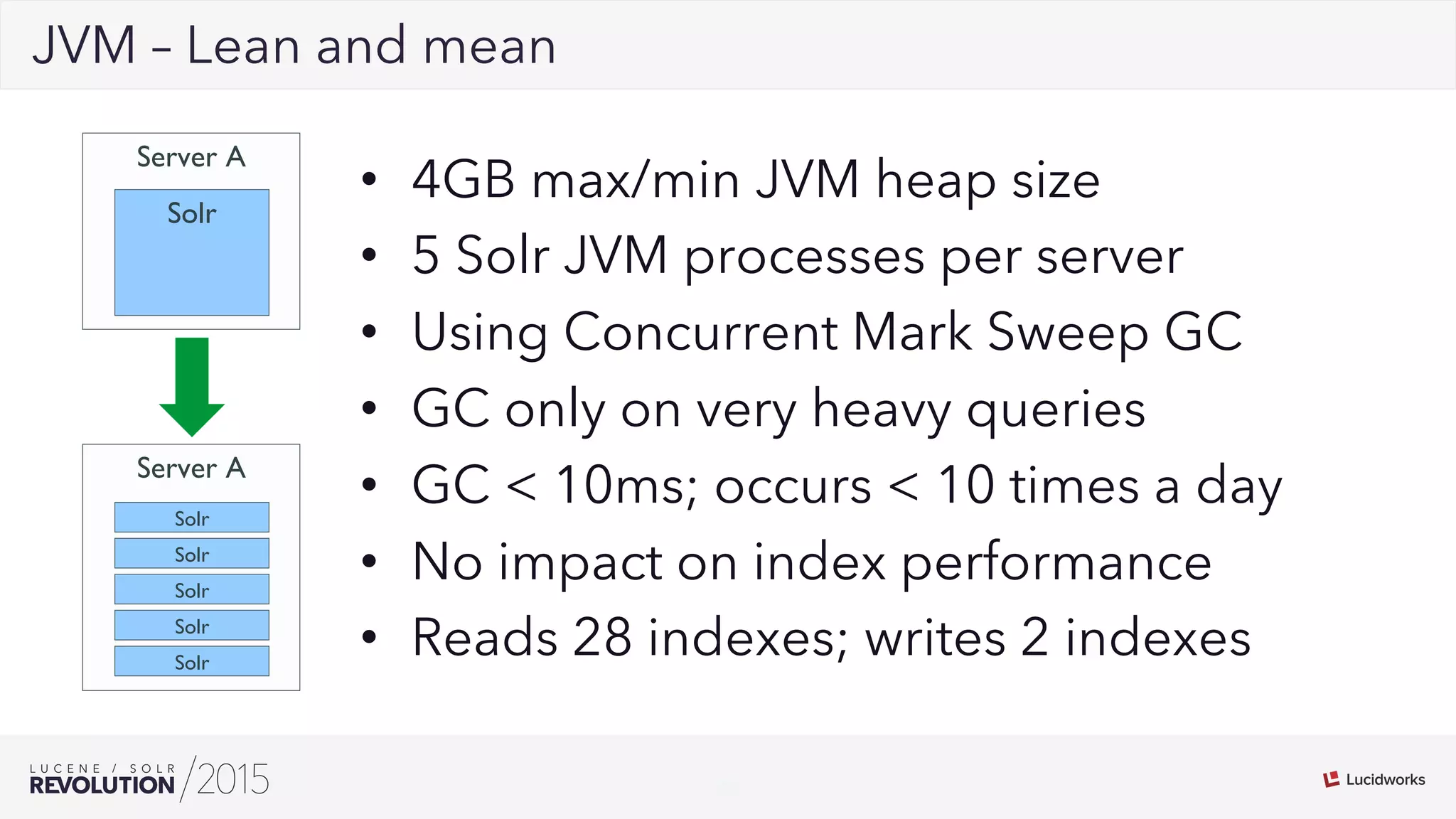

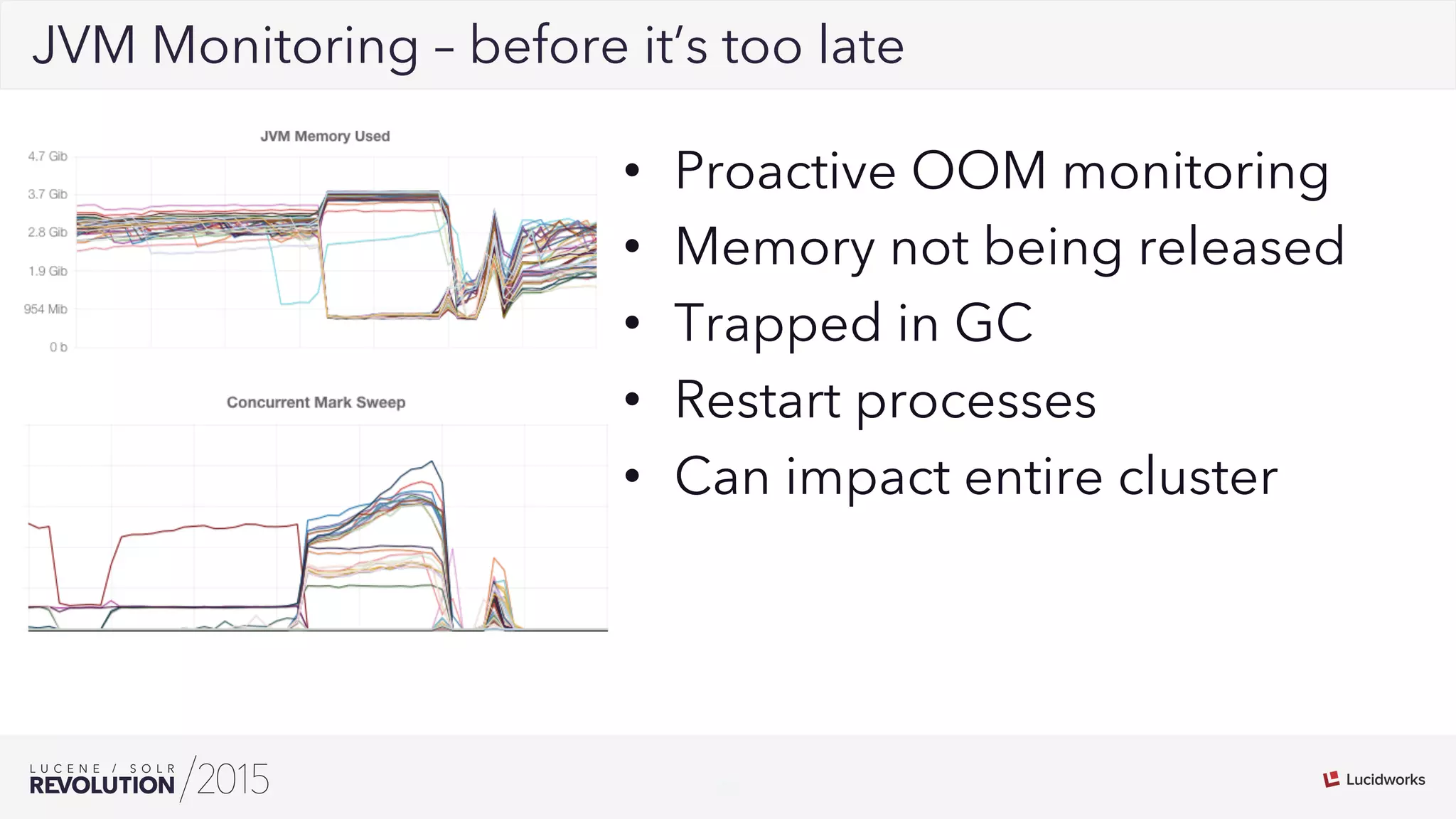

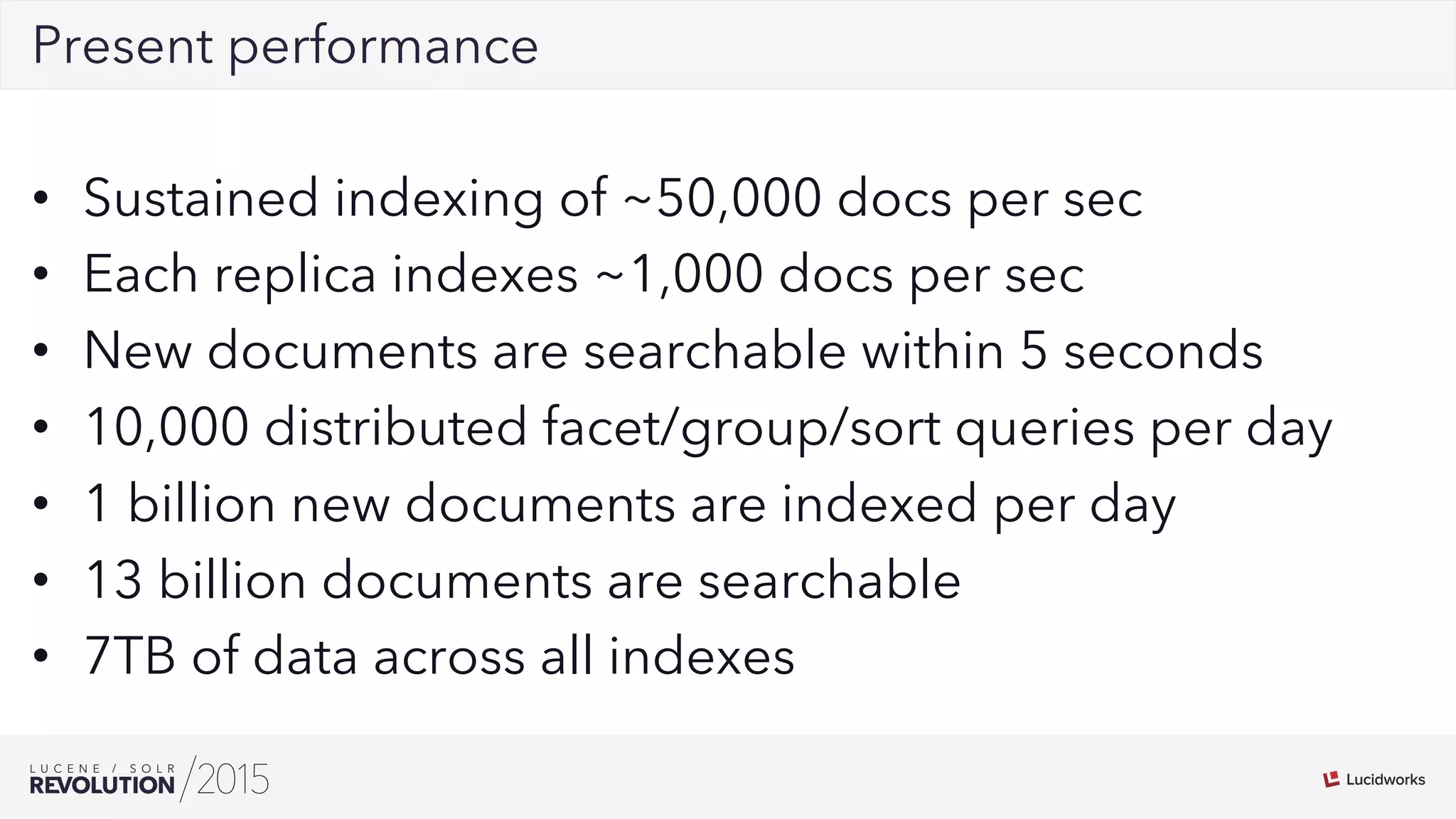

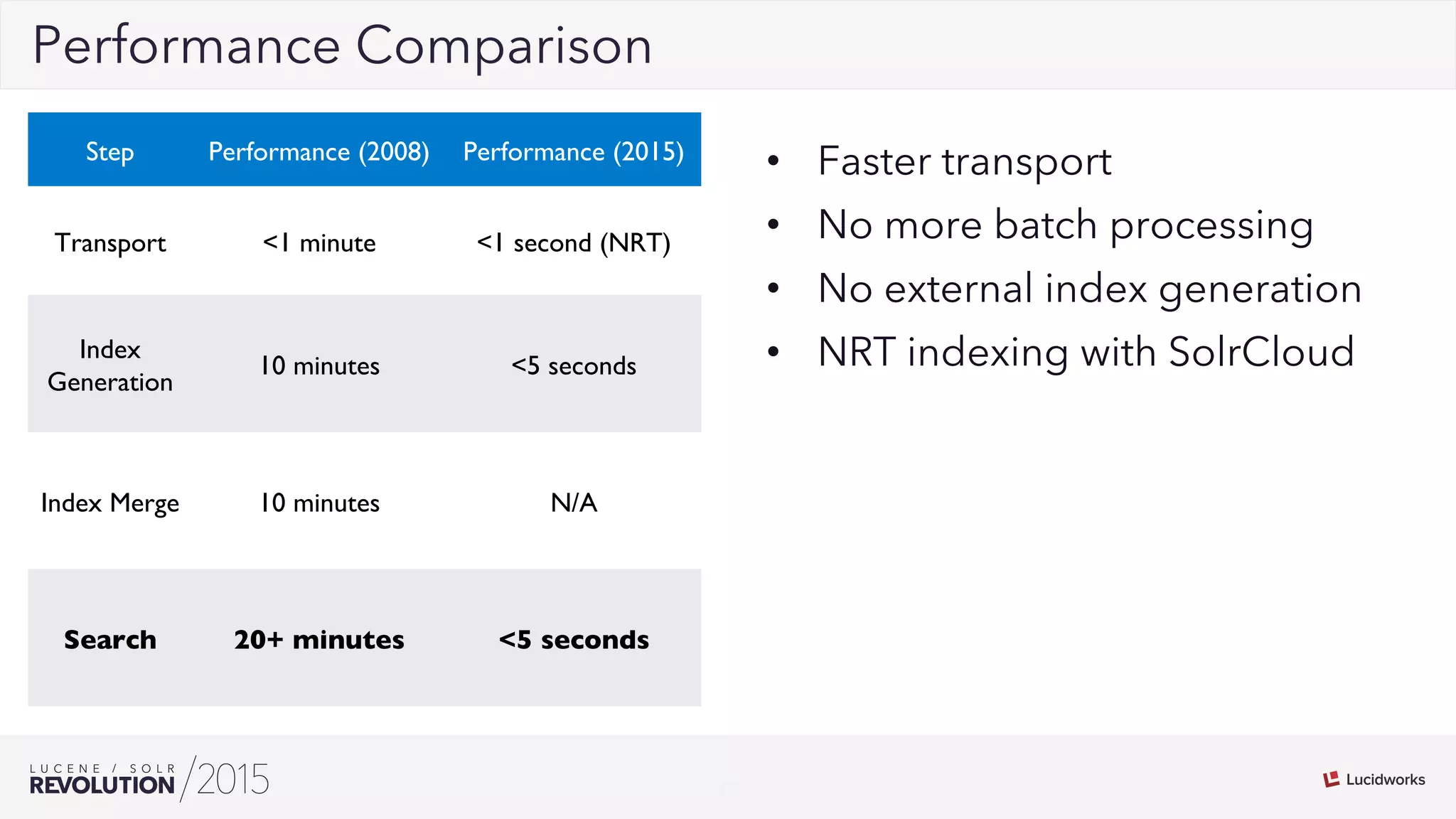

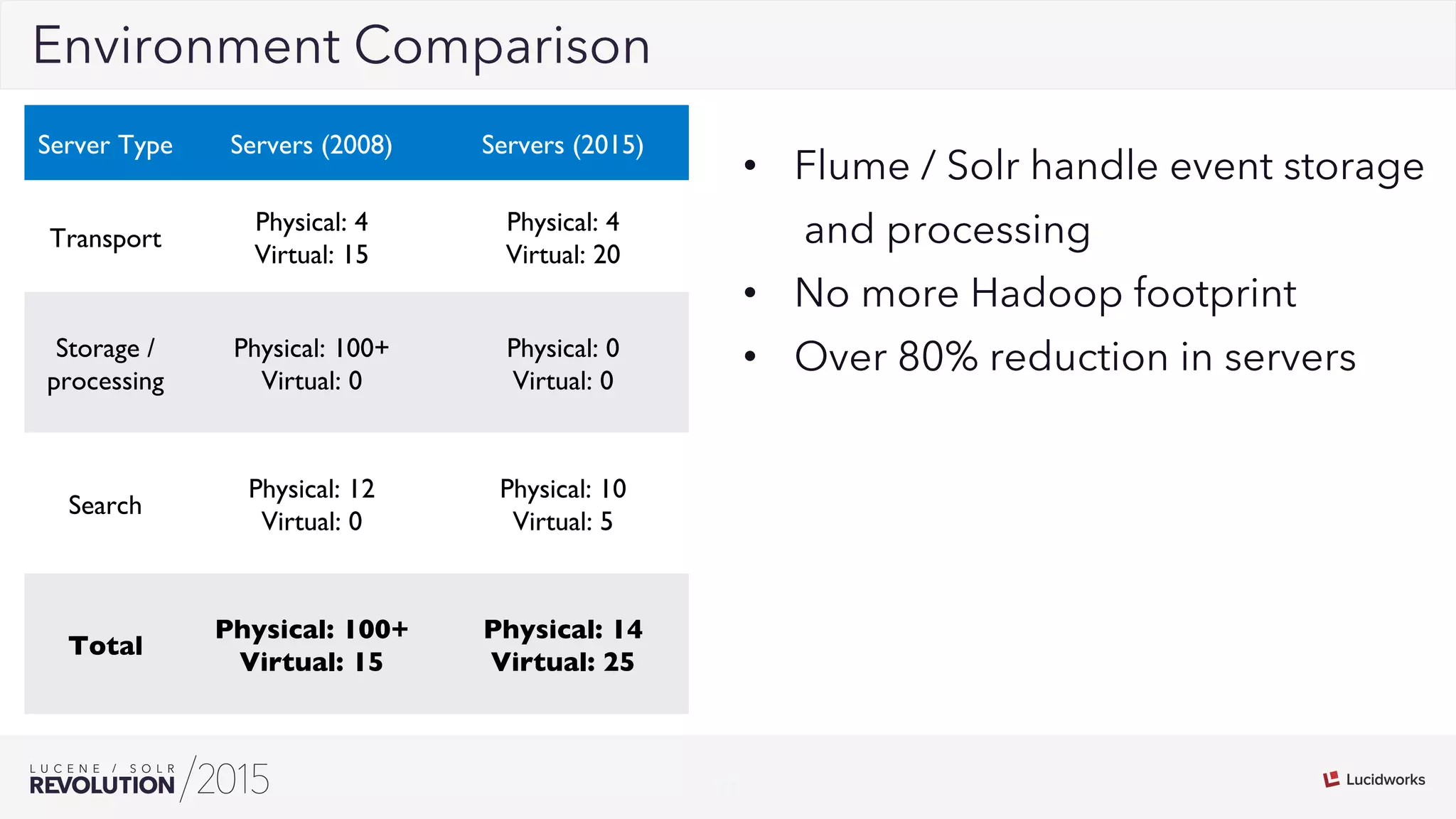

George Bailey and Cameron Baker of Rackspace presented their solution for indexing more than 50,000 documents per second for Rackspace Email. They modernized the system with Apache Flume for event processing and aggregation and SolrCloud for real-time search. The redesign cut indexing time from over 20 minutes to under 5 seconds, reduced the number of physical servers from over 100 to 14, and raised indexing throughput from 1,000 to over 50,000 documents per second, all while supporting more than 13 billion searchable documents.
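
To put the quoted figures in proportion, the short sketch below computes the improvement factors they imply. It is only arithmetic on the numbers in the summary: because the source states them as bounds ("over 20 minutes", "under 5 seconds", "over 100", "over 50,000"), each result should be read as a lower bound rather than a measured value. The dictionary keys and the `improvement_factors` helper are illustrative names, not anything from the presentation.

```python
# Boundary values taken from the summary; the real figures are
# "over"/"under" these, so every ratio below is a lower bound.
before = {"index_latency_s": 20 * 60, "servers": 100, "docs_per_s": 1_000}
after = {"index_latency_s": 5, "servers": 14, "docs_per_s": 50_000}


def improvement_factors(before, after):
    """Return how many times better the new system is on each axis."""
    return {
        # Lower latency is better, so divide old by new.
        "latency_speedup": before["index_latency_s"] / after["index_latency_s"],
        # Fewer servers is better, so divide old by new.
        "server_reduction": before["servers"] / after["servers"],
        # Higher throughput is better, so divide new by old.
        "throughput_gain": after["docs_per_s"] / before["docs_per_s"],
    }


factors = improvement_factors(before, after)
for name, value in factors.items():
    print(f"{name}: at least {value:.1f}x")
```

Read this way, the redesign indexes documents at least 240 times sooner, uses roughly one seventh of the hardware, and sustains at least 50 times the throughput.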