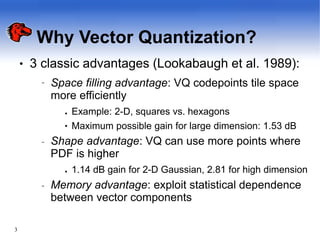

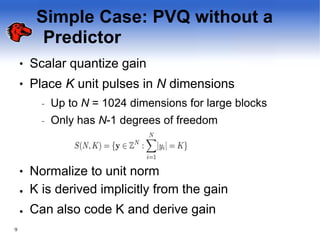

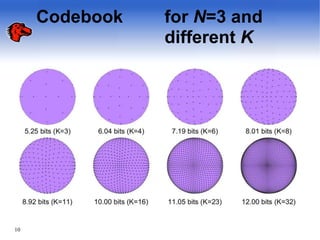

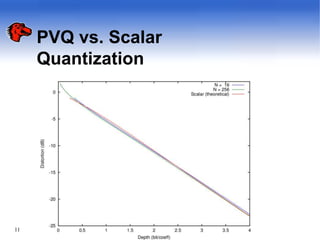

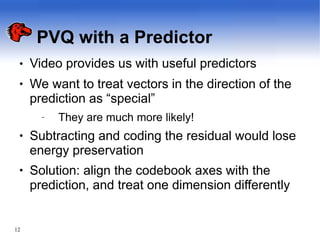

The document describes pyramid vector quantization (PVQ), a vector quantization technique for lossy data compression. PVQ takes as its codebook the points of the cubic lattice that lie on the surface of an L-dimensional pyramid, and its encoding and decoding algorithms are simple. The document analyzes the mean square error of PVQ and presents simulation results showing that PVQ improves rate-distortion performance over scalar quantization for memoryless Laplacian, gamma, and Gaussian sources. Although suboptimal, PVQ significantly reduces distortion relative to scalar quantization and offers an alternative to other vector quantizers.
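To make the codebook concrete, here is a minimal Python sketch. It assumes the pyramid surface is the set of integer vectors of dimension L whose absolute values sum to a fixed integer K (K is a name introduced here for illustration; the summary does not give one). The function names are hypothetical. The codebook size uses the standard counting recurrence for such points, and the quantizer is a simple greedy rounding heuristic, not necessarily the encoding algorithm the document itself presents.

```python
from functools import lru_cache
from itertools import product


@lru_cache(maxsize=None)
def pvq_codebook_size(L, K):
    """Count integer vectors of dimension L whose absolute values sum to K."""
    if K == 0:
        return 1  # only the all-zero vector
    if L == 0:
        return 0  # no coordinates left to hold the remaining K
    return (pvq_codebook_size(L - 1, K)
            + pvq_codebook_size(L - 1, K - 1)
            + pvq_codebook_size(L, K - 1))


def enumerate_pyramid(L, K):
    """Brute-force list of the same points; only practical for tiny L and K."""
    return [p for p in product(range(-K, K + 1), repeat=L)
            if sum(abs(c) for c in p) == K]


def pvq_quantize(x, K):
    """Map x to a nearby pyramid point (greedy heuristic, assumed here).

    Scales x so its L1 norm equals K, floors each magnitude, then hands the
    leftover units to the coordinates with the largest fractional parts.
    """
    norm1 = sum(abs(v) for v in x)
    if norm1 == 0:
        # Arbitrary convention for the zero vector: all units on coordinate 0.
        return [K] + [0] * (len(x) - 1)
    scaled = [K * abs(v) / norm1 for v in x]
    units = [int(s) for s in scaled]  # floor, since scaled values are >= 0
    remaining = K - sum(units)
    by_fraction = sorted(range(len(x)),
                         key=lambda i: scaled[i] - units[i],
                         reverse=True)
    for i in by_fraction[:remaining]:
        units[i] += 1
    # Restore the signs of the input.
    return [u if v >= 0 else -u for u, v in zip(units, x)]


if __name__ == "__main__":
    print(pvq_codebook_size(3, 2), len(enumerate_pyramid(3, 2)))
    print(pvq_quantize([0.5, -0.3, 0.2], 4))
```

Under these assumptions, a fixed-rate code that indexes every point spends log2 of the codebook size, divided by L, bits per sample. The brute-force enumeration exists only to cross-check the recurrence on small cases.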