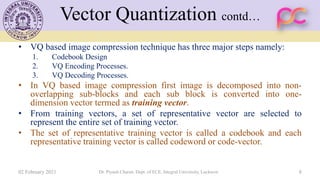

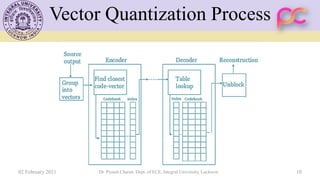

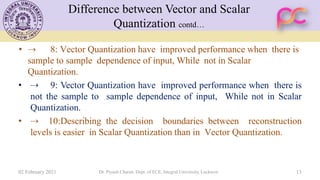

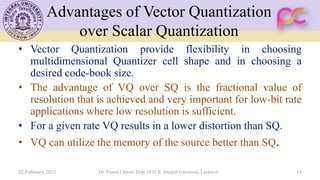

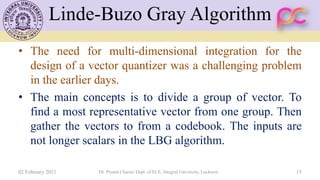

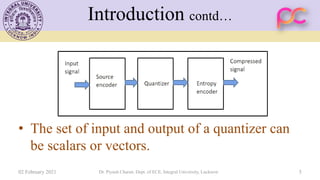

The lecture notes by Dr. Piyush Charan focus on quantization techniques for data compression, particularly vector quantization (VQ) as a method of lossy compression. They discuss the advantages of VQ over scalar quantization, the Linde-Buzo-Gray (LBG) algorithm, and tree-structured vector quantization, with an emphasis on their application to multimedia data compression. Key points include the importance of an optimal codebook for good performance and the benefits of structured approaches, which reduce complexity and make quantization more efficient.

![Types of Quantization

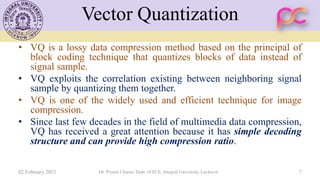

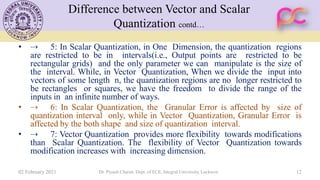

• Scalar Quantization: The most common types of

quantization is scalar quantization. Scalar quantization,

typically denoted as y = Q(x) is the process of using

quantization function Q(x) to map a input value x to scalar

output value y.

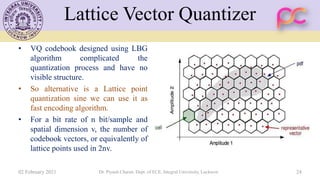

• Vector Quantization: A vector quantization map k-

dimensional vector in the vector space Rk into a finite set of

vectors Y=[Yi : i=1,2,..,N]. Each vector Yi is called a code

vector or a codeword and set of all the codeword is called a

codebook.

02 February 2021 Dr. Piyush Charan, Dept. of ECE, Integral University, Lucknow 6](https://image.slidesharecdn.com/unit5quantization-210523181147/85/Unit-5-Quantization-5-320.jpg)
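The following is a minimal Python sketch of the two mappings defined above, not the method from the notes. It assumes a uniform (midtread) scalar quantizer with a hypothetical step size `step`. It also assumes a vector quantizer whose encoder picks the codeword nearest to the input in squared Euclidean distance. The function names and the 2-D, 4-codeword codebook are illustrative assumptions.

```python
import math

# --- Scalar quantization: y = Q(x) -------------------------------------
def scalar_quantize(x: float, step: float) -> float:
    """Hypothetical uniform midtread quantizer: map x to the nearest
    multiple of `step` (ties round upward)."""
    return step * math.floor(x / step + 0.5)

# --- Vector quantization: R^k -> codebook Y = {Y_i} ---------------------
def squared_distance(a, b) -> float:
    """Squared Euclidean distance between two k-dimensional vectors."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

def vq_encode(x, codebook) -> int:
    """Return the index i of the codeword Y_i closest to x
    (nearest-neighbour rule assumed; first index wins on ties)."""
    return min(range(len(codebook)), key=lambda i: squared_distance(x, codebook[i]))

def vq_decode(index: int, codebook):
    """Reconstruct the vector by looking up codeword Y_index."""
    return codebook[index]

# Illustrative codebook: N = 4 codewords in R^2 (k = 2).
CODEBOOK = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]

if __name__ == "__main__":
    print(scalar_quantize(0.74, 0.5))        # nearest multiple of 0.5
    i = vq_encode((0.9, 0.2), CODEBOOK)      # only the index i is transmitted
    print(i, vq_decode(i, CODEBOOK))
```

With this codebook, the input (0.9, 0.2) is closest to the codeword (1.0, 0.0), so the encoder outputs index 2 and the decoder reconstructs (1.0, 0.0). The scalar quantizer treats each sample independently. The vector quantizer replaces a whole $k$-dimensional block by a single codeword index.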