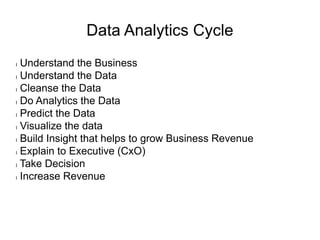

This document discusses big data architecture and cluster optimization with Python. It covers the data analytics cycle, capacity planning for clusters, predictive modeling, tuning operating system parameters, configuring virtual memory, tuning Kafka and Spark clusters, and a MapReduce-style word count in PySpark. It also describes a data science education initiative at the University of Kachchh to set up a data science lab and teach machine learning and data science with Python.
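The document's own PySpark code is not reproduced here. As a hedged illustration of what a MapReduce word count does, the sketch below runs the map, shuffle, and reduce phases in plain Python so it needs no Spark installation. The function names `map_phase`, `shuffle_phase`, `reduce_phase`, and `word_count` are hypothetical. In PySpark the same pipeline is typically written with real RDD methods, for example `sc.textFile(path).flatMap(lambda line: line.split()).map(lambda w: (w, 1)).reduceByKey(add)`.

```python
from collections import defaultdict
from functools import reduce
from itertools import chain
from operator import add
from typing import Dict, Iterable, List, Tuple


def map_phase(lines: Iterable[str]) -> List[Tuple[str, int]]:
    """Emit a (word, 1) pair for every whitespace-separated token.

    Plays the role of rdd.flatMap(lambda line: line.split()).map(lambda w: (w, 1)).
    """
    words = chain.from_iterable(line.split() for line in lines)
    return [(word, 1) for word in words]


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group emitted values by key, as the shuffle does before reducing."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for word, count in pairs:
        groups[word].append(count)
    return dict(groups)


def reduce_phase(groups: Dict[str, List[int]]) -> Dict[str, int]:
    """Sum each key's values, playing the role of rdd.reduceByKey(add)."""
    return {word: reduce(add, counts) for word, counts in groups.items()}


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Hypothetical helper chaining the three phases end to end."""
    return reduce_phase(shuffle_phase(map_phase(lines)))


if __name__ == "__main__":
    # Small illustrative input; a real job would read lines from files.
    sample = ["big data with spark", "spark and kafka", "big clusters"]
    for word, count in sorted(word_count(sample).items()):
        print(word, count)
```

One simplification to note: Spark's `reduceByKey` also pre-combines values within each partition before the shuffle, which reduces network traffic. This sketch skips that step and groups every pair first.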