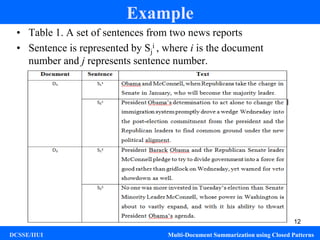

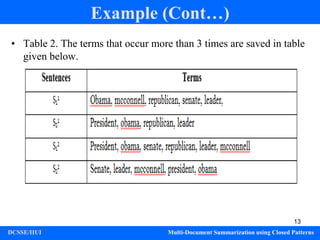

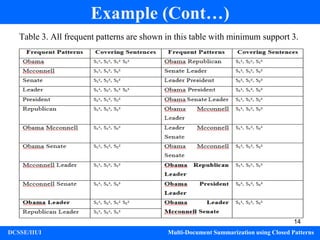

This document presents a research project on multi-document summarization using closed patterns, aimed at efficiently extracting informative sentences from a collection of documents. The proposed solution addresses the challenge posed by the vast amount of electronic documents and aims to reduce information redundancy through a pattern-based model. The evaluation uses datasets such as DUC 2004, with summary quality measured by the ROUGE metric.
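The overview describes a pattern-based model that extracts informative sentences while limiting redundancy. As a rough illustration, the sketch below treats each sentence as a transaction of terms and mines closed frequent term sets. A pattern is frequent if it appears in at least `min_sup` sentences, and closed if no proper superset has the same support. The sketch then scores each sentence by the support of the closed patterns it contains and greedily skips sentences that overlap heavily with ones already chosen. It is a toy sketch under stated assumptions, not the authors' model from reference [1]: the stopword list, the brute-force miner, the scoring rule and the `max_overlap` redundancy check are all hypothetical choices.

```python
import re
from collections import Counter
from itertools import combinations

# Hypothetical stopword list, kept tiny for illustration.
STOPWORDS = {"the", "a", "an", "of", "and", "to", "in", "is", "on", "for"}


def tokenize(sentence):
    """Turn a sentence into one transaction: its set of non-stopword terms."""
    words = re.findall(r"[a-z]+", sentence.lower())
    return frozenset(w for w in words if w not in STOPWORDS)


def mine_closed_patterns(transactions, min_sup=2, max_len=3):
    """Brute-force closed frequent term sets; only suitable for toy inputs.

    Closedness is checked among patterns of at most max_len terms.
    """
    support = Counter()
    for t in transactions:
        for k in range(1, max_len + 1):
            for items in combinations(sorted(t), k):
                support[frozenset(items)] += 1
    frequent = {p: s for p, s in support.items() if s >= min_sup}
    # A frequent pattern is closed if no proper superset has the same support.
    # Such a superset would itself be frequent, so checking `frequent` suffices.
    return {
        p: s
        for p, s in frequent.items()
        if not any(p < q and s == sq for q, sq in frequent.items())
    }


def summarize(sentences, min_sup=2, max_sentences=2, max_overlap=0.5):
    """Pick high-scoring sentences, skipping ones that repeat chosen terms."""
    transactions = [tokenize(s) for s in sentences]
    closed = mine_closed_patterns(transactions, min_sup)
    # Hypothetical scoring rule: total support of closed patterns in the sentence.
    scores = [sum(s for p, s in closed.items() if p <= t) for t in transactions]
    ranked = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)
    chosen = []
    for i in ranked:
        if len(chosen) == max_sentences:
            break
        terms = transactions[i]
        # Hypothetical redundancy check: share of this sentence's terms
        # already present in a chosen sentence.
        redundant = any(
            len(terms & transactions[j]) / max(1, len(terms)) > max_overlap
            for j in chosen
        )
        if not redundant:
            chosen.append(i)
    # Keep the original sentence order in the output summary.
    return [sentences[i] for i in sorted(chosen)]
```

For example, given the sentences "Closed patterns reduce redundancy.", "Closed patterns summarize documents." and "Weather is sunny today." with `max_overlap=0.4`, the summary keeps the first and third sentences: the second shares half of its terms with the first, so it is skipped as redundant.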

![Multi-Document Summarization using Closed Patterns

Supervisor: Ms. Zakia Jalil

Co-Supervisor: Ms. Sabina Irum

Presented by

Hafsa Sattar [2896-FBAS/BSCS/F14]

Uswa Ihsan [2822-FBAS/BSCS/F14]

Department of Computer Science & Software Engineering

Faculty of Basic & Applied Sciences

International Islamic University, Islamabad.](https://image.slidesharecdn.com/projectpresentation-221213162747-5ea4bc69/85/Project-Presentation-ppt-1-320.jpg)

![References

DCSSE/IIUI Multi-Document Summarization using Closed Patterns

[1] Ji-Peng Qiang, Ping Chen, Wei Ding, Fei Xie, Xindong Wu, Multi-document Summarization using Closed Patterns, Knowledge-Based Systems (2016).

[2] https://en.wikipedia.org/wiki/Multi-document_summarization

[3] https://www.slideshare.net/LiaRatna1/sinonim-38250183

[4] https://www.hindawi.com/journals/tswj/2016/1784827/

[5] https://en.wikipedia.org/wiki/ROUGE_(metric)

[6] https://www.quora.com/What-is-the-meaning-and-formula-for-the-ROUGE-SU-metric-for-evaluating-summaries

[7] https://en.wikipedia.org/wiki/N-gram

[8] https://en.wikipedia.org/wiki/F1_score](https://image.slidesharecdn.com/projectpresentation-221213162747-5ea4bc69/85/Project-Presentation-ppt-25-320.jpg)
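The overview assesses summary quality with ROUGE, and references [5], [7] and [8] cover the metric, n-grams and the F1 score. The block below is a minimal ROUGE-N sketch, assuming lowercase whitespace tokenization and a single reference summary. It is not the official ROUGE toolkit, which adds options such as stemming and multiple references, and it does not cover the ROUGE-SU variant discussed in reference [6].

```python
from collections import Counter


def ngrams(tokens, n):
    """Count the contiguous n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(candidate, reference, n=1):
    """ROUGE-N recall, precision and F1 for one candidate and one reference.

    Assumes lowercase whitespace tokenization, with no stemming or stopword removal.
    """
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    # Counter & Counter keeps the minimum count of each shared n-gram (clipped matches).
    overlap = sum((cand & ref).values())
    recall = overlap / sum(ref.values()) if ref else 0.0
    precision = overlap / sum(cand.values()) if cand else 0.0
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return {"recall": recall, "precision": precision, "f1": f1}
```

For the candidate "the cat sat on the mat" against the reference "the cat is on the mat", five of the six reference unigrams are matched, so ROUGE-1 recall is 5/6, while three of the five reference bigrams are matched, giving ROUGE-2 recall of 0.6. Recall is the usual headline figure for ROUGE because it measures how much of the reference content the summary covers.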