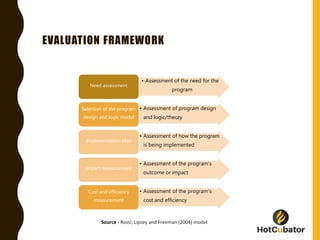

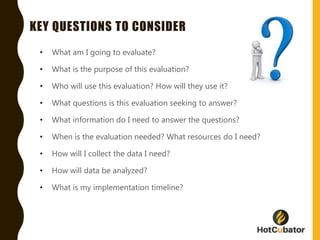

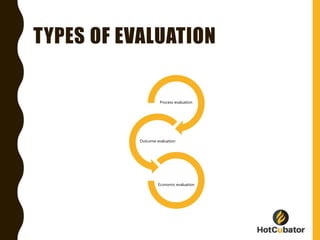

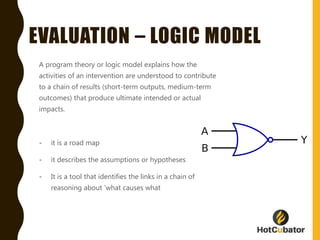

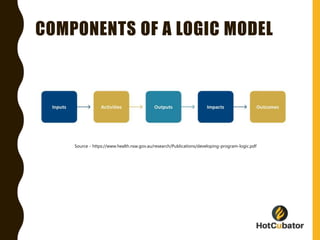

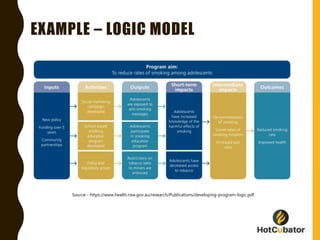

The document outlines the fundamentals of evaluation, including objectives, logic models, evaluation designs, and data analysis approaches. It emphasizes the importance of assessing program design, implementation, outcomes, and cost-effectiveness through formative and summative evaluations. It also poses key questions that help evaluators clarify the purpose, methodology, and impact of an evaluation.