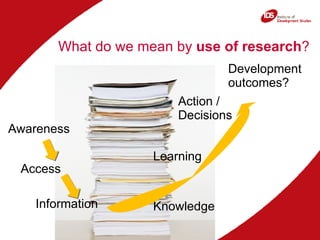

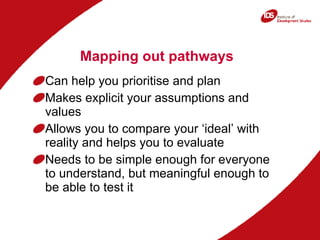

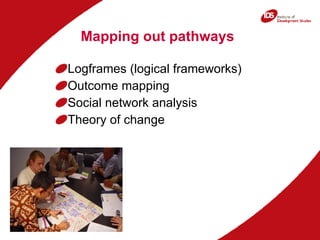

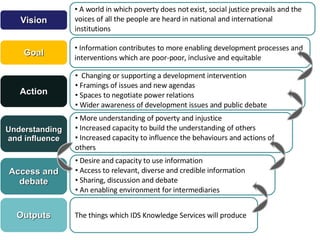

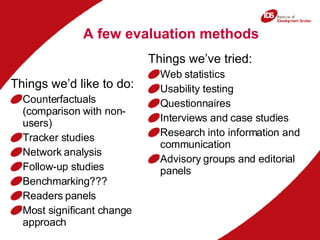

The document discusses how to evaluate research brokers and intermediaries in order to understand their success and their impact on policy and decision-making. It highlights the difficulty of measuring the outcomes of research communication and argues for tailored evaluation methods that account for the different ways stakeholders define success. Its recommendations include clarifying what counts as 'use' of information, mapping the pathways through which research exerts influence, and engaging with target users to increase the impact of development research.