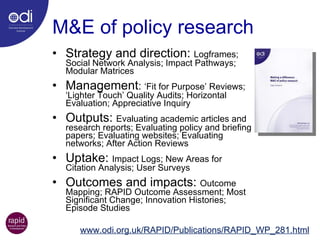

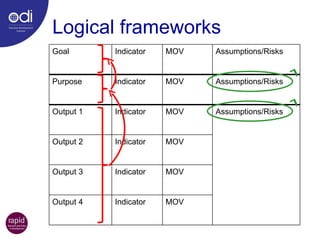

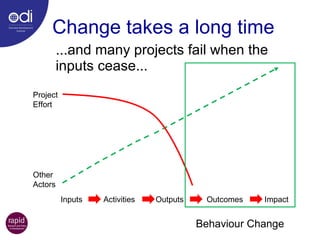

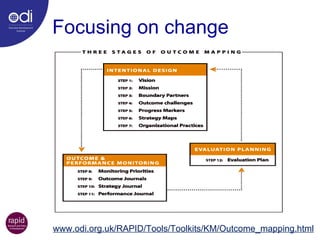

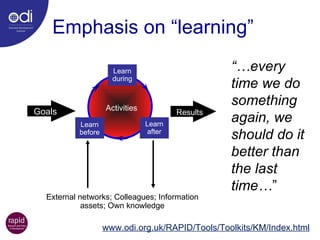

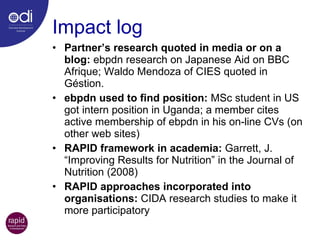

The document discusses methods for monitoring and evaluating policy research, including logical frameworks, outcome mapping, social network analysis, and case studies. It gives examples of specific tools, such as impact logs, stories of change, and after action reviews, that can be used to assess the outputs, outcomes, uptake, and impacts of research projects and to understand what works and what can be improved. The goal is to focus on learning and change over time through evaluation approaches that are participatory, capture unintended impacts, and help make future work more effective.

![Making a difference: M&E of policy research John Young: ODI, London [email_address]](https://image.slidesharecdn.com/jyrecoupmande-091207162722-phpapp01/85/Jy-Recoup-Mand-E-1-320.jpg)