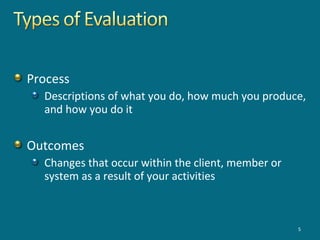

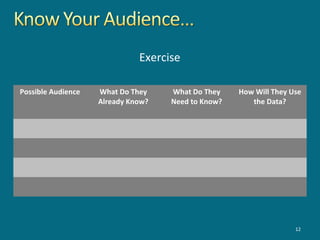

This document provides guidance on measuring outcomes for non-profit programs and services. It discusses the importance of measuring both process and outcome data. Process data describes what an organization does, such as its activities and outputs, while outcome data measures changes in clients, such as knowledge gained or behaviors changed. The document then offers tips on determining which outcomes to measure, developing tools to measure them, assigning responsibilities, and setting timelines. Finally, it gives examples of tools that can be used, such as surveys, interviews, and observations, and advises documenting processes and keeping measurement systems simple.