Here are some key metrics that schedulers should optimize for in different contexts:

- Airline: Maximize revenue by prioritizing high-fare passengers when filling the plane.

- Sweatshop: Maximize output by prioritizing the fastest workers.

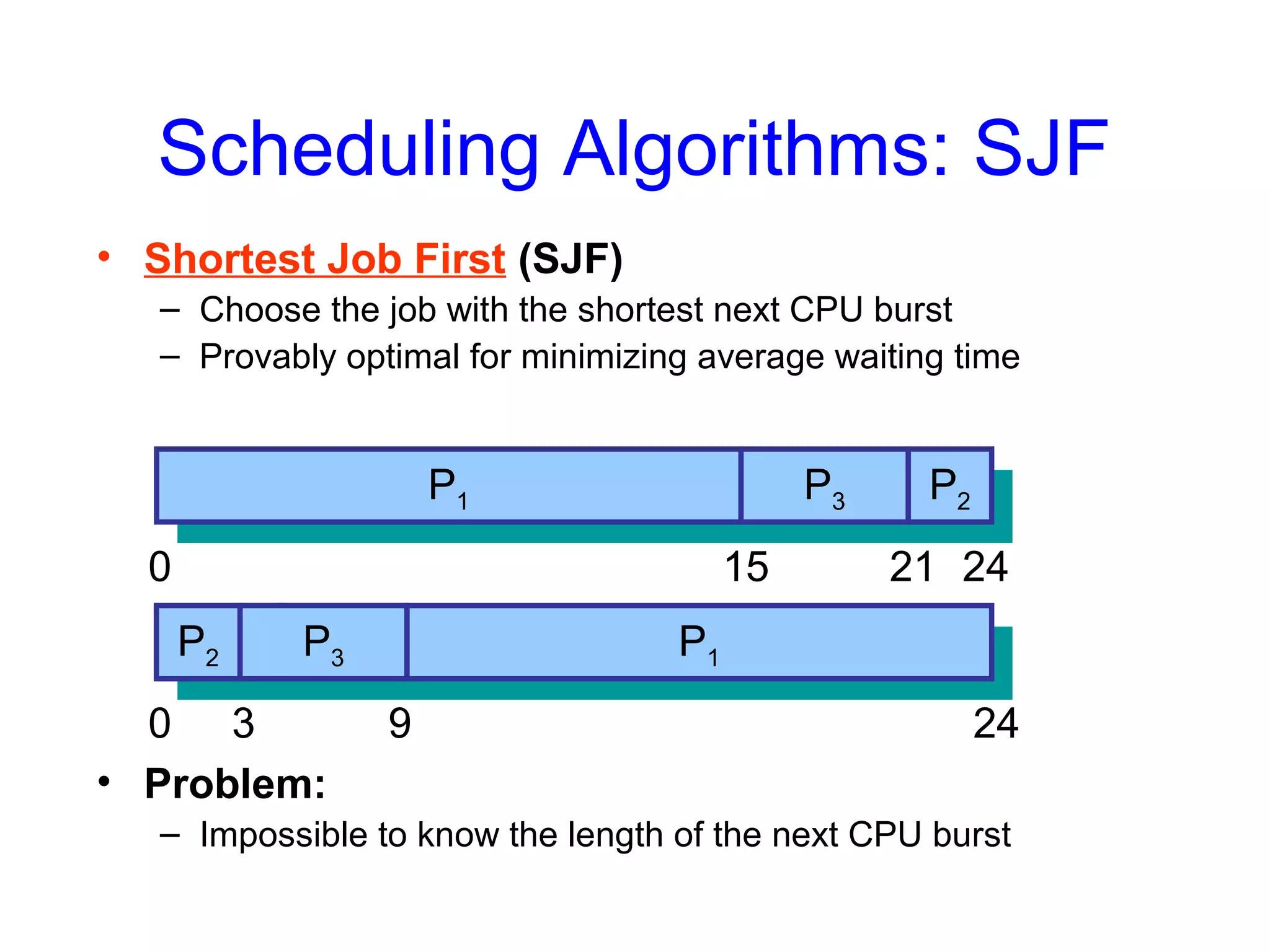

- Restaurant: Minimize the average time customers wait to receive their food.

- DMV: Minimize average customer wait time and maximize the number of customers served per day.

- Project partner: Prioritize potential partners who are highly skilled and available to begin work promptly.

- Software company: Maximize the likelihood of meeting the deadline by allocating resources to the most critical remaining tasks.
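
To make the restaurant and DMV metric concrete, here is a minimal sketch of how the choice of ordering changes average wait time. The function name `average_wait` and the preparation times are hypothetical, chosen only for illustration, and "wait" is assumed to mean the time before service on an order begins. It compares first-come-first-served against shortest-job-first on a single server.

```python
from itertools import accumulate


def average_wait(service_times):
    """Average time each job waits before its service starts, in the given order."""
    # Each job starts when all jobs ahead of it have finished,
    # so start times are the running totals of the earlier service times.
    starts = [0] + list(accumulate(service_times))[:-1]
    return sum(starts) / len(service_times)


# Hypothetical preparation times in minutes, listed in arrival order.
orders = [12, 3, 7, 2]

fifo_wait = average_wait(orders)          # serve in arrival order
sjf_wait = average_wait(sorted(orders))   # serve the shortest job first

print(f"FIFO average wait: {fifo_wait:.2f} min")  # 12.25 min
print(f"SJF average wait:  {sjf_wait:.2f} min")   # 4.75 min
```

With these made-up numbers, serving short orders first cuts the average wait from 12.25 to 4.75 minutes, although the customer with the 12-minute order now waits longest. A scheduler optimizing a different metric, such as revenue or deadline risk, would sort by a different key.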

The right scheduling priorities depend