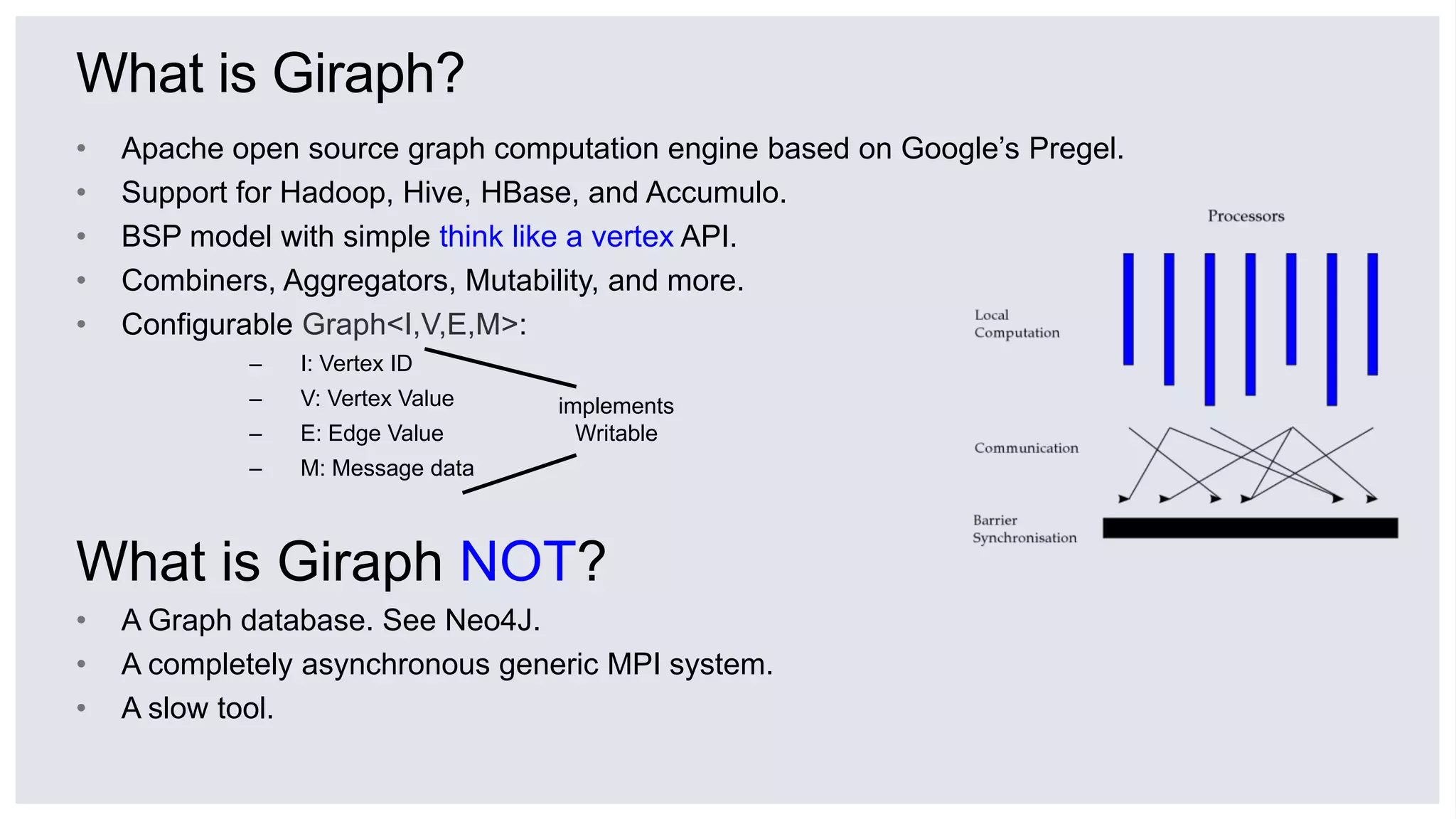

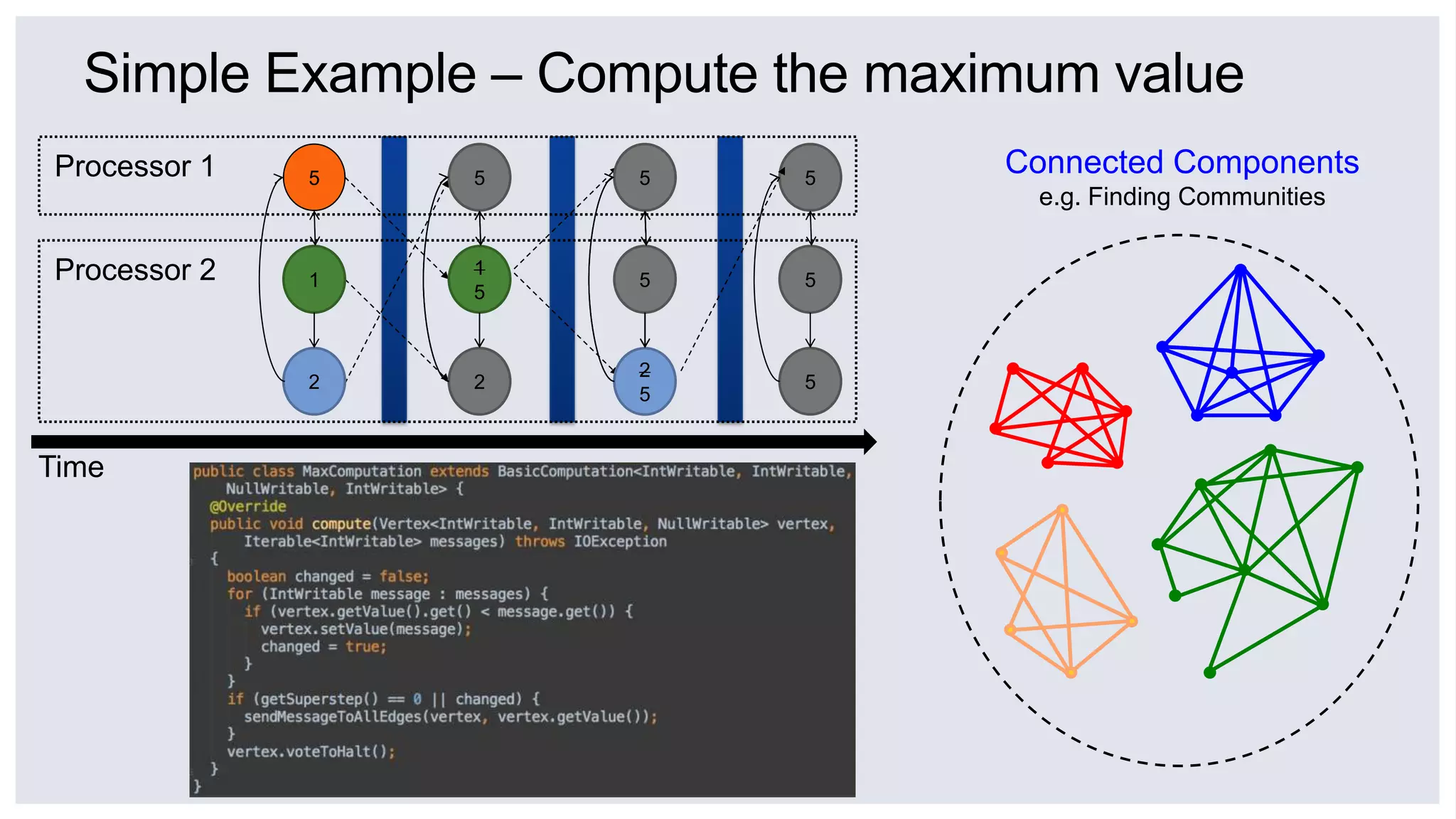

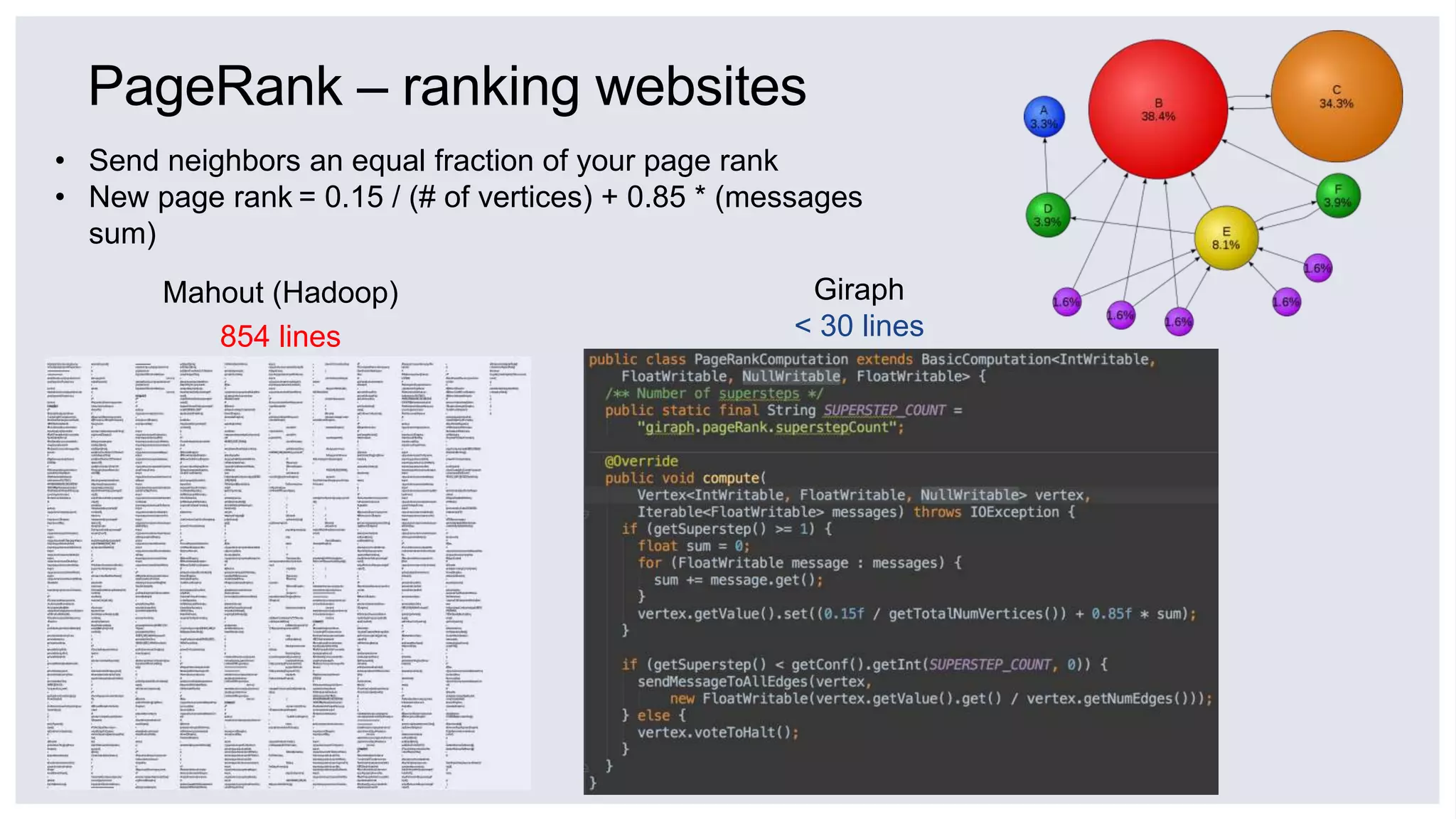

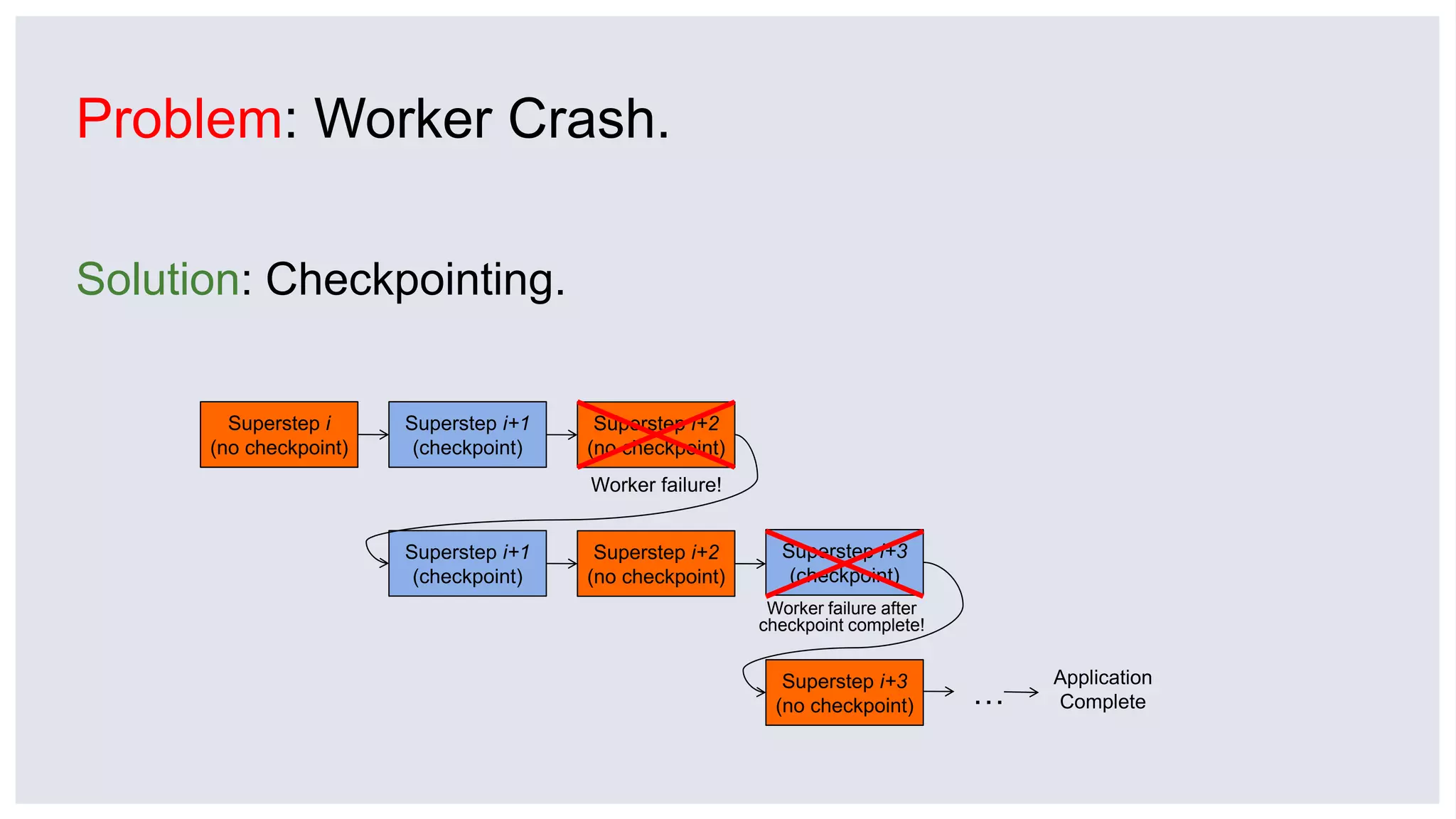

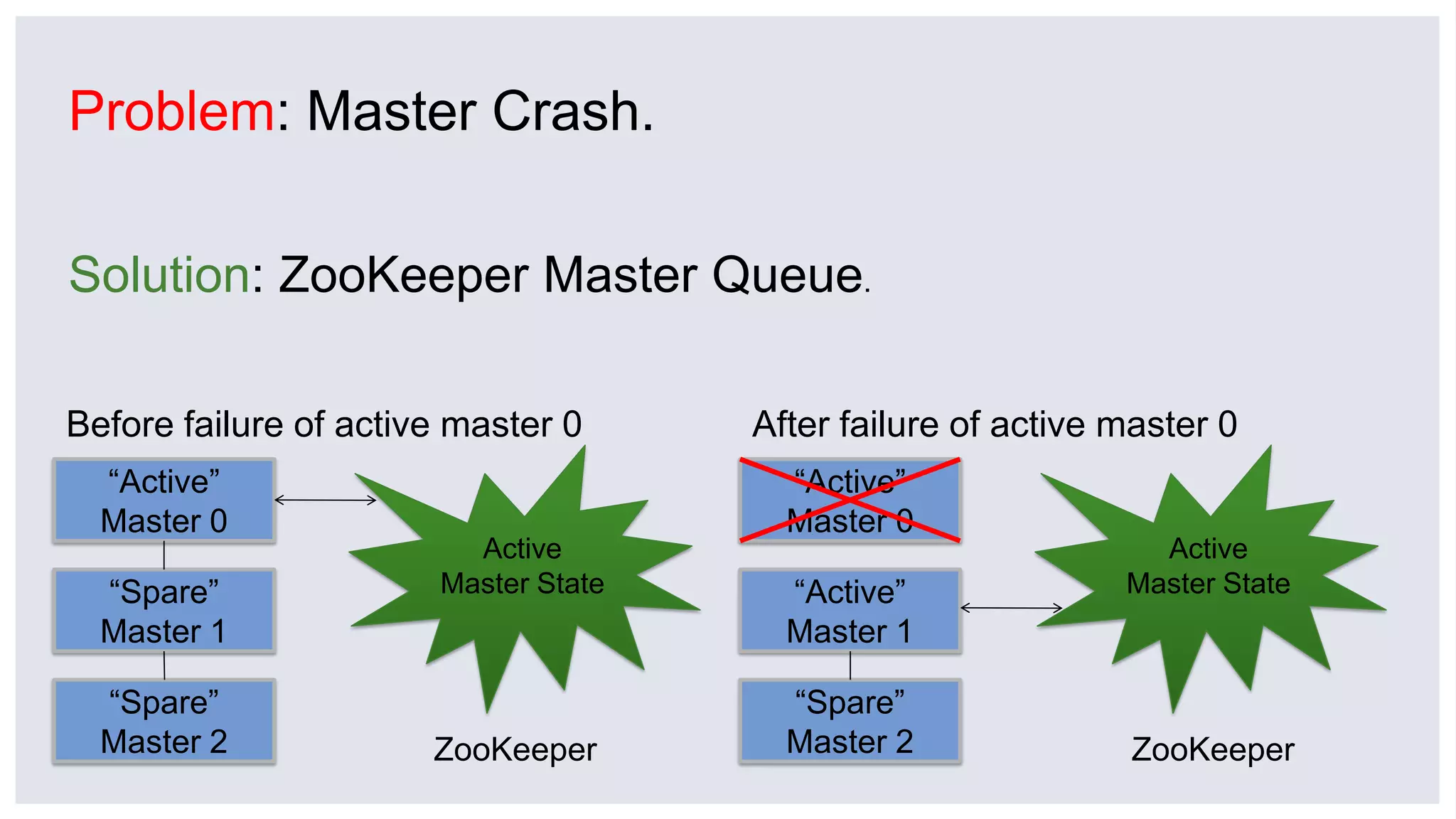

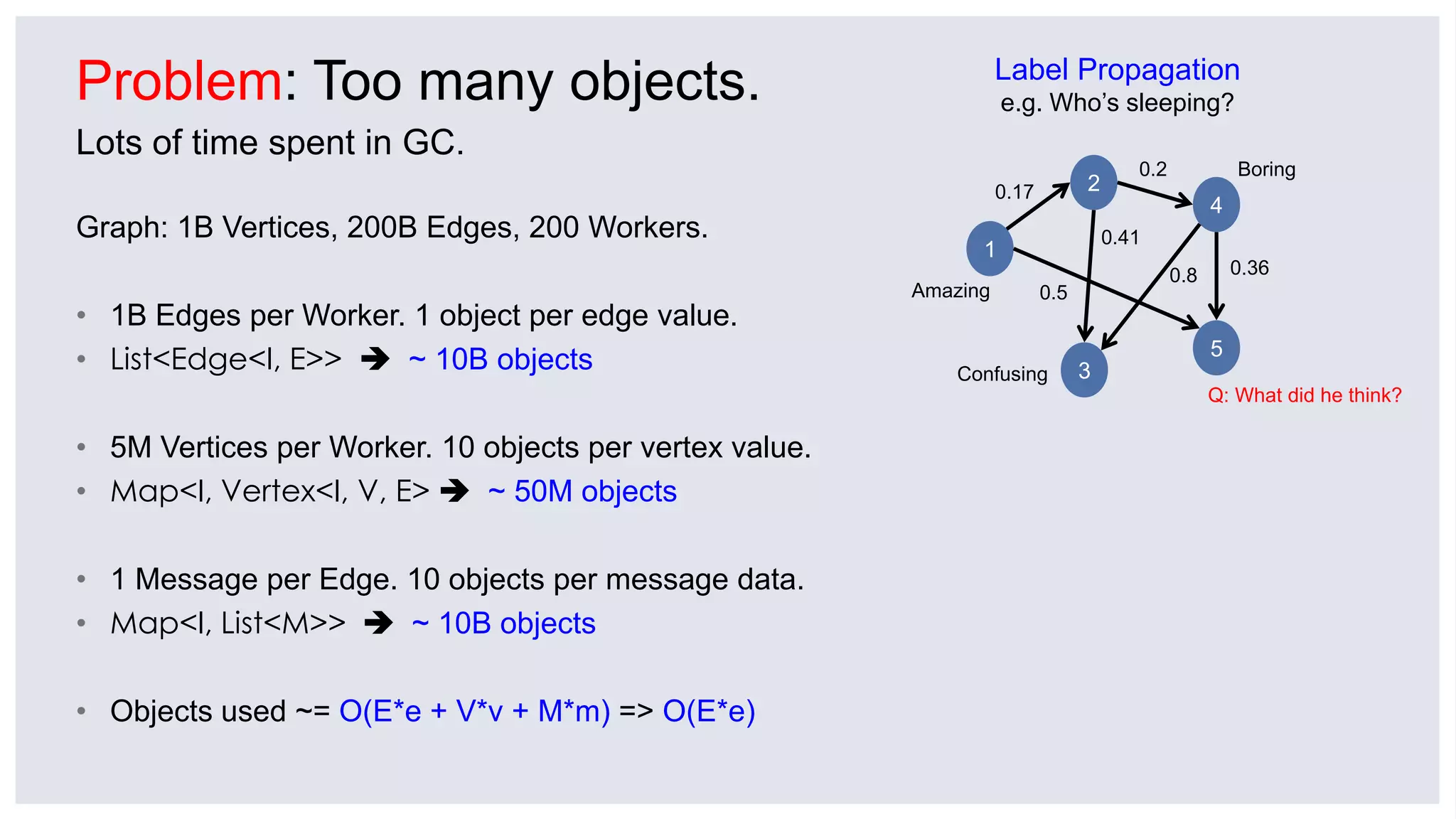

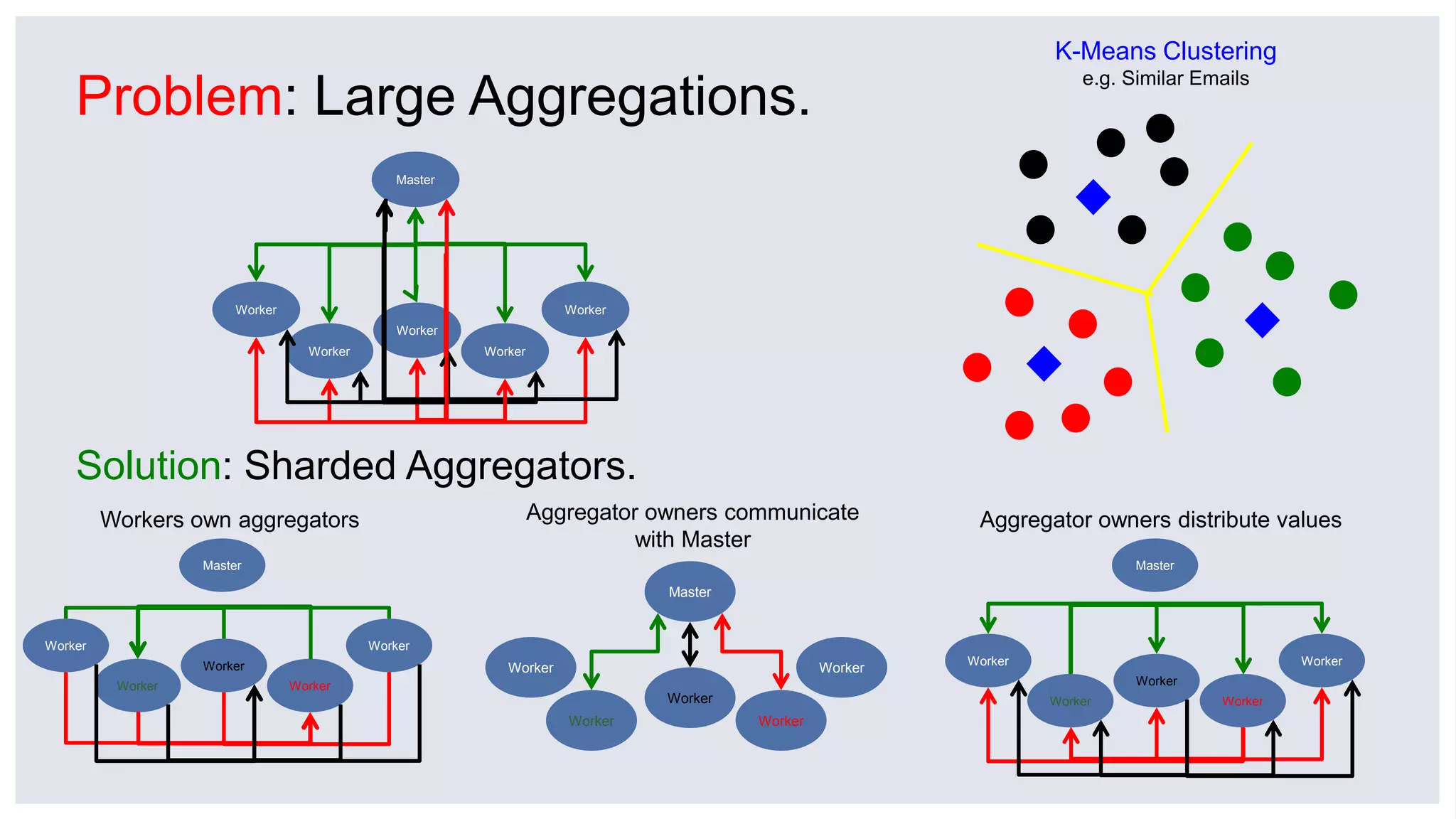

1) The document discusses scaling Apache Giraph, an open-source graph computation engine. It outlines problems that arise when scaling Giraph to large graphs, including worker crashes and master crashes.

2) The proposed solutions are checkpointing to recover from worker crashes, ZooKeeper-based master queue handling to recover from master crashes, and byte arrays with Unsafe serialization to reduce object overhead.
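The checkpointing idea can be illustrated with a toy simulation. This is a minimal sketch and not Giraph's implementation: the class `CheckpointedJob`, the in-memory "checkpoint", and the stand-in computation that increments every value are all assumptions made for illustration. It shows only the control flow: save state every few supersteps and, after a crash, resume from the last saved superstep instead of from the beginning.

```java
/** Hypothetical toy job that checkpoints its state every few supersteps. */
final class CheckpointedJob {
    private final int checkpointInterval;
    private long[] state;
    private long[] checkpointState;   // stand-in for a checkpoint written to durable storage
    private int checkpointSuperstep;  // superstep at which the checkpoint was taken
    private int executedSupersteps;   // counts work done, including redone supersteps

    CheckpointedJob(long[] initialState, int checkpointInterval) {
        if (checkpointInterval <= 0) {
            throw new IllegalArgumentException("checkpointInterval must be positive");
        }
        this.state = initialState.clone();
        this.checkpointState = initialState.clone();
        this.checkpointInterval = checkpointInterval;
    }

    /** Stand-in computation (an assumption): every value grows by one per superstep. */
    private void compute() {
        for (int i = 0; i < state.length; i++) {
            state[i]++;
        }
    }

    /** Runs the job; simulates a single crash just before superstep crashAt (-1 for none). */
    long[] run(int totalSupersteps, int crashAt) {
        int superstep = 0;
        boolean crashed = false;
        while (superstep < totalSupersteps) {
            if (superstep == crashAt && !crashed) {
                crashed = true;
                // Recovery: discard in-flight state and restart from the last checkpoint.
                state = checkpointState.clone();
                superstep = checkpointSuperstep;
                continue;
            }
            compute();
            superstep++;
            executedSupersteps++;
            if (superstep % checkpointInterval == 0) {
                checkpointState = state.clone();
                checkpointSuperstep = superstep;
            }
        }
        return state.clone();
    }

    int executedSupersteps() {
        return executedSupersteps;
    }
}
```

With a checkpoint interval of 2 and a crash before superstep 3, only superstep 2 is redone, so the job produces the same result as a crash-free run at the cost of one extra superstep.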

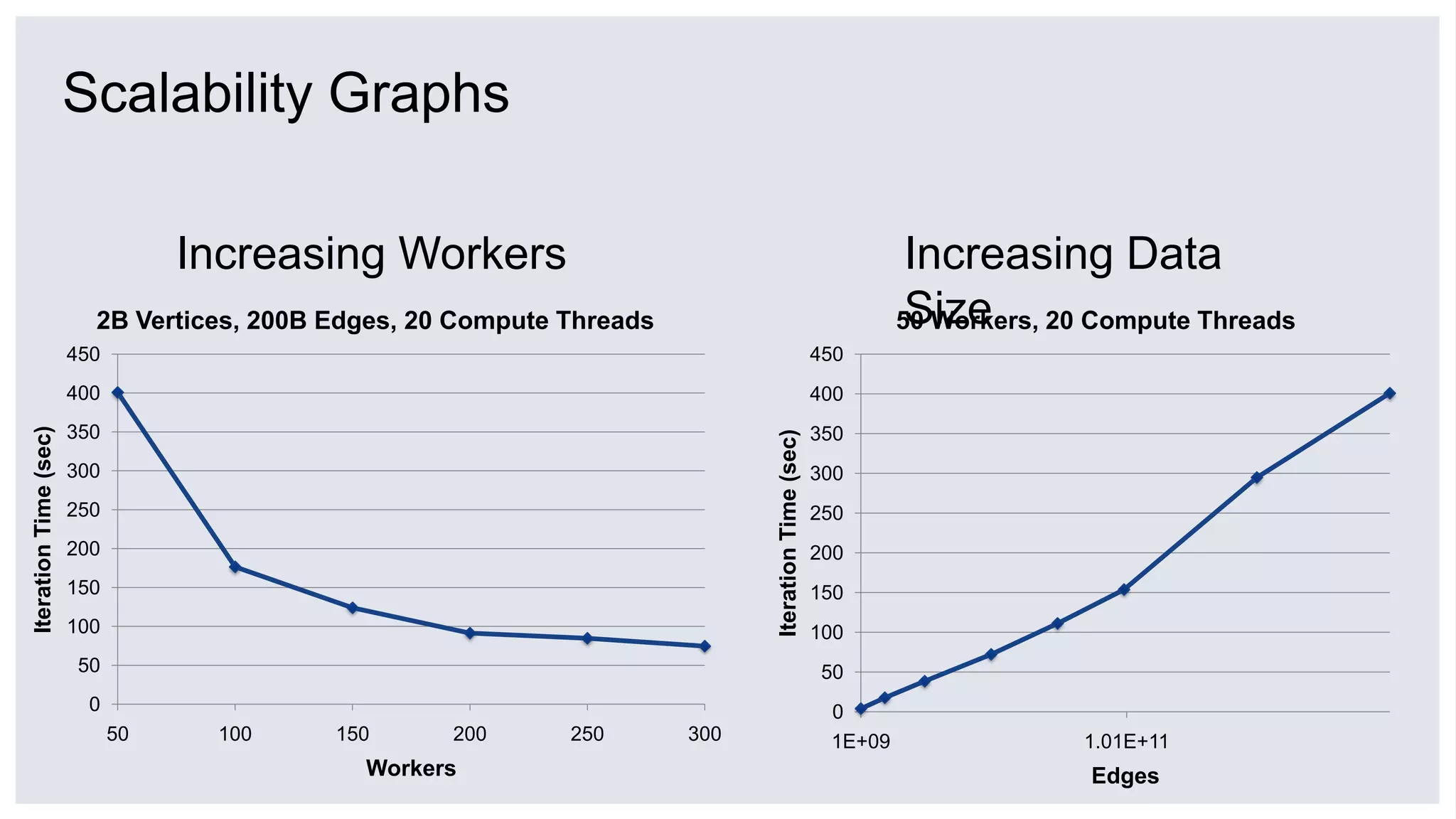

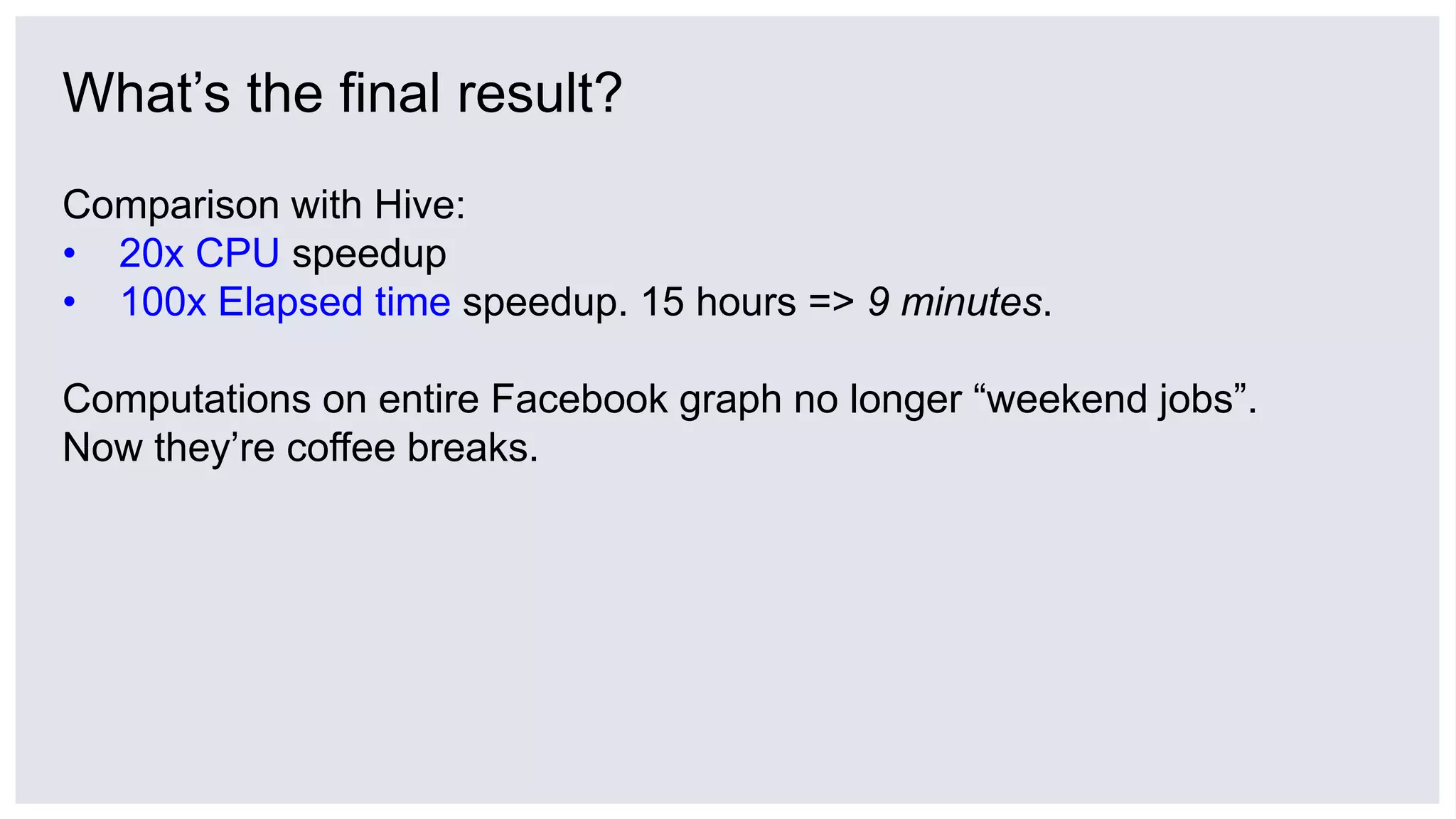

3) Test results show that Giraph scales to graphs with billions of vertices and edges on a cluster of 50 workers, using 20x less CPU time and 100x less elapsed time than Hive for similar graph computations.

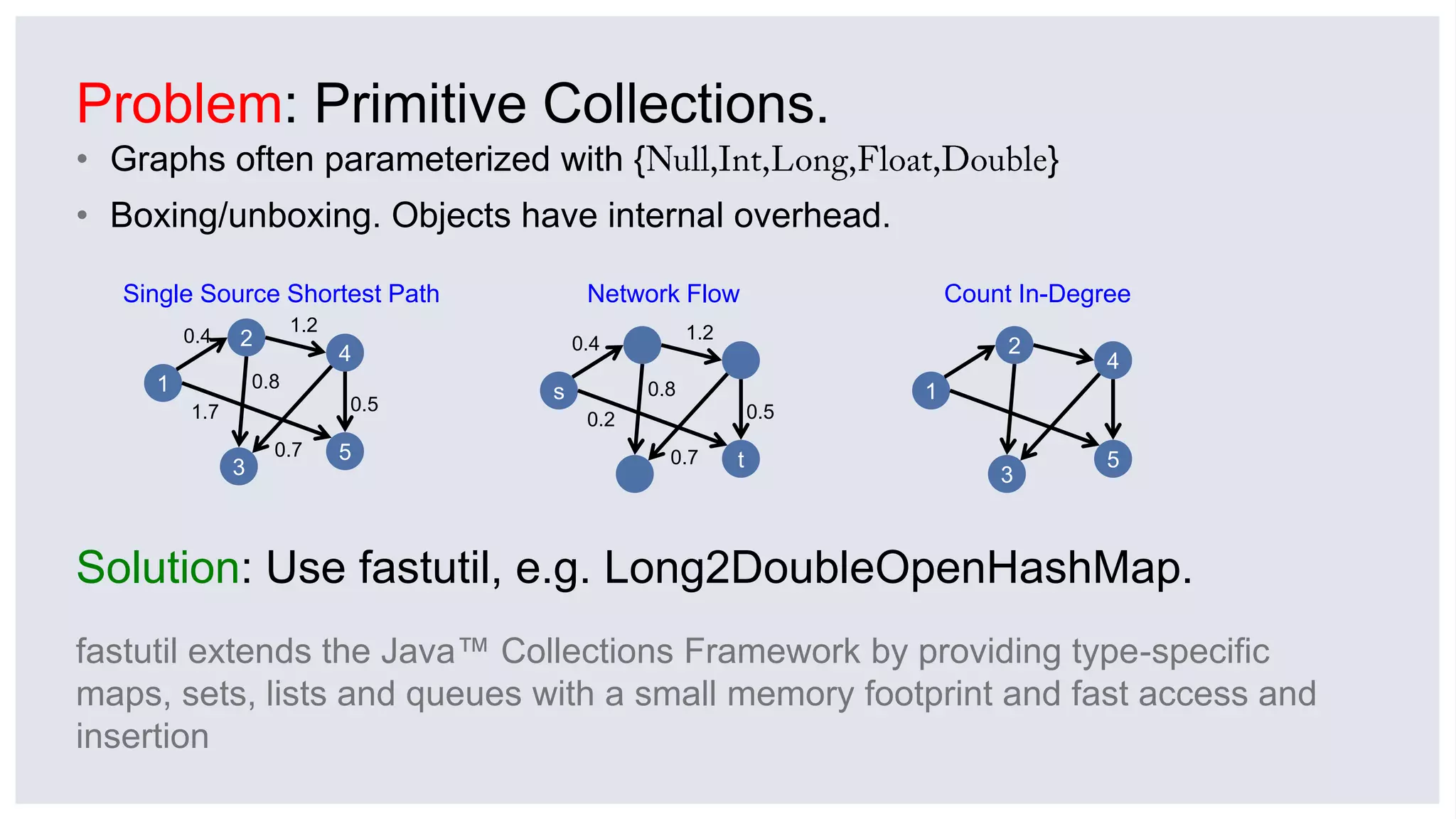

![Problem: Too many objects.

Lots of time spent in GC.

Solution: byte[]

• Serialize messages, edges, and vertices.

• Iterable interface with representative object.

(Diagram: three inputs, each read through a next() call.)

Objects per worker ~= O(V)

(Figure: Label Propagation example, e.g. “Who’s sleeping?”, with the labels Boring, Amazing, and Confusing and the question “What did he think?”)](https://image.slidesharecdn.com/2013-130910141143-phpapp02/75/2013-09-10-Giraph-at-London-Hadoop-Users-Group-16-2048.jpg)
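The "Iterable interface with representative object" idea can be sketched as follows. This is a minimal illustration under stated assumptions, not Giraph's actual classes: `ReusableEdge` and `ByteArrayEdges` are hypothetical names, and the fixed layout of one `long` target ID plus one `float` weight per edge is chosen only for the example. All edges live in a single `byte[]`, and the iterator refills one shared object on every `next()` call instead of allocating an object per edge.

```java
import java.nio.ByteBuffer;
import java.util.Iterator;
import java.util.NoSuchElementException;

/** Hypothetical mutable edge; a single instance is reused for every element. */
final class ReusableEdge {
    long targetId;
    float weight;
}

/** Hypothetical edge store: all edges of one vertex packed into one byte[]. */
final class ByteArrayEdges implements Iterable<ReusableEdge> {
    private static final int EDGE_BYTES = Long.BYTES + Float.BYTES;
    private final byte[] data;

    ByteArrayEdges(long[] targets, float[] weights) {
        if (targets.length != weights.length) {
            throw new IllegalArgumentException("targets and weights differ in length");
        }
        // Serialize every edge into one contiguous heap buffer.
        ByteBuffer buf = ByteBuffer.allocate(targets.length * EDGE_BYTES);
        for (int i = 0; i < targets.length; i++) {
            buf.putLong(targets[i]).putFloat(weights[i]);
        }
        this.data = buf.array();
    }

    @Override
    public Iterator<ReusableEdge> iterator() {
        final ByteBuffer in = ByteBuffer.wrap(data);
        final ReusableEdge representative = new ReusableEdge();
        return new Iterator<ReusableEdge>() {
            @Override
            public boolean hasNext() {
                return in.remaining() >= EDGE_BYTES;
            }

            @Override
            public ReusableEdge next() {
                if (!hasNext()) {
                    throw new NoSuchElementException();
                }
                // Deserialize the next edge into the shared object; nothing is allocated here.
                representative.targetId = in.getLong();
                representative.weight = in.getFloat();
                return representative;
            }
        };
    }
}
```

The trade-off is that the returned object is only valid until the next call to `next()`. A caller that needs to keep an edge has to copy its fields.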

![Problem: Serialization of byte[]

• DataInput? Kryo? Custom?

Solution: Unsafe

• Dangerous. No formal API. Volatile. Non-portable (Oracle JVM only).

• AWESOME. As fast as it gets.

• True native. Essentially C: *(long*)(data+offset);](https://image.slidesharecdn.com/2013-130910141143-phpapp02/75/2013-09-10-Giraph-at-London-Hadoop-Users-Group-17-2048.jpg)
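The C expression `*(long*)(data+offset)` on the slide has a rough Java counterpart in `sun.misc.Unsafe`. The sketch below is an illustration, not Giraph's code: the class `UnsafeLongAccess` and its methods are hypothetical, and the explicit bounds check is added here because `Unsafe` performs none. It assumes a JVM that still exposes `sun.misc.Unsafe`; recent JDK releases deprecate these memory-access methods and may print warnings.

```java
import java.lang.reflect.Field;
import sun.misc.Unsafe;

/** Hypothetical helper that reads and writes longs directly inside a byte[]. */
final class UnsafeLongAccess {
    private static final Unsafe UNSAFE = loadUnsafe();
    // Offset of element 0 from the start of a byte[] object.
    private static final long BYTE_ARRAY_BASE = UNSAFE.arrayBaseOffset(byte[].class);

    private UnsafeLongAccess() {
    }

    private static Unsafe loadUnsafe() {
        try {
            // There is no public accessor, so the singleton is fetched reflectively.
            Field field = Unsafe.class.getDeclaredField("theUnsafe");
            field.setAccessible(true);
            return (Unsafe) field.get(null);
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("sun.misc.Unsafe is not available", e);
        }
    }

    private static void checkBounds(byte[] data, int offset) {
        if (offset < 0 || offset > data.length - Long.BYTES) {
            throw new IndexOutOfBoundsException("offset " + offset);
        }
    }

    /** Writes eight bytes in the platform's native byte order. */
    static void putLong(byte[] data, int offset, long value) {
        checkBounds(data, offset);
        UNSAFE.putLong(data, BYTE_ARRAY_BASE + offset, value);
    }

    /** Reads eight bytes in the platform's native byte order. */
    static long getLong(byte[] data, int offset) {
        checkBounds(data, offset);
        return UNSAFE.getLong(data, BYTE_ARRAY_BASE + offset);
    }
}
```

Because values are stored in native byte order, bytes written this way are not portable between machines with different endianness. That is one concrete form of the "non-portable" warning on the slide.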

![Lessons Learned

• Coordinating is a zoo. Be resilient with ZooKeeper.

• Efficient networking is hard. Let Netty help.

• Primitive collections, primitive performance. Use fastutil.

• byte[] is simple yet powerful.

• Being Unsafe can be a good thing.

• Have a graph? Use Giraph.](https://image.slidesharecdn.com/2013-130910141143-phpapp02/75/2013-09-10-Giraph-at-London-Hadoop-Users-Group-22-2048.jpg)

![Problem: Mutations

• Synchronization.

• Load balancing.

Solution: Reshuffle resources

• Mutations handled at barrier between supersteps.

• Master rebalances vertex assignments to optimize distribution.

• Handle mutations in batches.

• Avoid if using byte[].

• Favor algorithms which don’t mutate graph.](https://image.slidesharecdn.com/2013-130910141143-phpapp02/75/2013-09-10-Giraph-at-London-Hadoop-Users-Group-26-2048.jpg)
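Handling mutations in batches at the barrier between supersteps can be sketched as below. This is a simplified, single-process illustration with hypothetical names (`DeferredMutationGraph`, `applyMutationsAtBarrier`), not Giraph's mutation API. Applying removals before additions is an assumption made for the example. During a superstep, requests are only queued; the vertex set changes once, when the barrier is reached.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Hypothetical graph partition that defers vertex mutations to the superstep barrier. */
final class DeferredMutationGraph {
    private final Set<Long> vertices = new HashSet<>();
    private final List<Long> pendingAdds = new ArrayList<>();
    private final List<Long> pendingRemoves = new ArrayList<>();

    /** Queues an addition; the graph is unchanged until the barrier. */
    void requestAddVertex(long id) {
        pendingAdds.add(id);
    }

    /** Queues a removal; the graph is unchanged until the barrier. */
    void requestRemoveVertex(long id) {
        pendingRemoves.add(id);
    }

    boolean contains(long id) {
        return vertices.contains(id);
    }

    int size() {
        return vertices.size();
    }

    /**
     * Called once between supersteps. Applies all queued requests in one batch
     * (removals first, then additions: an ordering assumed for this sketch)
     * and returns the number of requests processed.
     */
    int applyMutationsAtBarrier() {
        int processed = pendingRemoves.size() + pendingAdds.size();
        vertices.removeAll(pendingRemoves);
        vertices.addAll(pendingAdds);
        pendingRemoves.clear();
        pendingAdds.clear();
        return processed;
    }
}
```

Since the vertex set never changes in the middle of a superstep, the compute phase needs no synchronization on the graph structure.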