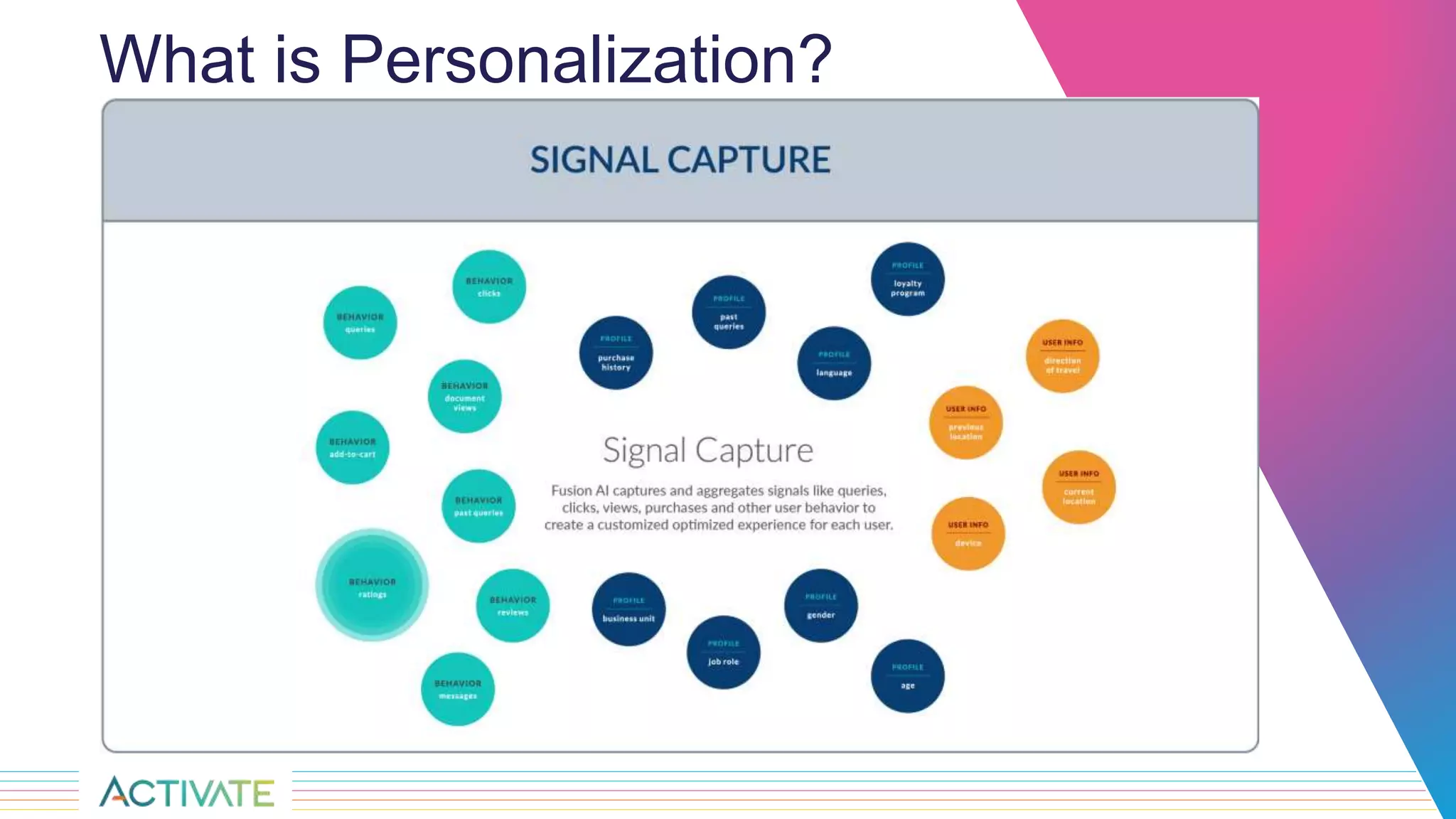

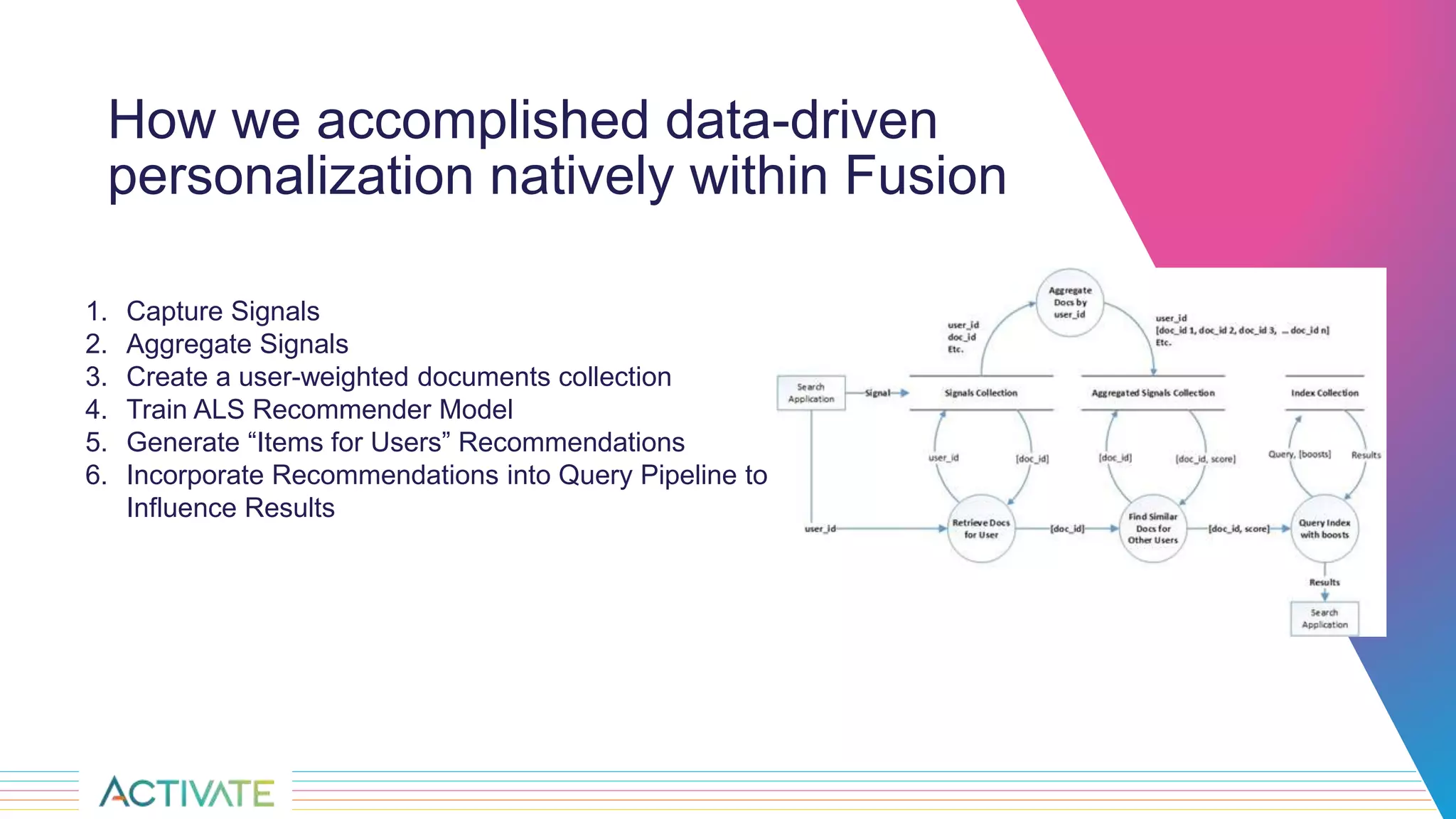

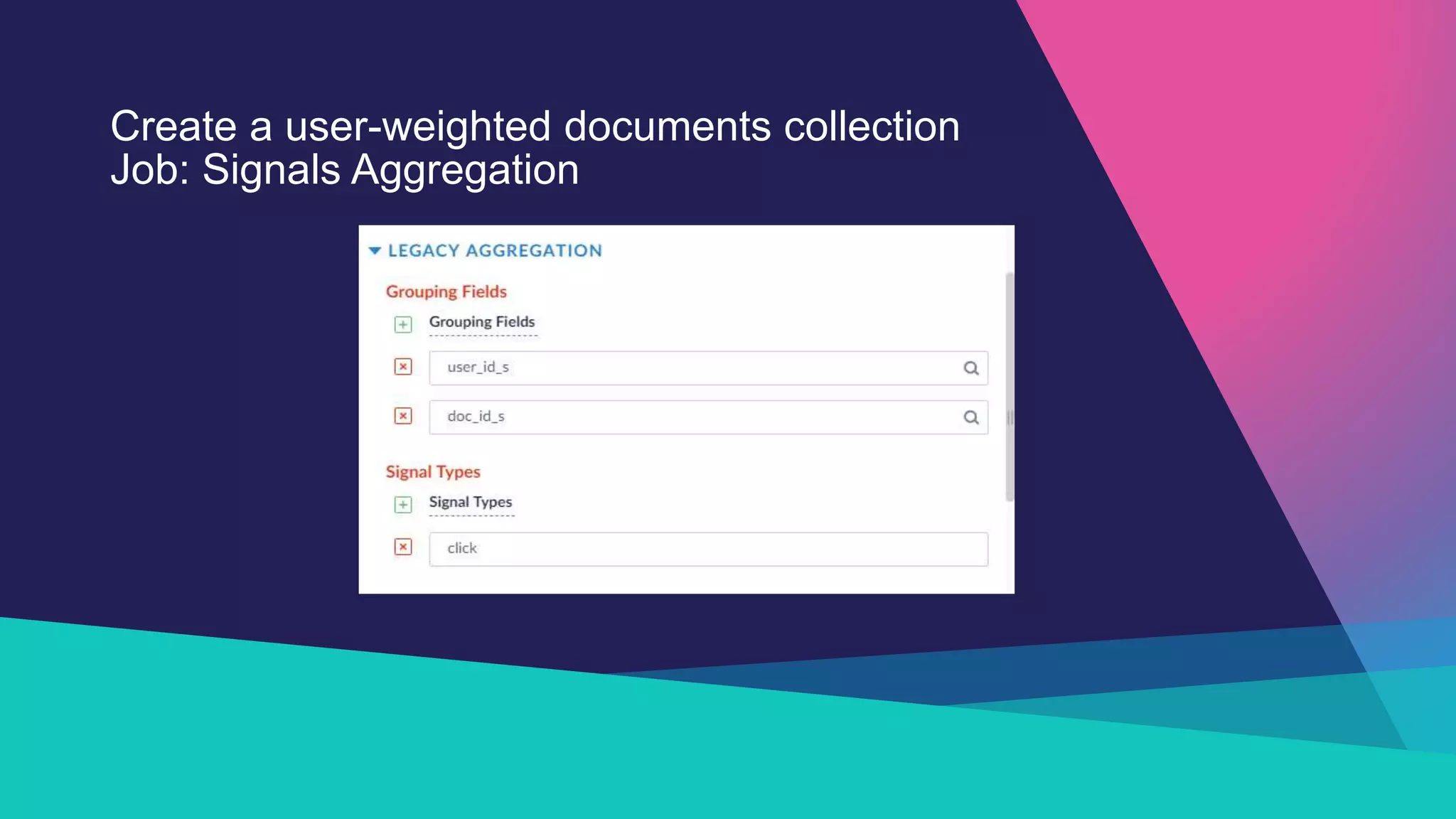

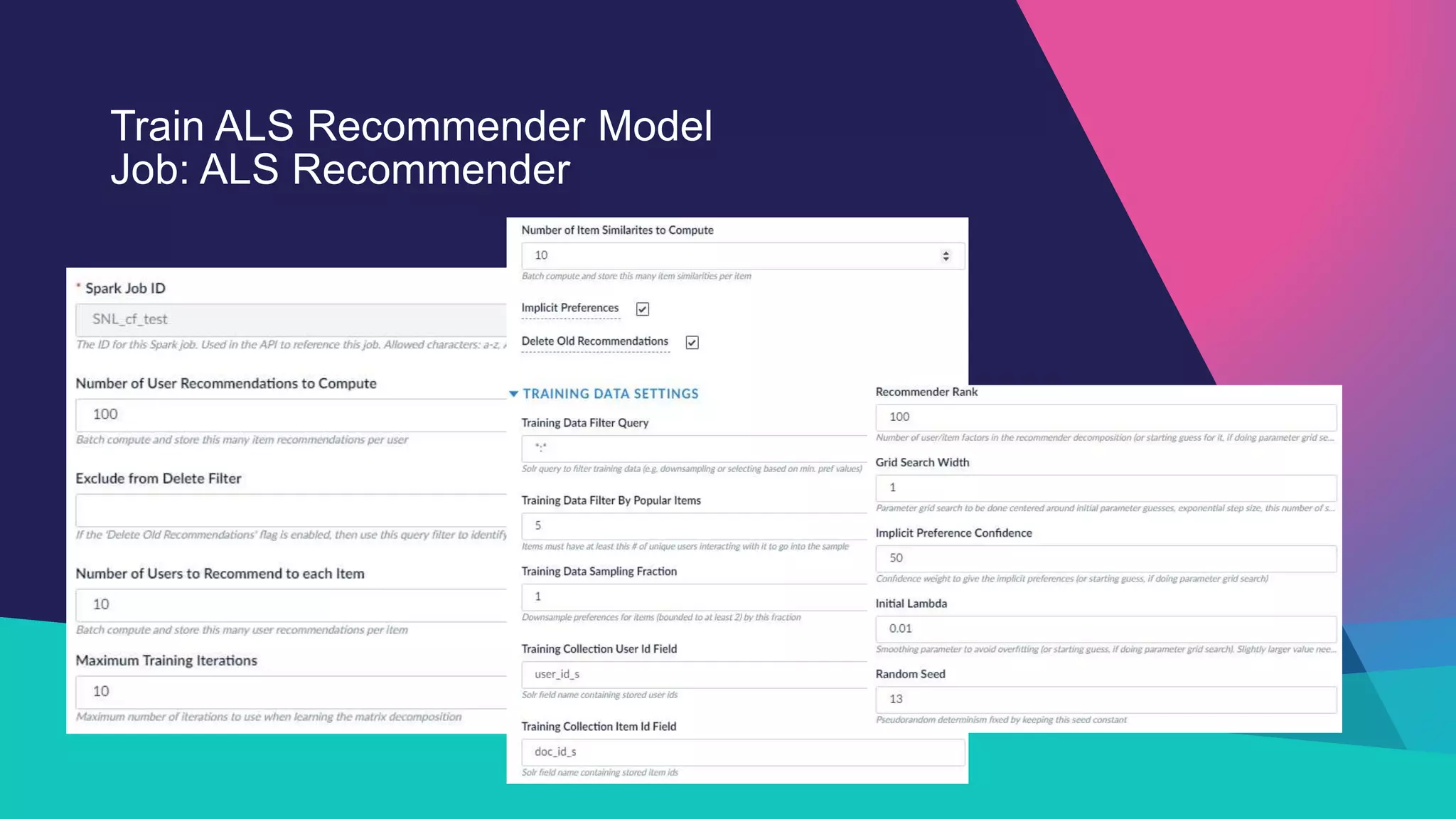

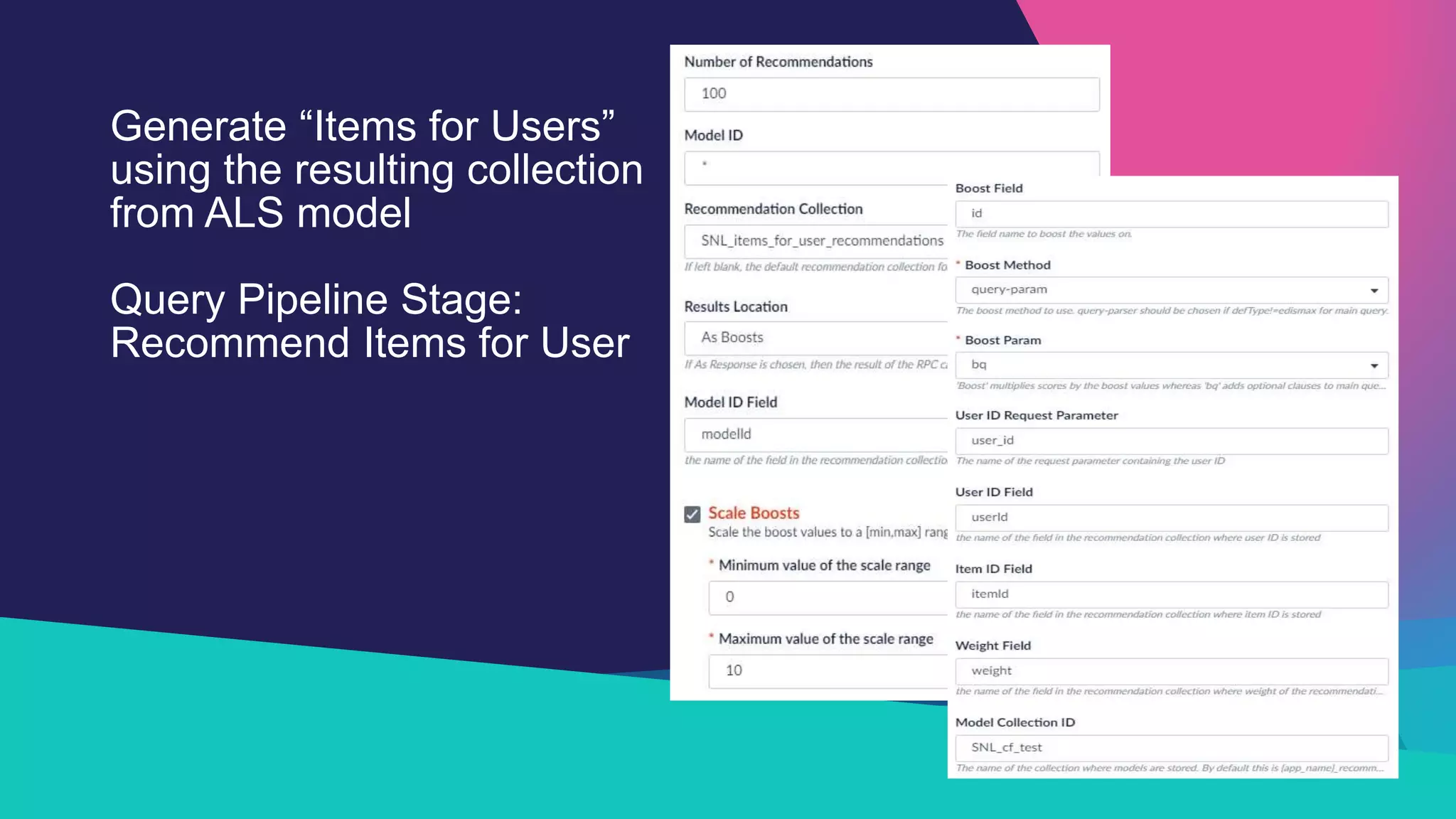

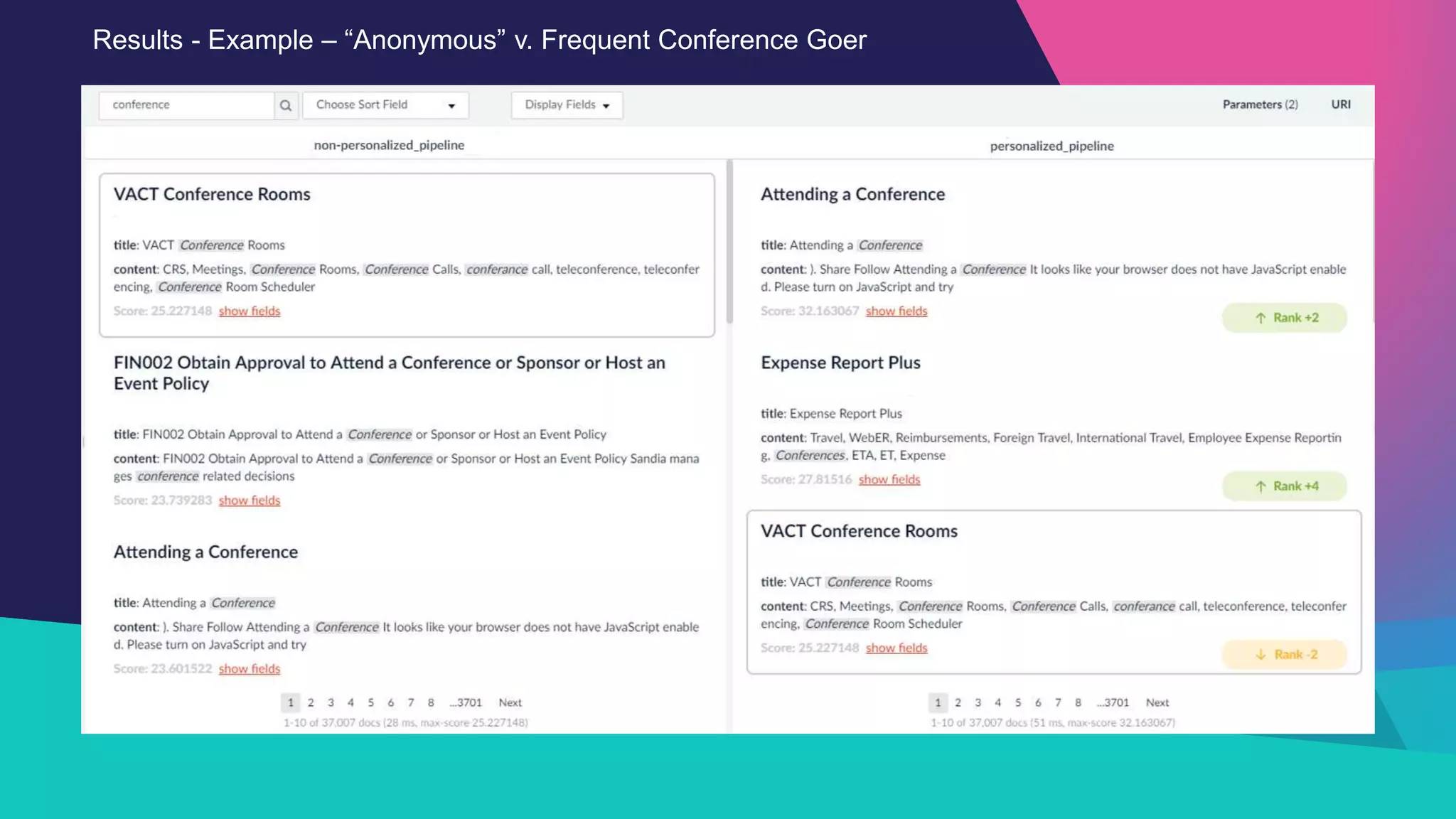

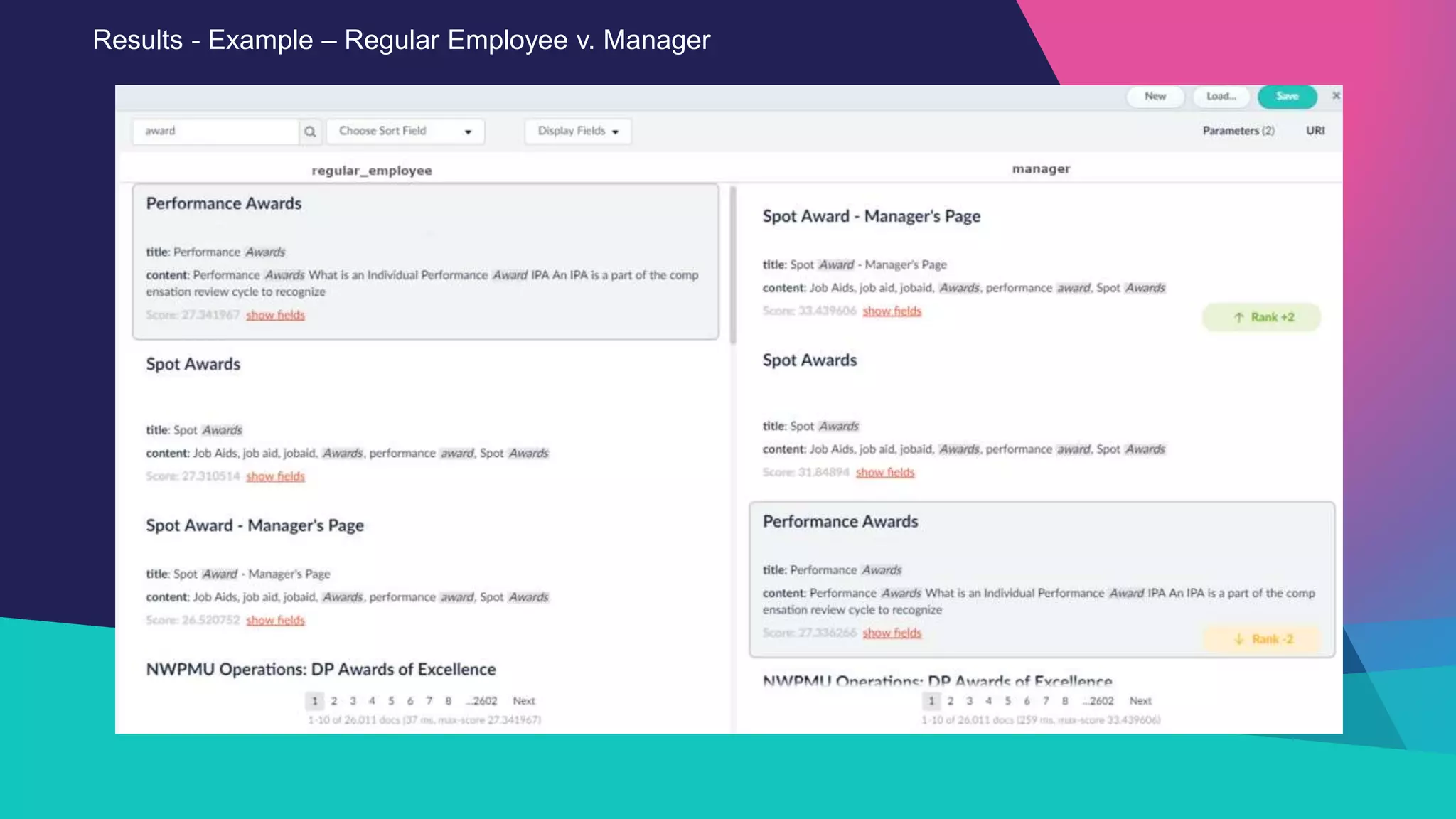

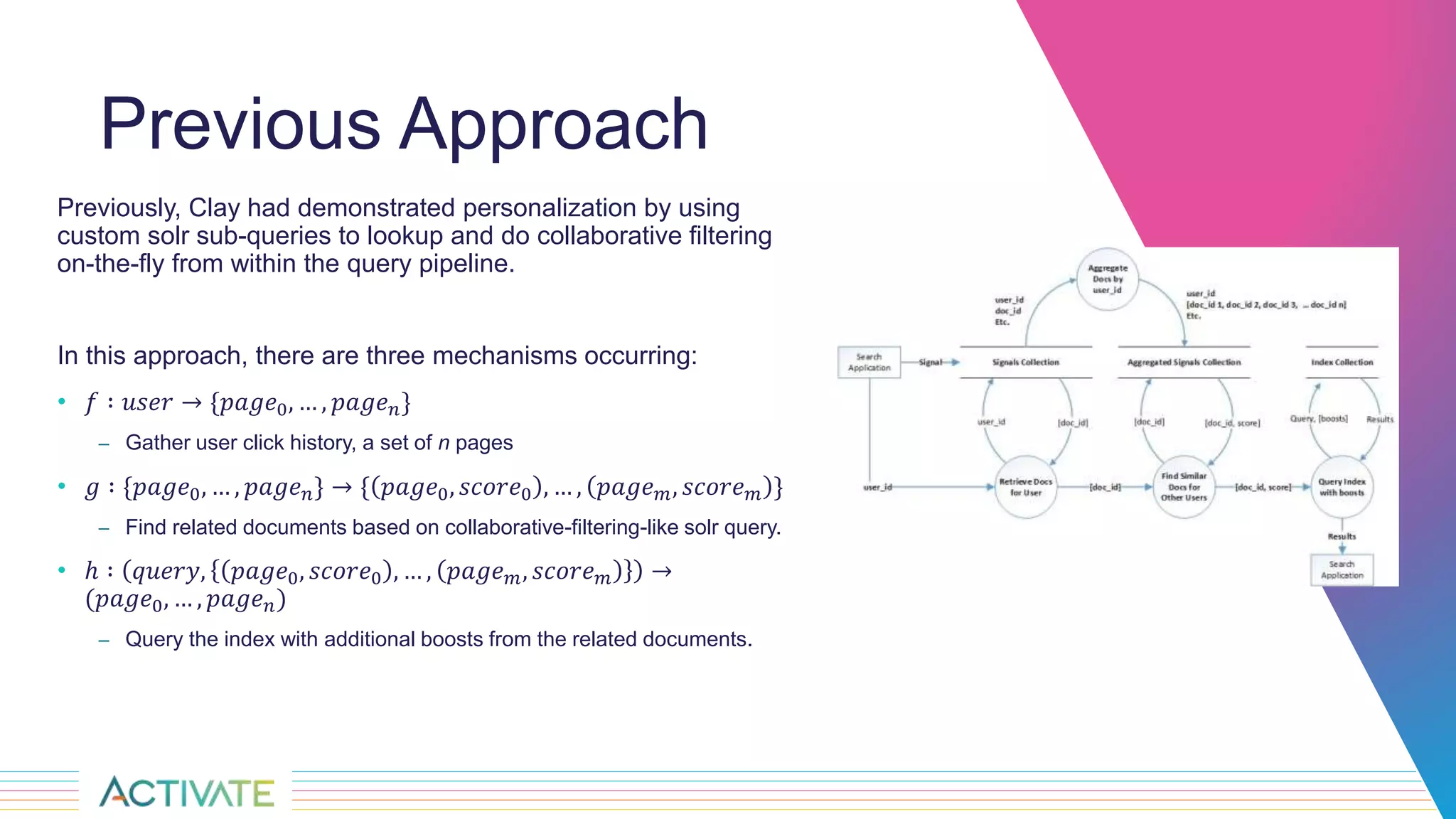

This Sandia National Laboratories presentation describes the development of personalized search, which ranks results according to each user's click history and preferences. Taking a data-driven approach, the team configured personalized search algorithms within the Fusion platform to improve result relevance in an enterprise setting. The presentation reports significant improvements over standard, non-personalized search, and with them a better user experience.
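
To make the idea of click-based personalization concrete, here is a minimal sketch of re-ranking results using one user's click history. It is an illustration only: it is not the Fusion API and not the method used in the presentation. The function name `personalize`, the `boost` parameter, the multiplicative scoring formula, and the sample data are all assumptions made for this example.

```python
from collections import Counter


def personalize(results, click_history, boost=0.5):
    """Re-rank (doc_id, base_score) pairs using one user's past clicks.

    Hypothetical scoring: each base score is multiplied by
    1 + boost * (share of the user's clicks that went to that document).
    """
    clicks = Counter(click_history)
    total = sum(clicks.values())

    def score(item):
        doc_id, base = item
        # Documents the user never clicked keep their base score.
        share = clicks[doc_id] / total if total else 0.0
        return base * (1.0 + boost * share)

    return sorted(results, key=score, reverse=True)


# Made-up data: base relevance scores from a standard (non-personalized) search.
results = [("doc_a", 1.0), ("doc_b", 0.9), ("doc_c", 0.8)]
# Made-up click history for a single user.
history = ["doc_b", "doc_b", "doc_c"]

ranked = personalize(results, history)
print([doc_id for doc_id, _ in ranked])
```

With this sample data, `doc_b` moves ahead of `doc_a` because two of the user's three clicks went to it. A user with no click history gets the standard ordering back unchanged.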