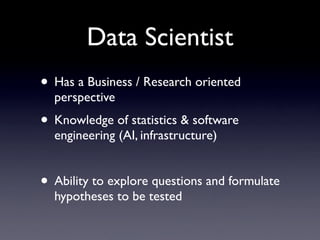

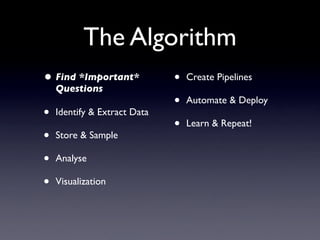

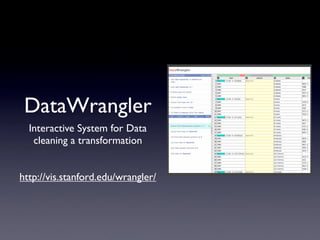

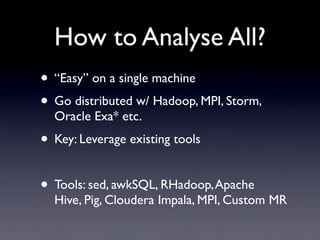

This document provides an overview of the data science process and the tools used in a data science project. It covers identifying the important business questions to answer with data; extracting relevant data from its sources; cleaning and sampling that data; analyzing samples to build models and test hypotheses; applying the results to the full data set; visualizing the findings; automating and deploying the solution; and learning and improving continuously through an iterative process. Key tools mentioned include Hadoop, R, Python, Excel, and various data wrangling, analysis, and visualization tools.
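The clean, sample, model, and apply steps can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the method from the slides. The data is synthetic: (x, y) pairs generated from an assumed line y = 2x + 1 plus noise, with some missing values. All function names (`make_raw_data`, `clean`, `sample`, `fit_line`, `apply_model`, `mean_abs_error`) are hypothetical. The model is an ordinary least-squares line fit on a small random sample. That fit is then applied to the full cleaned data set, and its error is measured there.

```python
import random
import statistics

# Hypothetical raw data: (x, y) pairs from an assumed line y = 2x + 1 plus noise.
# None marks a missing value that the cleaning step must remove.
def make_raw_data(n, seed=0):
    rng = random.Random(seed)
    rows = []
    for i in range(n):
        x = float(i)
        y = 2.0 * x + 1.0 + rng.gauss(0, 0.5)
        # Every 50th row gets a missing y to simulate dirty data.
        rows.append((x, None if i % 50 == 0 else y))
    return rows

def clean(rows):
    # Cleaning step: drop rows with missing values.
    return [(x, y) for x, y in rows if y is not None]

def sample(rows, k, seed=1):
    # Sampling step: draw a small random subset to analyze first.
    return random.Random(seed).sample(rows, k)

def fit_line(rows):
    # Modeling step: ordinary least-squares fit of y = a*x + b.
    xs = [x for x, _ in rows]
    ys = [y for _, y in rows]
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in rows)
    a = sxy / sxx
    return a, my - a * mx

def apply_model(model, rows):
    # Apply step: predict y for every row of the full data set.
    a, b = model
    return [a * x + b for x, _ in rows]

def mean_abs_error(model, rows):
    # Evaluate the sample-trained model against the full data set.
    preds = apply_model(model, rows)
    return statistics.fmean(abs(p - y) for p, (_, y) in zip(preds, rows))

raw = make_raw_data(10_000)
full = clean(raw)
model = fit_line(sample(full, 200))
slope, intercept = model
# Hypothesis check (assumed): the slope learned from the sample should be close to 2.
print(f"slope={slope:.3f} intercept={intercept:.3f}")
print(f"MAE on full data: {mean_abs_error(model, full):.3f}")
```

Fitting on a sample keeps each iteration fast while hypotheses are still changing. The full data set is only touched once the model is worth checking at scale. On a cluster tool such as Hadoop, the same split would apply, but the full-data step would run as a distributed job instead of a Python list comprehension.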