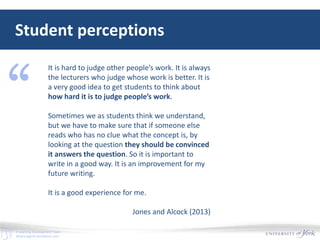

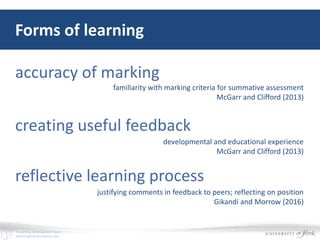

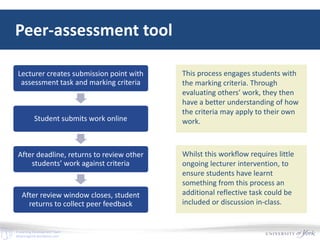

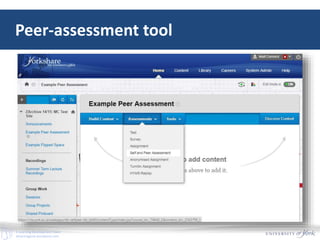

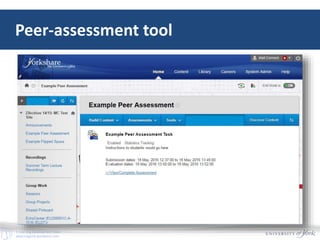

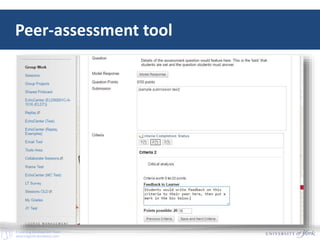

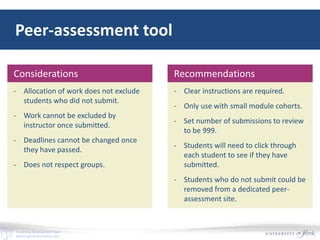

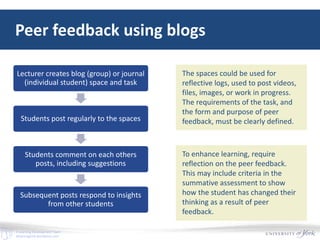

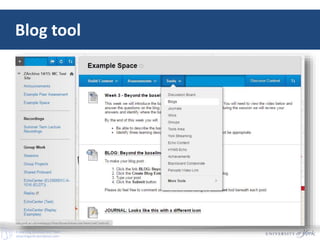

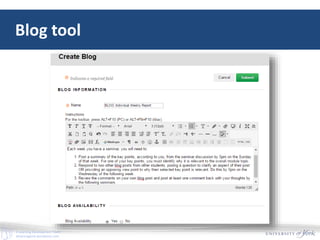

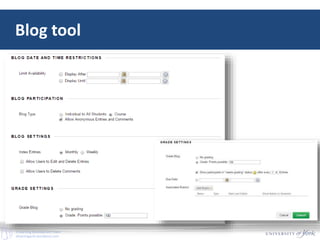

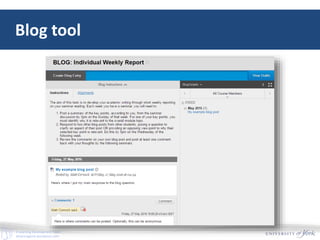

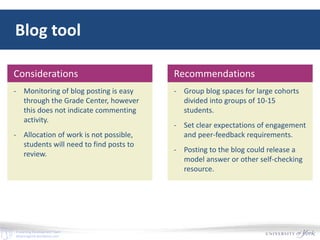

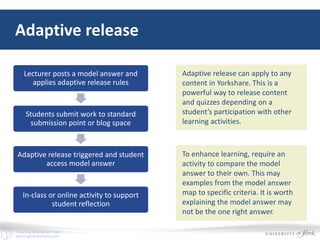

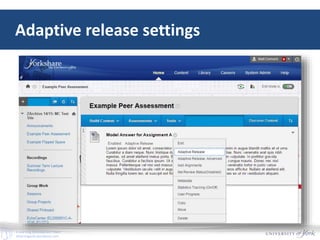

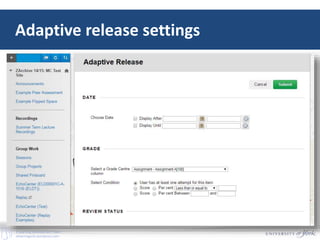

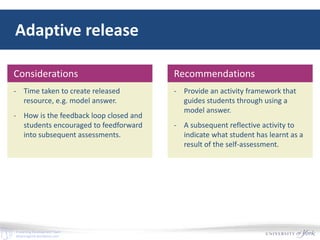

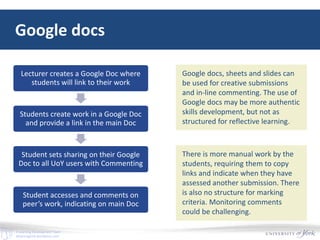

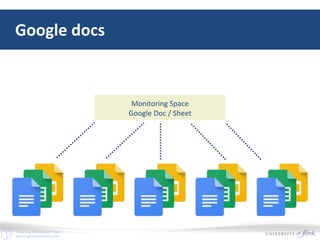

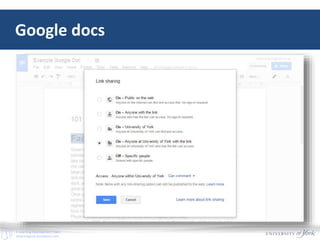

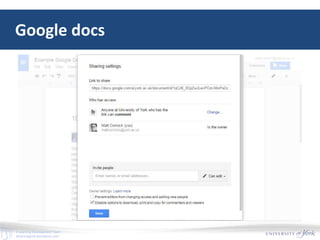

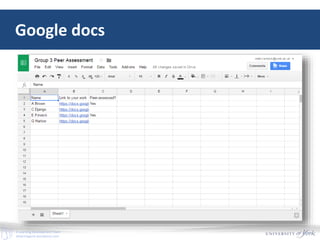

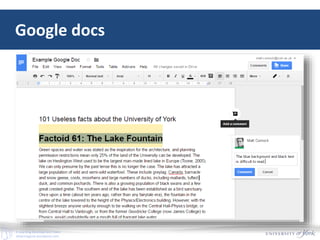

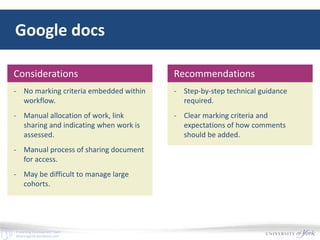

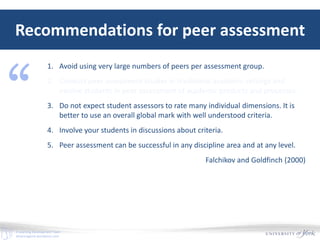

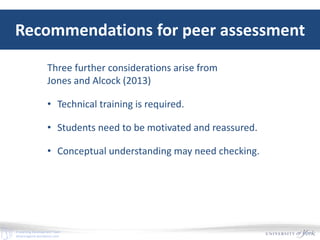

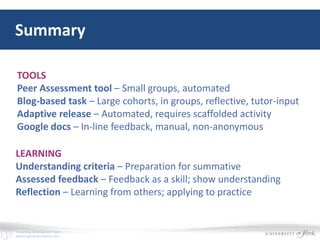

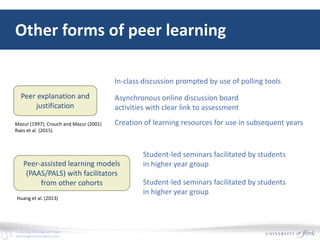

The document discusses peer assessment strategies in e-learning. It highlights tools and approaches such as blogs, Google Docs, and adaptive release of model answers. It stresses the importance of students engaging with marking criteria and reflecting on their learning, and it offers recommendations for effective implementation and for addressing potential challenges. The document also cites studies and frameworks that support integrating peer assessment into academic settings.