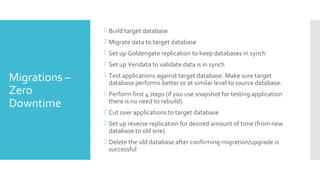

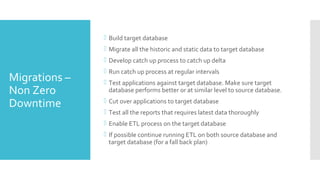

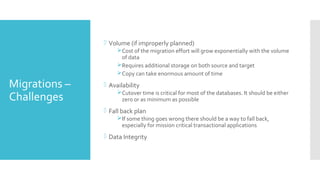

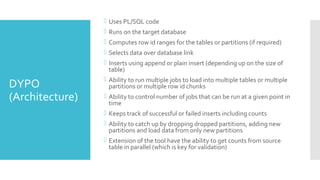

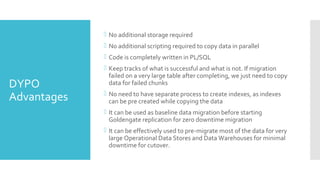

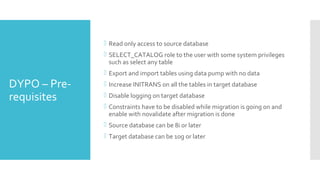

The document discusses approaches, challenges, and solutions for Oracle database migrations and upgrades, emphasizing the need for zero or minimal downtime. It outlines several upgrade types, including in-place and out-of-place upgrades, and describes the tools and techniques used, such as Oracle GoldenGate and custom-built solutions like DYPO for efficient data migration. Key challenges include managing data volume and availability, ensuring data integrity, and developing effective fallback plans, particularly for large databases.