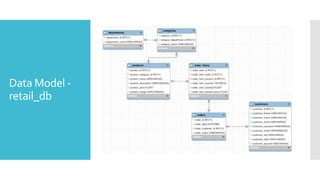

The document outlines a hands-on workshop on data processing using PySpark with Python, hosted by Itversity, which includes a recap of Python basics and an introduction to Spark. Attendees will learn to create Resilient Distributed Datasets (RDDs), read from various file formats, and perform standard transformations and actions. The workshop provides access to labs and requires prior knowledge of programming in Python or Scala.