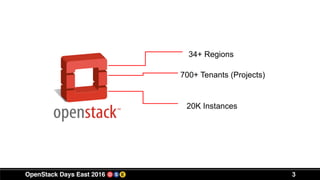

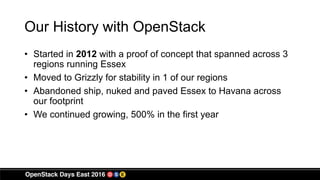

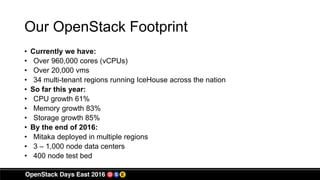

This document discusses Comcast's use of OpenStack for cloud computing. Comcast started with OpenStack in 2012, running three regions on Essex, and has upgraded to newer releases over time. It currently runs Icehouse across 34 regions, serving over 700 tenants with more than 960,000 cores and 20,000 instances (VMs), and plans to deploy Mitaka across multiple regions this year.