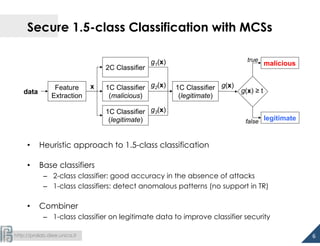

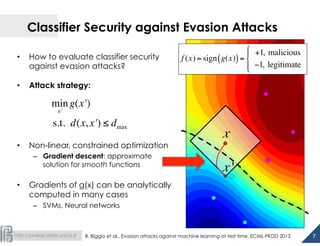

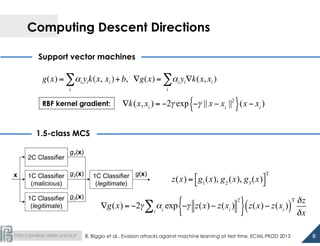

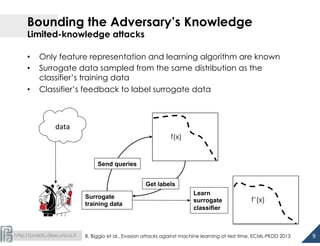

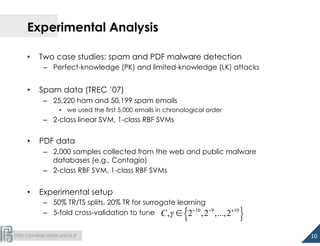

The document discusses the development of 1.5-class multiple classifier systems (MCSs) aimed at improving security against evasion attacks in machine learning applications, particularly spam filtering and PDF malware detection. It describes the methodology used to evaluate classifier security and reports experiments showing that MCSs retain high accuracy while remaining secure against such attacks. Future work aims to formalize the trade-off between security and accuracy and to address robustness against poisoning attacks.

![http://pralab.diee.unica.it

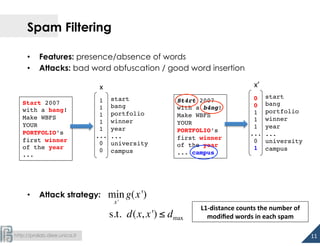

Experiments on PDF Malware Detection

• PDF: hierarchy of interconnected objects (keyword/value pairs)

• Attack strategy

– adding up to dmax objects to the PDF

– removing objects may

compromise the PDF file

(and embedded malware code)!

/Type

2

/Page

1

/Encoding

1

…

13

0

obj

<<

/Kids

[

1

0

R

11

0

R

]

/Type

/Page

...

>>

end

obj

17

0

obj

<<

/Type

/Encoding

/Differences

[

0

/C0032

]

>>

endobj

Features:

keyword

count

min

x'

g(x')

s.t. d(x, x') ≤ dmax

x ≤ x'

12](https://image.slidesharecdn.com/biggio15-mcs-150701164706-lva1-app6891/85/Battista-Biggio-MCS-2015-June-29-July-1-Guenzburg-Germany-1-5-class-MCSs-for-Secure-Learning-against-Evasion-Attacks-at-Test-Time-12-320.jpg)
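The keyword-count features can be sketched as follows. This is a minimal illustration, not the authors' extractor: it assumes a hypothetical keyword vocabulary `KEYWORDS` and a simplified regular expression for PDF name tokens, and it ignores compressed streams and `#xx`-escaped names that real PDFs may contain. The helper `keyword_counts` and the `SAMPLE` text (an abridged copy of the two objects on the slide) are illustrative names.

```python
import re
from collections import Counter

# Hypothetical keyword vocabulary; the slide lists only /Type, /Page, /Encoding, ...
KEYWORDS = ["/Type", "/Page", "/Encoding", "/Kids", "/JavaScript"]

# Simplified PDF name token: a slash followed by letters/digits
# (ignores #xx escapes and names hidden inside compressed streams).
NAME_RE = re.compile(r"/[A-Za-z0-9]+")


def keyword_counts(pdf_text: str, vocabulary: list[str] = KEYWORDS) -> list[int]:
    """Count how often each vocabulary keyword occurs as a whole name token."""
    counts = Counter(NAME_RE.findall(pdf_text))
    return [counts[k] for k in vocabulary]  # Counter yields 0 for missing keys


# Abridged copy of the two objects shown on the slide.
SAMPLE = """13 0 obj
<< /Kids [ 1 0 R 11 0 R ]
   /Type /Page >>
endobj
17 0 obj
<< /Type /Encoding
   /Differences [ 0 /C0032 ] >>
endobj"""

print(dict(zip(KEYWORDS, keyword_counts(SAMPLE))))
# {'/Type': 2, '/Page': 1, '/Encoding': 1, '/Kids': 1, '/JavaScript': 0}
```

Because the regex matches whole name tokens, `/Pages` or `/PageLabels` are not counted as `/Page`; the counts for `/Type`, `/Page` and `/Encoding` match the values listed on the slide.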
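The evasion problem can be approximated with a simple greedy search. The sketch below rests on stated assumptions and is not necessarily the optimizer used in the paper: it takes $d(x, x')$ to be the L1 distance (the number of added objects), adds one object at a time by incrementing the keyword count that lowers $g$ the most, and never decreases a count, so $x \le x'$ holds throughout. The function `greedy_evasion` and the linear discriminant in the usage lines are hypothetical.

```python
from typing import Callable

import numpy as np


def greedy_evasion(x: np.ndarray, g: Callable[[np.ndarray], float], d_max: int) -> np.ndarray:
    """Greedy sketch of  min g(x')  s.t.  ||x' - x||_1 <= d_max  and  x <= x'.

    Hypothetical helper: each step adds one object, i.e. increments one keyword
    count by 1, choosing the feature whose increment lowers g the most.
    Counts are only ever increased, so x <= x' holds element-wise.
    """
    x_adv = x.astype(float)  # astype returns a new array, so x is untouched
    for _ in range(d_max):  # each iteration spends one unit of the L1 budget
        best_score, best_k = g(x_adv), None
        for k in range(x_adv.size):
            x_adv[k] += 1  # tentatively add one occurrence of keyword k
            score = g(x_adv)
            x_adv[k] -= 1  # undo the tentative change
            if score < best_score:
                best_score, best_k = score, k
        if best_k is None:  # no single addition lowers g any further
            break
        x_adv[best_k] += 1
    return x_adv


# Usage with a hypothetical linear discriminant (malicious if g(x) > 0).
w = np.array([0.5, -0.3, 1.0, -0.8])


def g(v: np.ndarray) -> float:
    return float(w @ v)


x = np.array([2, 1, 1, 0])  # keyword counts of a (hypothetical) malicious PDF
x_adv = greedy_evasion(x, g, d_max=3)
print(x_adv, g(x), g(x_adv))  # all three added objects go to the last keyword
```

For a linear $g$ the greedy choice simply piles every addition onto the keyword with the most negative weight; for nonlinear classifiers such as kernel SVMs, gradient-based optimization is a common alternative to this exhaustive per-feature check.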