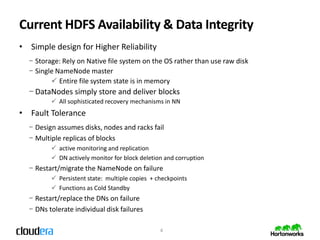

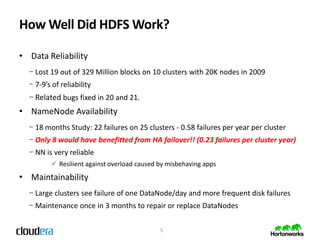

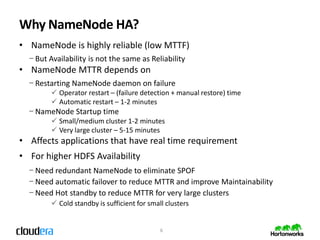

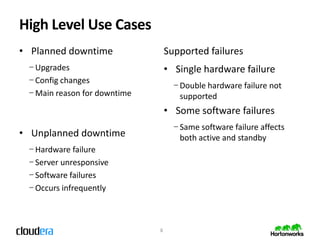

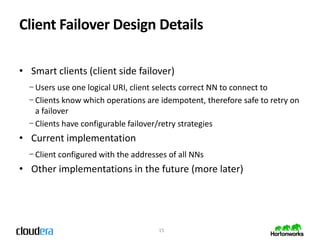

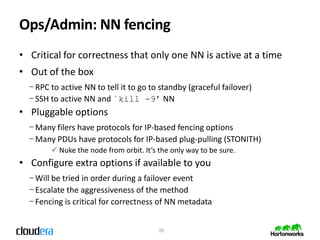

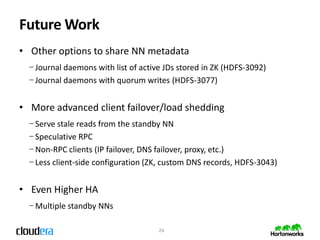

The document discusses High Availability (HA) for HDFS, covering its design, fault tolerance, and maintainability. It emphasizes the need for automatic failover and redundancy in the namenode to enhance availability and reduce downtime in large clusters. Future work includes more advanced client failover options and aiming for even greater HA with multiple standby namenodes.