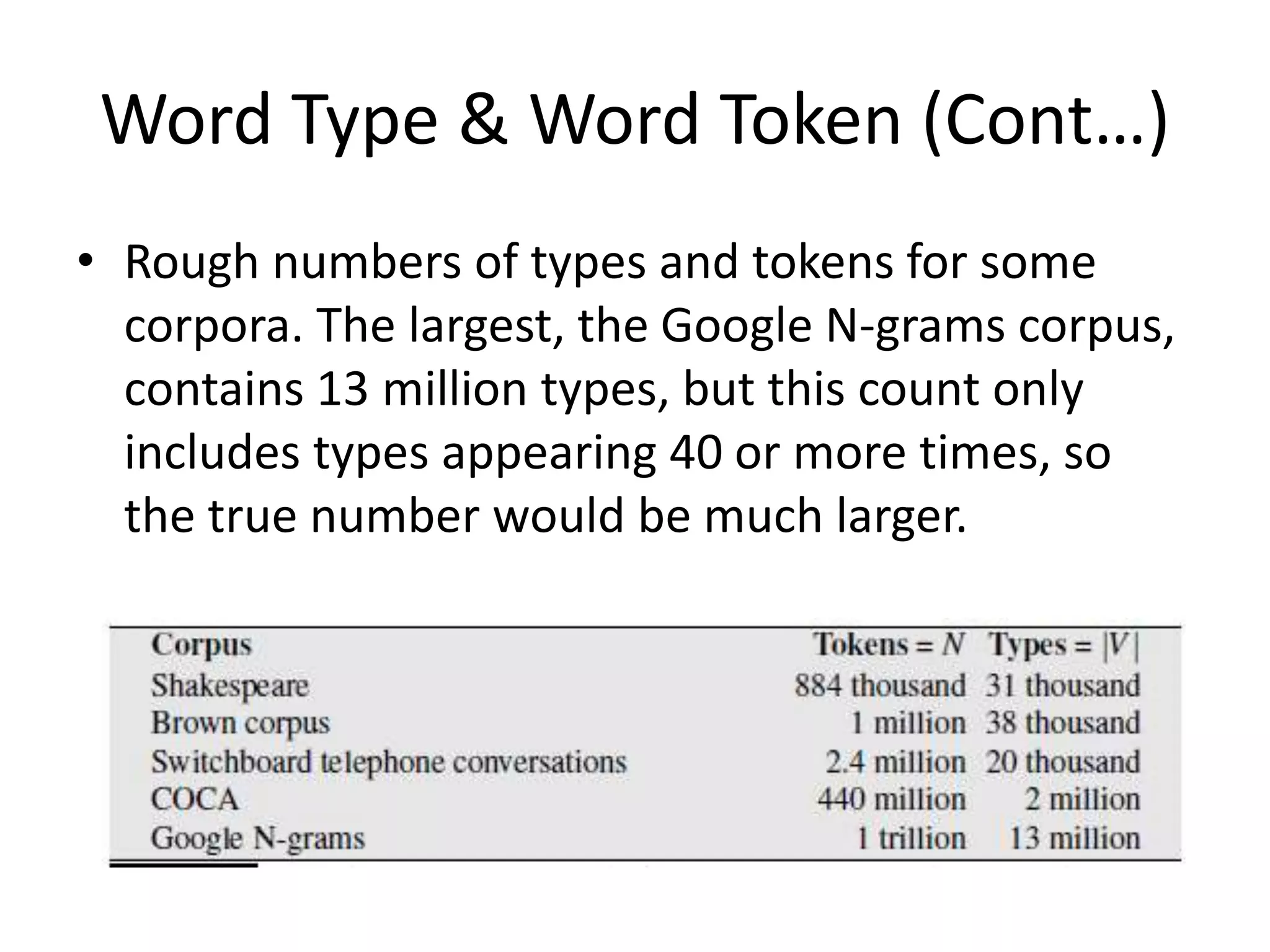

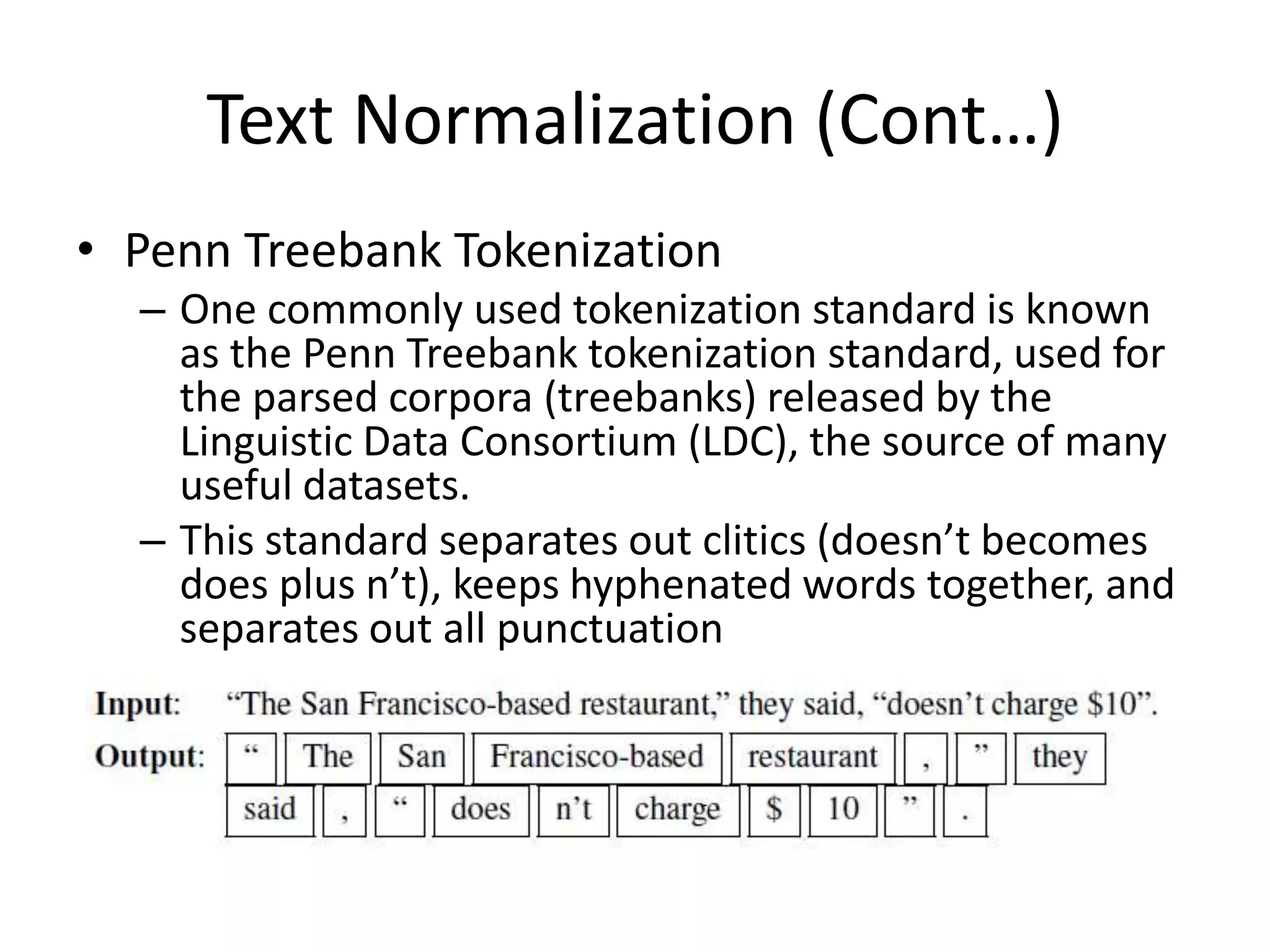

The document discusses text normalization, which involves segmenting and standardizing text for natural language processing. It describes tokenizing text into words and sentences, lemmatizing words into their root forms, and standardizing formats. Tokenization involves separating punctuation, normalizing word formats, and segmenting sentences. Lemmatization determines that words have the same root despite surface differences. Sentence segmentation identifies sentence boundaries, which can be ambiguous without context. Overall, text normalization prepares raw text for further natural language analysis.