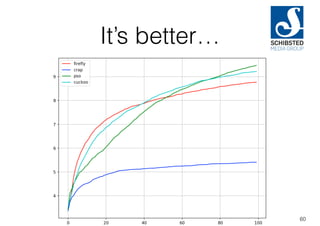

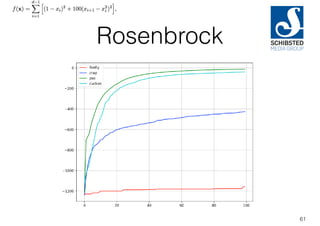

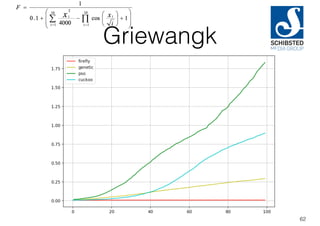

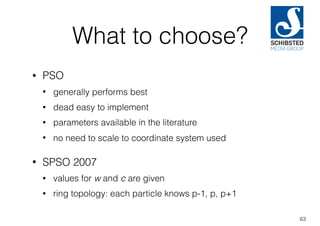

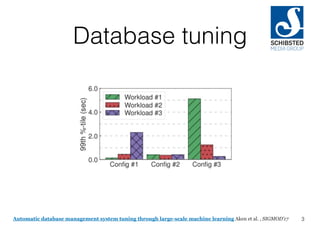

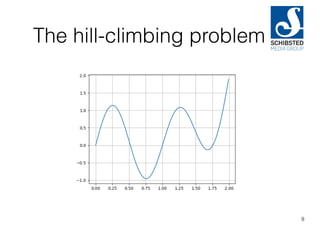

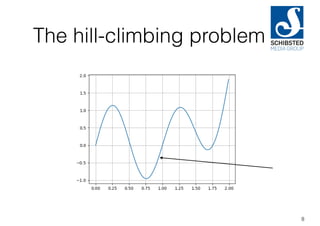

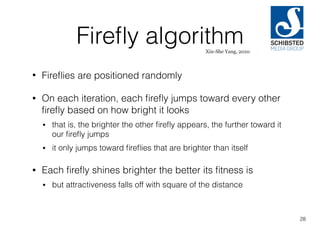

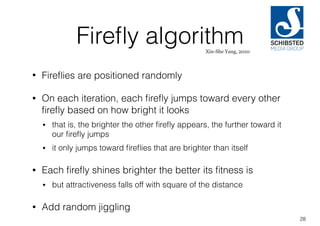

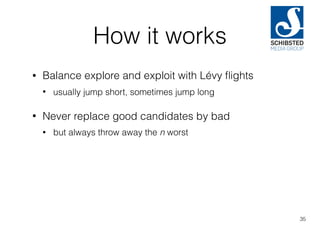

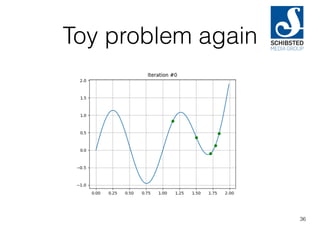

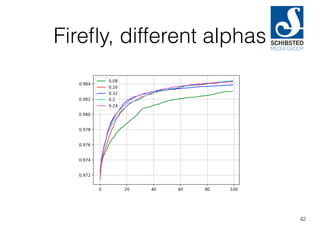

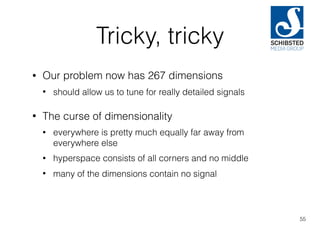

The document discusses nature-inspired algorithms, focusing on their application to tuning systems such as databases and machine-learning models. It outlines genetic algorithms, particle-swarm optimization, and the firefly algorithm, emphasizing the balance between exploration and exploitation of the search space. It also highlights the challenges of tuning the algorithms themselves, including the choice of evaluation function and the considerable computational resources required.

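![Code](https://image.slidesharecdn.com/javazone-2017-170915074213/85/Nature-inspired-algorithms-36-320.jpg)

```python
import math

import numpy as np
import pso  # the speaker's own particle-swarm module

def f1(x):
    return 1 + math.sin(2 * np.pi * x)

def f2a(x):
    return x ** 3 - 2 * x ** 2 + 1 * x - 1

# the function being optimised: a sine wave added to a cubic
def f(x):
    return f1(x) + f2a(x)

# one dimension, searched over the range 0.0 to 2.0, with five particles
swarm = pso.Swarm(
    dimensions = [(0.0, 2.0)],
    fitness = lambda x: f(x[0]),
    particles = 5
)

for ix in range(20):
    swarm.iterate()
    print(swarm.get_best_ever())
```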
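As a sanity check on what the swarm is searching, the same function can be scanned on a fine grid without any optimiser. This is only a sketch: the grid of 2001 points is an arbitrary choice, and since the slide does not say whether the swarm maximises or minimises, both extremes are reported.

```python
import math

def f(x):
    # same function as on the slide, written with the standard library only
    return 1 + math.sin(2 * math.pi * x) + x ** 3 - 2 * x ** 2 + x - 1

# 2001 evenly spaced points covering the slide's search range [0.0, 2.0]
xs = [2.0 * i / 2000 for i in range(2001)]

best_x = max(xs, key=f)   # grid point with the highest value
worst_x = min(xs, key=f)  # grid point with the lowest value

print("highest:", best_x, f(best_x))
print("lowest: ", worst_x, f(worst_x))
```

On this range the highest value sits on the upper boundary, x = 2.0, where f is 2 up to rounding, so a swarm that maximises should end up pressed against the edge of the search space.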

![Implementation](https://image.slidesharecdn.com/javazone-2017-170915074213/85/Nature-inspired-algorithms-37-320.jpg)

```python
import random

# Excerpt: w (inertia), c (attraction limit), pick_velocity, and the
# _pos, _prev_best_pos, neighbourhood_best, _constrain and _update
# members are not shown on the slide.

class Particle:
    def __init__(self, dimensions, fitness):
        self._dimensions = dimensions
        self._fitness = fitness
        self._vel = [pick_velocity(min, max) for (min, max) in dimensions]

    def iterate(self):
        for ix in range(len(self._dimensions)):
            # new velocity = inertia + pull towards own best + pull towards neighbourhood best
            self._vel[ix] = (
                self._vel[ix] * w +
                random.uniform(0, c) * (self._prev_best_pos[ix] - self._pos[ix]) +
                random.uniform(0, c) * (self.neighbourhood_best(ix) - self._pos[ix])
            )
            self._pos[ix] += self._vel[ix]
            self._constrain(ix)
        self._update()
```
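The slide shows only the velocity update, so here is a minimal self-contained sketch of the same idea. Several things are assumptions rather than the speaker's code: the values of `W` and `C`, the initial velocity range, clamping positions to the bounds, treating the whole swarm as one neighbourhood, and the `maximise` helper itself.

```python
import random

W = 0.7  # inertia weight (assumed value)
C = 1.5  # upper limit of the two random attraction factors (assumed value)

class Particle:
    def __init__(self, dimensions, fitness, rng):
        self.dimensions = dimensions
        self.fitness = fitness
        self.rng = rng
        self.pos = [rng.uniform(lo, hi) for (lo, hi) in dimensions]
        # assumed start: velocity of at most 10% of each dimension's range
        self.vel = [rng.uniform(-1, 1) * 0.1 * (hi - lo) for (lo, hi) in dimensions]
        self.best_pos = list(self.pos)
        self.best_val = fitness(self.pos)

    def iterate(self, swarm_best_pos):
        for ix, (lo, hi) in enumerate(self.dimensions):
            # inertia + pull towards own best + pull towards the swarm's best
            self.vel[ix] = (
                self.vel[ix] * W
                + self.rng.uniform(0, C) * (self.best_pos[ix] - self.pos[ix])
                + self.rng.uniform(0, C) * (swarm_best_pos[ix] - self.pos[ix])
            )
            # move, then clamp the position back into the allowed range
            self.pos[ix] = min(hi, max(lo, self.pos[ix] + self.vel[ix]))
        val = self.fitness(self.pos)
        if val > self.best_val:
            self.best_pos, self.best_val = list(self.pos), val

def maximise(dimensions, fitness, particles=5, iterations=20, seed=0):
    rng = random.Random(seed)
    swarm = [Particle(dimensions, fitness, rng) for _ in range(particles)]
    for _ in range(iterations):
        # snapshot the best position found so far by any particle
        leader = max(swarm, key=lambda p: p.best_val)
        swarm_best_pos = list(leader.best_pos)
        for p in swarm:
            p.iterate(swarm_best_pos)
    leader = max(swarm, key=lambda p: p.best_val)
    return leader.best_pos, leader.best_val

pos, val = maximise([(-5.0, 5.0)] * 2, lambda p: -(p[0] ** 2 + p[1] ** 2))
print(pos, val)
```

The example maximises a simple bowl-shaped function whose peak is at the origin. Higher fitness counts as better here, which matches the comparison in the firefly slide but is not confirmed for the speaker's `pso` module.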

![Firefly code](https://image.slidesharecdn.com/javazone-2017-170915074213/85/Nature-inspired-algorithms-75-320.jpg)

```python
def iterate(self):
    for firefly in self._swarm.get_particles():
        # only move towards fireflies that are brighter than this one
        if self._val < firefly._val:
            dist = self.distance(firefly)
            # attraction falls off with the square of the distance
            attract = firefly._val / (1 + gamma * (dist ** 2))

            for ix in range(len(self._dimensions)):
                jiggle = alpha * (random.uniform(0, 1) - 0.5)
                diff = firefly._pos[ix] - self._pos[ix]
                self._pos[ix] = self._pos[ix] + jiggle + (attract * diff)
                self._constrain(ix)
```
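To see the update rule above in a runnable form, the following sketch wraps it in a small driver. It is not the speaker's implementation: the `ALPHA` and `GAMMA` values, the clamping to the bounds, the `firefly_step` and `maximise` names, and the use of a snapshot of positions for each step are all assumptions. Because the slide uses the raw fitness value as brightness, the example fitness is kept between 0 and 1 so the attraction factor never exceeds 1.

```python
import random

ALPHA = 0.2  # size of the random jiggle (assumed value)
GAMMA = 1.0  # how quickly attraction fades with distance (assumed value)

def firefly_step(positions, values, dimensions, rng):
    """Move each firefly towards every brighter one; returns the new positions."""
    new_positions = [list(p) for p in positions]
    for i, pos in enumerate(new_positions):
        for j, other in enumerate(positions):
            if values[i] < values[j]:
                dist2 = sum((a - b) ** 2 for a, b in zip(pos, other))
                # brightness of the other firefly, damped by squared distance
                attract = values[j] / (1 + GAMMA * dist2)
                for ix, (lo, hi) in enumerate(dimensions):
                    jiggle = ALPHA * (rng.uniform(0, 1) - 0.5)
                    diff = other[ix] - pos[ix]
                    # move, then clamp back into the allowed range
                    pos[ix] = min(hi, max(lo, pos[ix] + jiggle + attract * diff))
    return new_positions

def maximise(dimensions, fitness, fireflies=10, iterations=30, seed=0):
    rng = random.Random(seed)
    positions = [[rng.uniform(lo, hi) for (lo, hi) in dimensions]
                 for _ in range(fireflies)]
    best_pos, best_val = None, float("-inf")
    for _ in range(iterations):
        # evaluate, remember the best position seen so far, then move
        values = [fitness(p) for p in positions]
        for p, v in zip(positions, values):
            if v > best_val:
                best_pos, best_val = list(p), v
        positions = firefly_step(positions, values, dimensions, rng)
    return best_pos, best_val

# fitness in (0, 1], peaking at the origin
pos, val = maximise([(-5.0, 5.0)] * 2,
                    lambda p: 1.0 / (1.0 + p[0] ** 2 + p[1] ** 2))
print(pos, val)
```

Note that the brightest firefly never moves under this rule, since nothing is brighter than it; only the jiggle on the others keeps the search exploring.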

![More algorithms](https://image.slidesharecdn.com/javazone-2017-170915074213/85/Nature-inspired-algorithms-112-320.jpg)

• Flower-pollination algorithm [Xin-She Yang, 2013]

• Bat-inspired algorithm [Xin-She Yang, 2010]

• Ant colony algorithm [Marco Dorigo, 1992]

• Bee algorithm [Pham et al., 2005]

• Fish school search [Filho et al., 2007]

• Artificial bee colony algorithm [Karaboga et al., 2005]

• …

Notes attached to some of the entries on the slide (the transcript does not preserve which):

• Looks interesting, hard to find algorithm

• Paper too vague, can’t implement

• Complicated, didn’t finish implementation

![General trick](https://image.slidesharecdn.com/javazone-2017-170915074213/85/Nature-inspired-algorithms-116-320.jpg)

• Quite common to “cheat” this way to use numeric-only machine learning algorithms

• Turn boolean into [0, 1] parameter

• Turn enumeration into one boolean per value

• Looks odd, but in general it does work

• Of course, now there isn’t much spatial structure any more
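The encoding described in the bullets above can be sketched as a pair of helpers that turn a mixed configuration into numeric dimensions and back. Everything named here is hypothetical: the `SCHEMA` entries, the 0.5 cut-off for booleans, and the rule of picking the enumeration value with the highest score when several of its "booleans" are on.

```python
# Hypothetical parameters: a boolean, an enumeration and a plain numeric range
SCHEMA = [
    ("use_cache", "bool"),
    ("compression", ["none", "gzip", "lz4"]),
    ("threshold", (0.0, 1.0)),
]

def dimensions(schema):
    """Numeric search dimensions for a swarm: one per bool, one per enum value."""
    dims = []
    for name, kind in schema:
        if kind == "bool":
            dims.append((0.0, 1.0))
        elif isinstance(kind, list):
            dims.extend([(0.0, 1.0)] * len(kind))
        else:
            dims.append(kind)
    return dims

def decode(pos, schema):
    """Turn a numeric position back into a configuration dictionary."""
    config = {}
    ix = 0
    for name, kind in schema:
        if kind == "bool":
            config[name] = pos[ix] >= 0.5  # assumed cut-off
            ix += 1
        elif isinstance(kind, list):
            scores = pos[ix:ix + len(kind)]
            # assumed tie-break: the value with the highest score wins
            config[name] = kind[scores.index(max(scores))]
            ix += len(kind)
        else:
            config[name] = pos[ix]
            ix += 1
    return config

print(dimensions(SCHEMA))
print(decode([0.7, 0.1, 0.9, 0.3, 0.25], SCHEMA))
```

A fitness function would call `decode` on each position before running the system under test. The loss of spatial structure is visible here: moving a little along one of the enumeration dimensions either changes nothing or flips the choice outright.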

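![Meta-tuning again](https://image.slidesharecdn.com/javazone-2017-170915074213/85/Nature-inspired-algorithms-123-320.jpg)

```python
import math

# crap, firefly, function and pso are the speaker's own modules
import crap
import firefly
import function
import pso

def fitness(pos):
    # each position is a candidate (alpha, gamma) setting for the firefly algorithm
    (firefly.alpha, firefly.gamma) = pos
    averages = crap.run_experiment(
        firefly,
        dimensions = [(0.0, math.pi)] * 16,
        fitness = function.michaelwicz,
        particles = 20,
        problem = 'michaelwicz',
        quiet = True
    )
    return crap.average(averages)

# the outer PSO swarm searches over the firefly algorithm's own parameters
dimensions = [(0.0, 1.0), (0.0, 10.0)] # alpha, gamma
swarm = pso.Swarm(dimensions, fitness, 10)
crap.evaluate(swarm, 'meta-michaelwicz-firefly')
```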
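The benchmark named in the slide is presumably the Michalewicz test function, which is conventionally defined on the range 0 to pi in every dimension, matching the `dimensions` argument above. The sketch below gives the textbook form with steepness `m = 10`; the speaker's `function.michaelwicz` is not shown and may differ, for example by flipping the sign so that higher is better.

```python
import math

def michalewicz(pos, m=10):
    """Textbook Michalewicz function, to be minimised; each coordinate lies in [0, pi]."""
    return -sum(
        math.sin(x) * math.sin(i * x * x / math.pi) ** (2 * m)
        for i, x in enumerate(pos, start=1)
    )

# near the known two-dimensional minimum
print(michalewicz([2.20, 1.57]))
```

The function has steep, narrow valleys separated by nearly flat plateaus, which is what makes it a demanding benchmark in 16 dimensions and a reasonable target for comparing parameter settings.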