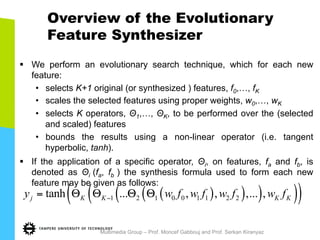

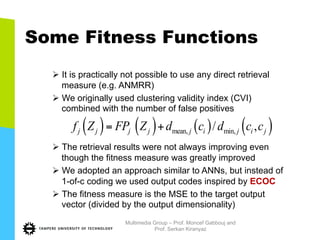

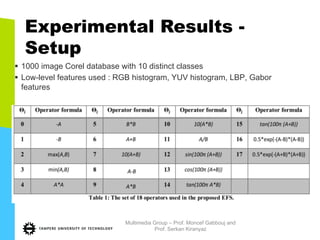

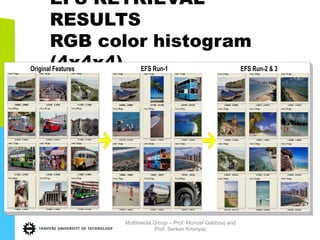

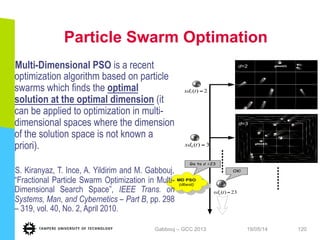

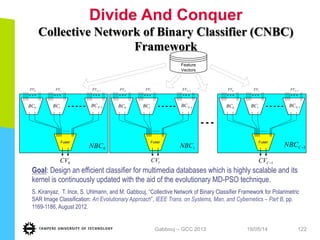

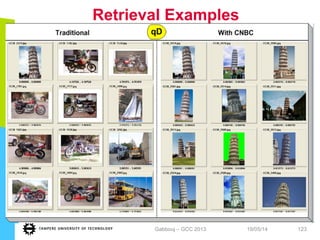

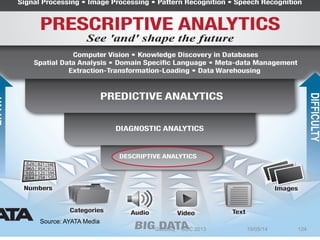

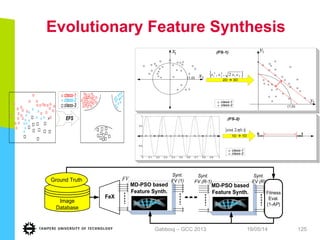

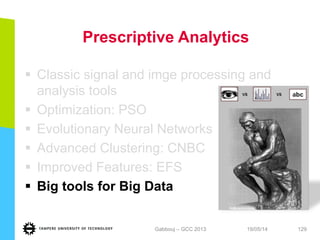

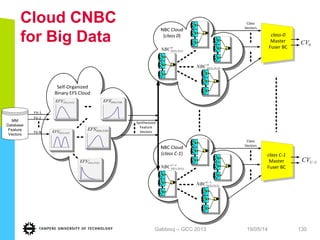

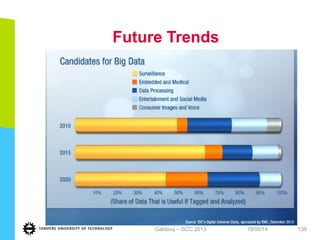

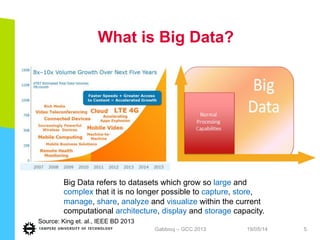

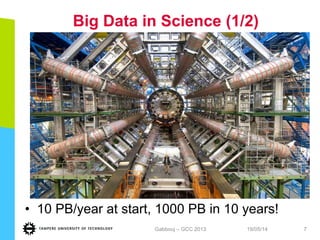

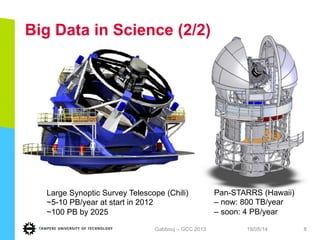

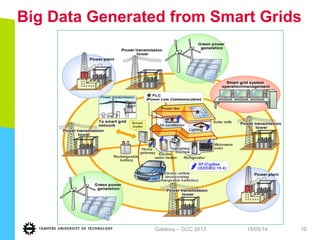

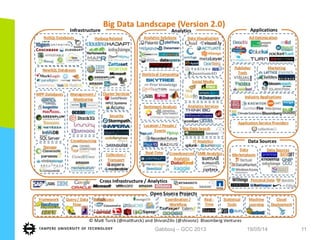

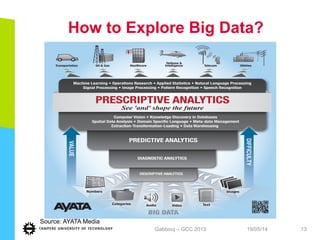

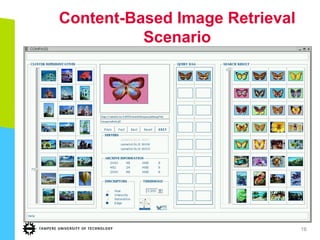

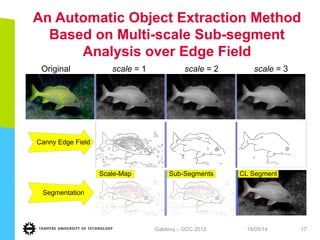

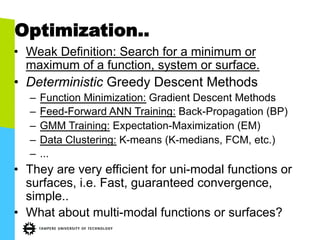

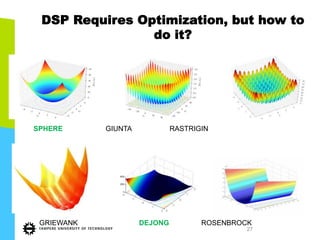

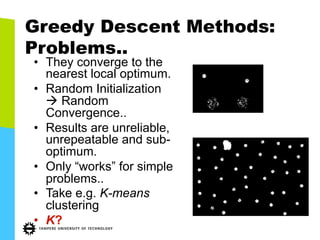

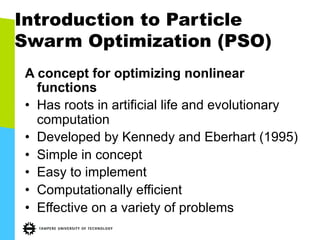

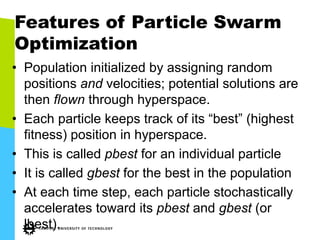

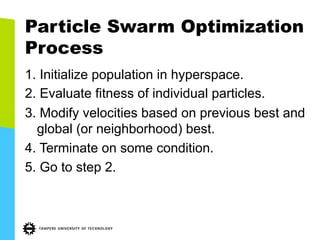

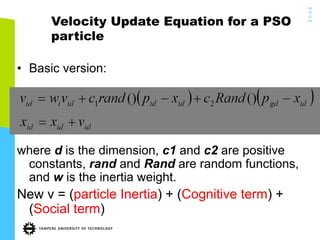

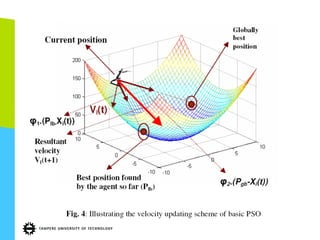

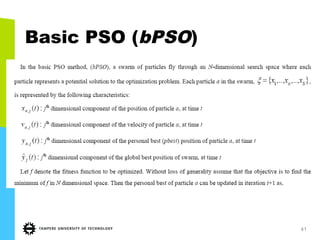

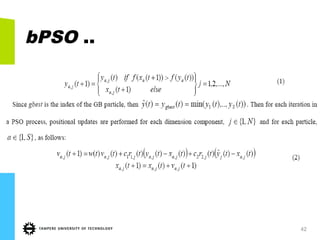

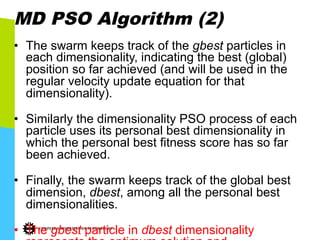

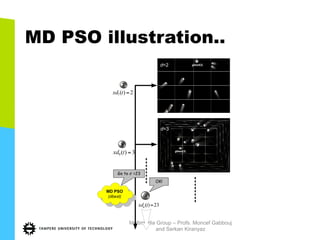

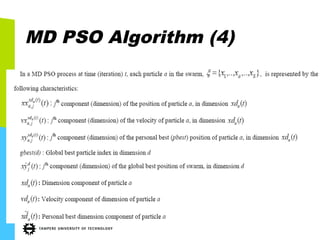

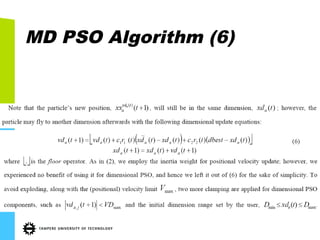

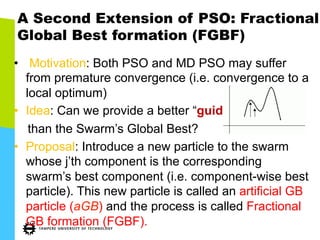

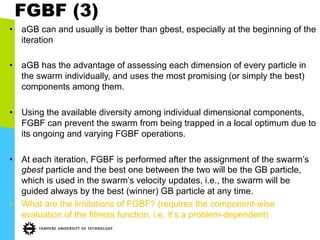

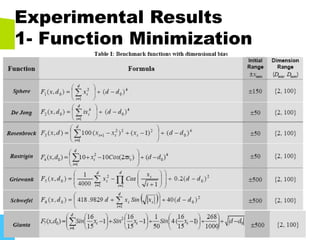

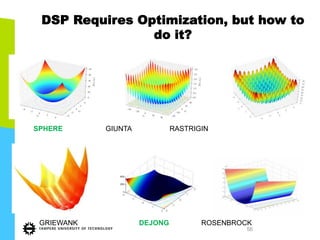

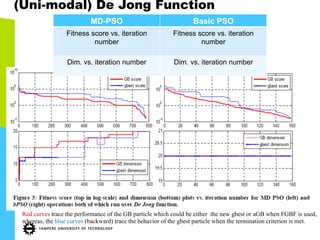

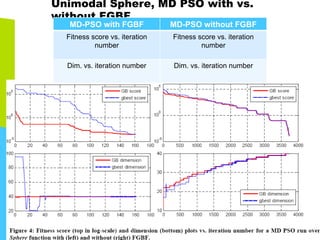

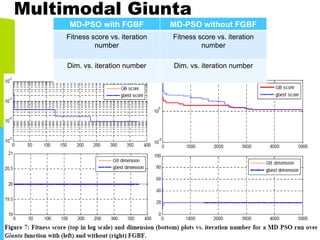

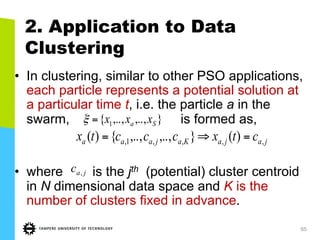

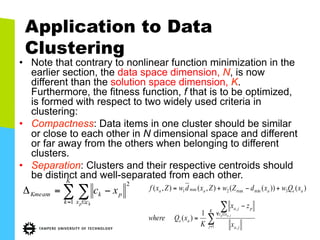

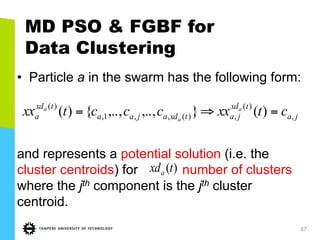

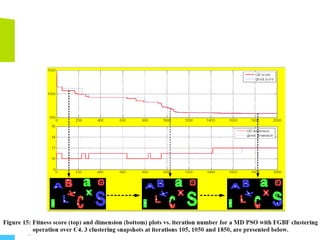

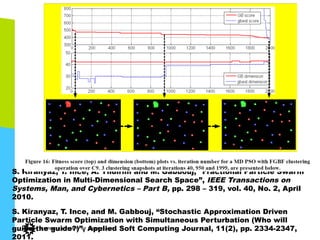

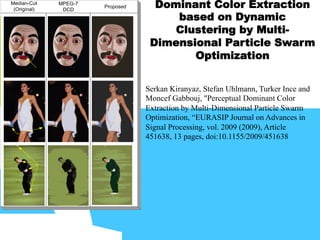

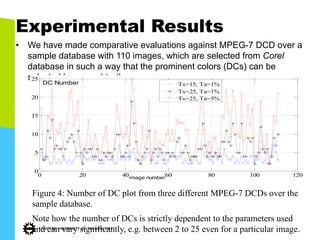

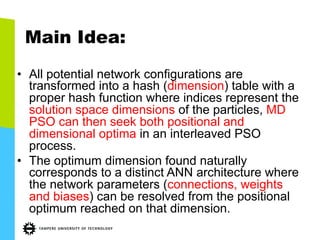

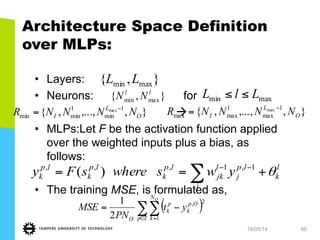

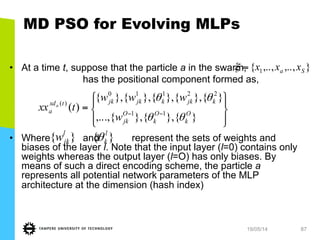

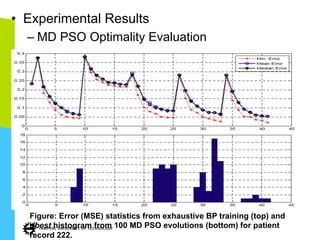

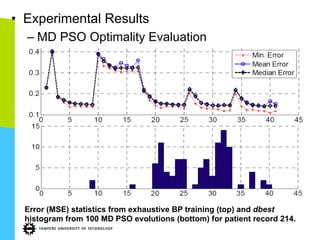

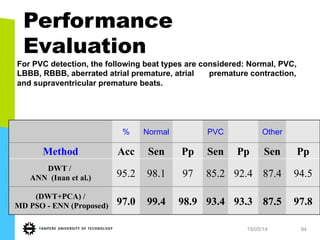

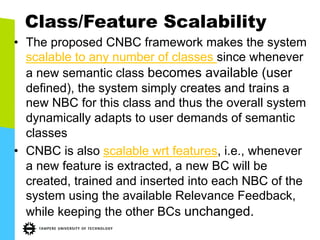

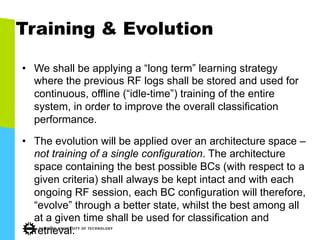

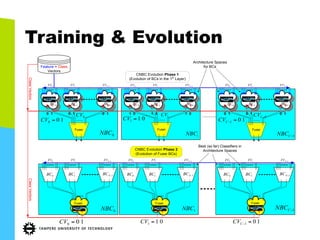

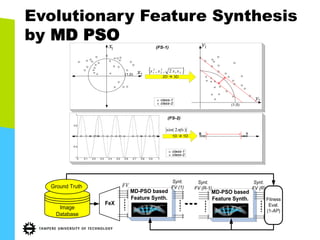

This presentation covers machine learning tools and particle swarm optimization (PSO) for content-based search in large multimedia databases. After an outline, it surveys big data sources and characteristics, descriptive and prescriptive analytics with tools such as PSO, and methods for exploring big data, including content-based image retrieval. It also discusses the difficulty of optimizing non-convex problems and proposes methods such as multi-dimensional PSO to address issues like premature convergence.

![0x

⎥

⎥

⎥

⎥

⎥

⎥

⎥

⎥

⎥

⎥

⎥

⎦

⎤

⎢

⎢

⎢

⎢

⎢

⎢

⎢

⎢

⎢

⎢

⎢

⎣

⎡

Β

Β

Β

Α

=

K

K

d

jaxx

θ

θ

θ

...

...

2

2

1

1

1

,

where,

[ ] [ ) [ ] [ ]KiFNdj ii ,1,,1,,0,,1,0 1 ∈∈ℜ∈ΒΑ−∈ θ

⎣ ⎦ [ ] ⎣ ⎦ [ ]

)(

1,0,1

1,0,1,01

111

1

ii

iii

i

Operator

wwiwandw

NBiN

θ

βα

βα

βαβα

≡Θ

<≤−Β=−Α=

−∈=−∈Α=

Let:

1x

1αx

1βx

2βx

Kxβ

1−Nx

1αw

1βw

2βw

Kwβ

1Θ 2Θ KΘ

0y

1y

jy

1−dy

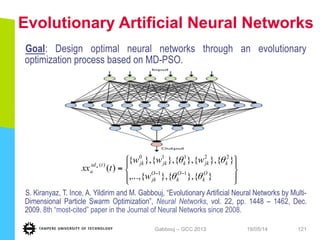

Original FV

(N-dimensional)

Synthesized FV

(d-dimensional)

Multimedia Group – Prof. Moncef Gabbouj and

Prof. Serkan Kiranyaz](https://image.slidesharecdn.com/gabbouj-thessaloniki-talk-2014-140529025213-phpapp01/85/Machine-Learning-Tools-and-Particle-Swarm-Optimization-for-Content-Based-Search-in-Big-Multimedia-Databases-113-320.jpg)
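To make the encoding on this slide more concrete, the following is a minimal Python sketch of how one particle component might be decoded into a single synthesized feature value y_j. It assumes that the integer part of A (and of each B_i) selects an element of the original feature vector, that the fractional part acts as its weight, and that the operators are applied left to right, starting from the weighted element selected by A. The operator table `OPERATORS`, the flooring of theta_i, and the names `split_index` and `synthesize_feature` are illustrative assumptions; the slide only states that theta_i lies in [1, F] and maps to some operator.

```python
import math
from typing import Callable, List, Sequence, Tuple

# Hypothetical operator table (F = 5). The slide does not list the
# actual operators; these are placeholders for illustration only.
OPERATORS: List[Callable[[float, float], float]] = [
    lambda a, b: a + b,
    lambda a, b: a - b,
    lambda a, b: a * b,
    max,
    min,
]


def split_index(value: float, n: int) -> Tuple[int, float]:
    """Split a real value in [0, n) into (floor, fractional part)."""
    if not 0 <= value < n:
        raise ValueError(f"value {value} is outside [0, {n})")
    index = math.floor(value)
    return index, value - index


def synthesize_feature(
    x: Sequence[float],
    A: float,
    pairs: Sequence[Tuple[float, float]],
    operators: Sequence[Callable[[float, float], float]] = OPERATORS,
) -> float:
    """Decode [A, (B_1, theta_1), ..., (B_K, theta_K)] into one value y_j.

    Assumed composition: start from w_alpha * x[alpha], then fold in each
    w_beta_i * x[beta_i] with the operator selected by theta_i.
    """
    n = len(x)
    alpha, w_alpha = split_index(A, n)
    result = w_alpha * x[alpha]
    for B, theta in pairs:
        beta, w_beta = split_index(B, n)
        op_number = math.floor(theta)  # assumed: theta_i is floored
        if not 1 <= op_number <= len(operators):
            raise ValueError(f"theta {theta} is outside [1, {len(operators)}]")
        result = operators[op_number - 1](result, w_beta * x[beta])
    return result


# Example with N = 4 and K = 2 (values chosen only for illustration).
original_fv = [2.0, 4.0, 6.0, 8.0]
y_j = synthesize_feature(original_fv, A=1.5, pairs=[(2.25, 1), (0.5, 3)])
print(y_j)
```

In this example, A = 1.5 selects x[1] with weight 0.5, B_1 = 2.25 selects x[2] with weight 0.25, and B_2 = 0.5 selects x[0] with weight 0.5. A d-dimensional synthesized FV would repeat this decoding for each j in [0, d-1] with its own encoded entries.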