The document introduces the "three ex's" (Express, Explain, and Experiment) as a framework for machine learning work.

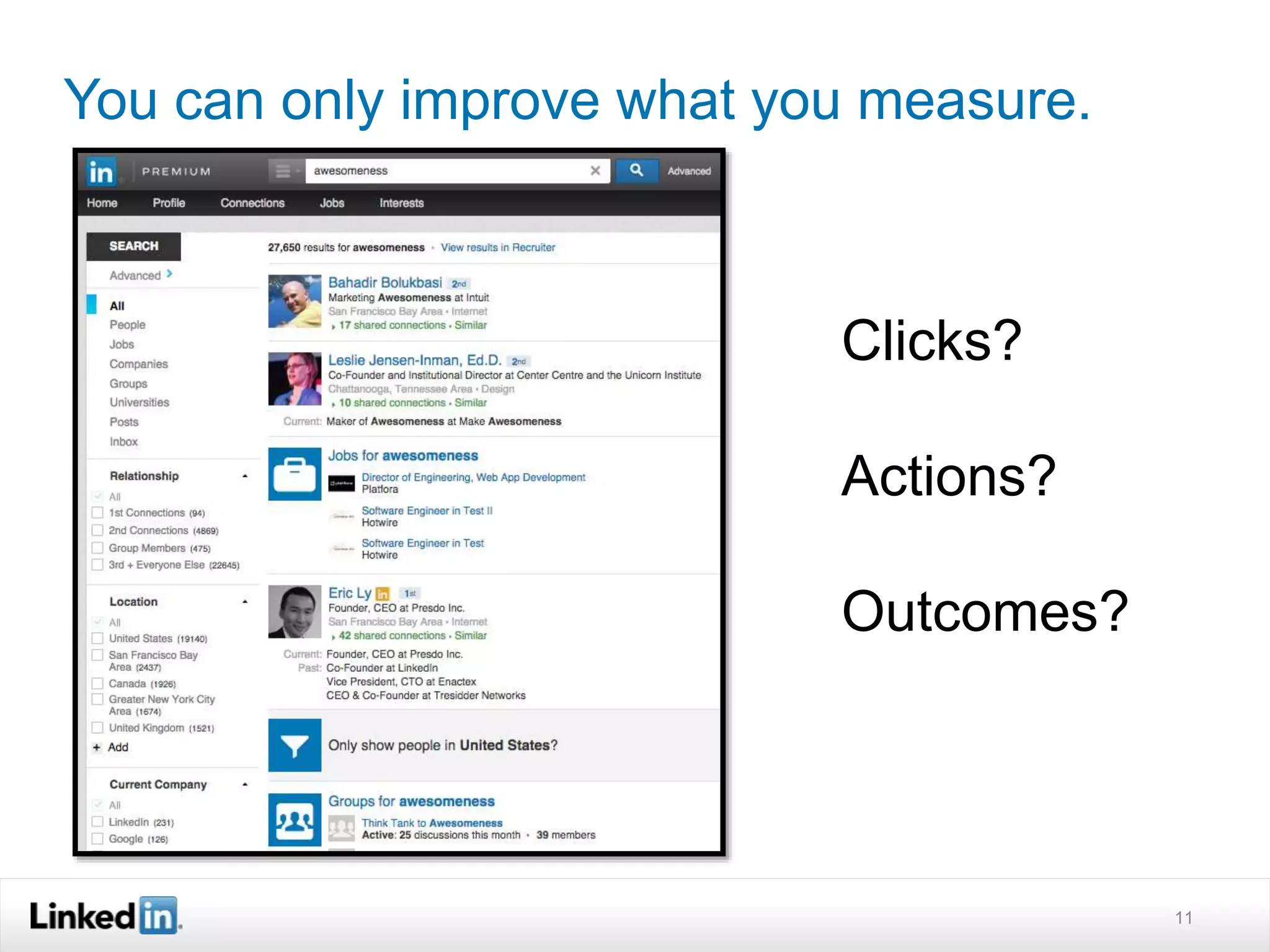

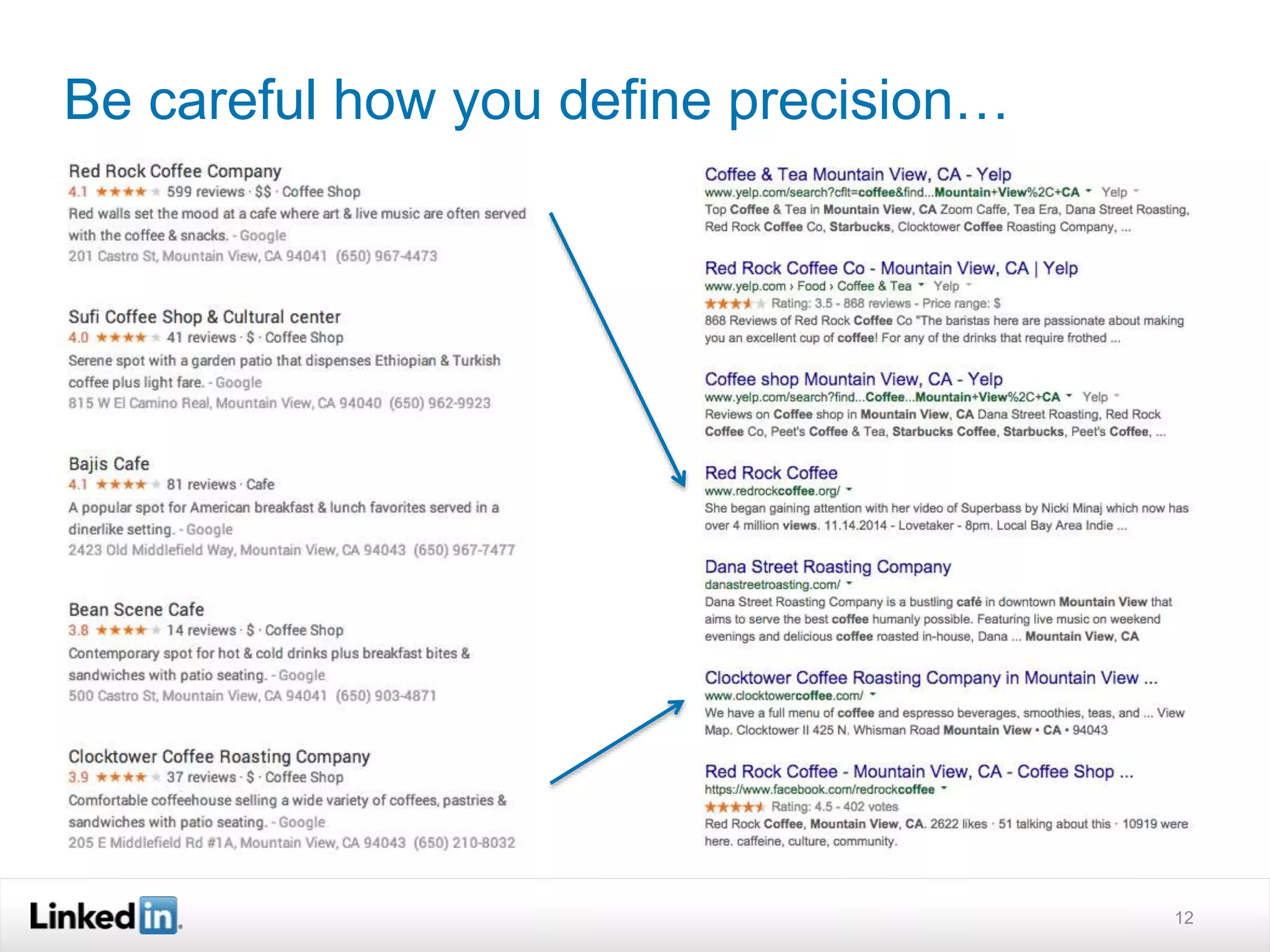

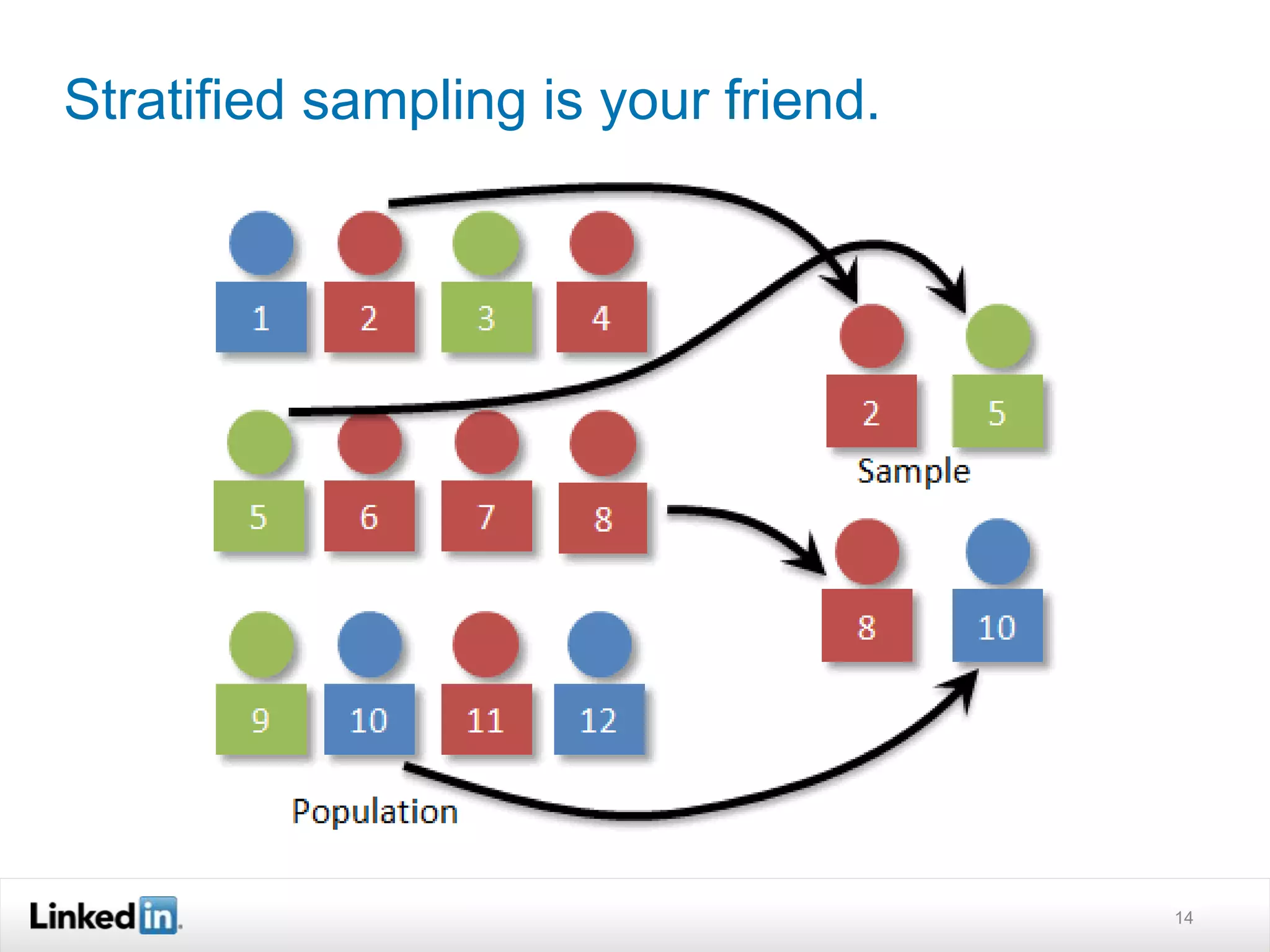

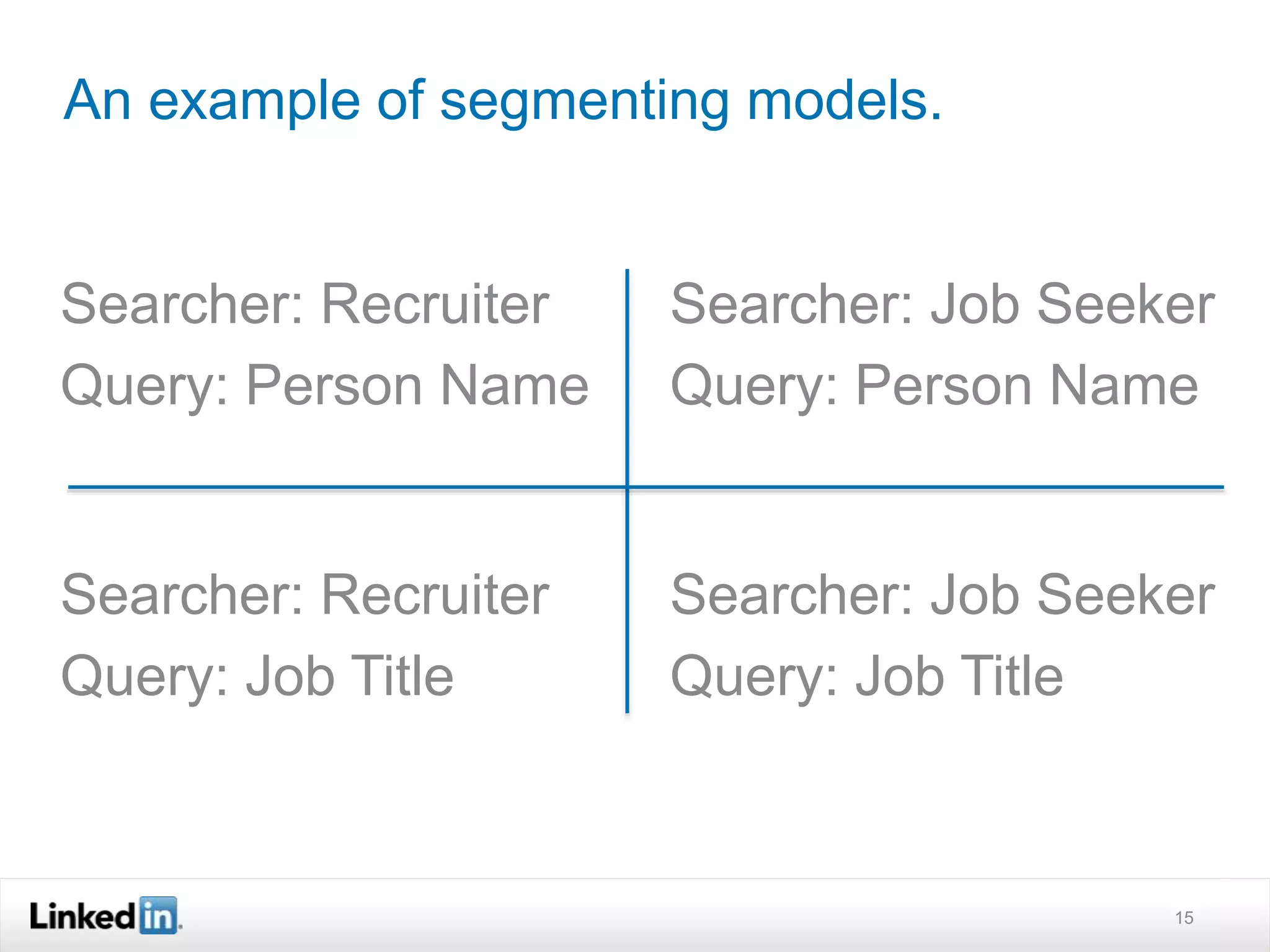

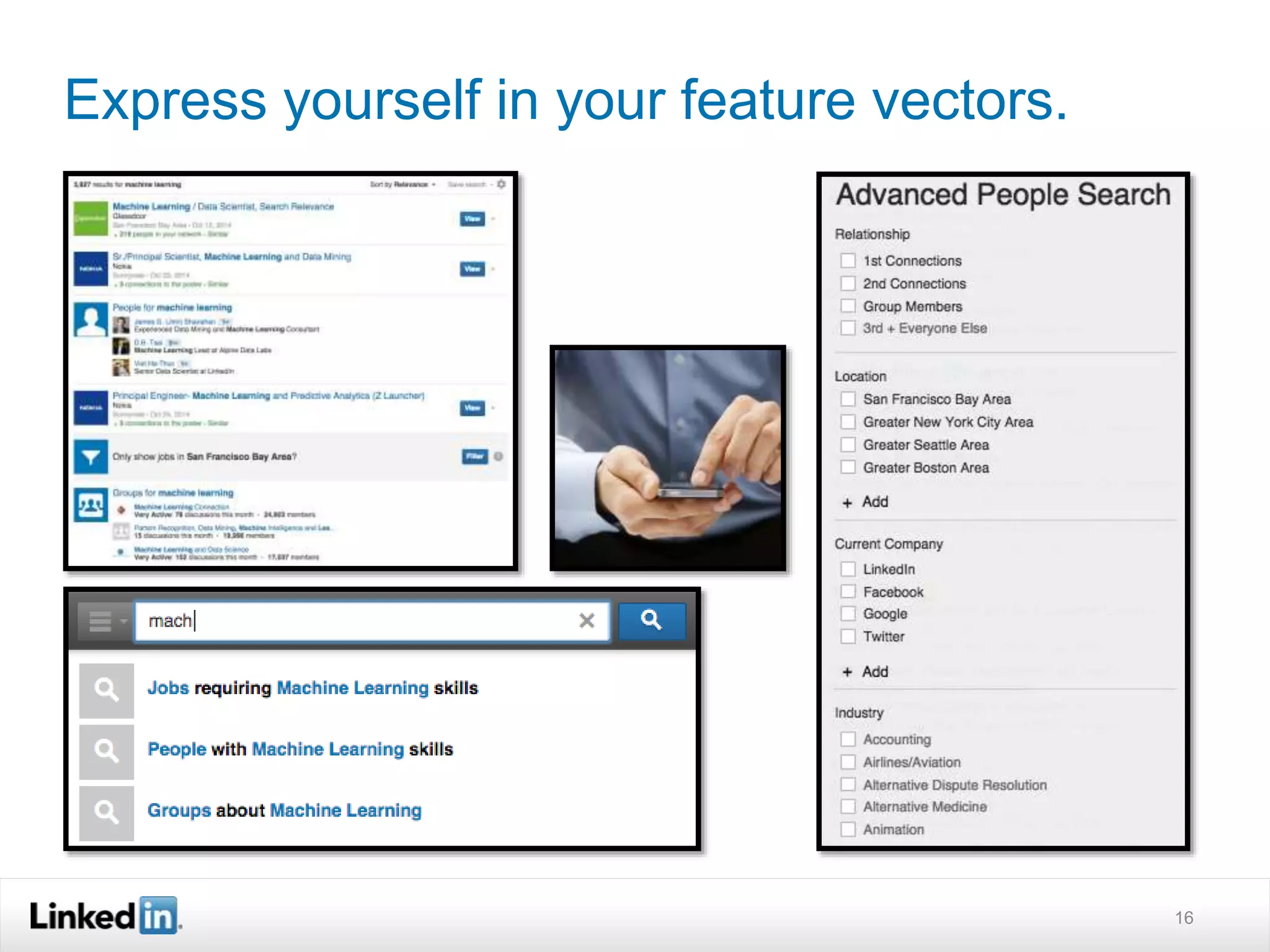

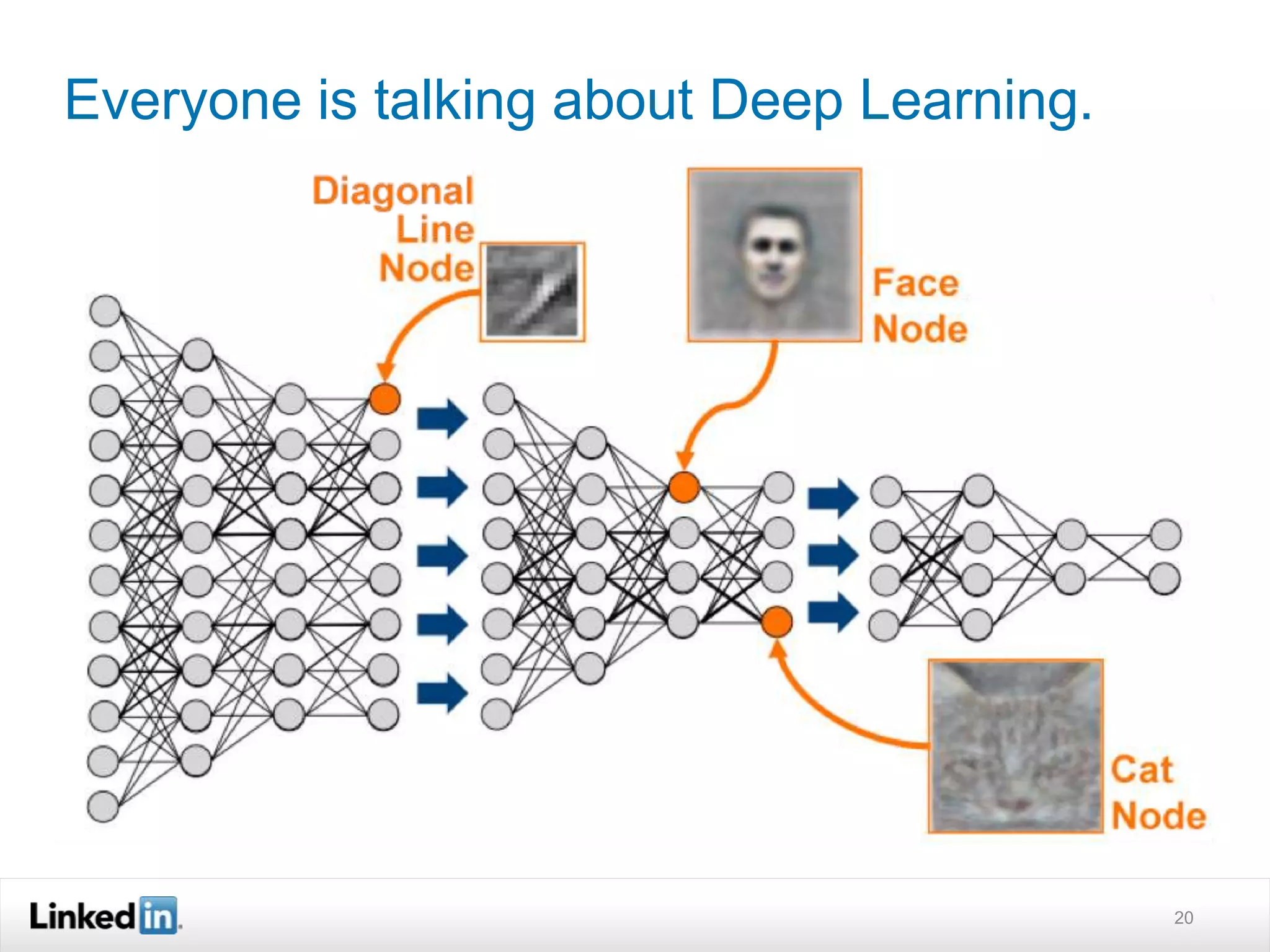

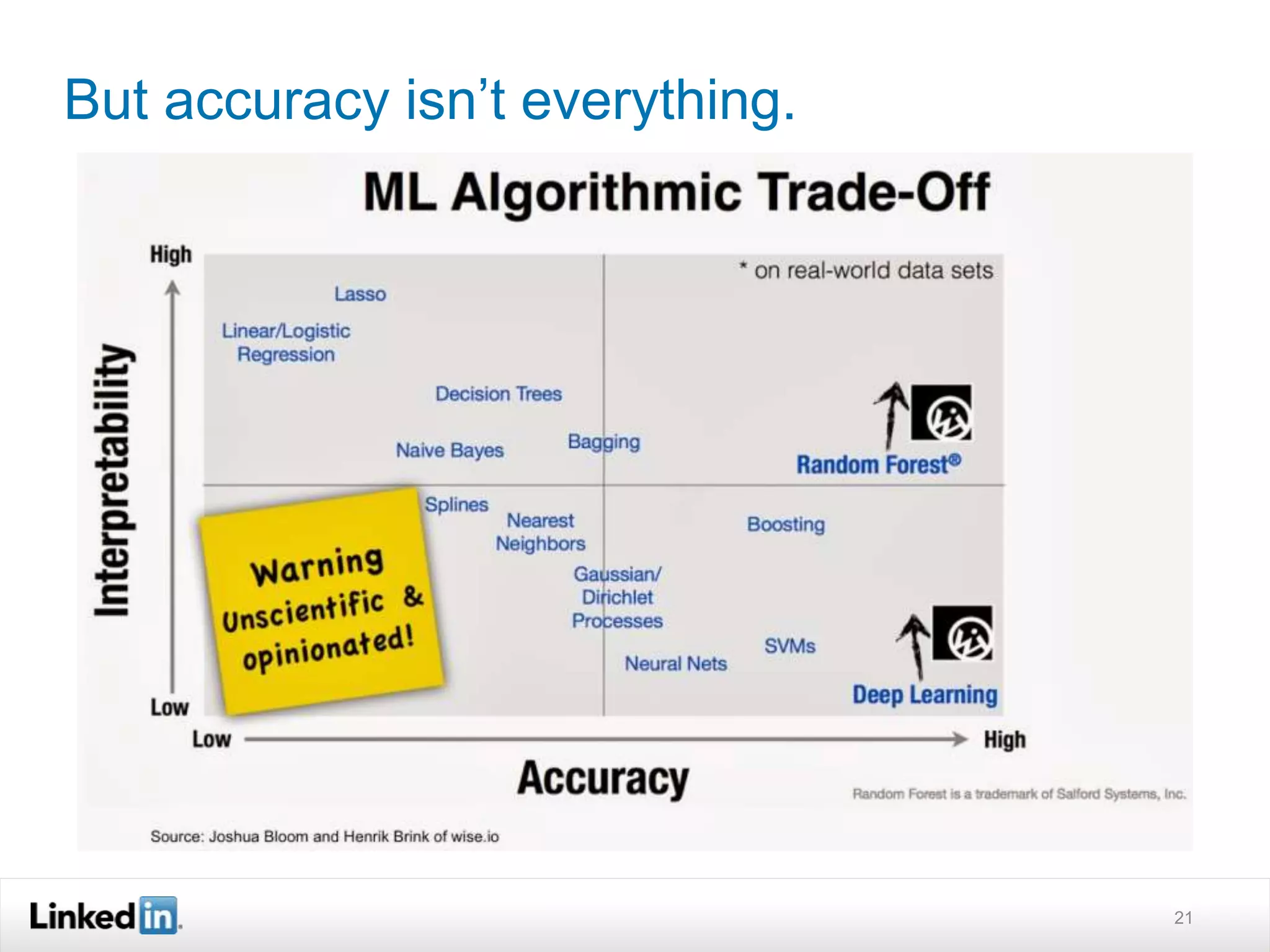

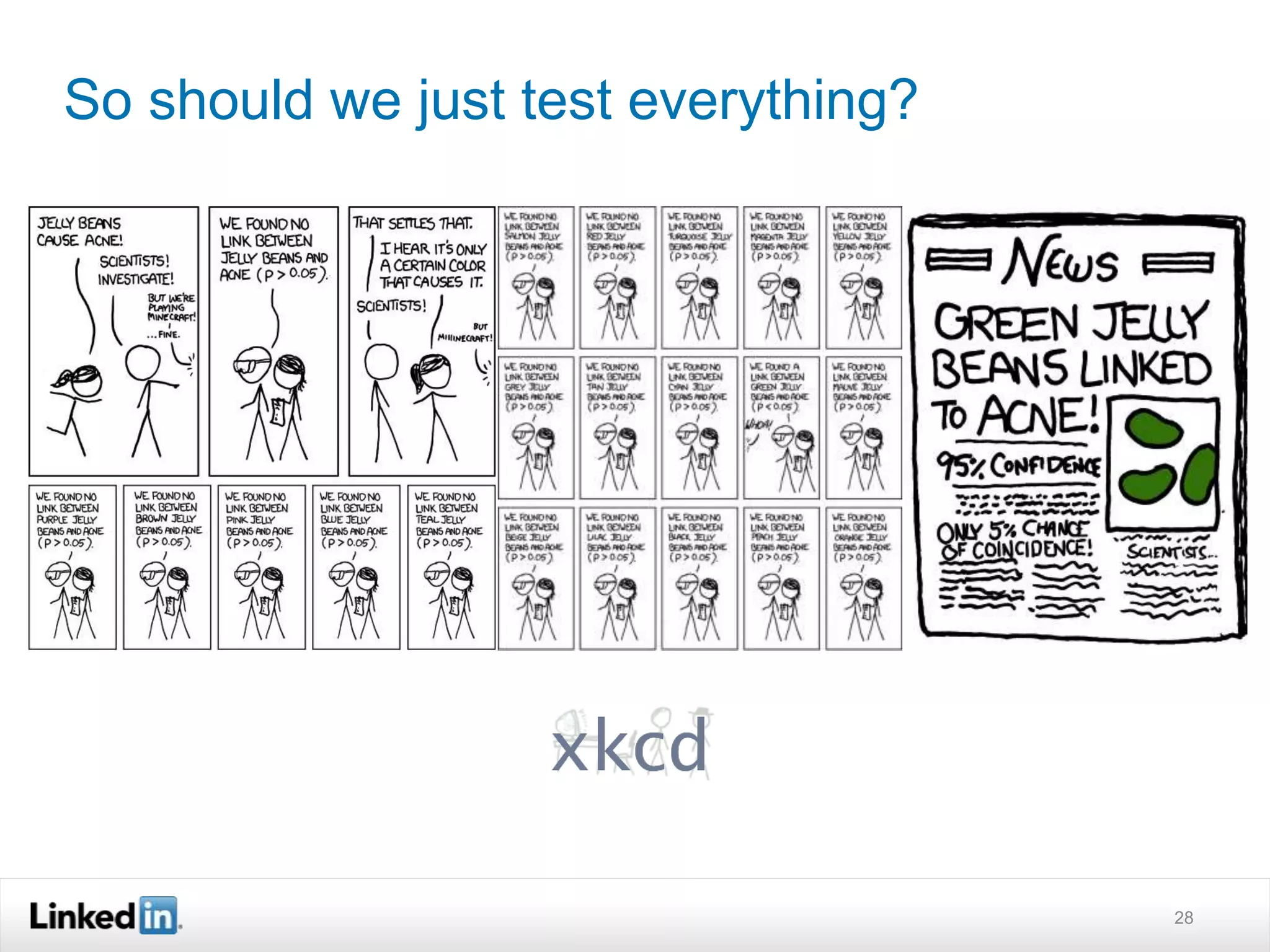

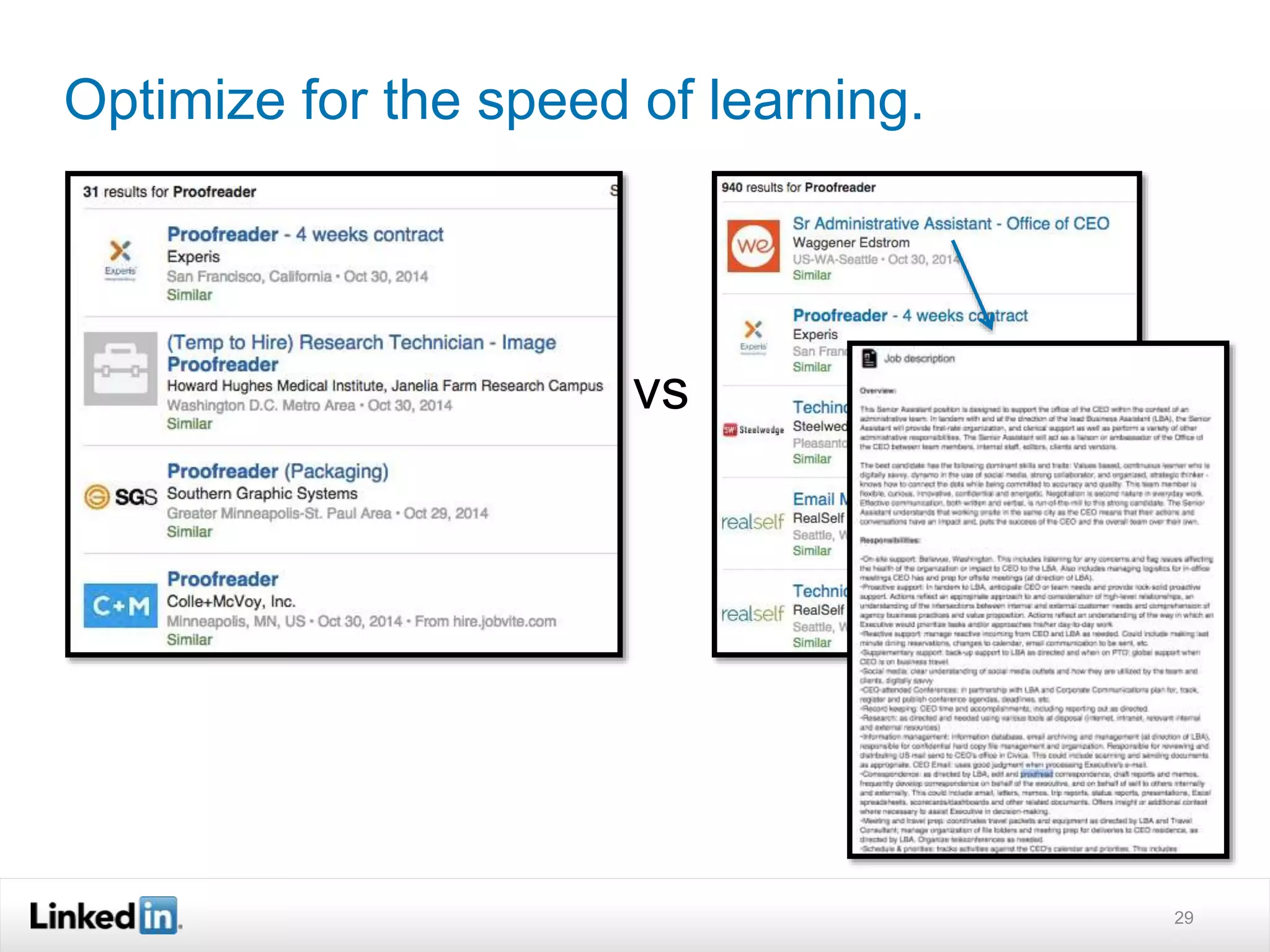

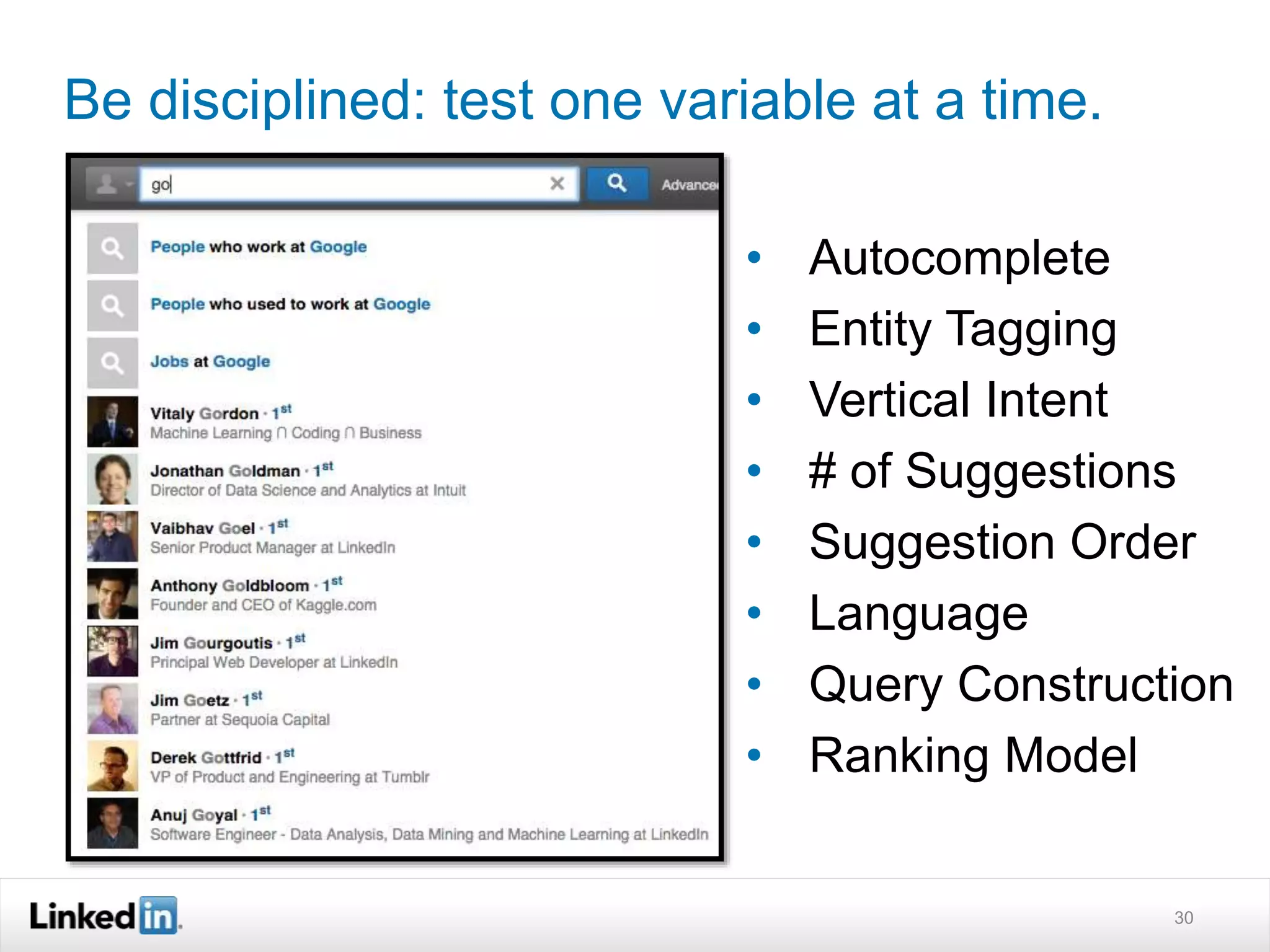

Express involves defining an objective function and collecting training data in order to understand the utility being optimized and the inputs available. Explain prioritizes model explainability over accuracy: start with linear models or decision trees, and upgrade to more complex models only afterwards. Experiment emphasizes that experiments are now cheap, but that variables should still be tested in a disciplined way, with the aim of optimizing the speed of learning.
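As a rough illustration of the Express and Explain steps, the sketch below fits a one-variable linear model and scores it with an explicit objective function. It is a minimal example under stated assumptions, not the document's own method: the function names `fit_line` and `mse`, the choice of mean squared error as the objective, and the toy data are all hypothetical.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


def mse(xs, ys, slope, intercept):
    """Objective function (assumed here): mean squared prediction error."""
    return mean((slope * x + intercept - y) ** 2 for x, y in zip(xs, ys))


# Hypothetical training data, constructed so that y = 2x + 1 exactly.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

slope, intercept = fit_line(xs, ys)
loss = mse(xs, ys, slope, intercept)

# The fitted coefficients are the explanation: each unit of x adds `slope` to y.
print(f"slope={slope}, intercept={intercept}, loss={loss}")
```

The appeal of starting here is that the model can be read directly from two numbers. A more complex model would only be worth adopting if it lowers the same objective by enough to justify losing that readability.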