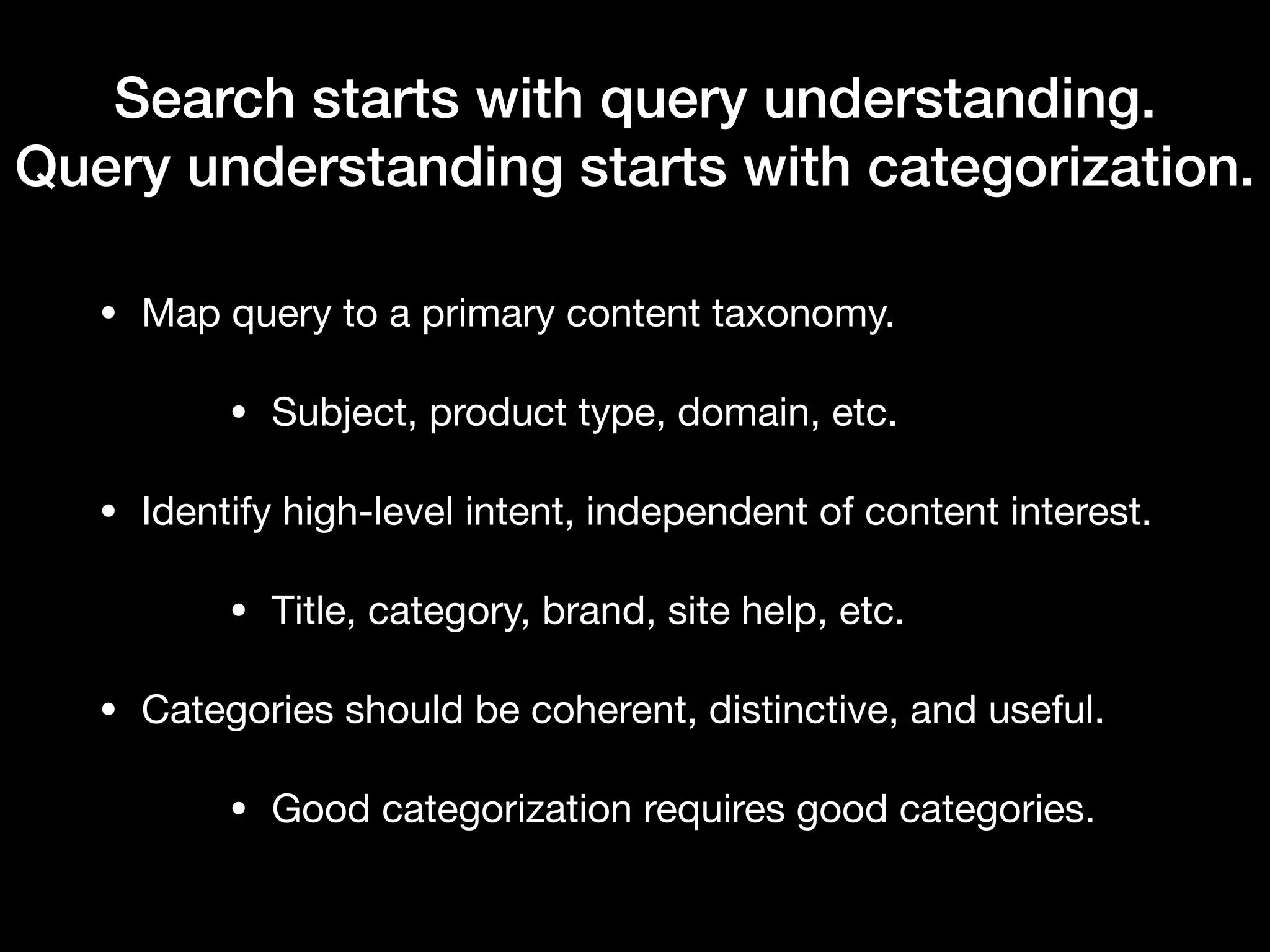

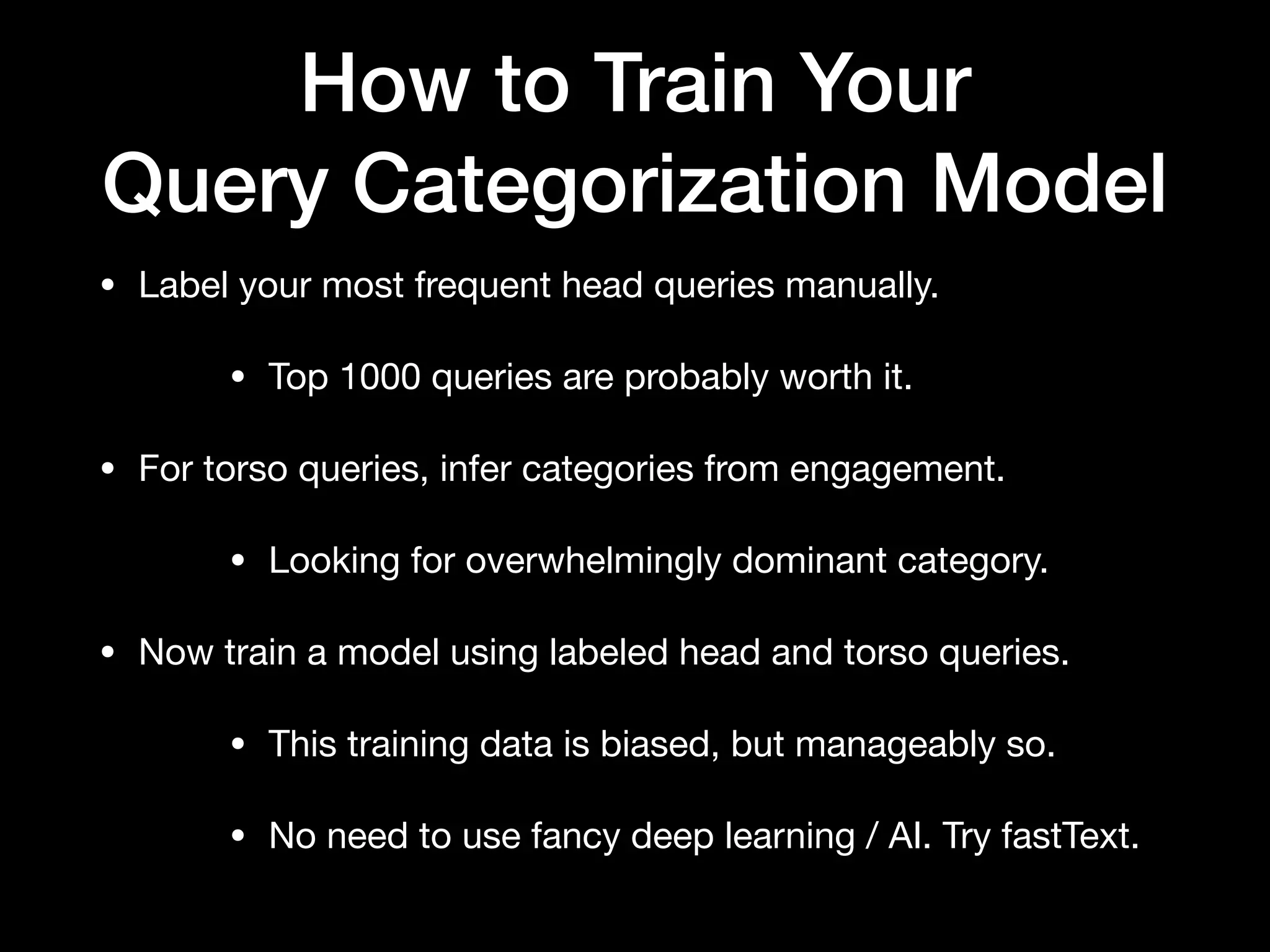

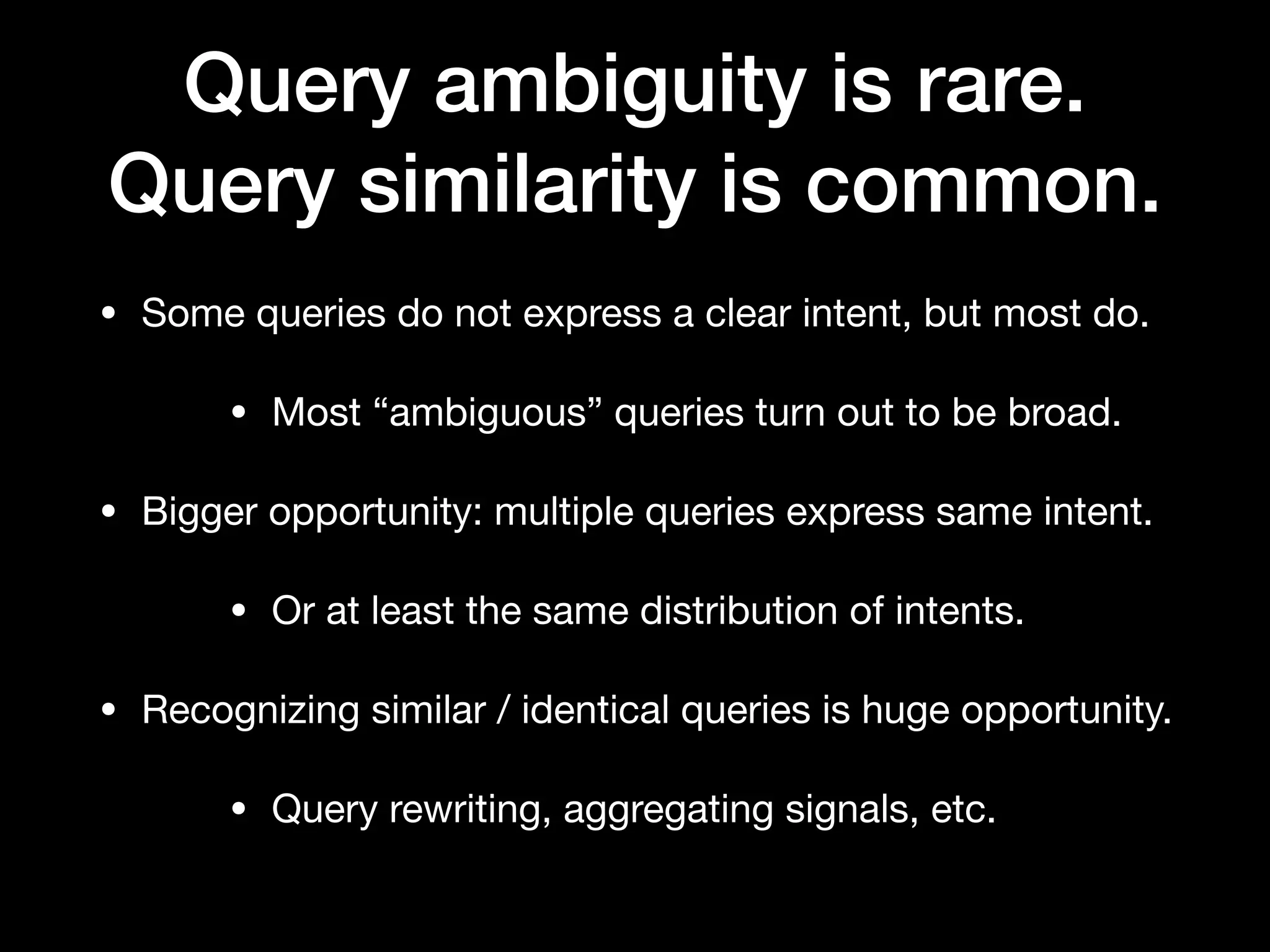

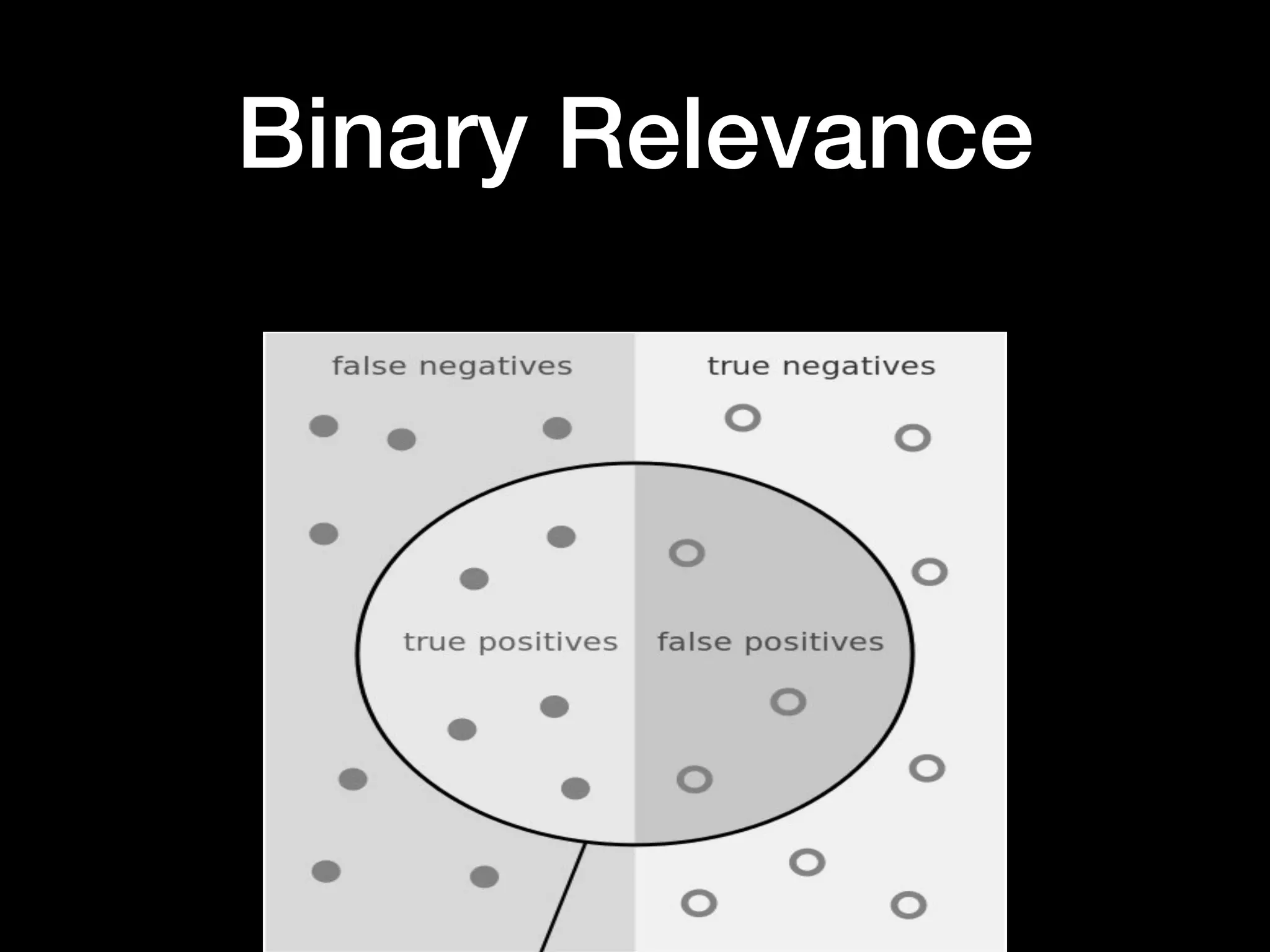

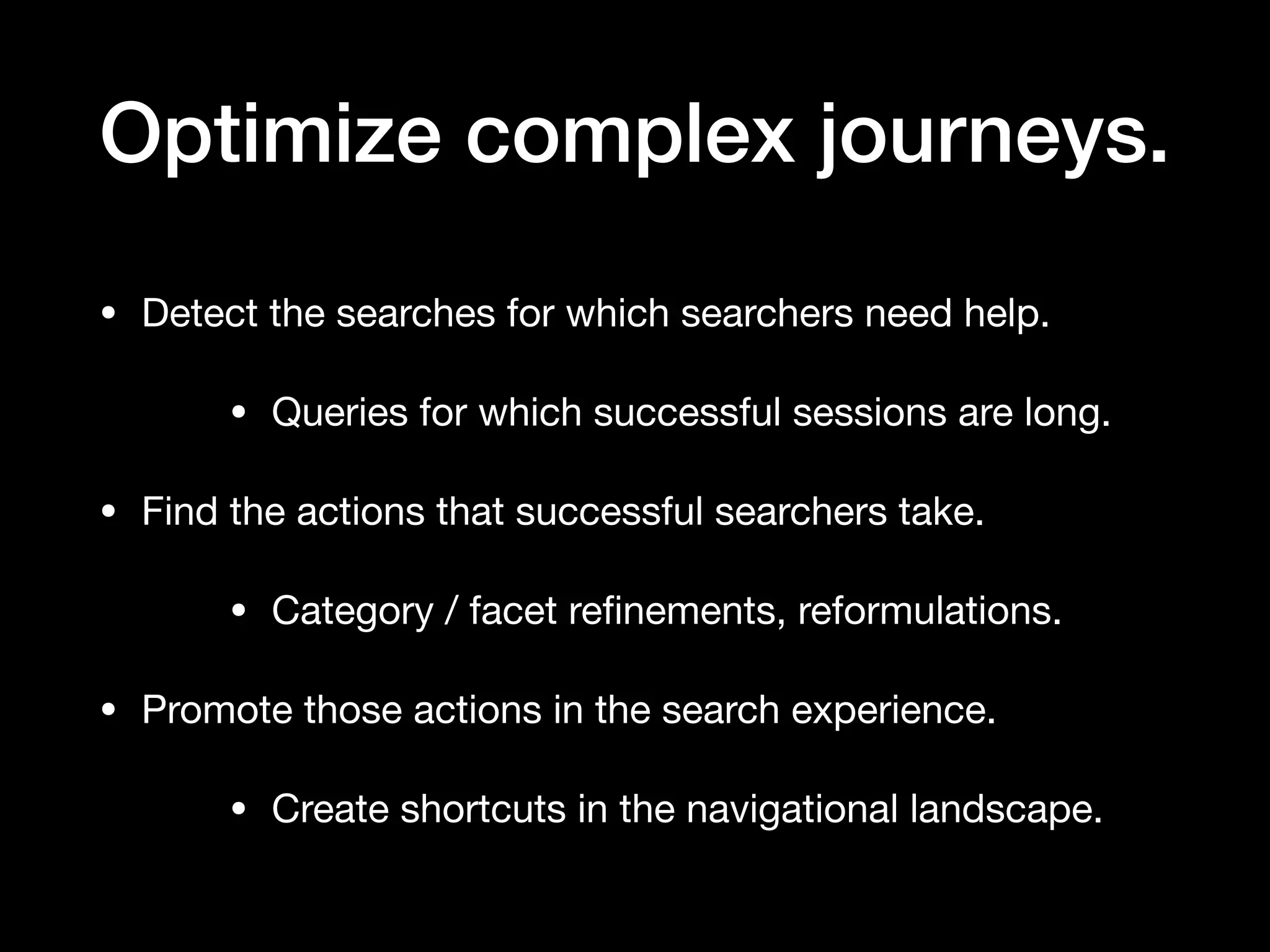

Daniel Tunkelang's presentation at the Wikimedia Foundation on April 27, 2020, examines how search works and emphasizes the metrics, models, and methods needed to build an effective search engine. Key insights include the distinction between known-item and exploratory searches, the need to understand user intent, and the iterative nature of the search process. The presentation also stresses optimizing query performance and learning from the behavior of successful searchers to improve the overall user experience.