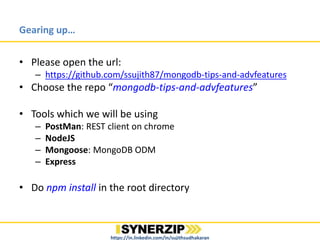

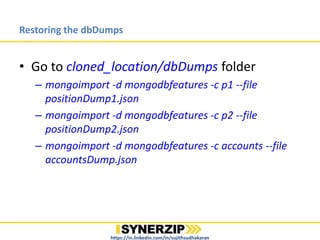

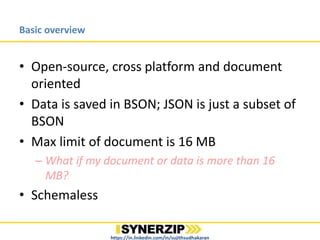

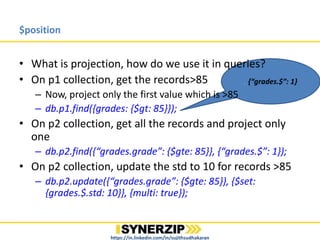

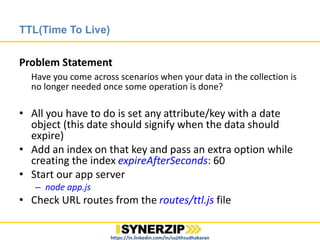

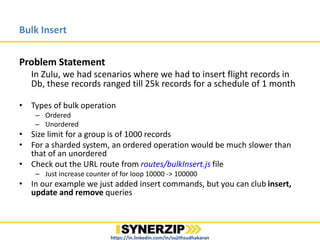

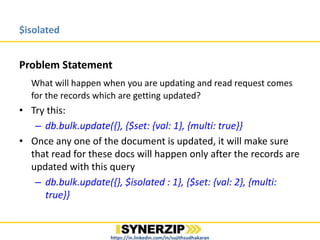

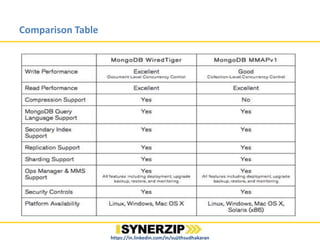

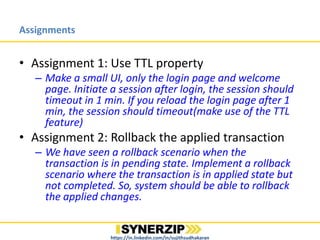

This document gives an overview of advanced MongoDB features and database management tips, using Postman, Node.js, and Mongoose. It covers database restoration, bulk operations, time-to-live (TTL) properties, journaling, GridFS for handling large files, and transaction semantics. It also includes practical assignments for applying these concepts in a real-world context.