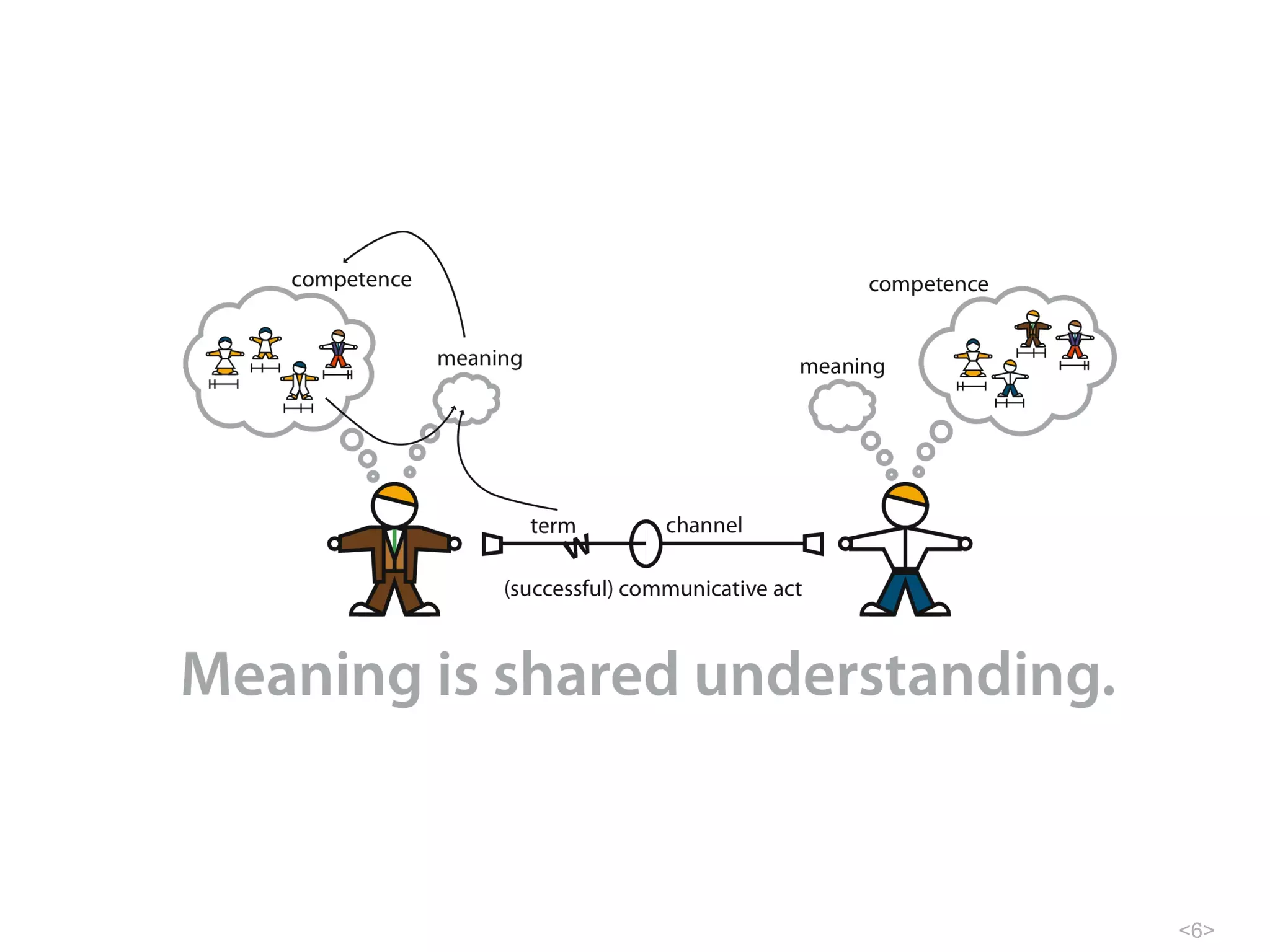

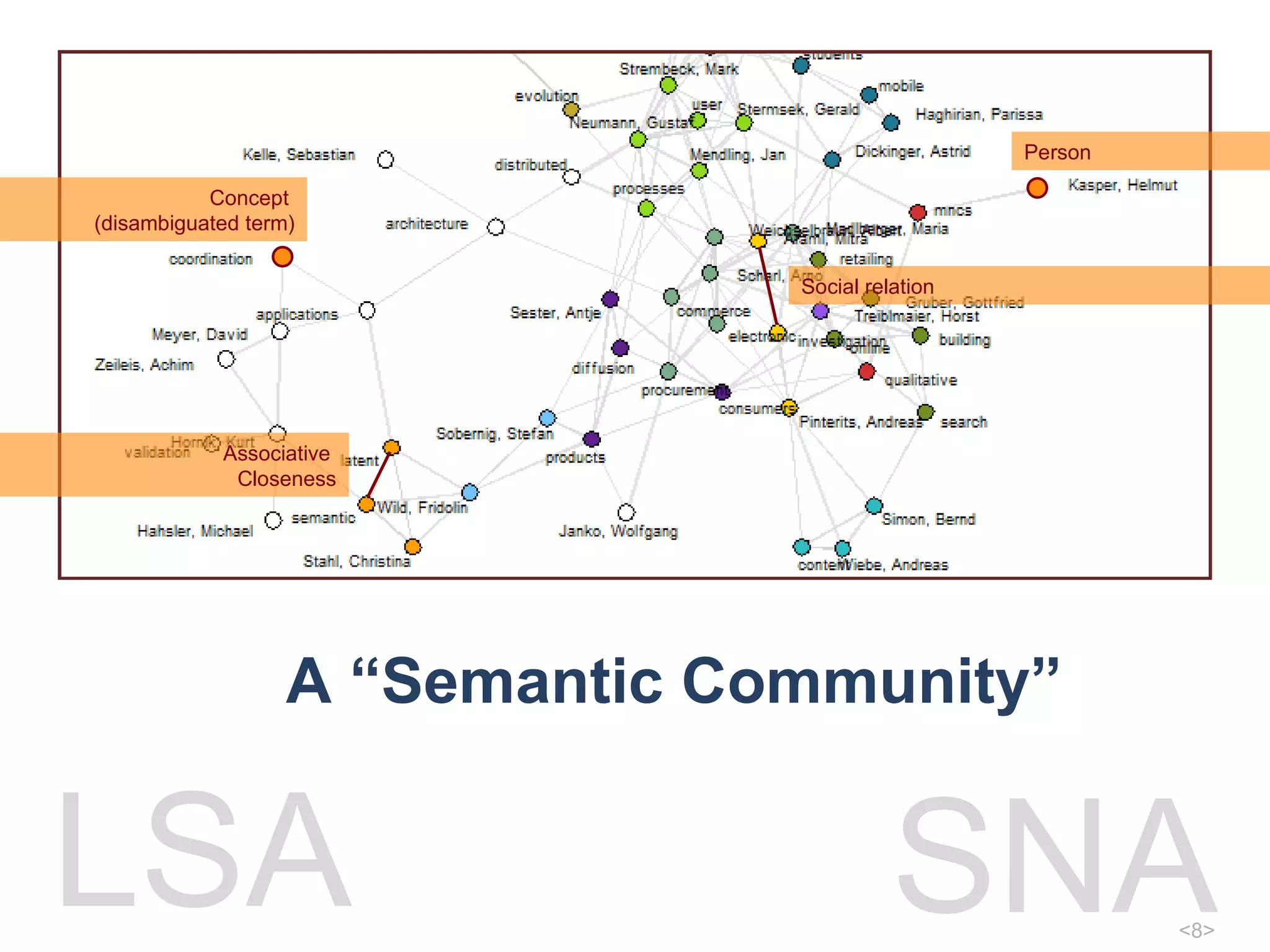

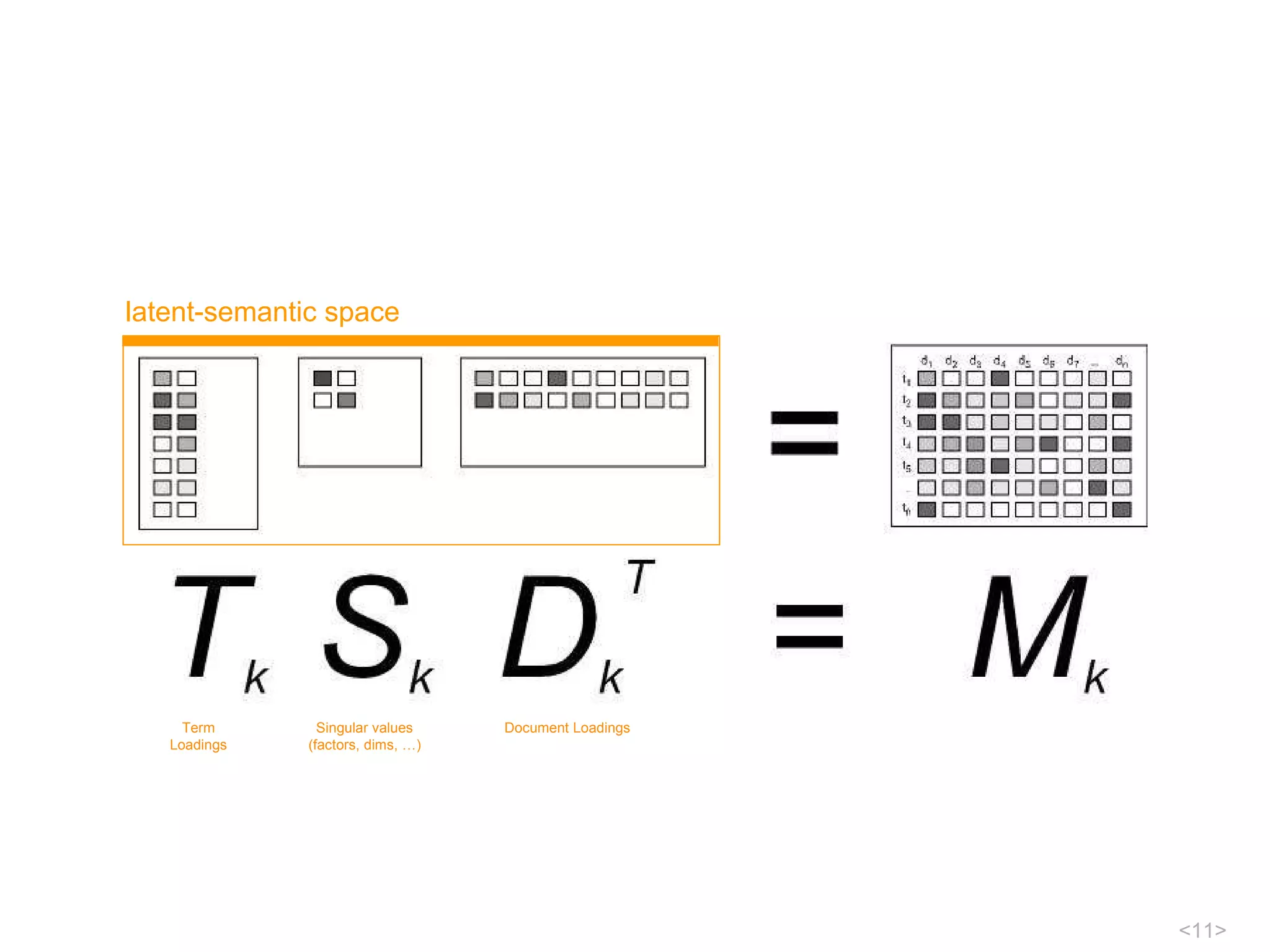

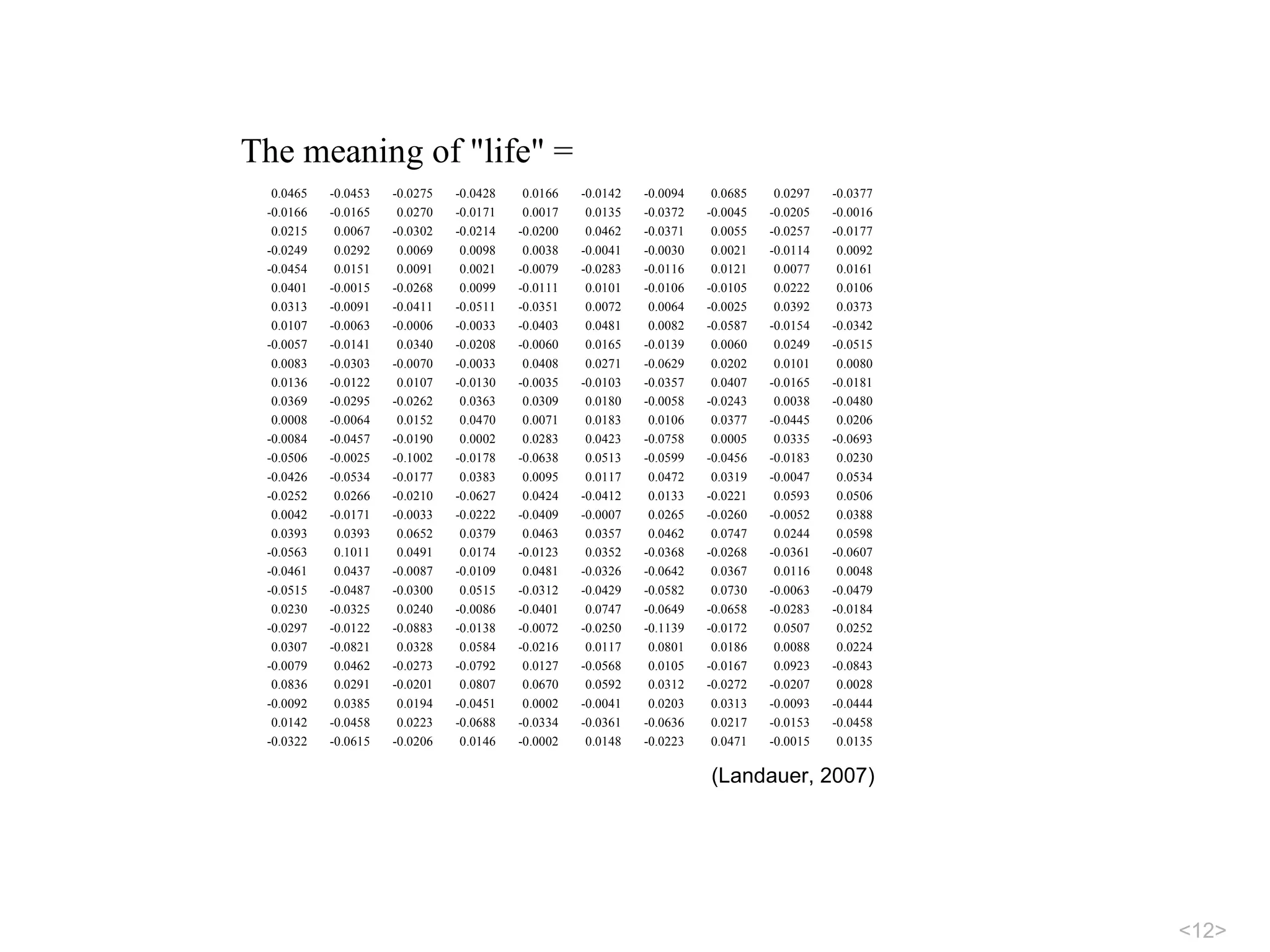

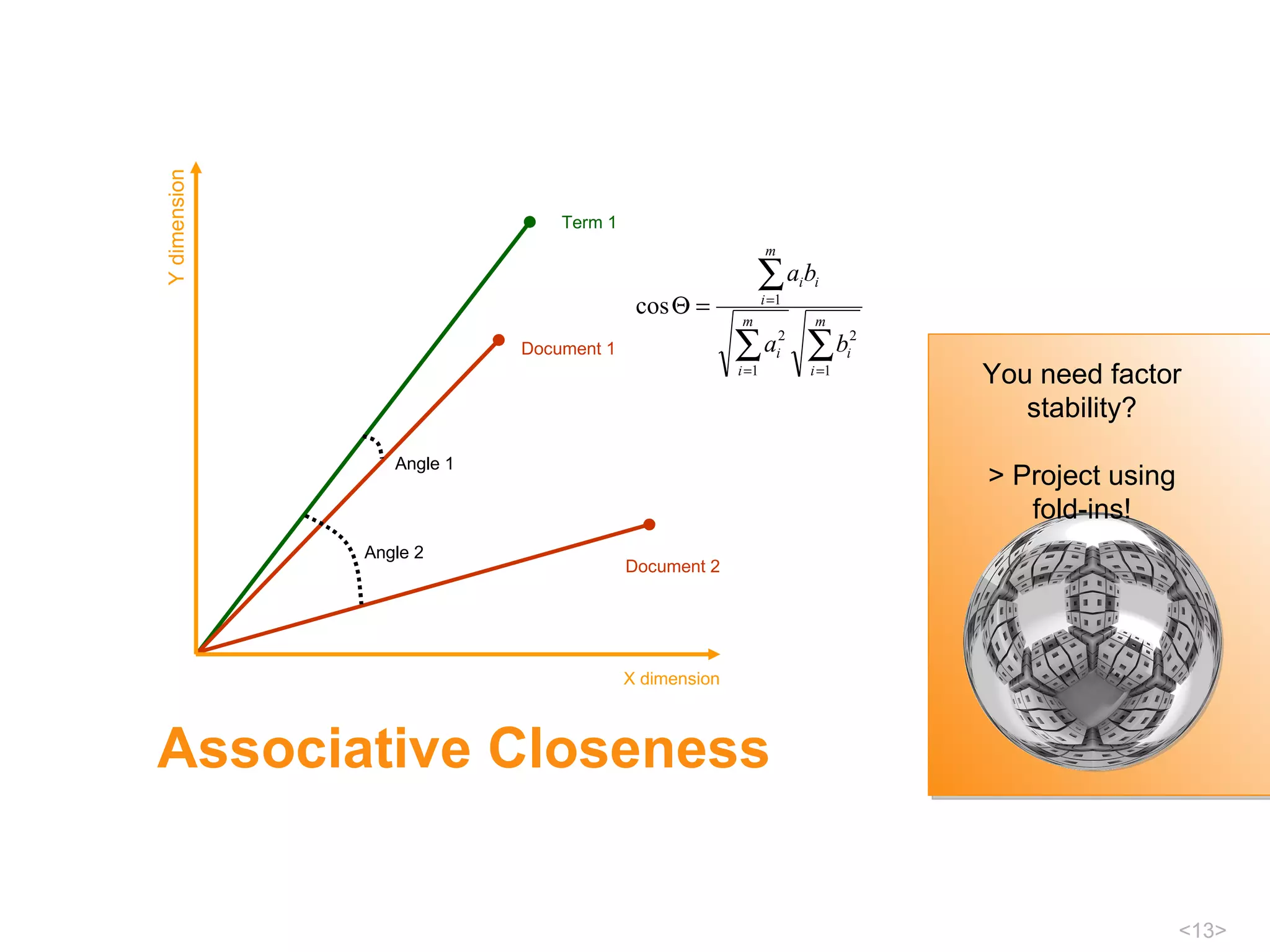

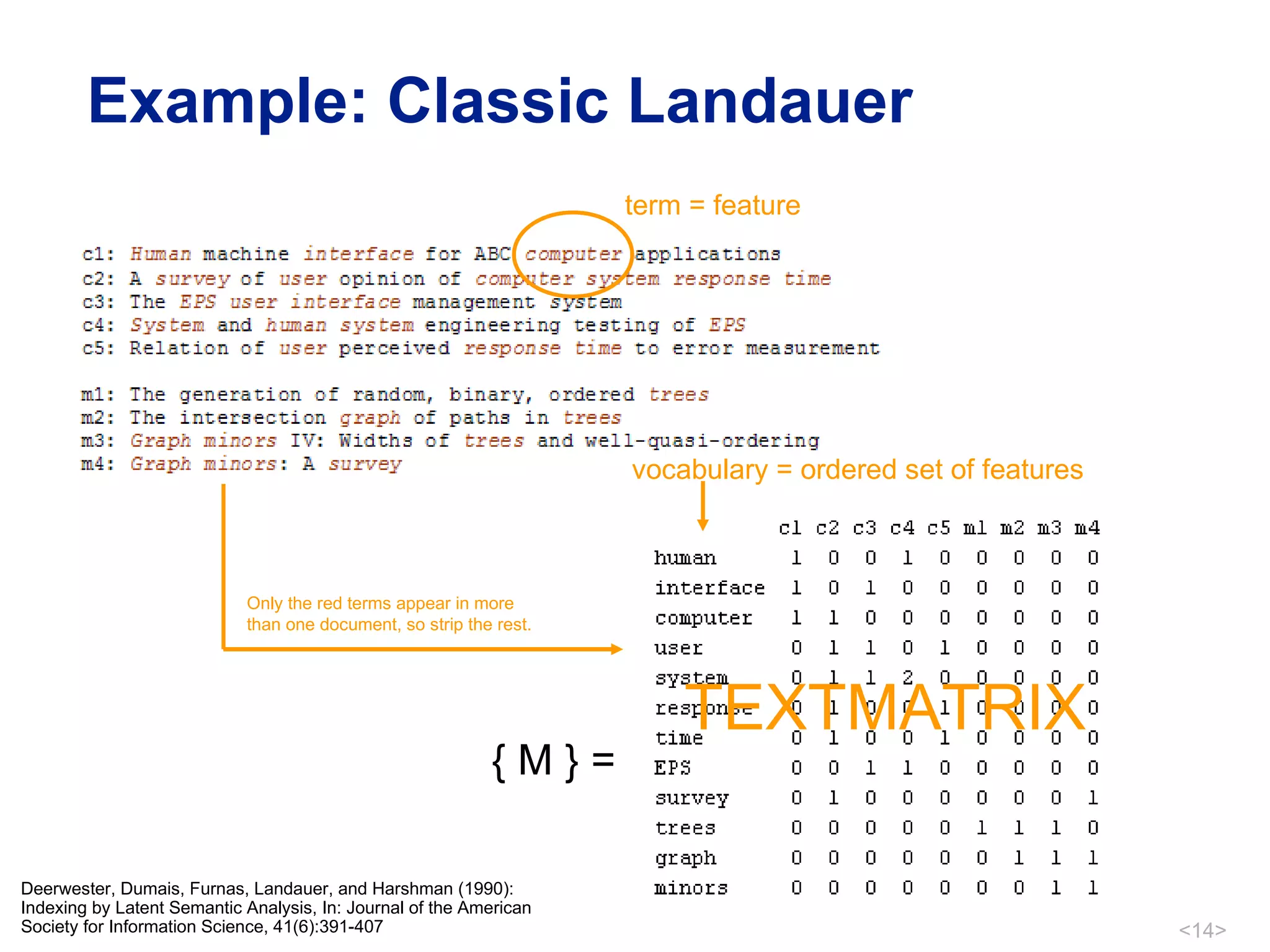

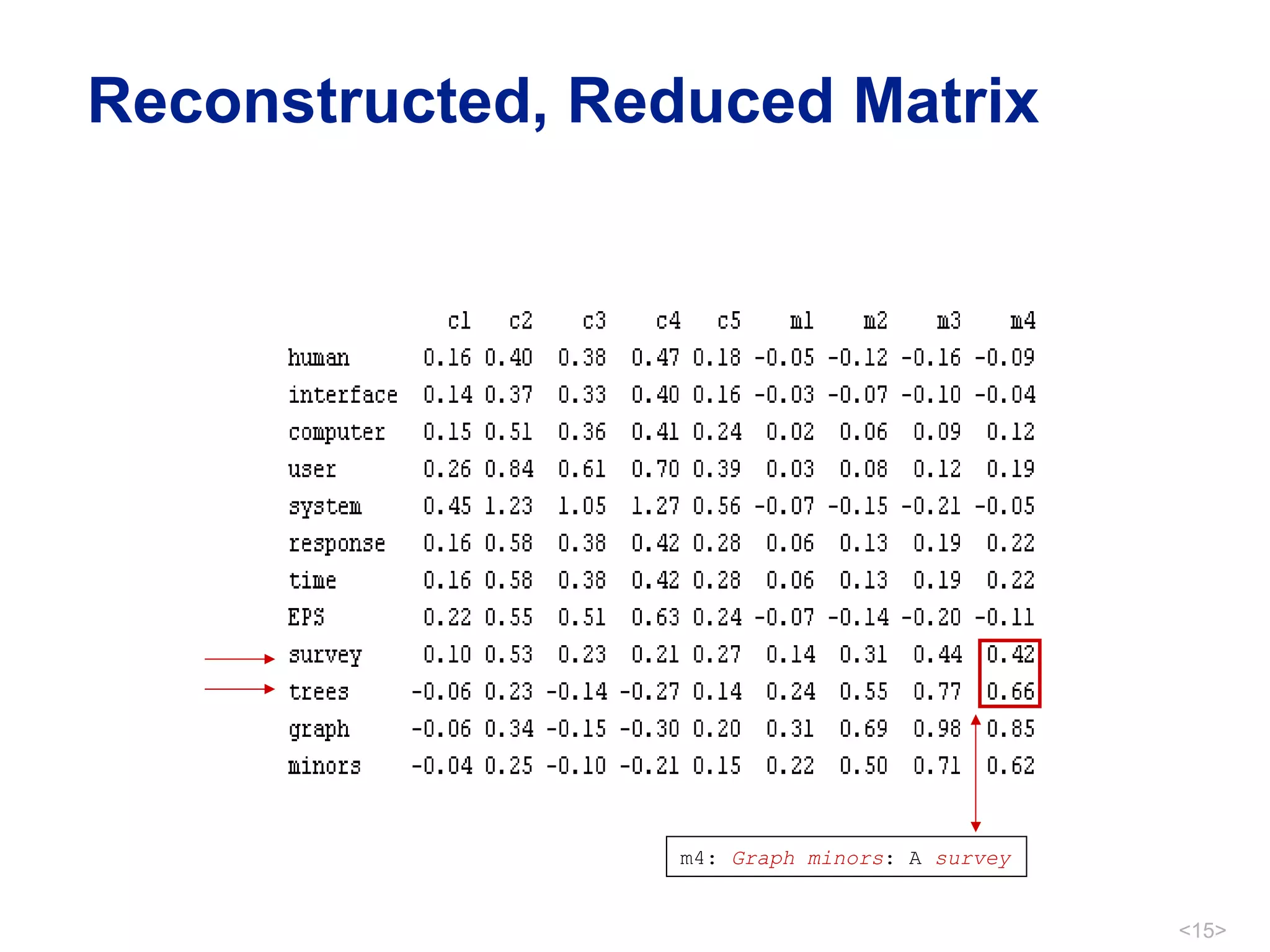

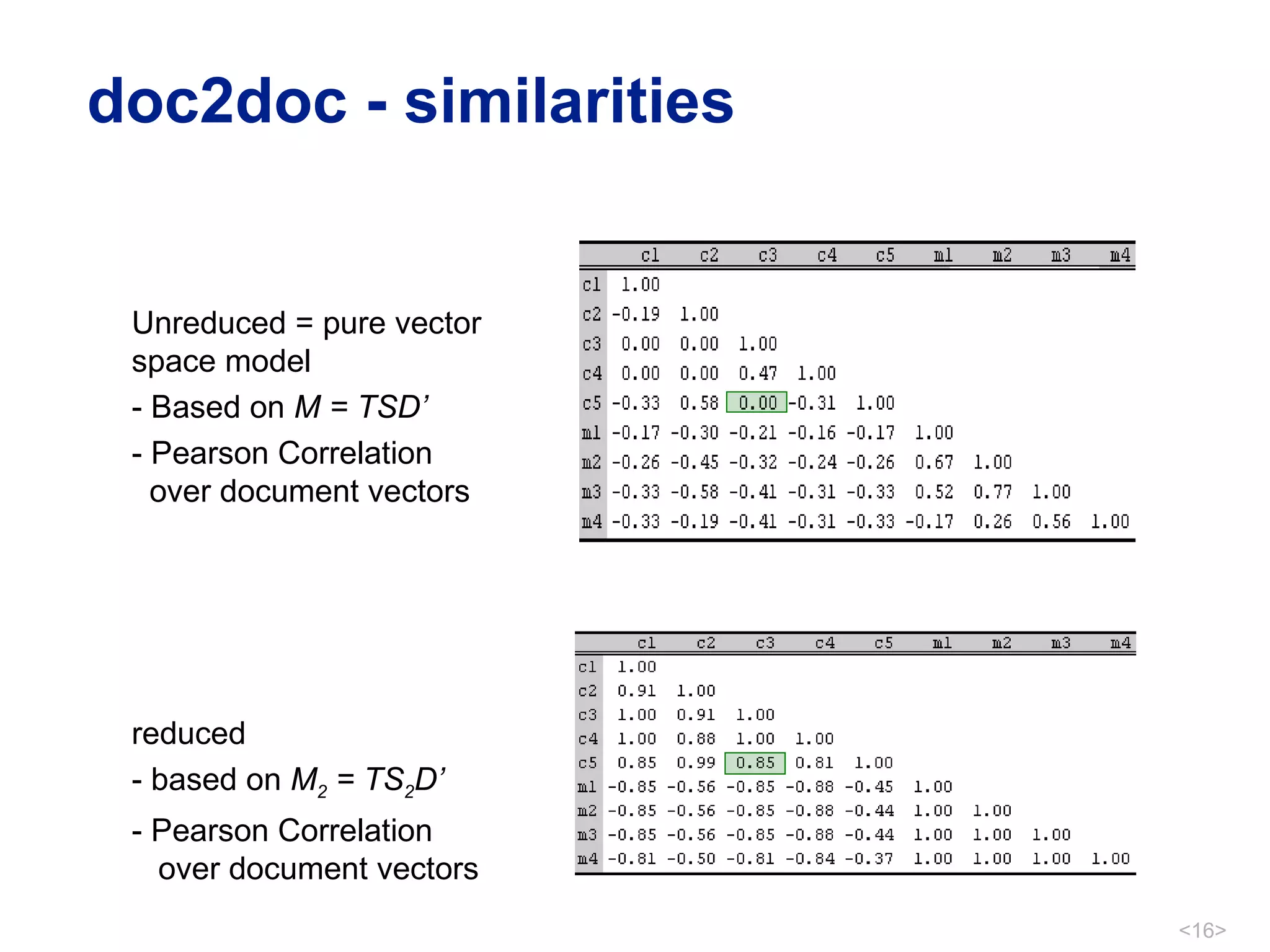

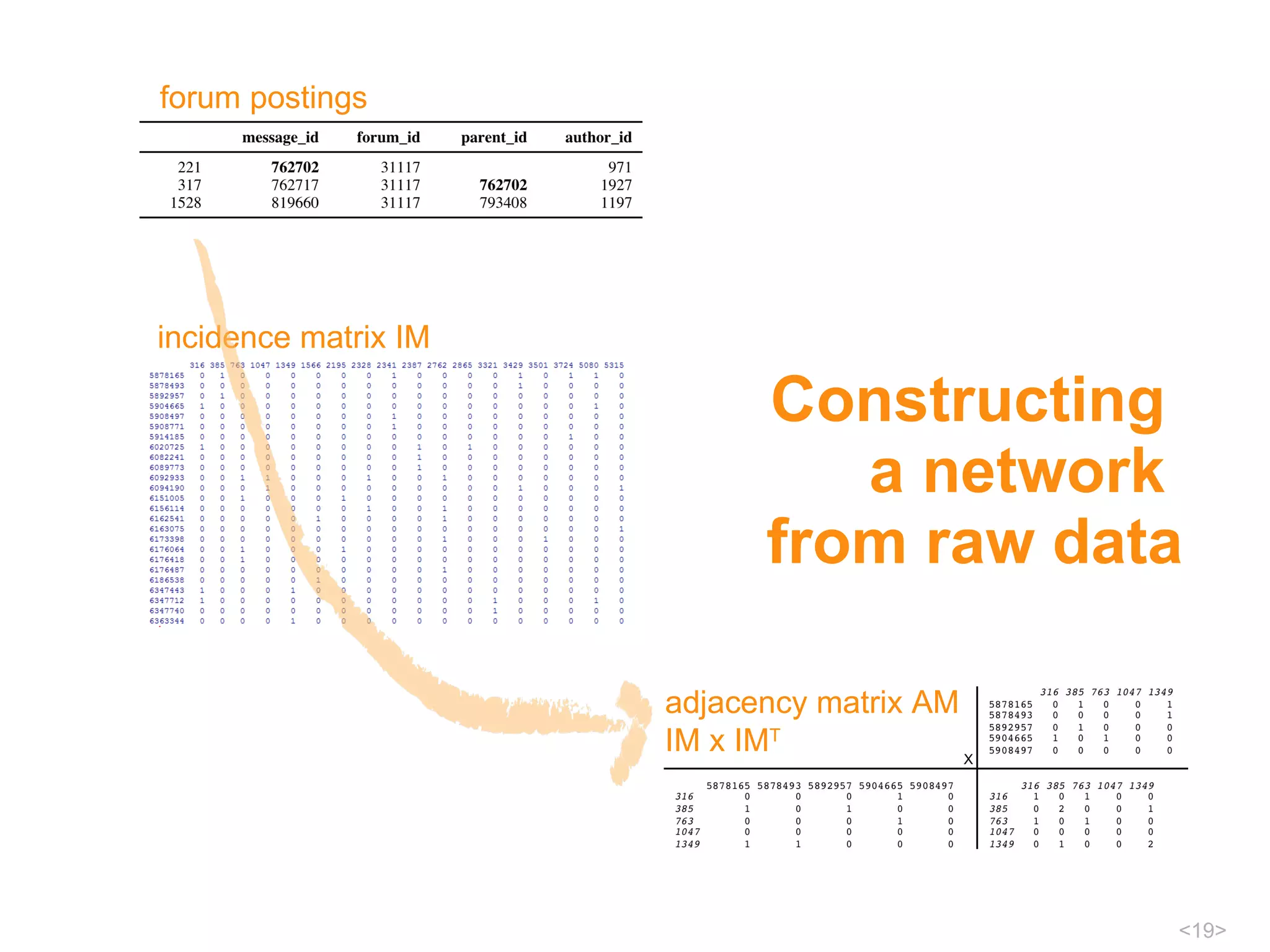

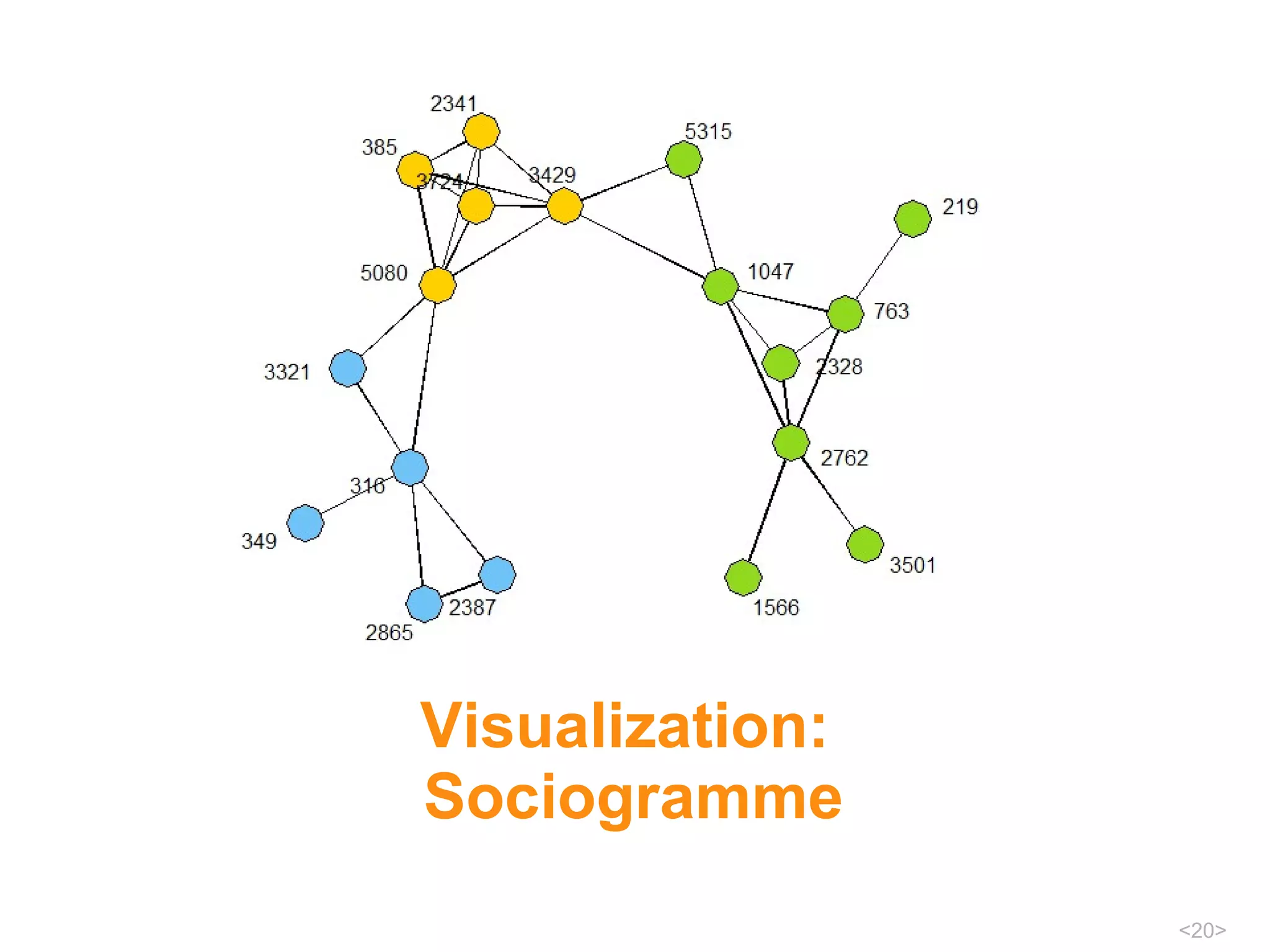

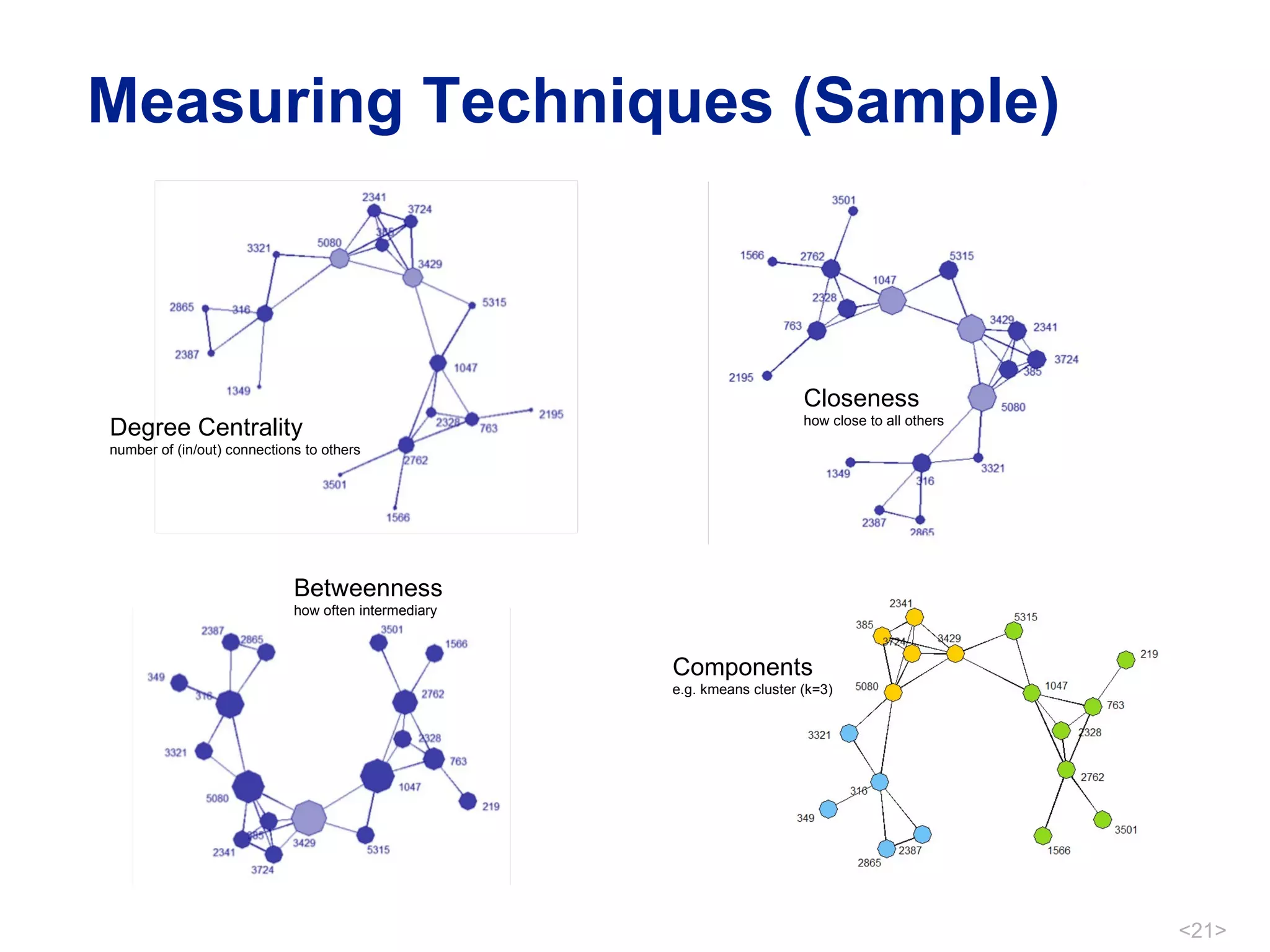

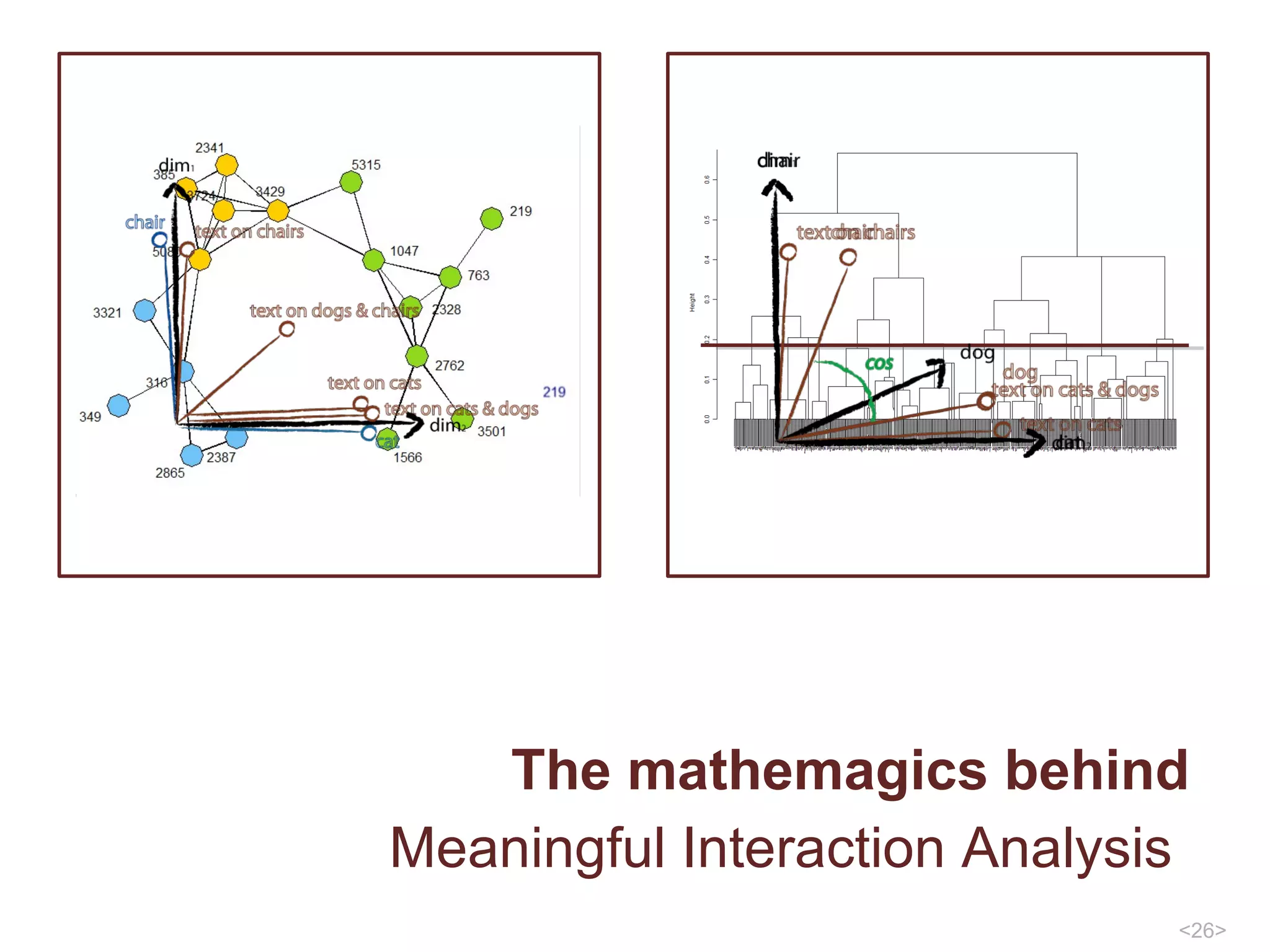

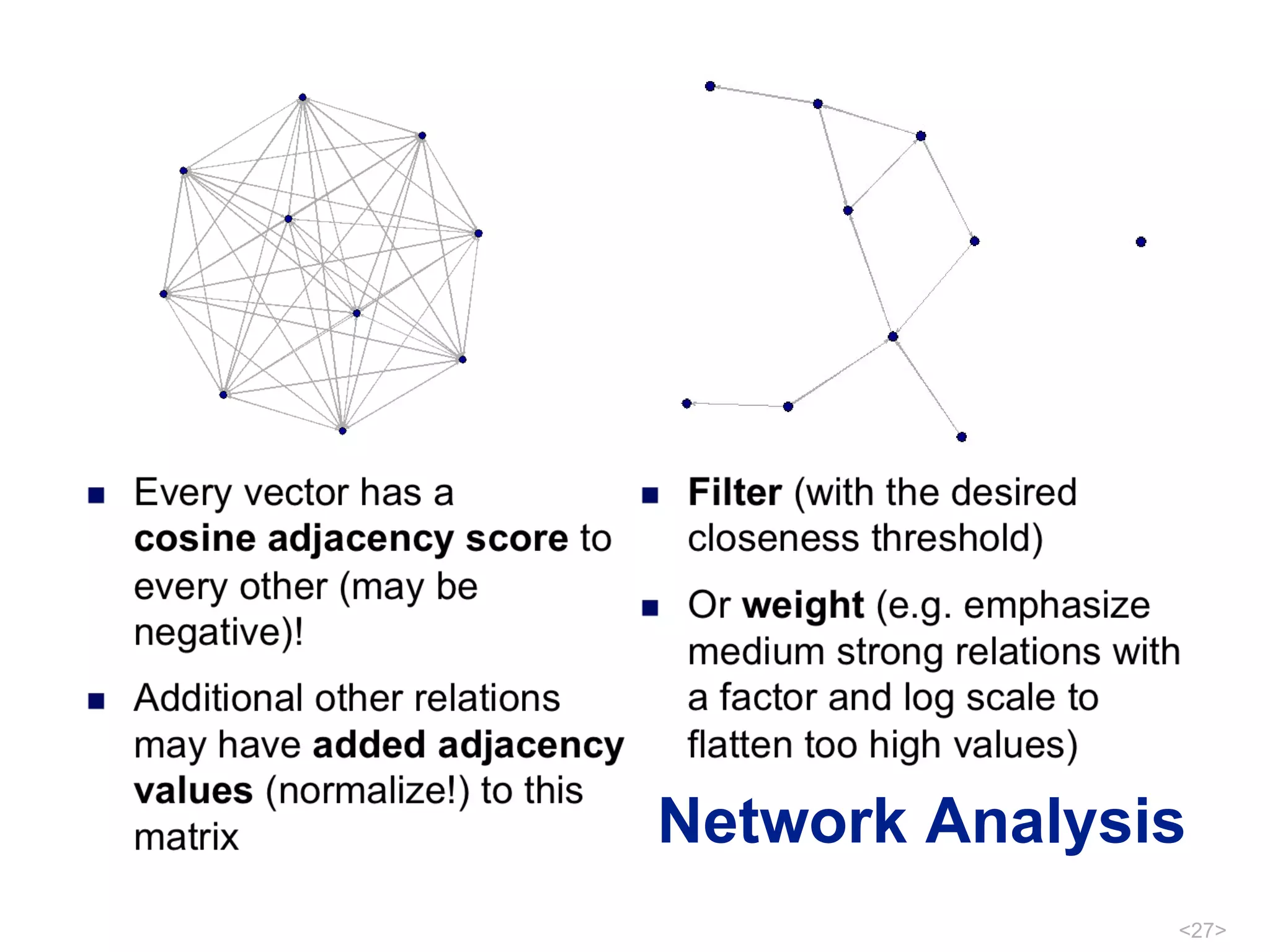

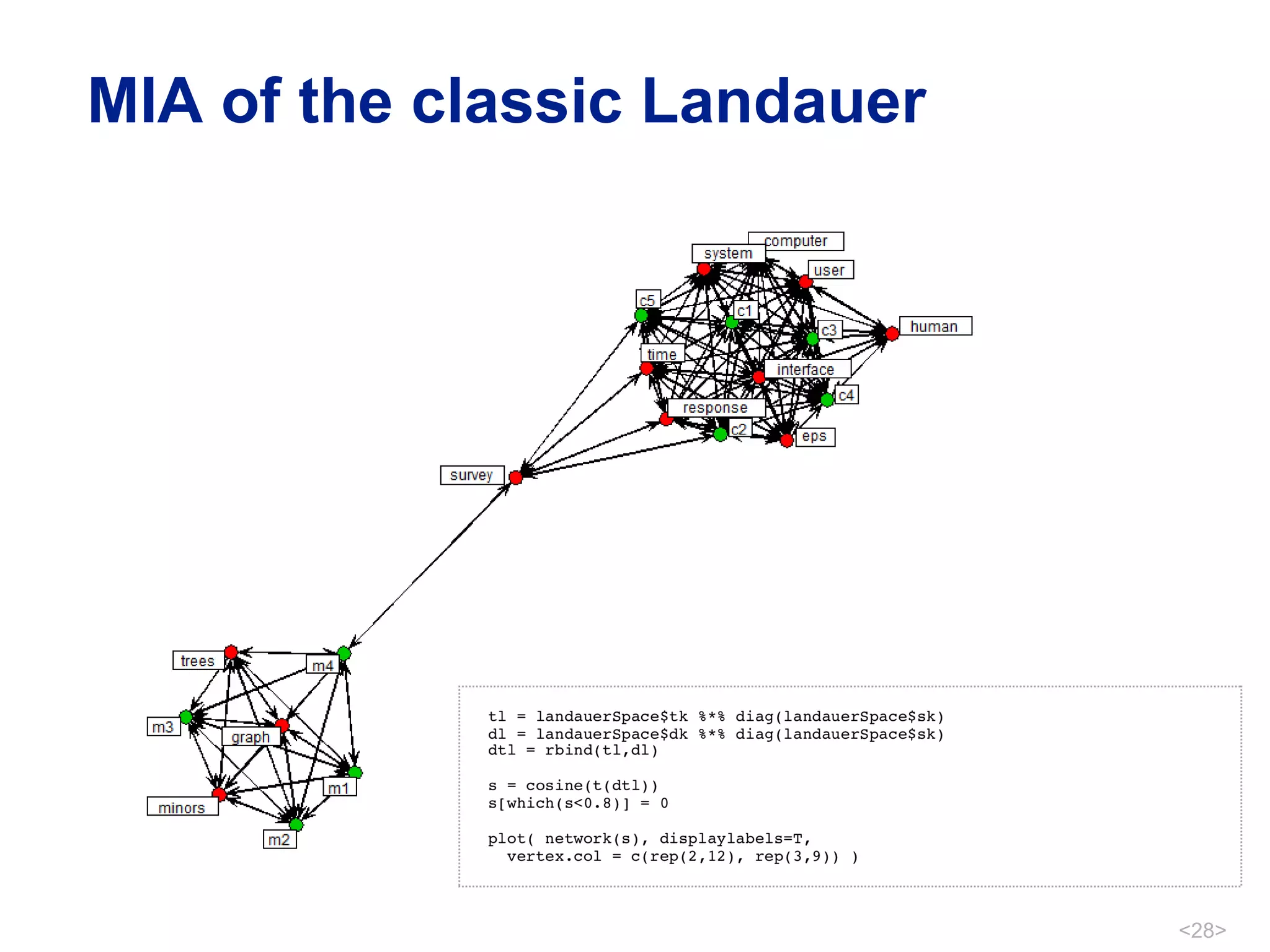

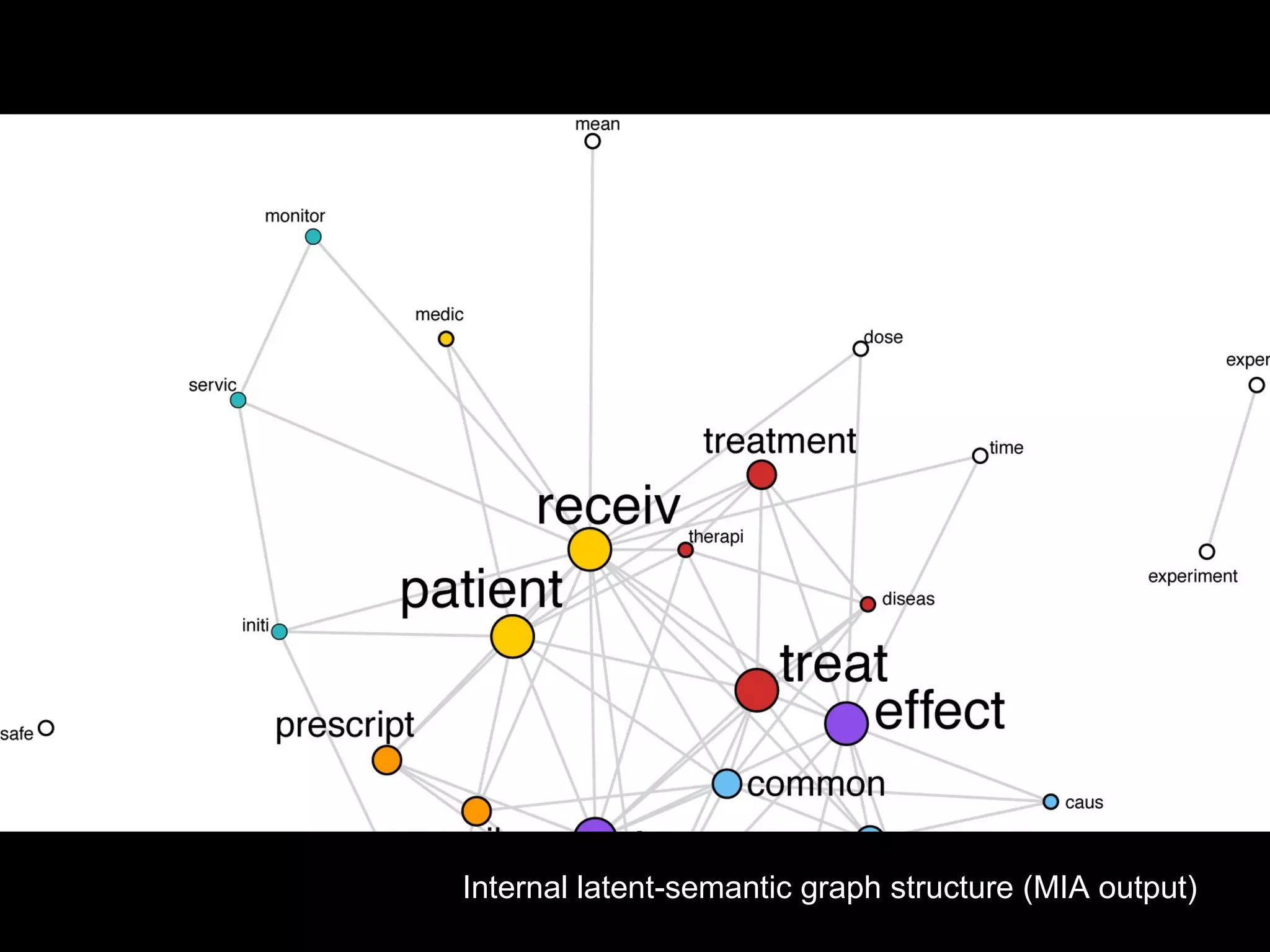

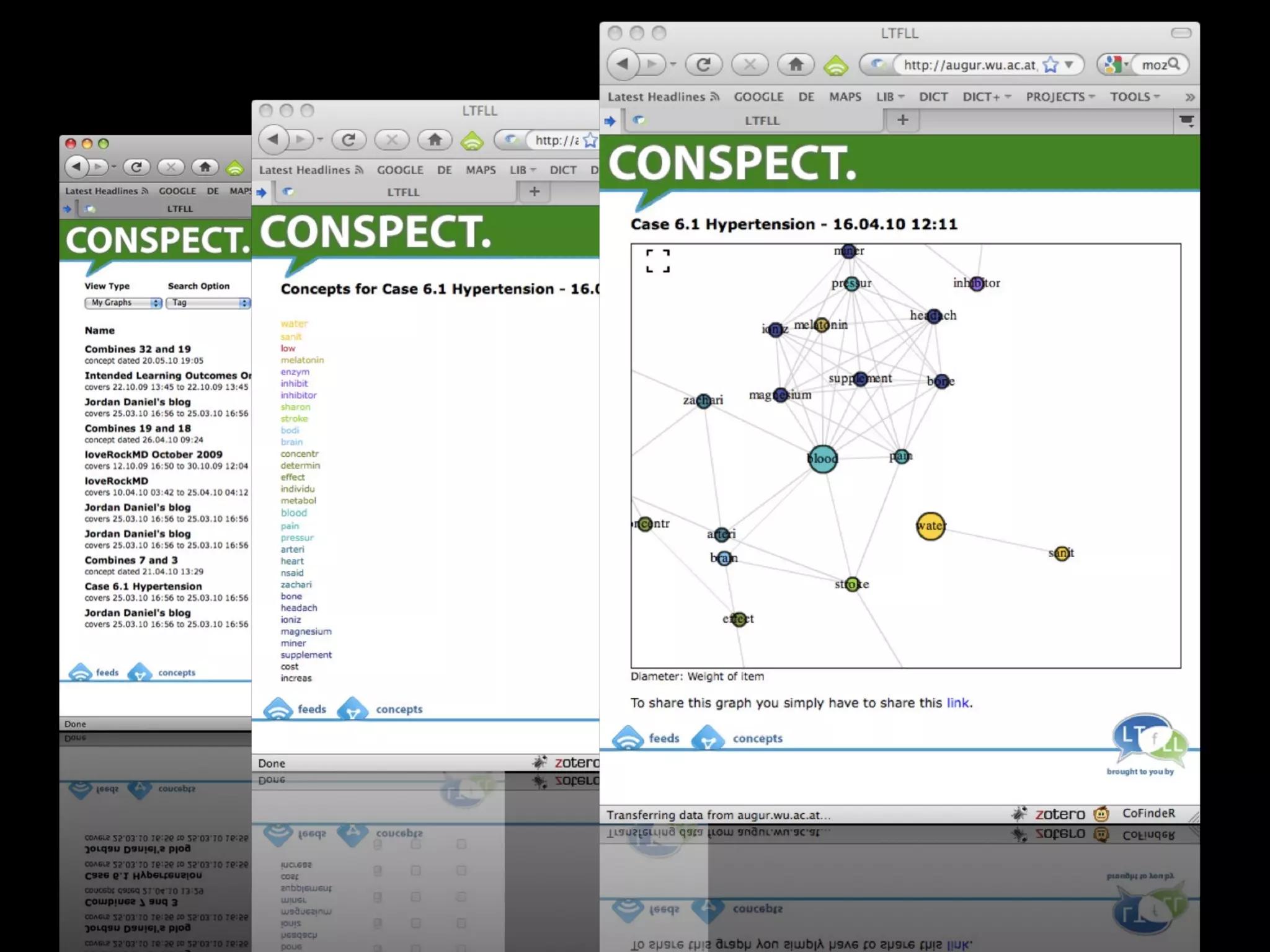

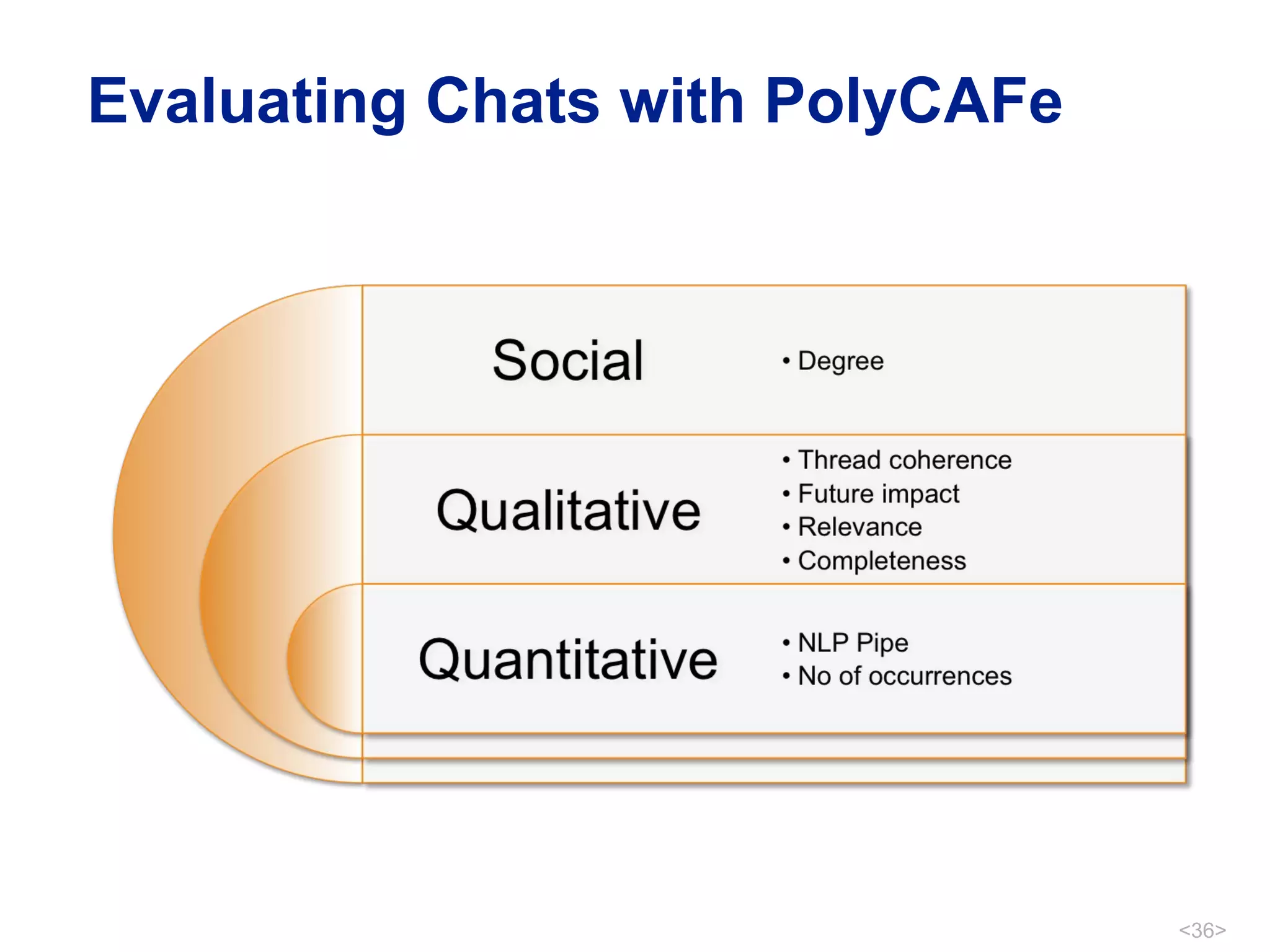

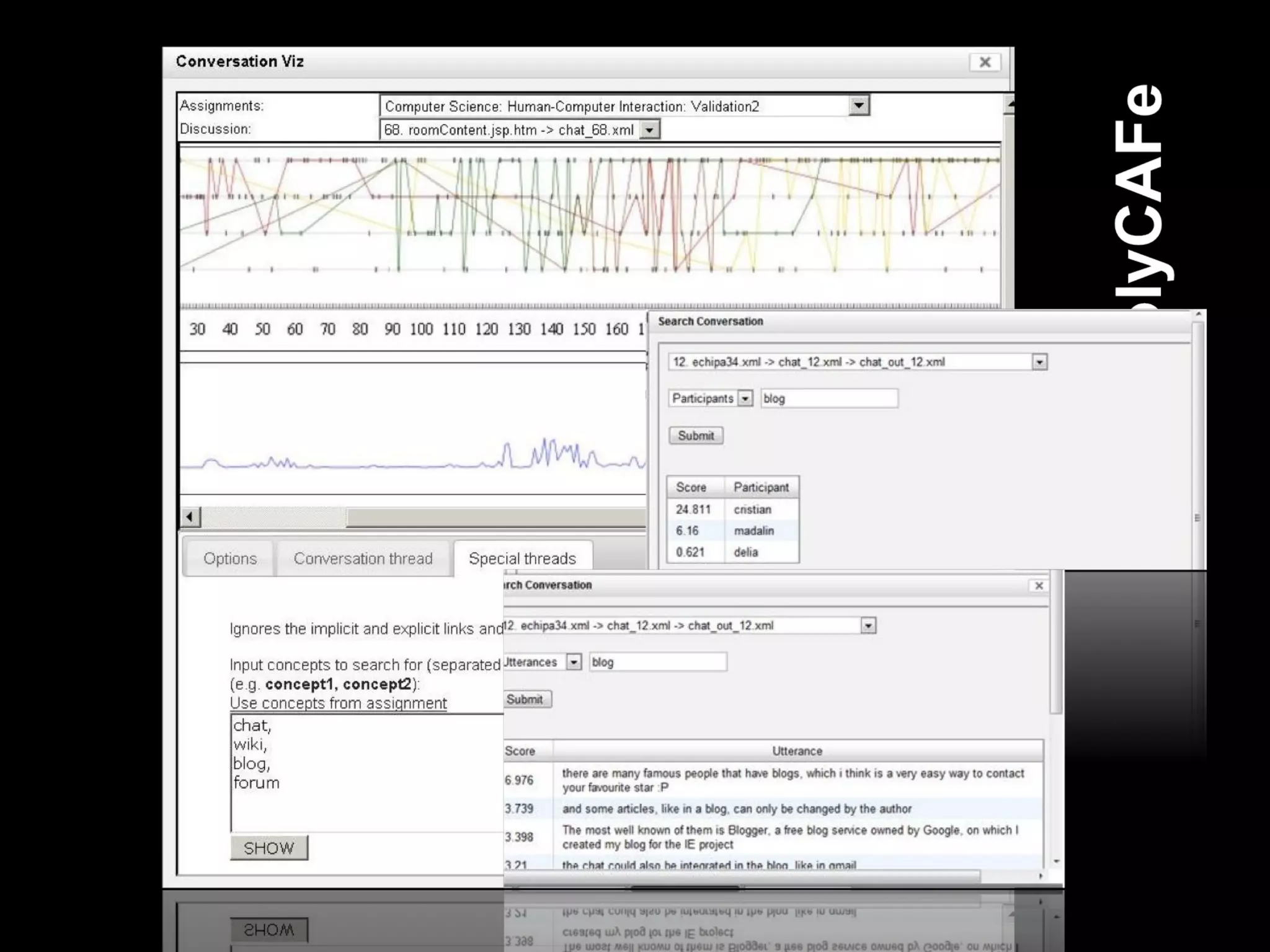

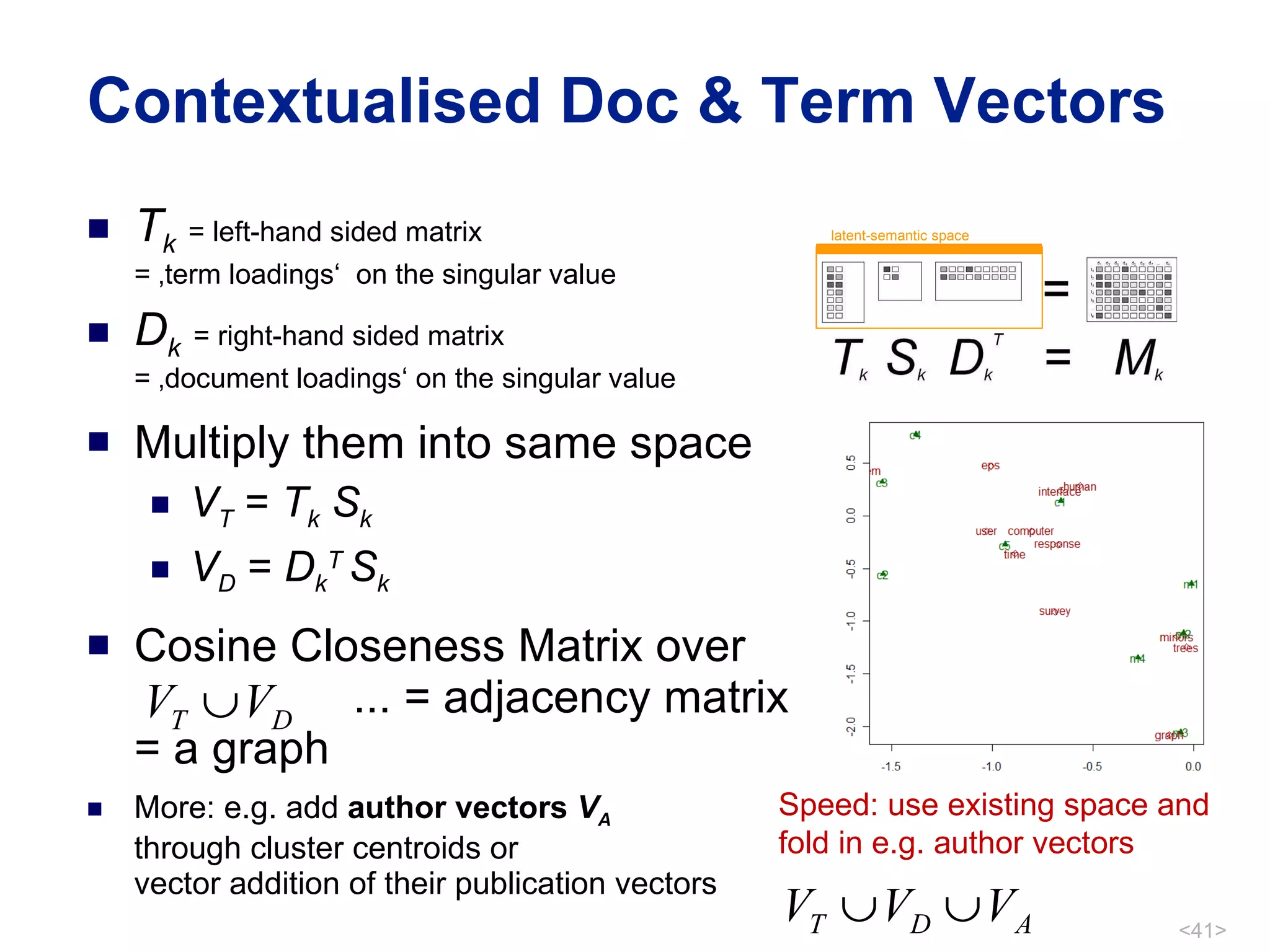

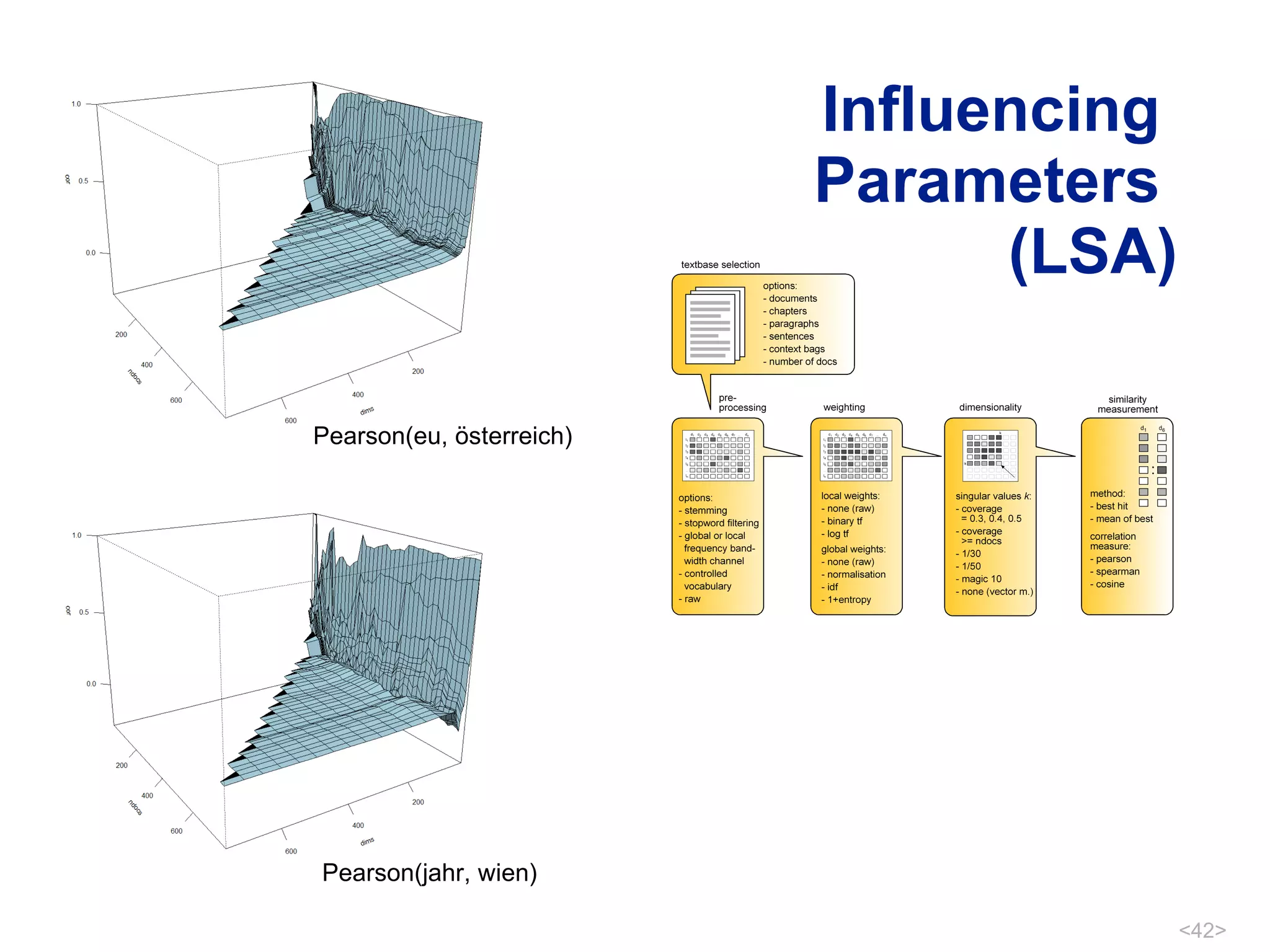

This document discusses theories of latent semantics and social interaction. It outlines latent semantic analysis (LSA) and social network analysis (SNA) as methods for analyzing meaning and interaction, respectively. It then proposes meaningful interaction analysis (MIA), a technique that combines LSA and SNA to study associative closeness structures and social relations in latent semantic spaces, and gives examples of applying MIA to forum postings, virtual meeting attendance, and blog subscriptions.
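The summary does not spell out how MIA joins the two methods, so the following is only a minimal sketch of one plausible reading: project documents into a latent semantic space with a truncated SVD (the LSA step), link documents whose cosine similarity exceeds a threshold, and compute a simple network measure on the resulting graph (the SNA step). The function names, the toy term-document matrix, the choice of `k`, and the `0.8` threshold are all illustrative assumptions, not the author's procedure.

```python
import numpy as np


def latent_doc_vectors(term_doc, k):
    """LSA step: document coordinates in a k-dimensional latent space."""
    _, s, vt = np.linalg.svd(term_doc, full_matrices=False)
    # Each row of the result is one document: diag(s_k) @ vt_k, transposed.
    return (s[:k, None] * vt[:k, :]).T


def cosine_matrix(vectors):
    """Pairwise cosine similarity between row vectors."""
    norms = np.linalg.norm(vectors, axis=1, keepdims=True)
    norms[norms == 0] = 1.0  # avoid division by zero for empty documents
    unit = vectors / norms
    return unit @ unit.T


def closeness_network(term_doc, k=2, threshold=0.8):
    """Link documents that are close in the latent space (assumed threshold)."""
    sims = cosine_matrix(latent_doc_vectors(term_doc, k))
    adjacency = (sims >= threshold).astype(int)
    np.fill_diagonal(adjacency, 0)  # no self-loops
    return adjacency


def degree_centrality(adjacency):
    """SNA step: share of other nodes each node is linked to."""
    n = adjacency.shape[0]
    return adjacency.sum(axis=1) / (n - 1)


# Hypothetical toy data: rows are terms, columns are four forum postings.
# Postings 0 and 1 share one vocabulary, postings 2 and 3 share another.
toy_term_doc = np.array([
    [2, 1, 0, 0],
    [1, 2, 0, 0],
    [0, 0, 2, 1],
    [0, 0, 1, 2],
], dtype=float)

toy_adjacency = closeness_network(toy_term_doc, k=2, threshold=0.8)
toy_centrality = degree_centrality(toy_adjacency)
```

In this toy case, postings that use the same vocabulary end up linked and the two groups stay disconnected. A real analysis would likely weight the term-document matrix (for example with TF-IDF), pick `k` empirically, and use a dedicated graph library for richer network measures.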