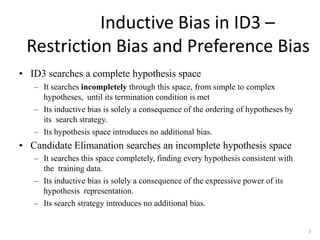

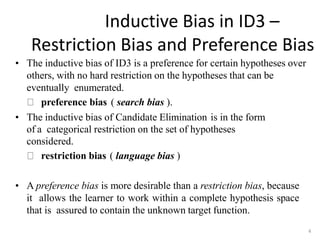

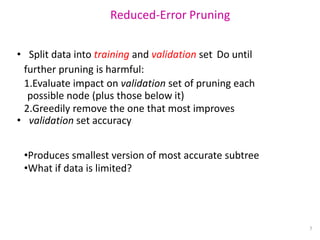

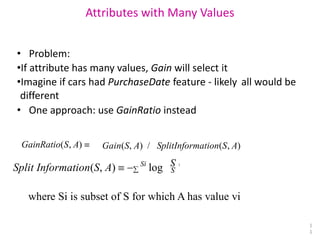

The document discusses various machine learning concepts, focusing on the decision tree learning algorithm ID3 and its inductive biases, including preference and restriction bias. It addresses overfitting and techniques to avoid it, such as pre-pruning and post-pruning of trees, as well as methods for handling continuous attributes and attributes with costs. Additionally, it compares different learning approaches and provides resources for further reading.

![Attributes with Costs

• Consider

•medical diagnosis, BloodTest has cost $150

•robotics, Width_from_1ft has cost 23 second

• How to learn consistent tree with low expected cost?

Approaches: replace gain by

• Tan and Schlimmer (1990) Gain2 (S, A) Cost( A)

• Nunez (1988)

• 2Gain( S , A) 1 / (Cost( A) 1)w

• where w [0,1] and determines importance of cost

1

2](https://image.slidesharecdn.com/machinelearning-entropy-240428132048-5998e5fc/85/MACHINE-LEARNING-ENTROPY-INFORMATION-GAINpptx-12-320.jpg)