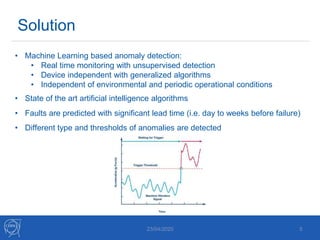

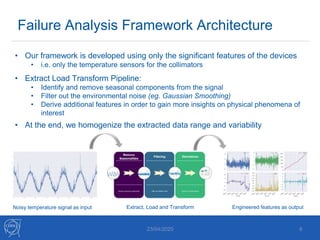

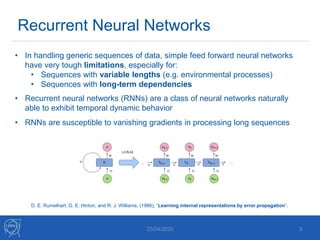

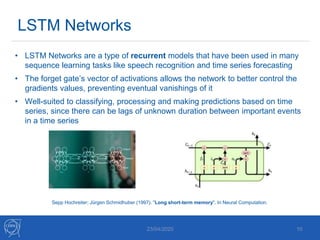

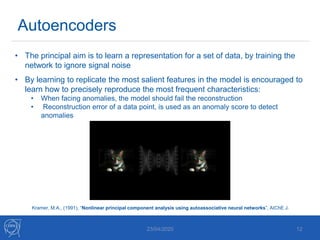

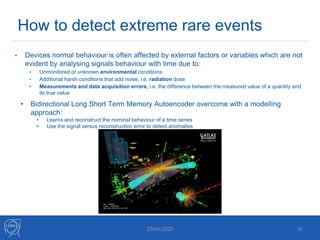

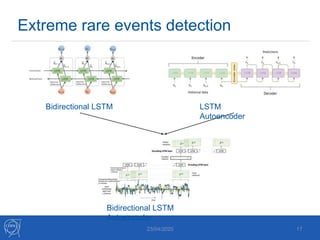

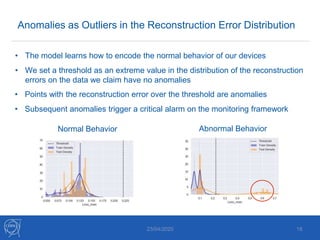

The document discusses machine learning algorithms for anomaly detection in the technical infrastructure of particle accelerators, with the aim of predicting failures before they occur. It presents several approaches, including Mahalanobis distance and LSTM networks, as well as a neural-network framework for real-time monitoring of device behavior. The methods aim to improve reliability, reduce downtime, and support predictive maintenance.
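
To make the Mahalanobis distance approach concrete, here is a minimal sketch of how such a detector is commonly built. It is not the implementation from the slides. The function names, the synthetic three-channel data, and the 99% quantile threshold are all assumptions chosen for illustration. The idea is to estimate a mean and covariance from readings taken during normal operation, then flag new readings whose distance from that reference exceeds the threshold.

```python
import numpy as np


def fit_reference(X):
    """Estimate mean and inverse covariance from normal-operation samples (rows)."""
    mean = X.mean(axis=0)
    cov = np.cov(X, rowvar=False)
    # The pseudo-inverse tolerates a near-singular covariance matrix.
    cov_inv = np.linalg.pinv(cov)
    return mean, cov_inv


def mahalanobis(X, mean, cov_inv):
    """Mahalanobis distance of each row of X from the reference distribution."""
    diff = X - mean
    d2 = np.einsum("ij,jk,ik->i", diff, cov_inv, diff)
    return np.sqrt(np.maximum(d2, 0.0))


# Synthetic stand-in for sensor readings (assumption: 3 channels, unit variance).
rng = np.random.default_rng(0)
normal_data = rng.normal(size=(500, 3))

mean, cov_inv = fit_reference(normal_data)

# Hypothetical threshold: the 99th percentile of distances seen in normal data.
threshold = np.quantile(mahalanobis(normal_data, mean, cov_inv), 0.99)

# One typical reading and one far outside the normal operating range.
new_readings = np.array([[0.1, -0.2, 0.3], [6.0, 6.0, 6.0]])
flags = mahalanobis(new_readings, mean, cov_inv) > threshold
```

Here `flags` marks the second reading as anomalous and the first as normal. In practice the threshold would be tuned against the false-alarm rate that operators can tolerate, and the reference statistics would be re-estimated as operating conditions change.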