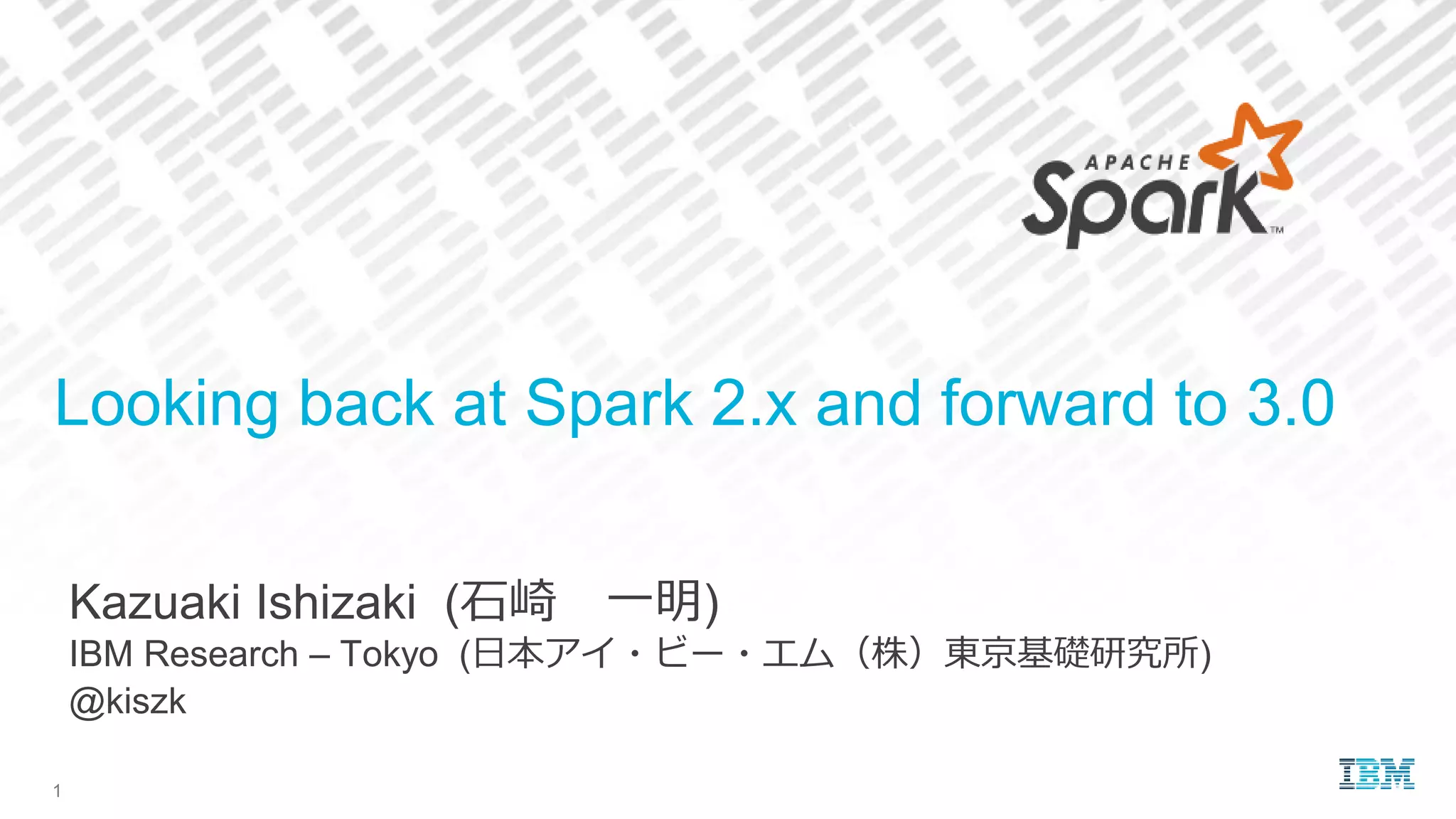

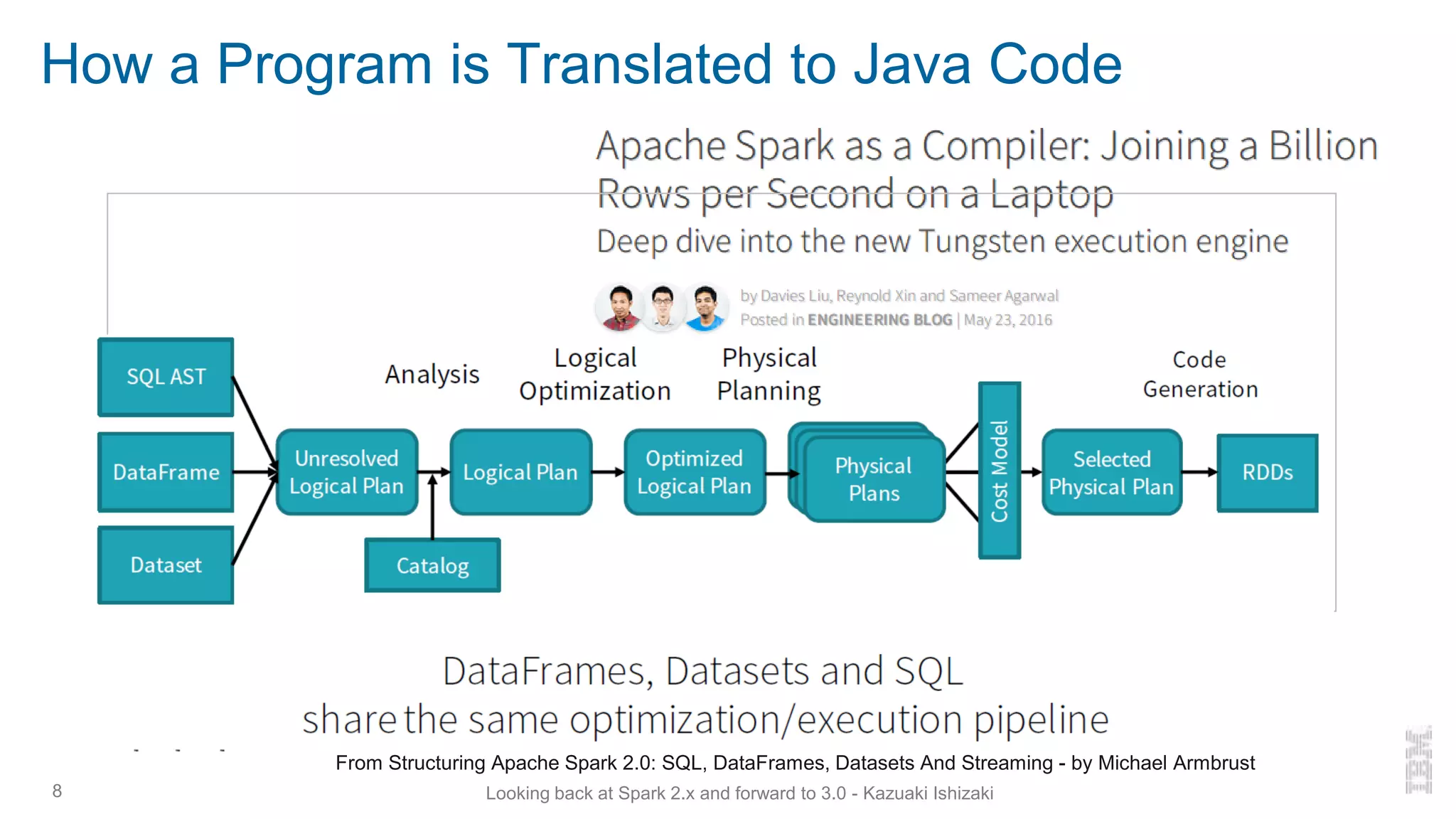

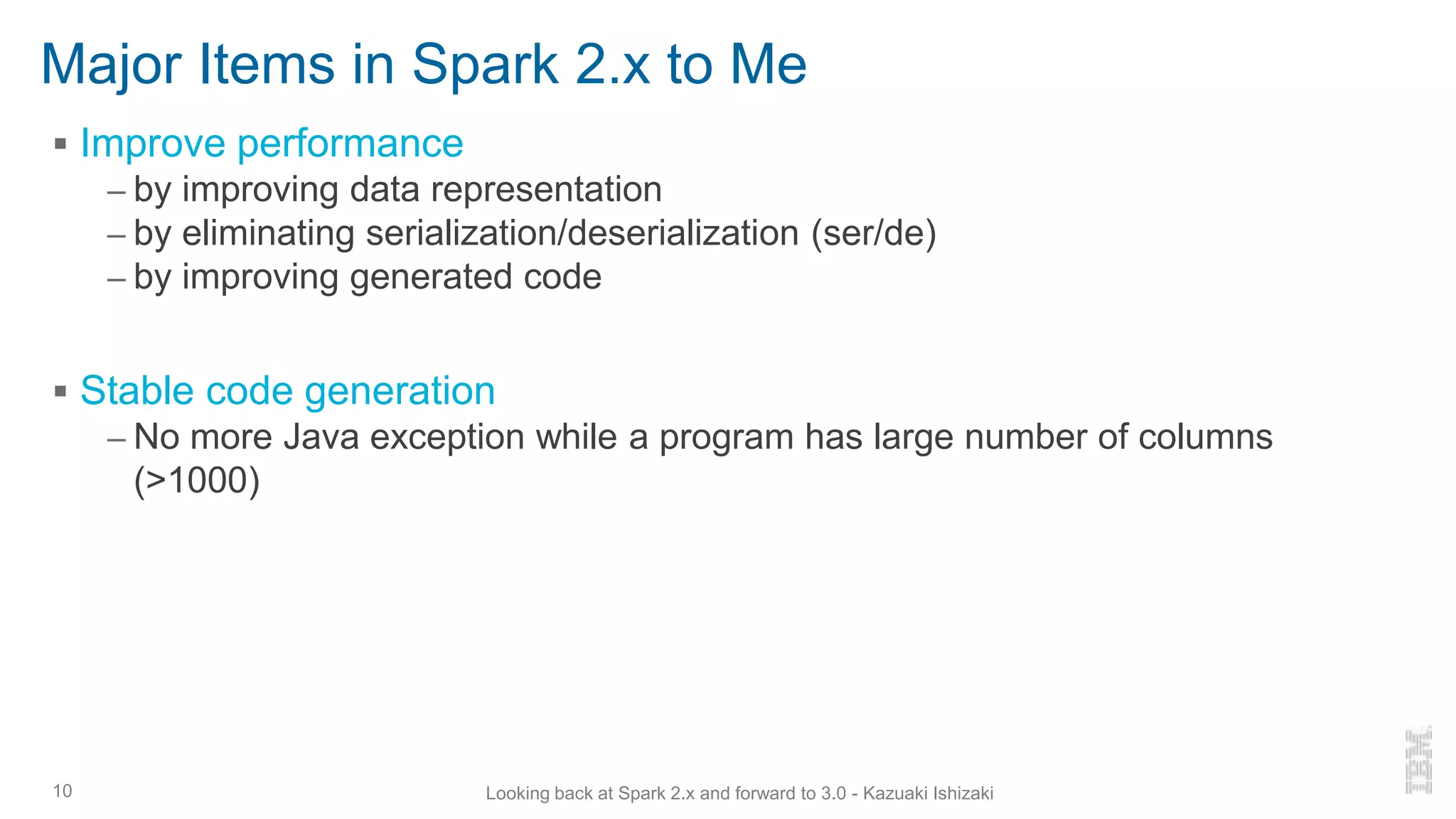

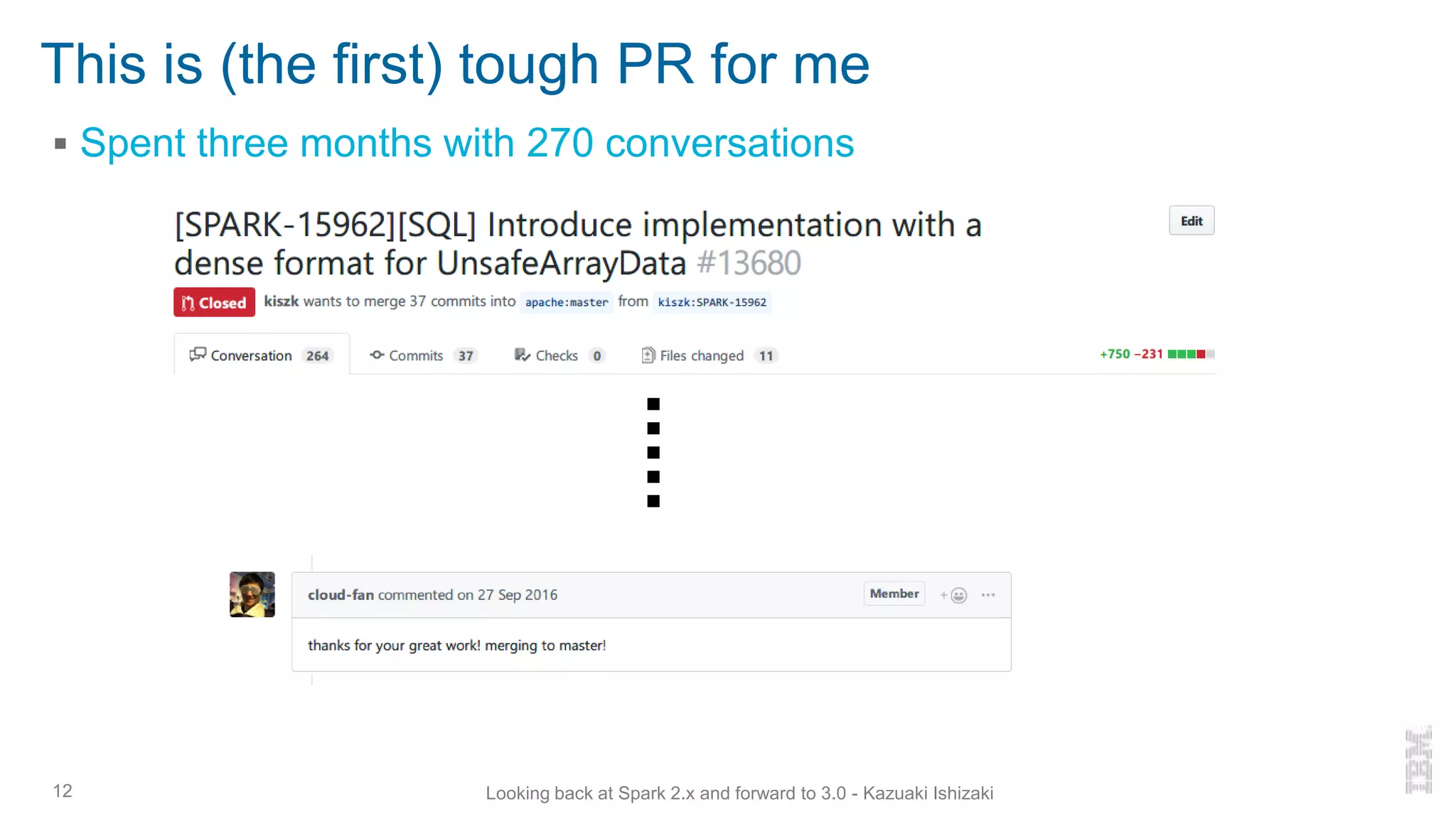

Kazuaki Ishizaki presented on improvements to Spark from versions 2.x to 3.0. Some key problems in Spark 2.x included slow performance due to excessive data conversion and element-wise copying when working with arrays. Spark 3.0 aims to address these issues by improving the internal data representation for arrays and eliminating unnecessary serialization. Ishizaki was appointed as an Apache Spark committer due to his contributions to performance optimizations through projects like Tungsten.

![Apache Spark Program is Written by a User

▪ This DataFrame program is written in Scala

6 Looking back at Spark 2.x and forward to 3.0 - Kazuaki Ishizaki

df: DataFrame[int] = (1 to 100).toDF

df.selectExpr(“value + 1”)

.selectExpr(“value + 2”)

.show](https://image.slidesharecdn.com/hadoopsourcecodereading-ishizaki-20181119-181120022514/75/Looking-back-at-Spark-2-x-and-forward-to-3-0-6-2048.jpg)

![Java code is actually executed

▪ A DataFrame/Dataset program is translated to Java program to be

actually executed

– An optimizer combines two arithmetic operations into one

– Whole-stage codegen puts multiple operations (read, selectExpr, and

projection) into one loop

7

while (itr.hasNext()) { // execute a row

// get a value from a row in DF

int value =((Row)itr.next()).getInt(0);

// compute a new value

int mapValue = value + 3;

// store a new value to a row in DF

outRow.write(0, mapValue);

append(outRow);

}

df: DataFrame[int] = …

df.selectExpr(“value + 1”)

.selectExpr(“value + 2”)

.show

Code

generation

Looking back at Spark 2.x and forward to 3.0 - Kazuaki Ishizaki

1, 2, 3, 4, …

Unsafe data (on heap)](https://image.slidesharecdn.com/hadoopsourcecodereading-ishizaki-20181119-181120022514/75/Looking-back-at-Spark-2-x-and-forward-to-3-0-7-2048.jpg)

![Array Internal Representation

▪ Before Spark 2.1, an array (UnsafeArrayData) is internally represented by

using an sparse/indirect structure

– Good for small memory consumption if an array is sparse

▪ After Spark 2.1, the array representation is dense/contiguous

– Good for performance

11 Looking back at Spark 2.x and forward to 3.0 - Kazuaki Ishizaki

len = 2 7 8

a[0] a[1]offset[0] offset[1]

len = 2 Non

Null

Non

Null 7 8

a[0] a[1]

SPARK-15962 improves this representation](https://image.slidesharecdn.com/hadoopsourcecodereading-ishizaki-20181119-181120022514/75/Looking-back-at-Spark-2-x-and-forward-to-3-0-11-2048.jpg)

![A Simple Dataset Program with Array

▪ Read an integer array in a row

▪ Create a new array from the first element

13 Looking back at Spark 2.x and forward to 3.0 - Kazuaki Ishizaki

ds: DataSet[Array[Int]] = Seq(Array(7, 8)).toDS

ds.map(a => Array(a(0)))](https://image.slidesharecdn.com/hadoopsourcecodereading-ishizaki-20181119-181120022514/75/Looking-back-at-Spark-2-x-and-forward-to-3-0-13-2048.jpg)

![Weird Generated Pseudo Code with DataSet

▪ Data conversion is too slow

– Between internal representation (Tungsten) and Java object format (Object[])

▪ Element-wise data copy is too slow

14 Looking back at Spark 2.x and forward to 3.0 - Kazuaki Ishizaki

ArrayData inArray;

while (itr.hasNext()) {

inArray = ((Row)itr.next().getArray(0);

append(outRow);

}

ds: DataSet[Array[Int]] =

Seq(Array(7, 8)).toDS

ds.map(a => Array(a(0)))

Data conversion

Element-wise data copy

Element-wise data copy

int[] mapArray = new int[1] { a[0] };

Code

generation

Data conversion

Element-wise data copy

Ser

De

Copy each element with null check

Data conversion

Data conversion Copy with Java object creation

Element-wise data copy](https://image.slidesharecdn.com/hadoopsourcecodereading-ishizaki-20181119-181120022514/75/Looking-back-at-Spark-2-x-and-forward-to-3-0-14-2048.jpg)

![Generated Source Java Code

▪ Data conversion is done by boxing or unboxing

▪ Element-wise data copy is done

by for-loop

15 Looking back at Spark 2.x and forward to 3.0 - Kazuaki Ishizaki

ds: DataSet[Array[Int]] =

Seq(Array(7, 8)).toDS

ds.map(a => Array(a(0)))

Data conversion

Code

generation

Element-wise data copy

ArrayData inArray;

while (itr.hasNext()) {

inArray = ((Row)itr.next().getArray(0);

Object[] tmp = new Object[inArray.numElements()];

for (int i = 0; i < tmp.length; i ++) {

tmp[i] = (inArray.isNullAt(i)) ?

null : inArray.getInt(i);

}

ArrayData array =

new GenericIntArrayData(tmpArray);

int[] javaArray = array.toIntArray();

int[] mapArray = (int[])map_func.apply(javaArray);

outArray = new GenericArrayData(mapArray);

for (int i = 0; i < outArray.numElements(); i++) {

if (outArray.isNullAt(i)) {

arrayWriter.setNullInt(i);

} else {

arrayWriter.write(i, outArray.getInt(i));

}

}

append(outRow);

}

Ser

De](https://image.slidesharecdn.com/hadoopsourcecodereading-ishizaki-20181119-181120022514/75/Looking-back-at-Spark-2-x-and-forward-to-3-0-15-2048.jpg)

![Too Long Actually-Generated Java Code (Spark 2.0)

▪ Too to

16 Looking back at Spark 2.x and forward to 3.0 - Kazuaki Ishizaki

Data conversion Element-wise data copy

final int[] mapelements_value = mapelements_isNull ?

null : (int[]) mapelements_value1.apply(deserializetoobject_value);

mapelements_isNull = mapelements_value == null;

final boolean serializefromobject_isNull = mapelements_isNull;

final ArrayData serializefromobject_value = serializefromobject_isNull ?

null : new GenericArrayData(mapelements_value);

serializefromobject_holder.reset();

serializefromobject_rowWriter.zeroOutNullBytes();

if (serializefromobject_isNull) {

serializefromobject_rowWriter.setNullAt(0);

} else {

final int serializefromobject_tmpCursor = serializefromobject_holder.cursor;

if (serializefromobject_value instanceof UnsafeArrayData) {

final int serializefromobject_sizeInBytes = ((UnsafeArrayData) serializefromobject_value).getSizeInBytes();

serializefromobject_holder.grow(serializefromobject_sizeInBytes);

((UnsafeArrayData) serializefromobject_value).writeToMemory(

serializefromobject_holder.buffer, serializefromobject_holder.cursor);

serializefromobject_holder.cursor += serializefromobject_sizeInBytes;

} else {

final int serializefromobject_numElements = serializefromobject_value.numElements();

serializefromobject_arrayWriter.initialize(serializefromobject_holder, serializefromobject_numElements, 8);

for (int serializefromobject_index = 0; serializefromobject_index < serializefromobject_numElements;

serializefromobject_index++) {

if (serializefromobject_value.isNullAt(serializefromobject_index)) {

serializefromobject_arrayWriter.setNullAt(serializefromobject_index);

} else {

final int serializefromobject_element = serializefromobject_value.getInt(serializefromobject_index);

serializefromobject_arrayWriter.write(serializefromobject_index, serializefromobject_element);

}

}

}

serializefromobject_rowWriter.setOffsetAndSize(0, serializefromobject_tmpCursor,

serializefromobject_holder.cursor - serializefromobject_tmpCursor);

serializefromobject_rowWriter.alignToWords(serializefromobject_holder.cursor - serializefromobject_tmpCursor);

}

serializefromobject_result.setTotalSize(serializefromobject_holder.totalSize());

append(serializefromobject_result);

if (shouldStop()) return;

}

}

protected void processNext() throws java.io.IOException {

while (inputadapter_input.hasNext()) {

InternalRow inputadapter_row = (InternalRow) inputadapter_input.next();

boolean inputadapter_isNull = inputadapter_row.isNullAt(0);

ArrayData inputadapter_value = inputadapter_isNull ?

null : (inputadapter_row.getArray(0));

boolean deserializetoobject_isNull1 = inputadapter_isNull;

ArrayData deserializetoobject_value1 = null;

if (!inputadapter_isNull) {

final int deserializetoobject_n = inputadapter_value.numElements();

final Object[] deserializetoobject_values = new Object[deserializetoobject_n];

for (int deserializetoobject_j = 0;

deserializetoobject_j < deserializetoobject_n; deserializetoobject_j ++) {

if (inputadapter_value.isNullAt(deserializetoobject_j)) {

deserializetoobject_values[deserializetoobject_j] = null;

} else {

boolean deserializetoobject_feNull = false;

int deserializetoobject_fePrim =

inputadapter_value.getInt(deserializetoobject_j);

boolean deserializetoobject_teNull = deserializetoobject_feNull;

int deserializetoobject_tePrim = -1;

if (!deserializetoobject_feNull) {

deserializetoobject_tePrim = deserializetoobject_fePrim;

}

if (deserializetoobject_teNull) {

deserializetoobject_values[deserializetoobject_j] = null;

} else {

deserializetoobject_values[deserializetoobject_j] = deserializetoobject_tePrim;

}

}

}

deserializetoobject_value1 = new GenericArrayData(deserializetoobject_values);

}

boolean deserializetoobject_isNull = deserializetoobject_isNull1;

final int[] deserializetoobject_value = deserializetoobject_isNull ?

null : (int[]) deserializetoobject_value1.toIntArray();

deserializetoobject_isNull = deserializetoobject_value == null;

Object mapelements_obj = ((Expression) references[0]).eval(null);

scala.Function1 mapelements_value1 = (scala.Function1) mapelements_obj;

boolean mapelements_isNull = false || deserializetoobject_isNull;

ds.map(a => Array(a(0))).debugCodegen](https://image.slidesharecdn.com/hadoopsourcecodereading-ishizaki-20181119-181120022514/75/Looking-back-at-Spark-2-x-and-forward-to-3-0-16-2048.jpg)

![Simple Generated Code for Array on Spark 2.2

▪ Data conversion and element-wise copy are not used

▪ Bulk copy is faster than element-wise data copy

17

ds: DataSet[Array[Int]] =

Seq(Array(7, 8)).toDS

ds.map(a => Array(a(0)))

Bulk data copy Copy whole array using memcpy()

while (itr.hasNext()) {

inArray =((Row)itr.next()).getArray(0);

int[]mapArray=(int[])map.apply(javaArray);

append(outRow);

}

Bulk data copy

int[] mapArray = new int[1] { a[0] };

Bulk data copy

Bulk data copy

SPARK-15985 and SPARK-17490 simplify Ser/De by using bulk data copy

Code

generation

Looking back at Spark 2.x and forward to 3.0 - Kazuaki Ishizaki](https://image.slidesharecdn.com/hadoopsourcecodereading-ishizaki-20181119-181120022514/75/Looking-back-at-Spark-2-x-and-forward-to-3-0-17-2048.jpg)

![Simple Generated Java Code for Array

▪ Data conversion and element-wise copy are not used

▪ Bulk copy is faster than element-wise data copy

18

ds: DataSet[Array[Int]] =

Seq(Array(7, 8)).toDS

ds.map(a => Array(a(0)))

Bulk data copy Copy whole array using memcpy()

SPARK-15985 and SPARK-17490 simplify Ser/De by using bulk data copy

while (itr.hasNext()) {

inArray =((Row)itr.next()).getArray(0);

int[] javaArray = inArray.toIntArray();

int[] mapArray = (int[])mapFunc.apply(javaArray);

outArray = UnsafeArrayData

.fromPrimitiveArray(mapArray);

outArray.writeToMemory(outRow);

append(outRow);

}

Code

generation

Looking back at Spark 2.x and forward to 3.0 - Kazuaki Ishizaki](https://image.slidesharecdn.com/hadoopsourcecodereading-ishizaki-20181119-181120022514/75/Looking-back-at-Spark-2-x-and-forward-to-3-0-18-2048.jpg)

![Dataset for Array Is Not Extremely Slow

▪ Good news: 4.5x faster than Spark 2.0

▪ Bad news: still 12x slower than DataFrame

19

0 10 20 30 40 50 60

Relative execution time over DataFrame

DataFrame Dataset

ds = Seq(Array(…), Array(…), …)

.toDS.cache

ds.map(a => Array(a(0)))

4.5x

df = Seq(Array(…), Array(…), …)

.toDF(”a”).cache

df.selectExpr(“Array(a[0])”)

12x

Shorter is better

Looking back at Spark 2.x and forward to 3.0 - Kazuaki Ishizaki](https://image.slidesharecdn.com/hadoopsourcecodereading-ishizaki-20181119-181120022514/75/Looking-back-at-Spark-2-x-and-forward-to-3-0-19-2048.jpg)

![Spark 2.4 Supports Array Built-in Functions

▪ These built-in functions operate on array elements without writing a loop

– Single array input: array_min, array_max, array_position, ...

– Two-array input: array_intersect, array_union, array_except,

▪ Before Spark 2.4, users have to write a function using Dataset or UDF

20

SPARK-23899 is an umbrella entry

ds: DataSet[Array[Int]] = Seq(Array(7, 8)).toDS

ds.map(a => a.mix)

df: DataSet[Array[Int]] = Seq(Array(7, 8)).toDF(“a”)

df.array_min(“a”)

@ueshin co-wrote an blog entry at

https://databricks.com/blog/2018/11/16/introducing-new-built-in-functions-and-higher-order-functions-for-complex-data-types-in-apache-spark.html

Looking back at Spark 2.x and forward to 3.0 - Kazuaki Ishizaki](https://image.slidesharecdn.com/hadoopsourcecodereading-ishizaki-20181119-181120022514/75/Looking-back-at-Spark-2-x-and-forward-to-3-0-20-2048.jpg)