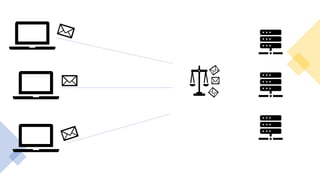

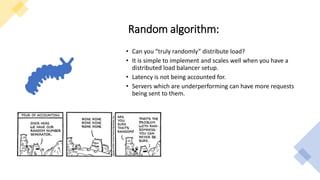

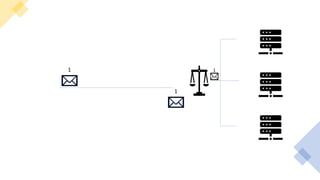

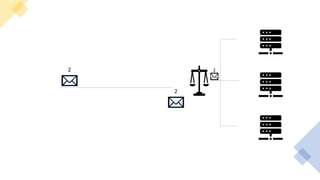

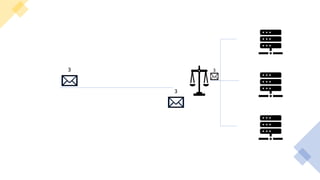

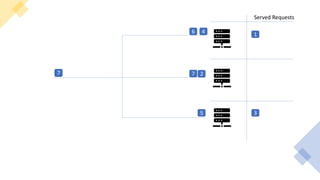

The document discusses several load balancing algorithms: random, round robin, weighted round robin, least connections, and least connections with randomness. Each involves trade-offs in latency, server performance, and system scalability, and these trade-offs matter most in distributed environments. The author also notes the challenges of maintaining persistent connections and points to additional materials for further reading on load balancing strategies.
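The three static strategies named above (random, round robin, weighted round robin) can be sketched as small picker objects. This is a minimal illustration, not the author's implementation: the class names and server labels are hypothetical, and weighted round robin is shown using the "smooth" variant, which is only one of several ways to apply weights. It interleaves heavier servers rather than sending them consecutive bursts.

```python
from __future__ import annotations

import itertools
import random
from typing import Sequence


class RandomBalancer:
    """Hypothetical picker: choose a backend uniformly at random per request."""

    def __init__(self, servers: Sequence[str], seed: int | None = None) -> None:
        self.servers = list(servers)
        self.rng = random.Random(seed)  # seedable for reproducible demos

    def pick(self) -> str:
        return self.rng.choice(self.servers)


class RoundRobinBalancer:
    """Hypothetical picker: cycle through backends in a fixed order."""

    def __init__(self, servers: Sequence[str]) -> None:
        self._cycle = itertools.cycle(list(servers))

    def pick(self) -> str:
        return next(self._cycle)


class WeightedRoundRobinBalancer:
    """Smooth weighted round robin (one assumed variant of weighting).

    Over every sum(weights) picks, a server with weight w is chosen exactly w times.
    """

    def __init__(self, weights: dict[str, int]) -> None:
        self.weights = dict(weights)
        self.current = {s: 0 for s in weights}
        self.total = sum(weights.values())

    def pick(self) -> str:
        # Every server accumulates its weight; the leader is picked and "pays" the total.
        for server, weight in self.weights.items():
            self.current[server] += weight
        best = max(self.current, key=self.current.get)
        self.current[best] -= self.total
        return best


if __name__ == "__main__":
    wrr = WeightedRoundRobinBalancer({"a": 5, "b": 1, "c": 1})
    print([wrr.pick() for _ in range(7)])
```

With weights 5, 1, 1 the usage example prints `['a', 'a', 'b', 'a', 'c', 'a', 'a']`: server `a` receives five of every seven requests, but its picks are spread out instead of arriving as one block of five. None of these pickers look at server state, which keeps them cheap but means a slow or overloaded backend keeps receiving its full share.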
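The two dynamic strategies, least connections and least connections with randomness, need the balancer to track how many connections each backend currently has open. The sketch below is a hedged illustration under assumptions. The class names are hypothetical, and the source does not specify which randomized variant it means, so "least connections with randomness" is assumed here to be the common "power of two choices" scheme: sample two backends at random and send the request to the less loaded one.

```python
from __future__ import annotations

import random
from typing import Sequence


class LeastConnectionsBalancer:
    """Hypothetical picker: route to the backend with the fewest active connections."""

    def __init__(self, servers: Sequence[str]) -> None:
        self.active = {s: 0 for s in servers}

    def acquire(self) -> str:
        # Full scan of every backend; ties go to the first server in insertion order.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Must be called when the connection closes, or the counts drift.
        self.active[server] -= 1


class TwoChoicesBalancer(LeastConnectionsBalancer):
    """Assumed variant of 'least connections with randomness': power of two choices.

    Compares only two randomly sampled backends instead of scanning all of them.
    """

    def __init__(self, servers: Sequence[str], seed: int | None = None) -> None:
        super().__init__(servers)
        self.rng = random.Random(seed)

    def acquire(self) -> str:
        candidates = list(self.active)
        # Sample two distinct backends (or one, if only one exists).
        sampled = self.rng.sample(candidates, min(2, len(candidates)))
        server = min(sampled, key=self.active.get)
        self.active[server] += 1
        return server


if __name__ == "__main__":
    lc = LeastConnectionsBalancer(["a", "b", "c"])
    print([lc.acquire() for _ in range(3)])
    lc.release("b")
    print(lc.acquire())
```

The usage example prints `['a', 'b', 'c']` and then `b`, because releasing a connection on `b` makes it the least loaded backend again. The `release` hook shows why connection tracking is the hard part. Counts are only accurate if every close is reported, and with long-lived persistent connections, a raw connection count may not reflect how busy a backend really is. In a distributed setup with several balancers, each one sees only its own counts. The randomized variant is one way to avoid every balancer stampeding toward the same "least loaded" server at once.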