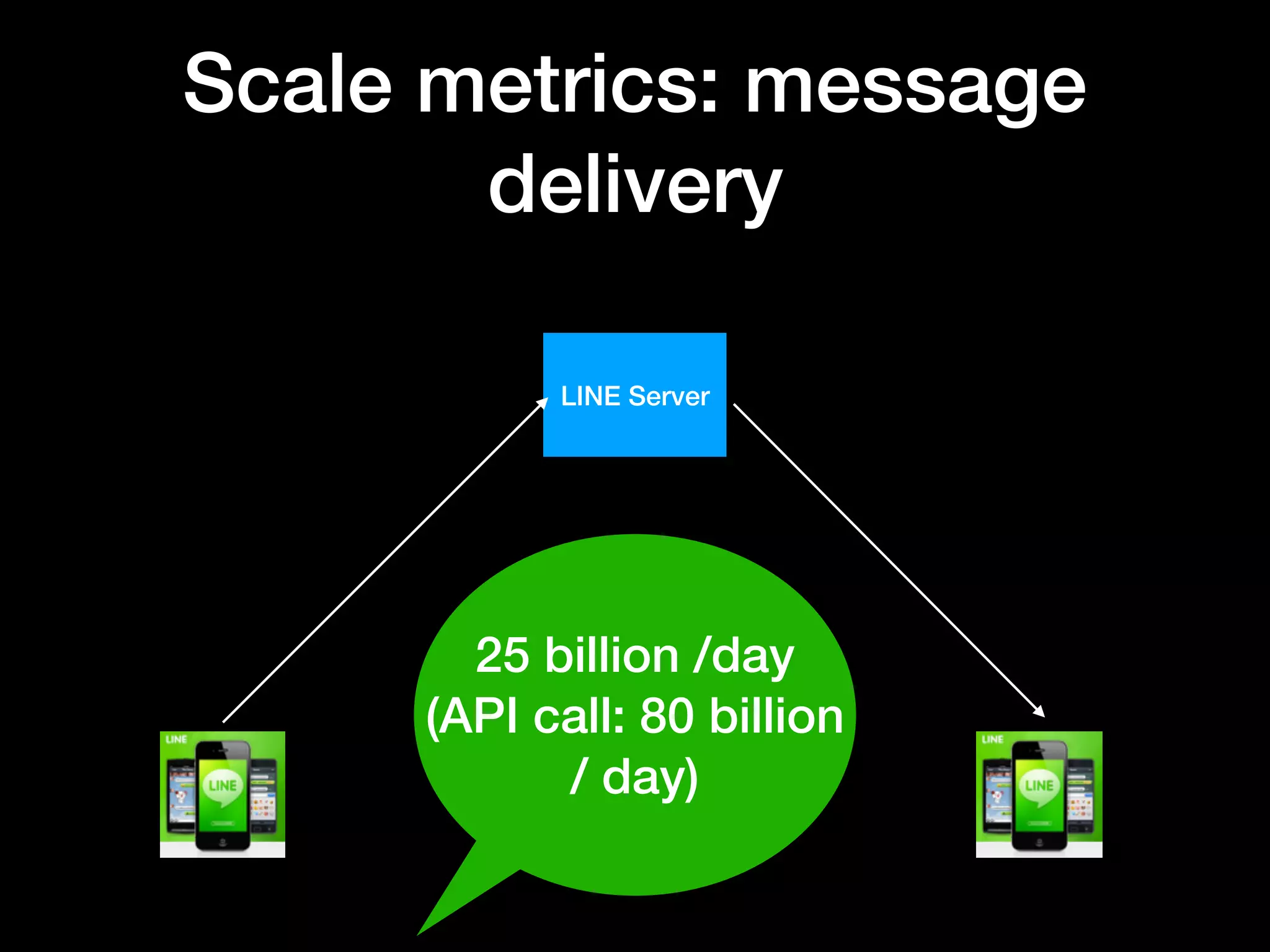

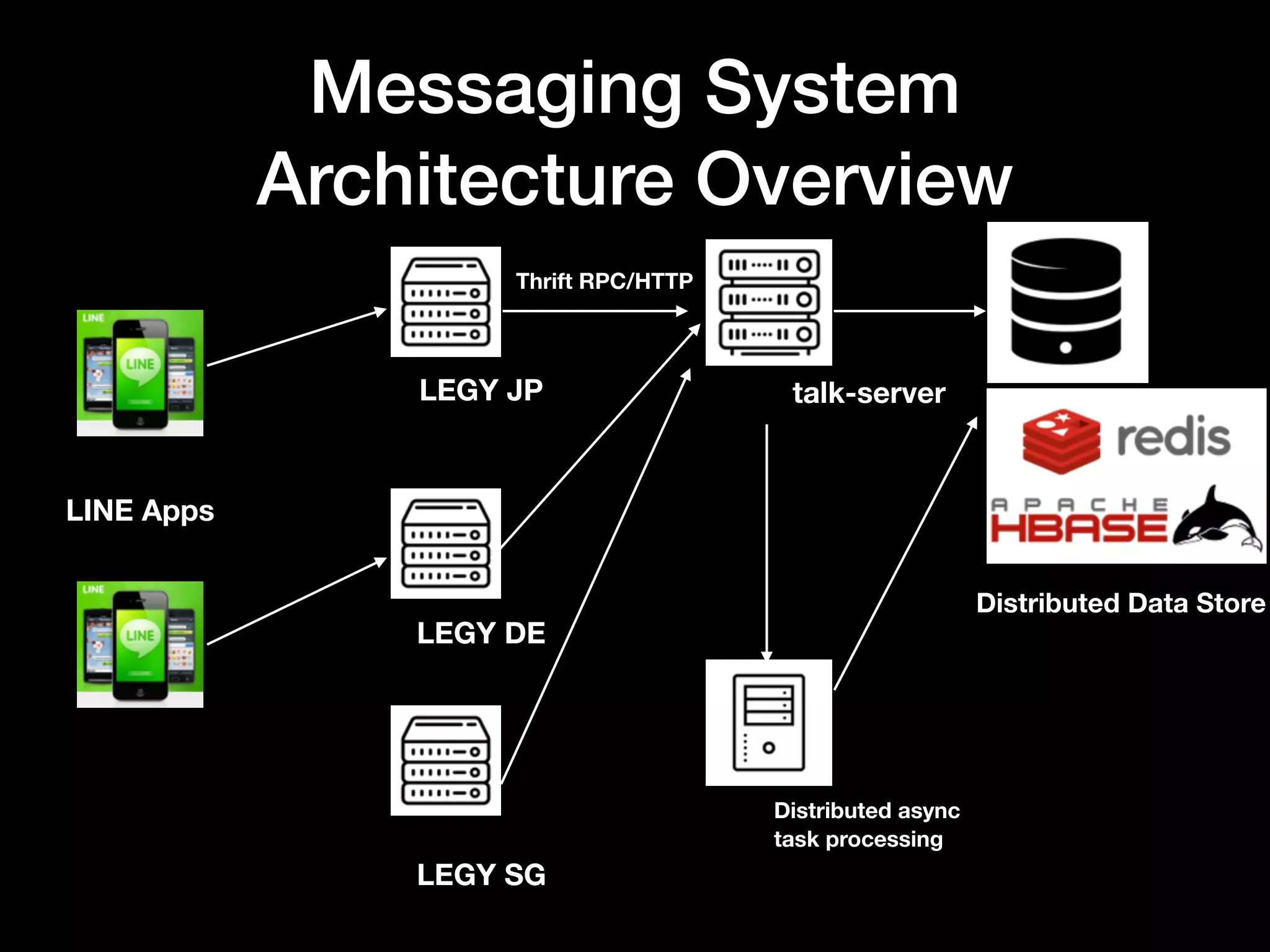

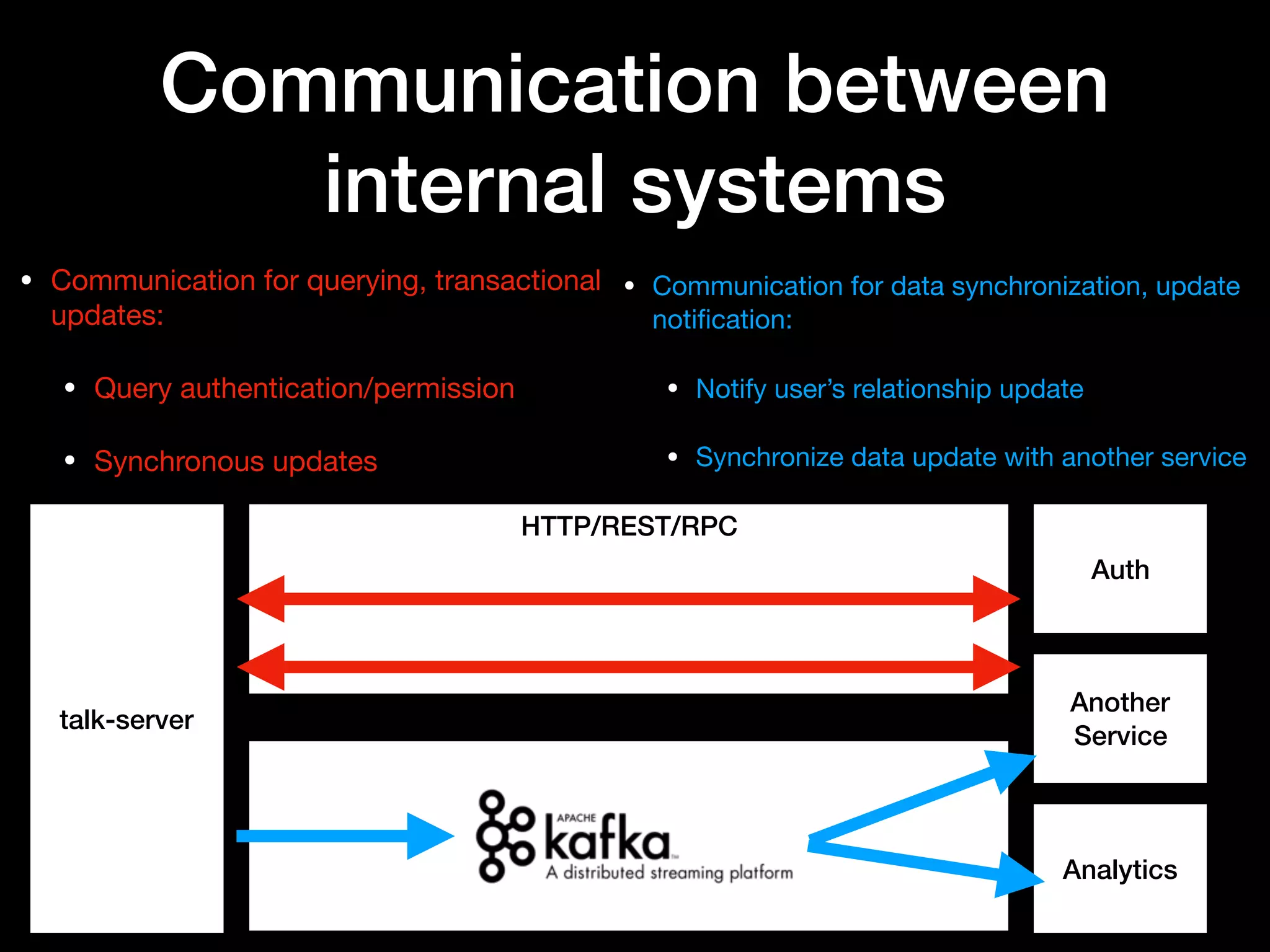

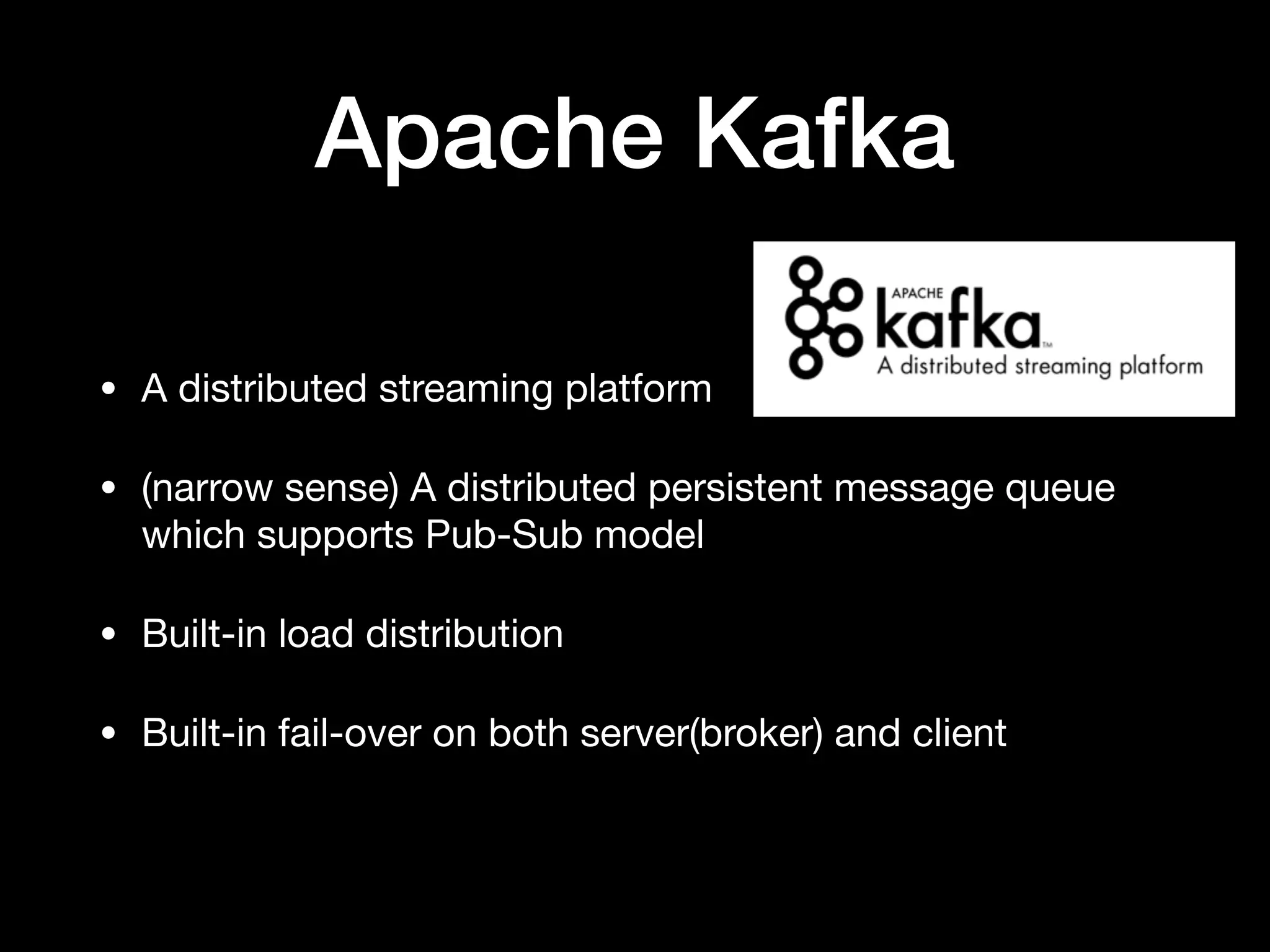

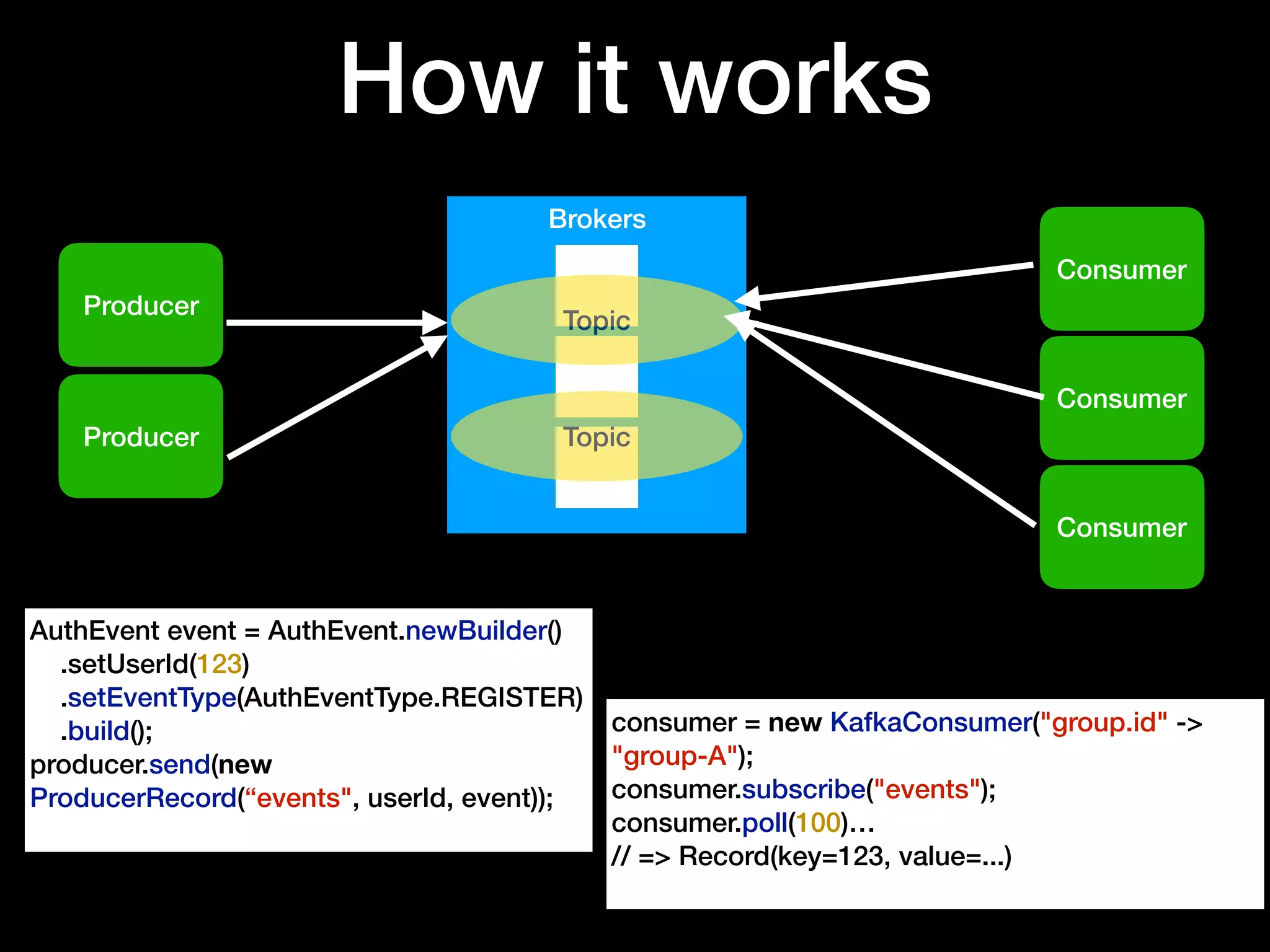

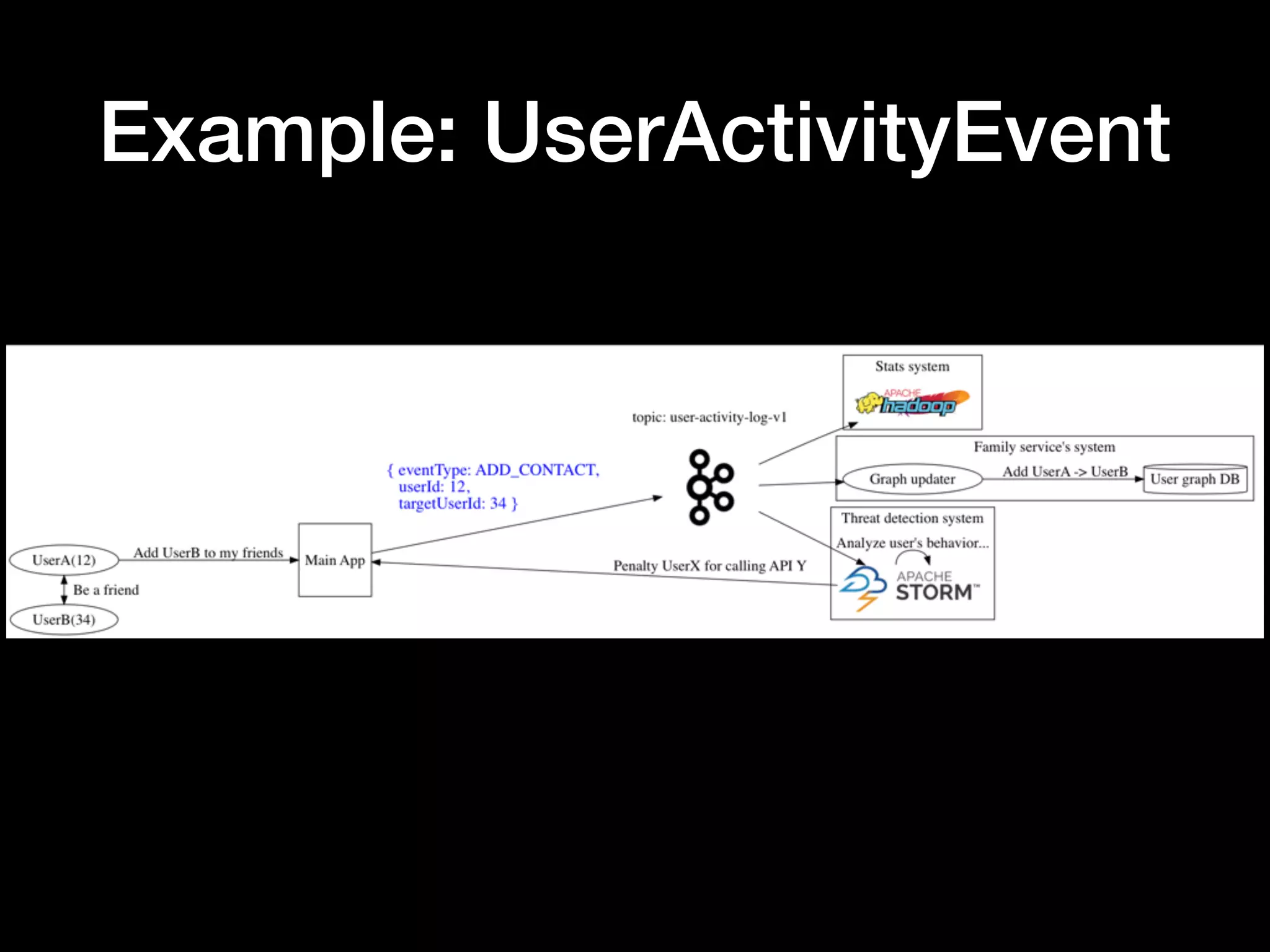

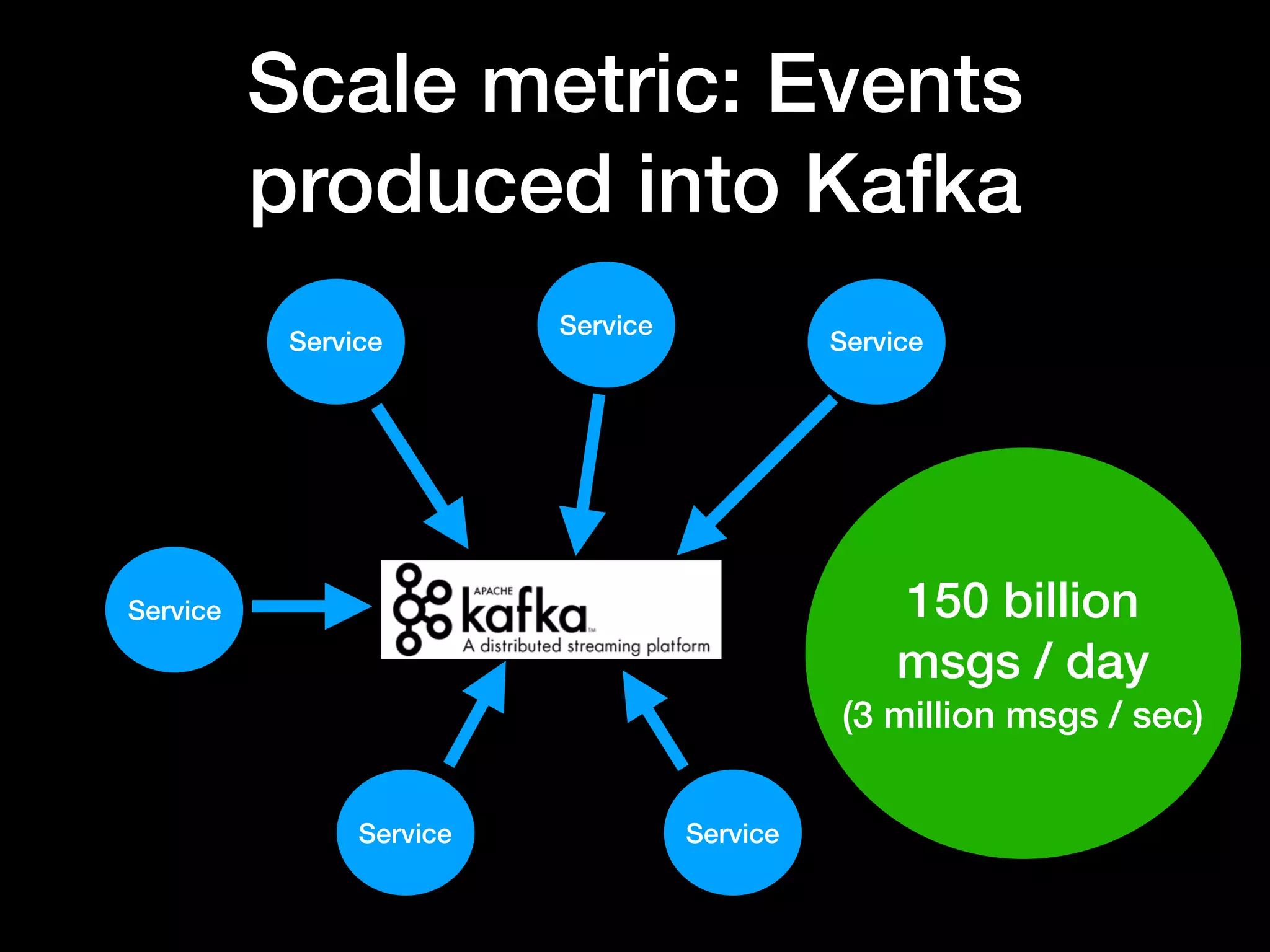

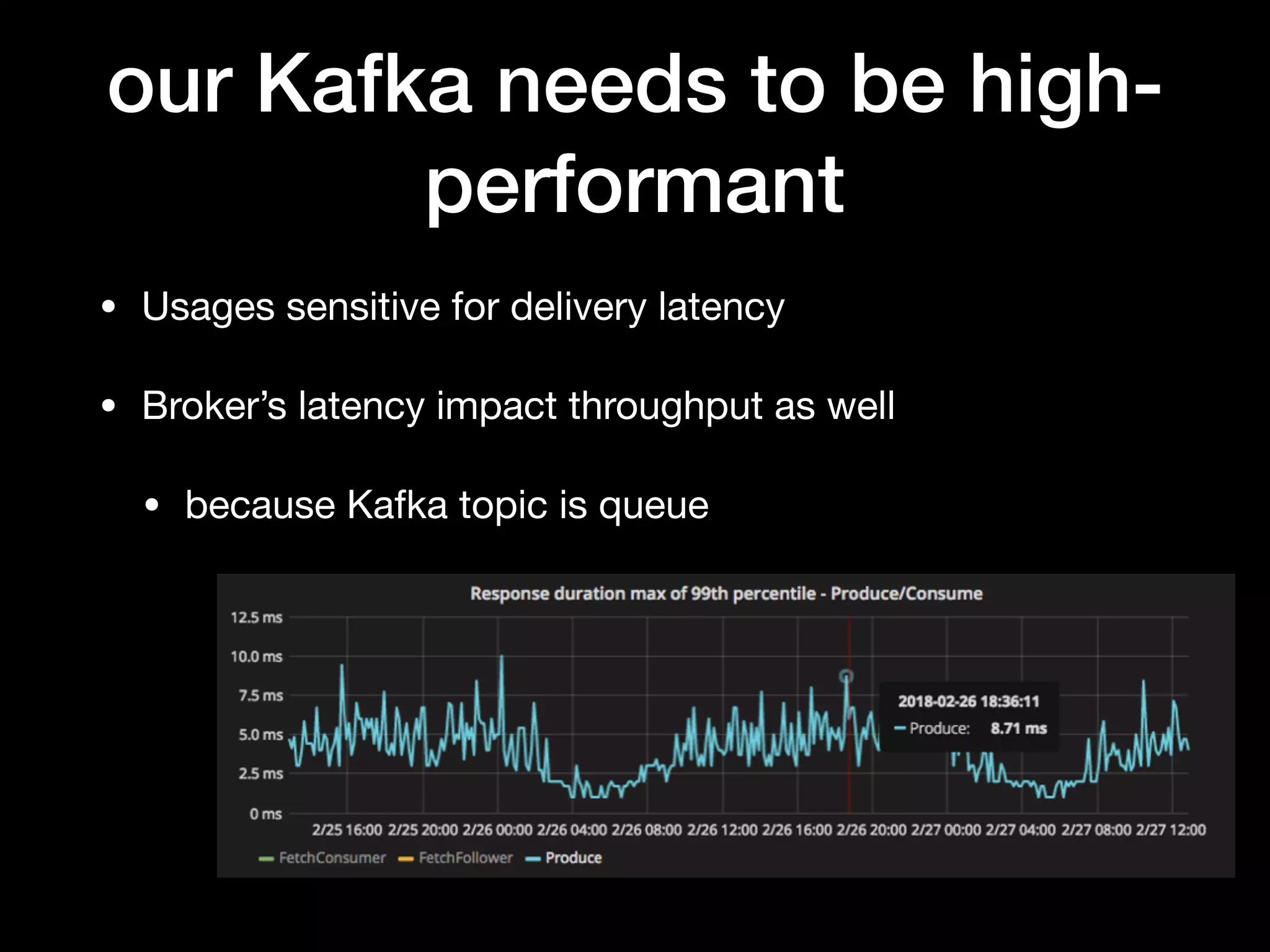

This document describes how LINE uses Apache Kafka to build a company-wide data pipeline that handles 150 billion messages per day. LINE runs Kafka both as a distributed streaming platform and as a message queue that reliably carries events between internal systems. The author covers LINE's architecture, scale figures such as 40PB of accumulated data, and engineering challenges such as reducing latency through performance contributions to Kafka. Operating at this scale requires a focus on scalability and reliability, building on open source technologies like Kafka, and continuous performance improvement.

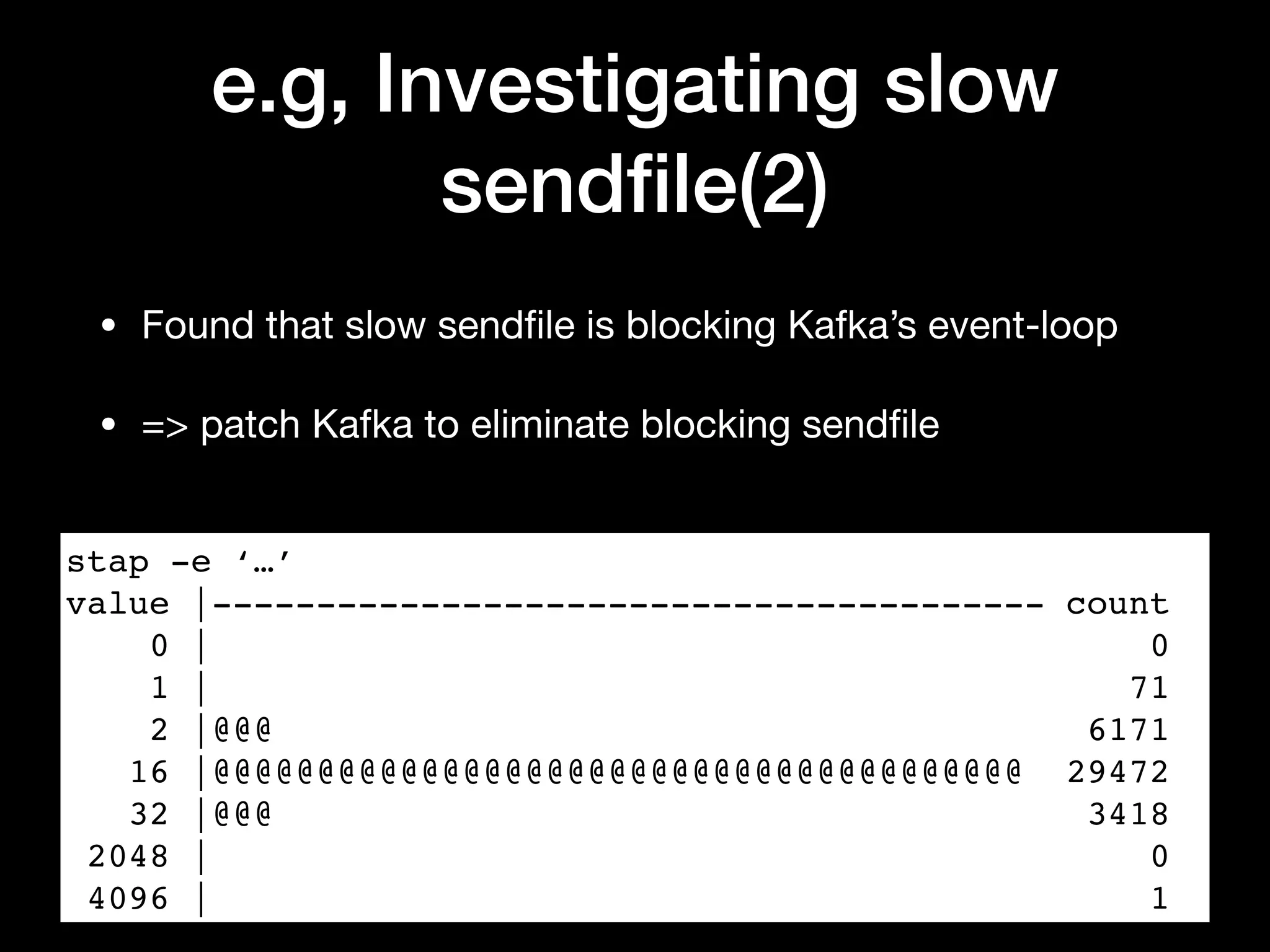

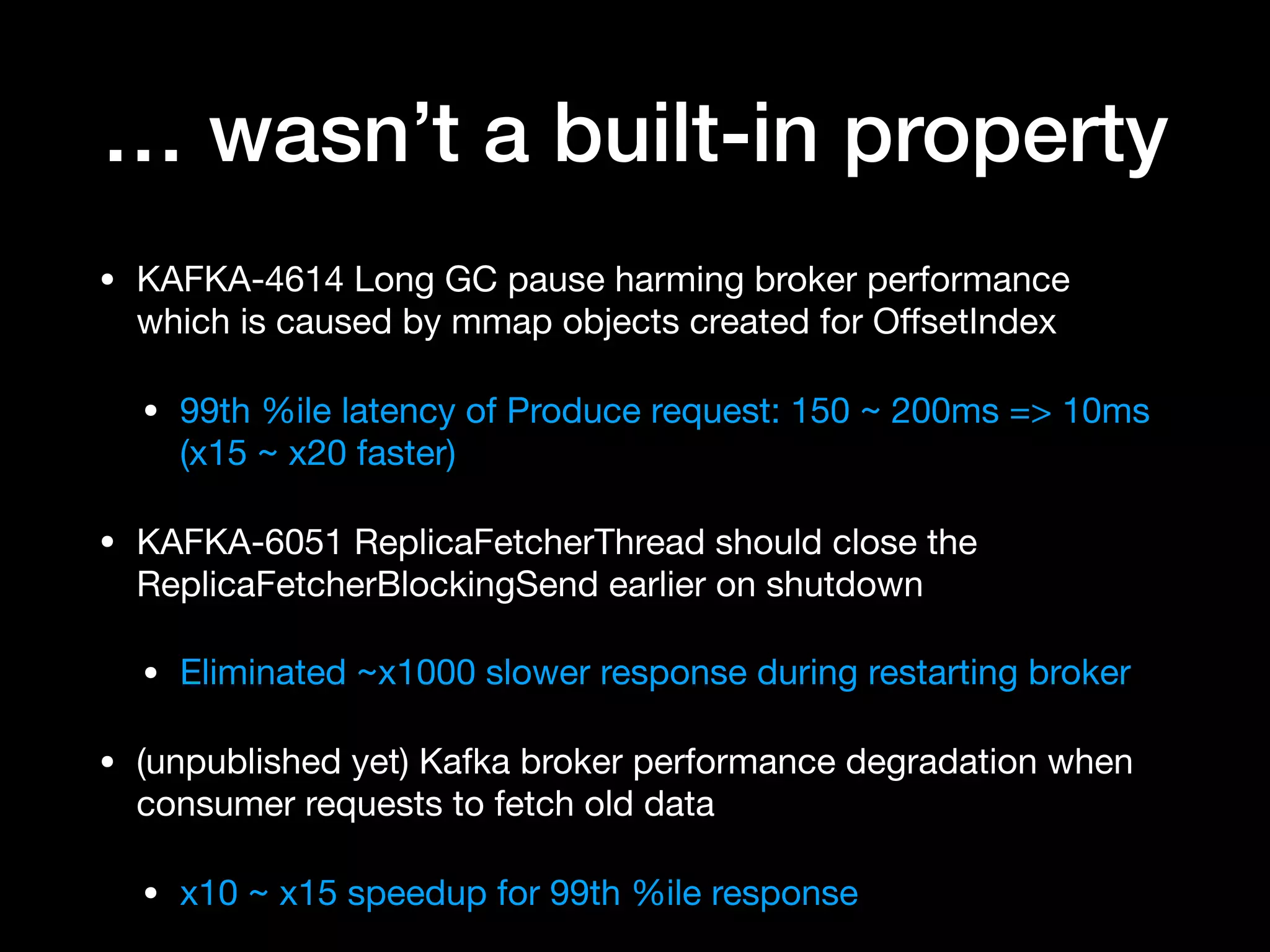

![Pub-Sub slide: Brokers host Topics; Consumer Group A and Consumer Group B each consume the same records (A, B, C…)](https://image.slidesharecdn.com/vn-opening-day-180331041135/75/Building-a-company-wide-data-pipeline-on-Apache-Kafka-engineering-for-150-billion-messages-per-day-17-2048.jpg)

Pub-Sub

• Multiple consumer “groups” can independently consume a single topic
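The idea that each consumer group reads a topic independently can be illustrated with a toy in-memory model. This is a minimal sketch, not the Kafka client API: `ToyTopic`, `publish`, and `poll` are hypothetical names invented for illustration, and the model assumes a single partition with one read position per group.

```python
from collections import defaultdict


class ToyTopic:
    """In-memory stand-in for a single-partition topic (hypothetical, not Kafka's API)."""

    def __init__(self):
        self.records = []                # append-only log of records
        self.offsets = defaultdict(int)  # group name -> next offset to read

    def publish(self, record):
        self.records.append(record)

    def poll(self, group, max_records=10):
        # Each group advances only its own offset, so groups never
        # consume records away from one another.
        start = self.offsets[group]
        batch = self.records[start:start + max_records]
        self.offsets[group] = start + len(batch)
        return batch


topic = ToyTopic()
for record in ["A", "B", "C"]:
    topic.publish(record)

group_a = topic.poll("GroupA")                       # reads all three records
group_b_first = topic.poll("GroupB", max_records=2)  # GroupB starts from the beginning too
group_b_rest = topic.poll("GroupB")                  # GroupB continues from its own offset
```

Both groups see the full sequence of records, each at its own pace, because the log is retained and only the per-group offset moves.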

![Slide: investigating slow sendfile(2) with SystemTap](https://image.slidesharecdn.com/vn-opening-day-180331041135/75/Building-a-company-wide-data-pipeline-on-Apache-Kafka-engineering-for-150-billion-messages-per-day-23-2048.jpg)

e.g., Investigating slow sendfile(2)

• SystemTap: A kernel dynamic tracing tool

• Inject script to probe in-kernel behavior

    stap -e '
    ...
    # Record the entry time of each sendfile call, keyed by thread ID.
    probe syscall.sendfile {
      d[tid()] = gettimeofday_us()
    }
    # On return, add the elapsed microseconds to the aggregate.
    probe syscall.sendfile.return {
      if (d[tid()]) {
        st <<< gettimeofday_us() - d[tid()]
        delete d[tid()]
      }
    }
    # When the script ends, print a logarithmic latency histogram.
    probe end {
      print(@hist_log(st))
    }
    '
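A logarithmic histogram is useful here because latencies span several orders of magnitude, and power-of-two buckets keep rare slow calls visible next to the many fast ones. The following is a rough sketch of that bucketing idea only. The function names and sample values are made up for illustration, and the exact bucket boundaries and output format of SystemTap's `@hist_log` may differ.

```python
from collections import Counter


def log2_bucket(value_us):
    """Return the largest power of two that is <= value_us (0 for non-positive values)."""
    if value_us <= 0:
        return 0
    return 1 << (value_us.bit_length() - 1)


def log2_histogram(samples_us):
    """Count samples per power-of-two bucket, ordered by bucket."""
    counts = Counter(log2_bucket(v) for v in samples_us)
    return dict(sorted(counts.items()))


# Made-up latency samples in microseconds, for illustration only.
samples = [3, 5, 6, 120, 130, 9000]
hist = log2_histogram(samples)

for bucket, count in hist.items():
    print(f"{bucket:>6} us | {'@' * count} {count}")
```

With buckets like these, a single call landing in the 8192 us bucket stands out immediately, whereas an average over all samples would blur it.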